Nick Cheney

Activation Function Design Sustains Plasticity in Continual Learning

Sep 26, 2025

Abstract:In independent, identically distributed (i.i.d.) training regimes, activation functions have been benchmarked extensively, and their differences often shrink once model size and optimization are tuned. In continual learning, however, the picture is different: beyond catastrophic forgetting, models can progressively lose the ability to adapt (referred to as loss of plasticity) and the role of the non-linearity in this failure mode remains underexplored. We show that activation choice is a primary, architecture-agnostic lever for mitigating plasticity loss. Building on a property-level analysis of negative-branch shape and saturation behavior, we introduce two drop-in nonlinearities (Smooth-Leaky and Randomized Smooth-Leaky) and evaluate them in two complementary settings: (i) supervised class-incremental benchmarks and (ii) reinforcement learning with non-stationary MuJoCo environments designed to induce controlled distribution and dynamics shifts. We also provide a simple stress protocol and diagnostics that link the shape of the activation to the adaptation under change. The takeaway is straightforward: thoughtful activation design offers a lightweight, domain-general way to sustain plasticity in continual learning without extra capacity or task-specific tuning.

Evolutionary Brain-Body Co-Optimization Consistently Fails to Select for Morphological Potential

Aug 24, 2025

Abstract:Brain-body co-optimization remains a challenging problem, despite increasing interest from the community in recent years. To understand and overcome the challenges, we propose exhaustively mapping a morphology-fitness landscape to study it. To this end, we train controllers for each feasible morphology in a design space of 1,305,840 distinct morphologies, constrained by a computational budget. First, we show that this design space constitutes a good model for studying the brain-body co-optimization problem, and our attempt to exhaustively map it roughly captures the landscape. We then proceed to analyze how evolutionary brain-body co-optimization algorithms work in this design space. The complete knowledge of the morphology-fitness landscape facilitates a better understanding of the results of evolutionary brain-body co-optimization algorithms and how they unfold over evolutionary time in the morphology space. This investigation shows that the tested algorithms cannot consistently find near-optimal solutions. The search, at times, gets stuck on morphologies that are sometimes one mutation away from better morphologies, and the algorithms cannot efficiently track the fitness gradient in the morphology-fitness landscape. We provide evidence that the tested algorithms regularly undervalue the fitness of individuals with newly mutated bodies and, as a result, eliminate promising morphologies throughout evolution. Our work provides the most concrete demonstration of the challenges of evolutionary brain-body co-optimization. Our findings ground the trends in the literature and provide valuable insights for future work.

Morphological Cognition: Classifying MNIST Digits Through Morphological Computation Alone

Aug 24, 2025

Abstract:With the rise of modern deep learning, neural networks have become an essential part of virtually every artificial intelligence system, making it difficult even to imagine different models for intelligent behavior. In contrast, nature provides us with many different mechanisms for intelligent behavior, most of which we have yet to replicate. One such underinvestigated aspect of intelligence is embodiment and the role it plays in intelligent behavior. In this work, we focus on how the simple and fixed behavior of constituent parts of a simulated physical body can result in an emergent behavior that can be classified as cognitive by an outside observer. Specifically, we show how simulated voxels with fixed behaviors can be combined to create a robot such that, when presented with an image of an MNIST digit zero, it moves towards the left; and when it is presented with an image of an MNIST digit one, it moves towards the right. Such robots possess what we refer to as "morphological cognition" -- the ability to perform cognitive behavior as a result of morphological processes. To the best of our knowledge, this is the first demonstration of a high-level mental faculty such as image classification performed by a robot without any neural circuitry. We hope that this work serves as a proof-of-concept and fosters further research into different models of intelligence.

How Weight Resampling and Optimizers Shape the Dynamics of Continual Learning and Forgetting in Neural Networks

Jul 02, 2025

Abstract:Recent work in continual learning has highlighted the beneficial effect of resampling weights in the last layer of a neural network ("zapping"). Although empirical results demonstrate the effectiveness of this approach, the underlying mechanisms that drive these improvements remain unclear. In this work, we investigate in detail the patterns of learning and forgetting that take place inside a convolutional neural network when trained in challenging settings such as continual learning and few-shot transfer learning, with handwritten characters and natural images. Our experiments show that models that have undergone zapping during training more quickly recover from the shock of transferring to a new domain. Furthermore, to better observe the effect of continual learning in a multi-task setting, we measure how each individual task is affected. This shows that not only zapping but also the choice of optimizer can deeply affect the dynamics of learning and forgetting, causing complex patterns of synergy/interference between tasks to emerge when the model learns sequentially at transfer time.

Controller Distillation Reduces Fragile Brain-Body Co-Adaptation and Enables Migrations in MAP-Elites

Apr 09, 2025

Abstract:Brain-body co-optimization suffers from fragile co-adaptation where brains become over-specialized for particular bodies, hindering their ability to transfer well to others. Evolutionary algorithms tend to discard such low-performing solutions, eliminating promising morphologies. Previous work considered applying MAP-Elites, where niche descriptors are based on morphological features, to promote better search over morphology space. In this work, we show that this approach still suffers from fragile co-adaptation, in which a core mechanism of MAP-Elites, creating stepping stones through solutions that migrate from one niche to another, is disrupted. We suggest that this disruption occurs because the body mutations that move an offspring to a new morphological niche break the robots' fragile brain-body co-adaptation and thus significantly decrease the performance of those potential solutions -- reducing their likelihood of outcompeting an existing elite in that new niche. We utilize a technique we call Pollination, which periodically replaces the controllers of certain solutions with a distilled controller with better generalization across morphologies to reduce fragile brain-body co-adaptation and thus promote MAP-Elites migrations. Pollination increases the success of body mutations and the number of migrations, resulting in better quality-diversity metrics. We believe we develop important insights that could apply to other domains where MAP-Elites is used.
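To make the notion of a MAP-Elites migration concrete, the following is a minimal sketch of a generic MAP-Elites loop, not the authors' implementation. It assumes a toy problem in which a "body" is a single float and the niche is its decile; the helper names (`init`, `mutate`, `descriptor`, `fitness`) are hypothetical stand-ins, and Pollination itself is not modelled. A migration is counted here as an accepted child whose niche differs from its parent's, which is one plausible reading of the abstract.

```python
import random

def map_elites(init, mutate, descriptor, fitness, iterations, rng):
    """Minimal MAP-Elites loop that also counts migrations between niches."""
    archive = {}      # niche -> (solution, fitness)
    migrations = 0    # accepted children whose niche differs from the parent's
    for _ in range(iterations):
        if archive:
            parent_niche = rng.choice(sorted(archive))
            child = mutate(archive[parent_niche][0], rng)
        else:
            parent_niche = None
            child = init(rng)
        niche, fit = descriptor(child), fitness(child)
        # A child enters the archive only if its niche is empty
        # or it outcompetes the elite already stored there.
        if niche not in archive or fit > archive[niche][1]:
            if parent_niche is not None and niche != parent_niche:
                migrations += 1
            archive[niche] = (child, fit)
    return archive, migrations

# Toy stand-ins (hypothetical): a "body" is a float in [0, 1].
def init(rng):
    return rng.random()

def mutate(x, rng):
    return min(1.0, max(0.0, x + rng.gauss(0.0, 0.1)))

def descriptor(x):
    return min(9, int(x * 10))   # ten niches, one per decile

def fitness(x):
    return -(x - 0.5) ** 2       # best solutions sit near 0.5

archive, migrations = map_elites(init, mutate, descriptor, fitness, 500, random.Random(0))
print(len(archive), "niches filled;", migrations, "migrations")
```

In this toy setting fitness depends only on the body, so a mutated child is never penalized by a mismatched controller; the abstract's point is that in real brain-body co-optimization the acceptance test above tends to reject such children.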

No-brainer: Morphological Computation driven Adaptive Behavior in Soft Robots

Jul 23, 2024

Abstract:It is prevalent in contemporary AI and robotics to separately postulate a brain modeled by neural networks and employ it to learn intelligent and adaptive behavior. While this method has worked very well for many types of tasks, it isn't the only type of intelligence that exists in nature. In this work, we study the ways in which intelligent behavior can be created without a separate and explicit brain for robot control, but rather solely as a result of the computation occurring within the physical body of a robot. Specifically, we show that adaptive and complex behavior can be created in voxel-based virtual soft robots by using simple reactive materials that actively change the shape of the robot, and thus its behavior, under different environmental cues. We demonstrate a proof of concept for the idea of closed-loop morphological computation, and show that in our implementation, it enables behavior mimicking logic gates, enabling us to demonstrate how such behaviors may be combined to build up more complex collective behaviors.

Towards Multi-Morphology Controllers with Diversity and Knowledge Distillation

Apr 22, 2024

Abstract:Finding controllers that perform well across multiple morphologies is an important milestone for large-scale robotics, in line with recent advances via foundation models in other areas of machine learning. However, the challenges of learning a single controller to control multiple morphologies make the 'one robot one task' paradigm dominant in the field. To alleviate these challenges, we present a pipeline that: (1) leverages Quality Diversity algorithms like MAP-Elites to create a dataset of many single-task/single-morphology teacher controllers, then (2) distills those diverse controllers into a single multi-morphology controller that performs well across many different body plans by mimicking the sensory-action patterns of the teacher controllers via supervised learning. The distilled controller scales well with the number of teachers/morphologies and shows emergent properties. It generalizes to unseen morphologies in a zero-shot manner, providing robustness to morphological perturbations and instant damage recovery. Lastly, the distilled controller is also independent of the teacher controllers -- we can distill the teacher's knowledge into any controller model, making our approach synergistic with architectural improvements and existing training algorithms for teacher controllers.

Investigating Premature Convergence in Co-optimization of Morphology and Control in Evolved Virtual Soft Robots

Feb 14, 2024

Abstract:Evolving virtual creatures is a field with a rich history and recently it has been getting more attention, especially in the soft robotics domain. The compliance of soft materials endows soft robots with complex behavior, but it also makes their design process unintuitive and in need of automated design. Despite the great interest, evolved virtual soft robots lack complexity, and co-optimization of morphology and control remains a challenging problem. Prior work identifies and investigates a major issue with the co-optimization process -- fragile co-adaptation of brain and body resulting in premature convergence of morphology. In this work, we expand the investigation of this phenomenon by comparing learnable controllers with proprioceptive observations and fixed controllers without any observations, where, in the latter case, only the morphology is optimized. Our experiments in two morphology spaces and two environments that vary in complexity show concrete examples of high-performing regions in the morphology space that cannot be discovered during the co-optimization of morphology and control, yet exist and are easily findable when optimizing morphologies alone. Thus this work clearly demonstrates and characterizes the challenges of optimizing morphology during co-optimization. Based on these results, we propose a new body-centric framework to think about the co-optimization problem which helps us understand the issue from a search perspective. We hope the insights we share with this work attract more attention to the problem and help us to enable efficient brain-body co-optimization.

Reset It and Forget It: Relearning Last-Layer Weights Improves Continual and Transfer Learning

Oct 12, 2023

Abstract:This work identifies a simple pre-training mechanism that leads to representations exhibiting better continual and transfer learning. This mechanism -- the repeated resetting of weights in the last layer, which we nickname "zapping" -- was originally designed for a meta-continual-learning procedure, yet we show it is surprisingly applicable in many settings beyond both meta-learning and continual learning. In our experiments, we wish to transfer a pre-trained image classifier to a new set of classes, in a few shots. We show that our zapping procedure results in improved transfer accuracy and/or more rapid adaptation in both standard fine-tuning and continual learning settings, while being simple to implement and computationally efficient. In many cases, we achieve performance on par with state of the art meta-learning without needing the expensive higher-order gradients, by using a combination of zapping and sequential learning. An intuitive explanation for the effectiveness of this zapping procedure is that representations trained with repeated zapping learn features that are capable of rapidly adapting to newly initialized classifiers. Such an approach may be considered a computationally cheaper type of, or alternative to, meta-learning rapidly adaptable features with higher-order gradients. This adds to recent work on the usefulness of resetting neural network parameters during training, and invites further investigation of this mechanism.
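As an illustration of what resetting last-layer weights can look like in code, here is a minimal sketch and not the authors' implementation. It assumes a network stored as a list of weight matrices (lists of rows) and a uniform initialization scheme chosen only for the example; the function names `init_layer` and `zap_last_layer` are hypothetical. Only the final layer is re-drawn, so the earlier feature layers are left untouched.

```python
import random

def init_layer(n_in, n_out, rng):
    """Return an n_out x n_in weight matrix with small uniform random values."""
    bound = 1.0 / (n_in ** 0.5)   # assumed init scale, for illustration only
    return [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]

def zap_last_layer(layers, rng, class_indices=None):
    """Re-initialize last-layer weights in place.

    class_indices selects which output rows to reset; None resets all of them.
    """
    last = layers[-1]
    fresh = init_layer(len(last[0]), len(last), rng)
    rows = range(len(last)) if class_indices is None else class_indices
    for r in rows:
        last[r] = fresh[r]
    return layers

rng = random.Random(0)
layers = [init_layer(4, 8, rng), init_layer(8, 3, rng)]   # hidden layer, classifier
before_hidden = [row[:] for row in layers[0]]
before_last = [row[:] for row in layers[-1]]

zap_last_layer(layers, rng)   # in training, this would be repeated between learning phases
```

In a training loop, a call like `zap_last_layer` would be interleaved with ordinary gradient updates, so that the earlier layers are repeatedly trained against a freshly initialized classifier.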

Many-objective Optimization via Voting for Elites

Jul 05, 2023

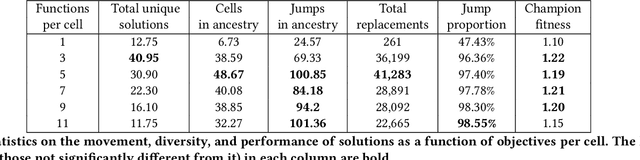

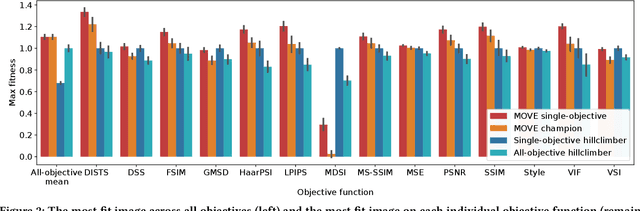

Abstract:Real-world problems are often comprised of many objectives and require solutions that carefully trade off between them. Current approaches to many-objective optimization often require challenging assumptions, like knowledge of the importance/difficulty of objectives in a weighted-sum single-objective paradigm, or enormous populations to overcome the curse of dimensionality in multi-objective Pareto optimization. Combining elements from Many-Objective Evolutionary Algorithms and Quality Diversity algorithms like MAP-Elites, we propose Many-objective Optimization via Voting for Elites (MOVE). MOVE maintains a map of elites that perform well on different subsets of the objective functions. On a 14-objective image-neuroevolution problem, we demonstrate that MOVE is viable with a population of as few as 50 elites and outperforms a naive single-objective baseline. We find that the algorithm's performance relies on solutions jumping across bins (for a parent to produce a child that is elite for a different subset of objectives). We suggest that this type of goal-switching is an implicit method for the automatic identification of stepping stones or curriculum learning. We comment on the similarities and differences between MOVE and MAP-Elites, hoping to provide insight to aid in the understanding of that approach -- and suggest future work that may inform this approach's use for many-objective problems in general.
