Many-objective Optimization via Voting for Elites

Paper and Code

Jul 05, 2023

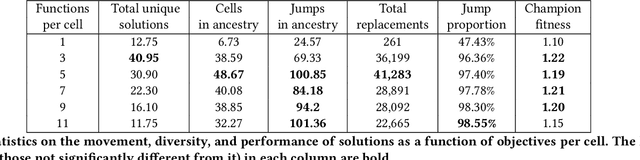

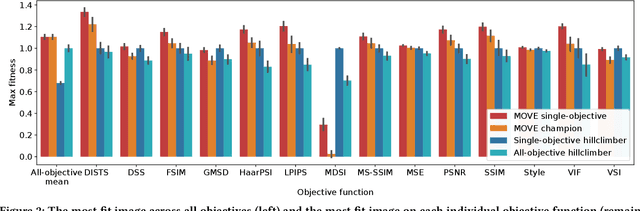

Real-world problems often comprise many objectives and require solutions that carefully trade off between them. Current approaches to many-objective optimization often require challenging assumptions, such as knowledge of the importance or difficulty of objectives in a weighted-sum single-objective paradigm, or enormous populations to overcome the curse of dimensionality in multi-objective Pareto optimization. Combining elements from Many-Objective Evolutionary Algorithms and Quality Diversity algorithms like MAP-Elites, we propose Many-objective Optimization via Voting for Elites (MOVE). MOVE maintains a map of elites that perform well on different subsets of the objective functions. On a 14-objective image-neuroevolution problem, we demonstrate that MOVE is viable with a population of as few as 50 elites and outperforms a naive single-objective baseline. We find that the algorithm's performance relies on solutions jumping across bins, that is, on a parent producing a child that is elite for a different subset of objectives. We suggest that this type of goal-switching is an implicit method for automatic identification of stepping stones or curriculum learning. We comment on the similarities and differences between MOVE and MAP-Elites, hoping to provide insight that aids understanding of that approach, and suggest future work that may inform this approach's use for many-objective problems in general.
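The abstract does not spell out the update rule, so the following is only a minimal sketch of how a map of elites over objective subsets might be maintained. Several pieces are assumptions made for illustration and are not taken from the paper: each cell is given a random subset of objectives of fixed size (`subset_size`), a child replaces a cell's elite when it wins a simple majority vote across that cell's objectives (`wins_vote`), parents are picked uniformly at random, and the toy objectives (`toy_evaluate`) stand in for the image-neuroevolution task. Only the 14 objectives and the 50 elites come from the text above.

```python
import random

N_OBJ = 14  # number of objectives, as in the abstract


def make_cells(n_objectives, n_cells, subset_size, rng):
    """Assign each cell a random subset of objective indices (assumed scheme)."""
    return [tuple(sorted(rng.sample(range(n_objectives), subset_size)))
            for _ in range(n_cells)]


def wins_vote(child_scores, elite_scores, subset):
    """Assumed voting rule: the child wins if it is better on more of the
    cell's objectives than it is worse (higher score = better)."""
    votes_for = sum(child_scores[i] > elite_scores[i] for i in subset)
    votes_against = sum(child_scores[i] < elite_scores[i] for i in subset)
    return votes_for > votes_against


def move_sketch(evaluate, random_solution, mutate, n_objectives,
                n_cells=50, subset_size=3, generations=200, seed=0):
    """Run a toy elite-map loop; returns (cells, elites, jumps)."""
    rng = random.Random(seed)
    cells = make_cells(n_objectives, n_cells, subset_size, rng)
    # One elite per cell, stored as (solution, scores).
    elites = []
    for _ in cells:
        solution = random_solution(rng)
        elites.append((solution, evaluate(solution)))
    jumps = 0  # replacements in a cell other than the parent's cell
    for _ in range(generations):
        parent_idx = rng.randrange(n_cells)
        child = mutate(elites[parent_idx][0], rng)
        child_scores = evaluate(child)
        # The child is offered to every cell, so it can become elite
        # for a different subset of objectives than its parent.
        for idx, subset in enumerate(cells):
            if wins_vote(child_scores, elites[idx][1], subset):
                elites[idx] = (child, child_scores)
                if idx != parent_idx:
                    jumps += 1
    return cells, elites, jumps


# Hypothetical toy problem: objective i prefers coordinate i close to i / N_OBJ.
def toy_evaluate(x):
    return [-(x[i] - i / N_OBJ) ** 2 for i in range(N_OBJ)]


def toy_random_solution(rng):
    return [rng.random() for _ in range(N_OBJ)]


def toy_mutate(x, rng):
    y = list(x)
    i = rng.randrange(len(y))
    y[i] += rng.gauss(0.0, 0.1)  # perturb a single coordinate
    return y


if __name__ == "__main__":
    cells, elites, jumps = move_sketch(
        toy_evaluate, toy_random_solution, toy_mutate, N_OBJ)
    print("cells:", len(cells), "cross-cell replacements:", jumps)
```

The `jumps` counter is included because the abstract attributes performance to solutions jumping across bins; in this sketch it simply counts replacements that land in a cell other than the parent's.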
