Netanel Frank

SimCol3D -- 3D Reconstruction during Colonoscopy Challenge

Jul 20, 2023

Abstract:Colorectal cancer is one of the most common cancers in the world. While colonoscopy is an effective screening technique, navigating an endoscope through the colon to detect polyps is challenging. A 3D map of the observed surfaces could enhance the identification of unscreened colon tissue and serve as a training platform. However, reconstructing the colon from video footage remains unsolved due to numerous factors such as self-occlusion, reflective surfaces, lack of texture, and tissue deformation that limit feature-based methods. Learning-based approaches hold promise as robust alternatives, but necessitate extensive datasets. By establishing a benchmark, the 2022 EndoVis sub-challenge SimCol3D aimed to facilitate data-driven depth and pose prediction during colonoscopy. The challenge was hosted as part of MICCAI 2022 in Singapore. Six teams from around the world and representatives from academia and industry participated in the three sub-challenges: synthetic depth prediction, synthetic pose prediction, and real pose prediction. This paper describes the challenge, the submitted methods, and their results. We show that depth prediction in virtual colonoscopy is robustly solvable, while pose estimation remains an open research question.

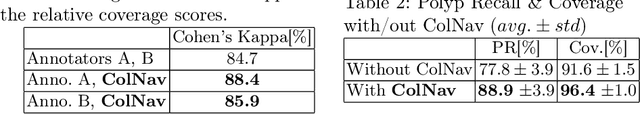

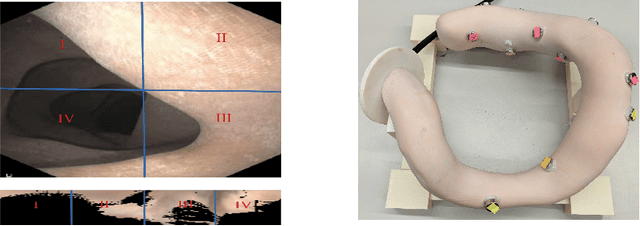

ColNav: Real-Time Colon Navigation for Colonoscopy

Jun 07, 2023

Abstract:Colorectal cancer screening through colonoscopy continues to be the dominant global standard, as it allows identifying pre-cancerous or adenomatous lesions and provides the ability to remove them during the procedure itself. Nevertheless, failure by the endoscopist to identify such lesions increases the likelihood of lesion progression to subsequent colorectal cancer. Ultimately, colonoscopy remains operator-dependent, and the wide range of quality in colonoscopy examinations among endoscopists is influenced by variations in their technique, training, and diligence. This paper presents a novel real-time navigation guidance system for Optical Colonoscopy (OC). Our proposed system employs a real-time approach that displays both an unfolded representation of the colon and a local indicator directing to un-inspected areas. These visualizations are presented to the physician during the procedure, providing actionable and comprehensible guidance to un-surveyed areas in real-time, while seamlessly integrating into the physician's workflow. Through coverage experimental evaluation, we demonstrated that our system resulted in a higher polyp recall (PR) and high inter-rater reliability with physicians for coverage prediction. These results suggest that our real-time navigation guidance system has the potential to improve the quality and effectiveness of Optical Colonoscopy and ultimately benefit patient outcomes.

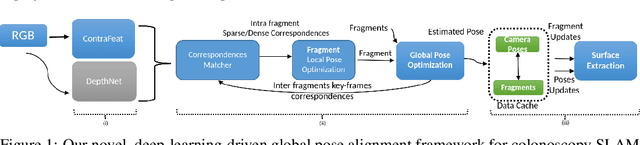

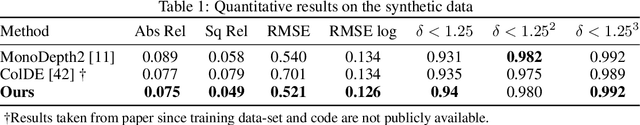

C$^3$Fusion: Consistent Contrastive Colon Fusion, Towards Deep SLAM in Colonoscopy

Jun 04, 2022

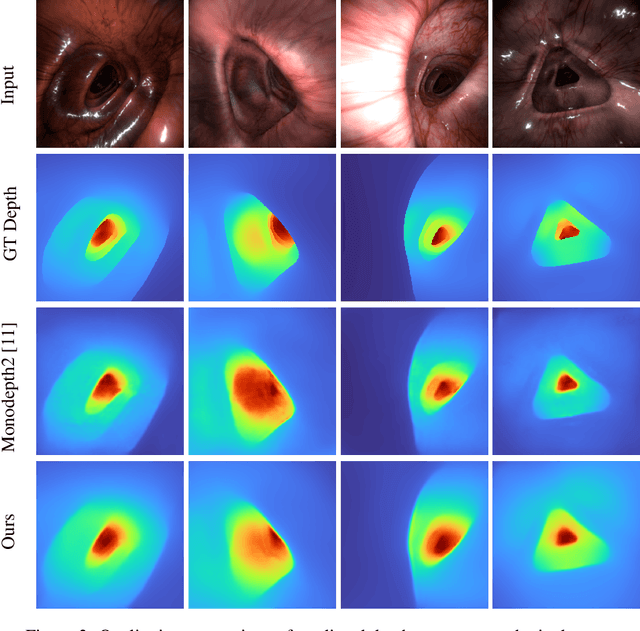

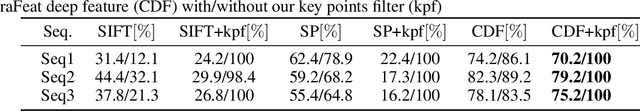

Abstract:3D colon reconstruction from Optical Colonoscopy (OC) to detect non-examined surfaces remains an unsolved problem. The challenges arise from the nature of optical colonoscopy data, characterized by highly reflective low-texture surfaces, drastic illumination changes and frequent tracking loss. Recent methods demonstrate compelling results, but either (1) suffer from fragile frame-to-frame (or frame-to-model) pose estimation, resulting in many tracking failures, or (2) rely on point-based representations at the cost of scan quality. In this paper, we propose a novel reconstruction framework that addresses these issues end to end, resulting in 3D colon reconstruction that is accurate and robust both quantitatively and qualitatively. Our SLAM approach, which employs correspondences based on contrastive deep features and deep consistent depth maps, estimates globally optimized poses, recovers from frequent tracking failures, and estimates a globally consistent 3D model, all within a single framework. We perform an extensive experimental evaluation on multiple synthetic and real colonoscopy videos, showing high-quality results and comparisons against relevant baselines.
