Naveen Sundar Govindarajulu

Rensselaer AI and Reasoning Lab

AI Can Stop Mass Shootings, and More

Feb 05, 2021

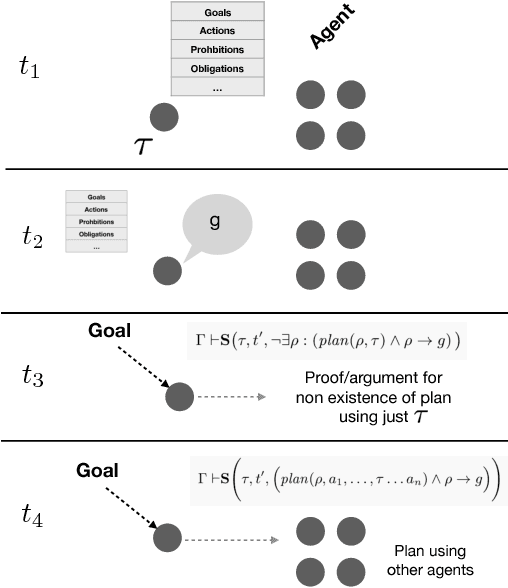

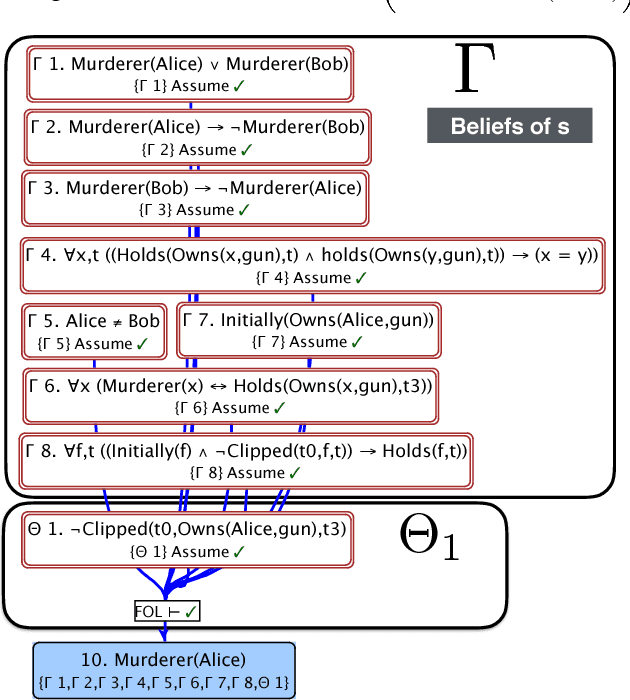

Abstract: We propose to build directly upon our longstanding, prior R&D in AI/machine ethics in order to attempt to make real the blue-sky idea of AI that can thwart mass shootings, by bringing to bear its ethical reasoning. The R&D in question is overtly and avowedly logicist in form, and since we are hardly the only ones who have established a firm foundation in the attempt to imbue AIs with their own ethical sensibility, the pursuit of our proposal by those in different methodological camps should, we believe, be considered as well. We seek herein to make our vision at least somewhat concrete by anchoring our exposition to two simulations, one in which the AI saves the lives of innocents by locking out a malevolent human's gun, and a second in which this malevolent agent is allowed by the AI to be neutralized by law enforcement. Along the way, some objections are anticipated, and rebutted.

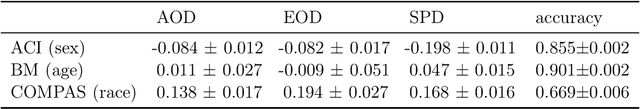

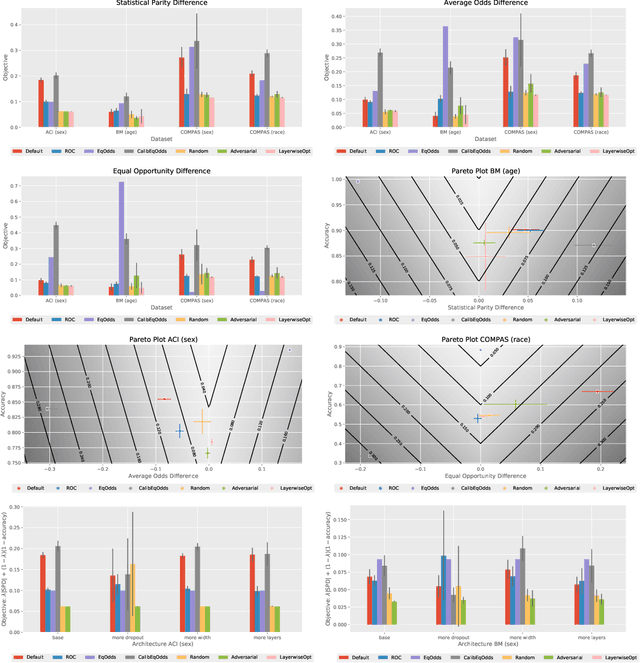

Post-Hoc Methods for Debiasing Neural Networks

Jun 15, 2020

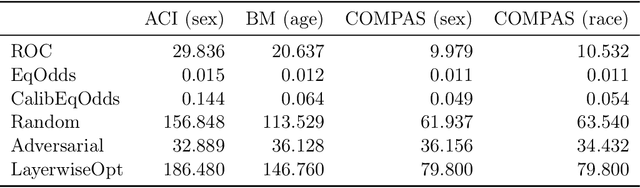

Abstract: As deep learning models become tasked with more and more decisions that impact human lives, such as hiring, criminal recidivism, and loan repayment, bias is becoming a growing concern. This has led to dozens of definitions of fairness and numerous algorithmic techniques to improve the fairness of neural networks. Most debiasing algorithms require retraining a neural network from scratch; however, this is not feasible in many applications, especially when the model takes days to train or when the full training dataset is no longer available. In this work, we present a study on post-hoc methods for debiasing neural networks. First we study the nature of the problem, showing that the difficulty of post-hoc debiasing is highly dependent on the initial conditions of the original model. Then we define three new fine-tuning techniques: random perturbation, layer-wise optimization, and adversarial fine-tuning. All three techniques work for any group fairness constraint. We give a comparison with six algorithms - three popular post-processing debiasing algorithms and our three proposed methods - across three datasets and three popular bias measures. We show that no post-hoc debiasing technique dominates all others, and we identify settings in which each algorithm performs the best. Our code is available at https://github.com/realityengines/post_hoc_debiasing.
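To make the idea of post-hoc debiasing by random perturbation more concrete, here is a minimal sketch in plain Python. It is not the authors' implementation (see their repository for that). It assumes a toy linear scorer in place of a neural network, uses statistical parity difference as the group fairness measure, and scores candidates with a hypothetical objective that adds the absolute bias to the error rate. All function names, the toy data, and the parameters `lam`, `sigma`, and `trials` are illustrative assumptions.

```python
import random

def predict(weights, bias, x, threshold=0.5):
    """Hard prediction of a toy linear scorer (a stand-in for a trained network)."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score >= threshold else 0

def statistical_parity_difference(preds, groups):
    """P(pred = 1 | group = 1) - P(pred = 1 | group = 0)."""
    rate = {}
    for g in (0, 1):
        members = [p for p, gi in zip(preds, groups) if gi == g]
        rate[g] = sum(members) / len(members)
    return rate[1] - rate[0]

def accuracy(preds, labels):
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)

def objective(weights, bias, xs, ys, groups, lam=1.0):
    """Assumed objective (lower is better): lam * |bias measure| + error rate."""
    preds = [predict(weights, bias, x) for x in xs]
    spd = statistical_parity_difference(preds, groups)
    return lam * abs(spd) + (1.0 - accuracy(preds, ys))

def random_perturbation(weights, bias, xs, ys, groups,
                        trials=200, sigma=0.1, seed=0):
    """Multiply parameters by Gaussian noise; keep the best-scoring candidate."""
    rng = random.Random(seed)
    best = (objective(weights, bias, xs, ys, groups), list(weights), bias)
    for _ in range(trials):
        cand_w = [w * rng.gauss(1.0, sigma) for w in weights]
        cand_b = bias * rng.gauss(1.0, sigma)
        score = objective(cand_w, cand_b, xs, ys, groups)
        if score < best[0]:
            best = (score, cand_w, cand_b)
    return best

# Toy data: two features, a binary label, and a binary protected-group flag.
xs = [[0.9, 0.2], [0.8, 0.1], [0.7, 0.3], [0.3, 0.6], [0.2, 0.9], [0.4, 0.4]]
ys = [1, 1, 1, 0, 0, 1]
groups = [1, 1, 1, 0, 0, 0]
weights, bias = [1.0, -0.5], 0.0

before = objective(weights, bias, xs, ys, groups)
after, new_weights, new_bias = random_perturbation(weights, bias, xs, ys, groups)
print(before, after)
```

Because the search starts from the original parameters and only accepts improvements, the returned objective is never worse than the starting one. In a realistic setting the candidates would presumably be scored on a held-out validation set rather than on the data shown here.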

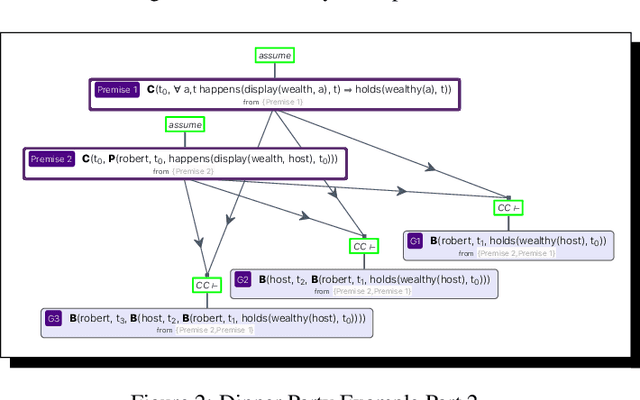

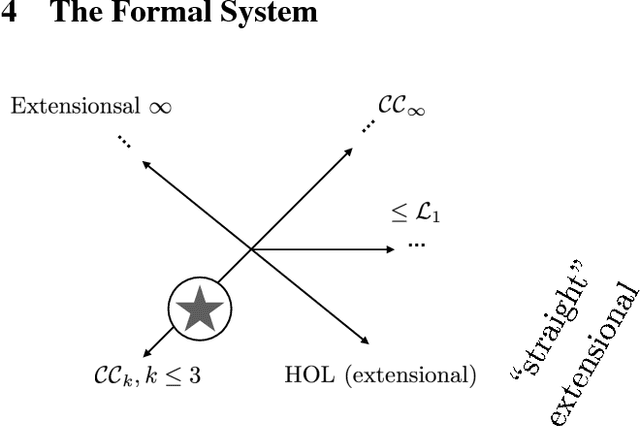

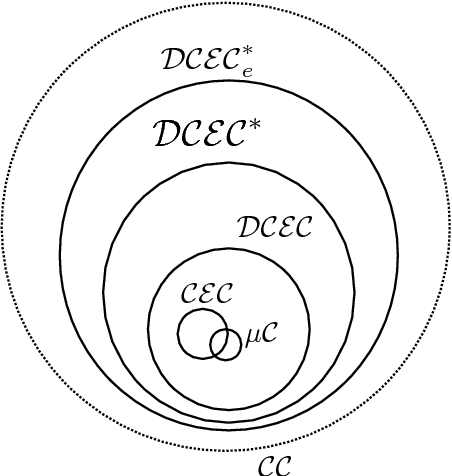

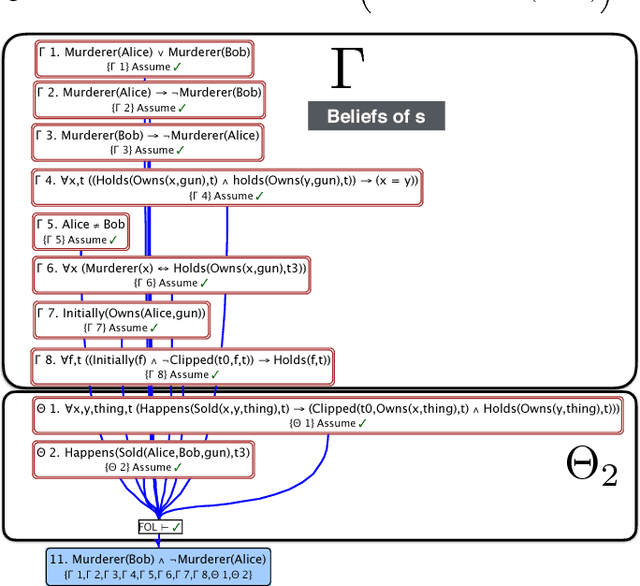

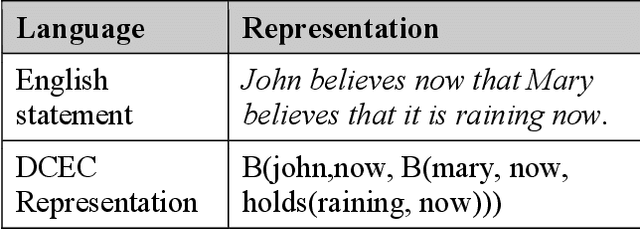

On Quantified Modal Theorem Proving for Modeling Ethics

Dec 30, 2019

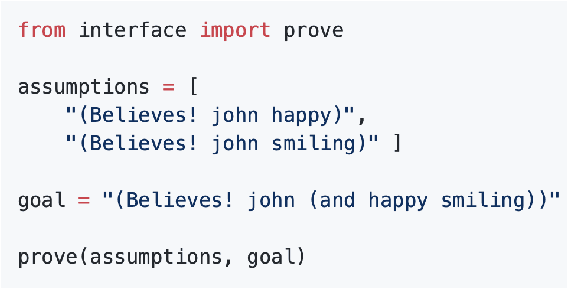

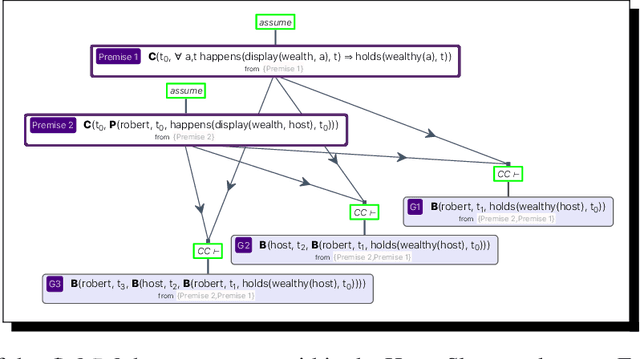

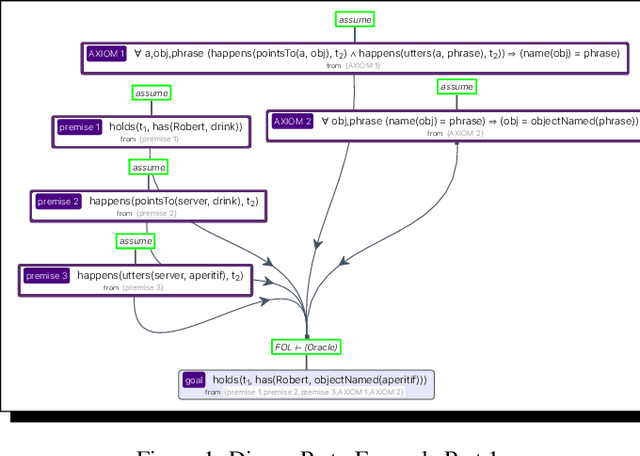

Abstract: In the last decade, formal logics have been used to model a wide range of ethical theories and principles with the goal of using these models within autonomous systems. Logics for modeling ethical theories, and their automated reasoners, have requirements that are different from modal logics used for other purposes, e.g. for temporal reasoning. Meeting these requirements necessitates investigation of new approaches for proof automation. Particularly, a quantified modal logic, the deontic cognitive event calculus (DCEC), has been used to model various versions of the doctrine of double effect, akrasia, and virtue ethics. Using a fragment of DCEC, we outline these distinct characteristics and present a sketch of an algorithm that can help with some aspects of proof automation for DCEC.

* In Proceedings ARCADE 2019, arXiv:1912.11786

Learning $\textit{Ex Nihilo}$

Mar 04, 2019

Abstract: This paper introduces, philosophically and to a degree formally, the novel concept of learning $\textit{ex nihilo}$, intended (obviously) to be analogous to the concept of creation $\textit{ex nihilo}$. Learning $\textit{ex nihilo}$ is an agent's learning "from nothing," by the suitable employment of schemata for deductive and inductive reasoning. This reasoning must be in machine-verifiable accord with a formal proof/argument theory in a $\textit{cognitive calculus}$ (i.e., roughly, an intensional higher-order multi-operator quantified logic), and this reasoning is applied to percepts received by the agent, in the context of both some prior knowledge, and some prior and current interests. Learning $\textit{ex nihilo}$ is a challenge to contemporary forms of ML, indeed a severe one, but the challenge is offered in the spirit of seeking to stimulate attempts, on the part of non-logicist ML researchers and engineers, to collaborate with those in possession of learning-$\textit{ex nihilo}$ frameworks, and eventually attempts to integrate directly with such frameworks at the implementation level. Such integration will require, among other things, the symbiotic interoperation of state-of-the-art automated reasoners and high-expressivity planners, with statistical/connectionist ML technology.

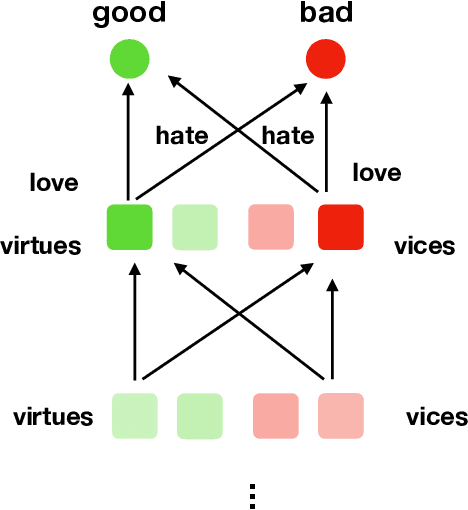

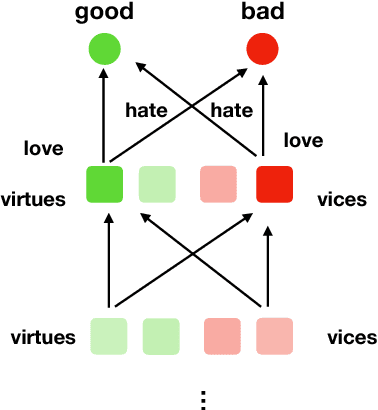

Toward the Engineering of Virtuous Machines

Dec 30, 2018

Abstract: While various traditions under the 'virtue ethics' umbrella have been studied extensively and advocated by ethicists, it has not been clear that there exists a version of virtue ethics rigorous enough to be a target for machine ethics (which we take to include the engineering of an ethical sensibility in a machine or robot itself, not only the study of ethics in the humans who might create artificial agents). We begin to address this by presenting an embryonic formalization of a key part of any virtue-ethics theory: namely, the learning of virtue by a focus on exemplars of moral virtue. Our work is based in part on a computational formal logic previously used to formally model other ethical theories and principles therein, and to implement these models in artificial agents.

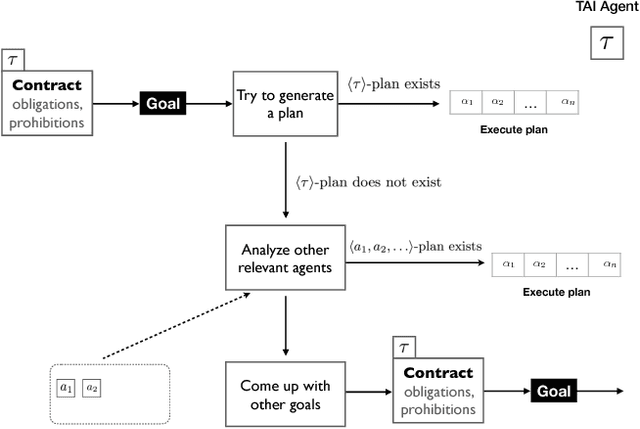

Tentacular Artificial Intelligence, and the Architecture Thereof, Introduced

Oct 14, 2018

Abstract: We briefly introduce herein a new form of distributed, multi-agent artificial intelligence, which we refer to as "tentacular." Tentacular AI is distinguished by six attributes, which among other things entail a capacity for reasoning and planning based in highly expressive calculi (logics), and which enlists subsidiary agents across distances circumscribed only by the reach of one or more given networks.

Strength Factors: An Uncertainty System for a Quantified Modal Logic

May 28, 2018

Abstract: We present a new system S for handling uncertainty in a quantified modal logic (first-order modal logic). The system is based on both probability theory and proof theory. The system is derived from Chisholm's epistemology. We concretize Chisholm's system by grounding his undefined and primitive (i.e. foundational) concept of reasonableness in probability and proof theory. S can be useful in systems that have to interact with humans and provide justifications for their uncertainty. As a demonstration of the system, we apply the system to provide a solution to the lottery paradox. Another advantage of the system is that it can be used to provide uncertainty values for counterfactual statements. Counterfactuals are statements that an agent knows for sure are false. Among other cases, counterfactuals are useful when systems have to explain their actions to users. Uncertainties for counterfactuals fall out naturally from our system. Efficient reasoning in just simple first-order logic is a hard problem. Resolution-based first-order reasoning systems have made significant progress over the last several decades in building systems that have solved non-trivial tasks (even unsolved conjectures in mathematics). We present a sketch of a novel algorithm for reasoning that extends first-order resolution. Finally, while there have been many systems of uncertainty for propositional logics, first-order logics and propositional modal logics, there has been very little work in building systems of uncertainty for first-order modal logics. The work described below is in progress and, once finished, will address this lack.
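For readers unfamiliar with the lottery paradox mentioned in this abstract, the following minimal sketch states the arithmetic behind it. It does not implement the system S or its strength factors. It only assumes a fair lottery with exactly one winning ticket and a simple acceptance rule ("believe a statement when its probability meets a threshold"); the function name and both parameters are illustrative.

```python
from fractions import Fraction

def lottery_paradox(n_tickets, threshold):
    """Return (P(one given ticket loses), accept each?, accept 'all lose'?)."""
    # In a fair lottery with exactly one winner, any single ticket
    # loses with probability (n - 1) / n.
    p_ticket_loses = Fraction(n_tickets - 1, n_tickets)
    # Exactly one ticket wins, so "every ticket loses" is impossible.
    p_all_lose = Fraction(0)
    accepts_each = p_ticket_loses >= threshold
    accepts_conjunction = p_all_lose >= threshold
    return p_ticket_loses, accepts_each, accepts_conjunction

print(lottery_paradox(1000, Fraction(99, 100)))
```

With 1000 tickets and a threshold of 99/100, the rule accepts "ticket i loses" for every individual ticket yet rejects the conjunction of those same statements. That tension is what any account of uncertainty offering a solution to the paradox has to resolve.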

One Formalization of Virtue Ethics via Learning

May 20, 2018

Abstract: Given that there exist many different formal and precise treatments of deontological and consequentialist ethics, we turn to virtue ethics and consider what could be a formalization of virtue ethics that makes it amenable to automation. We present an embryonic formalization in a cognitive calculus (which subsumes a quantified first-order logic) that has been previously used to model robust ethical principles, in both the deontological and consequentialist traditions.

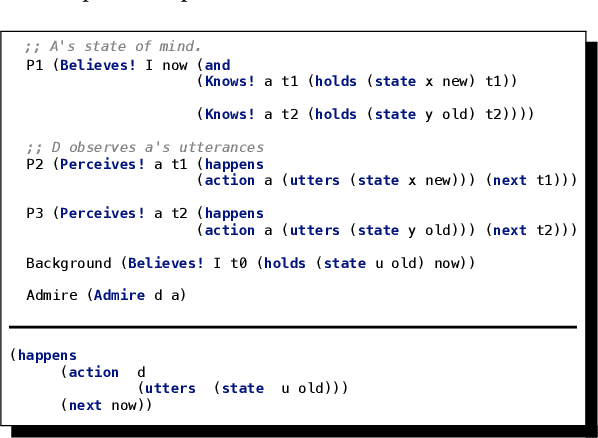

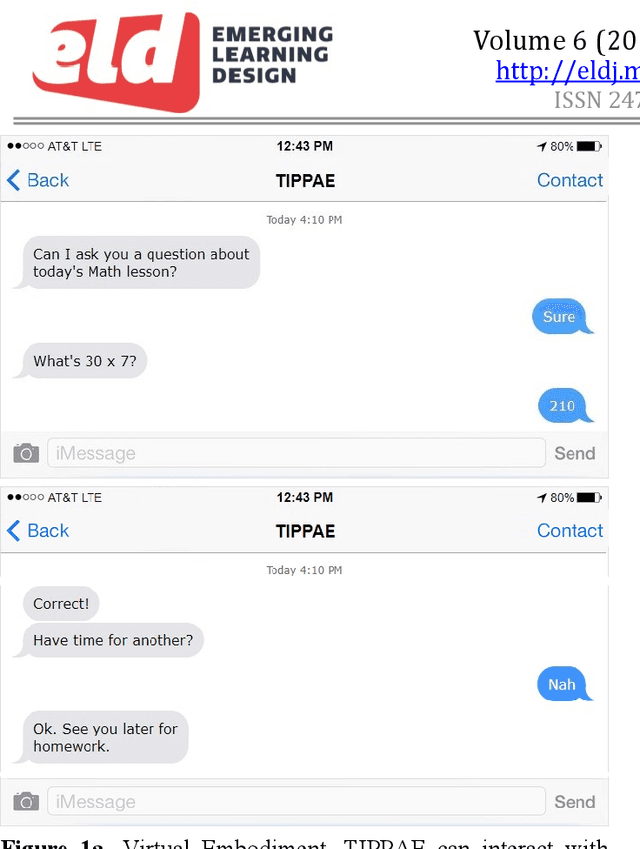

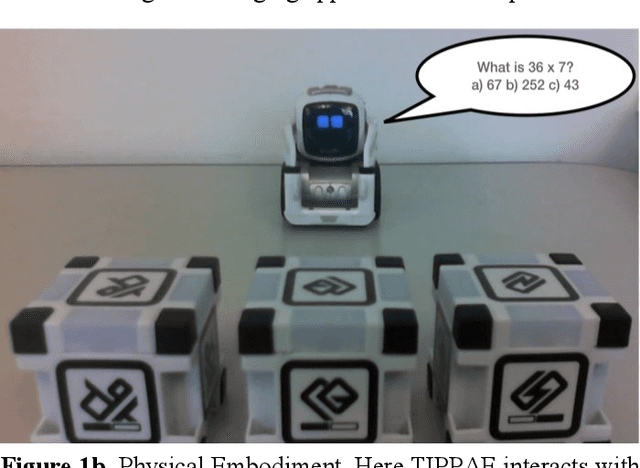

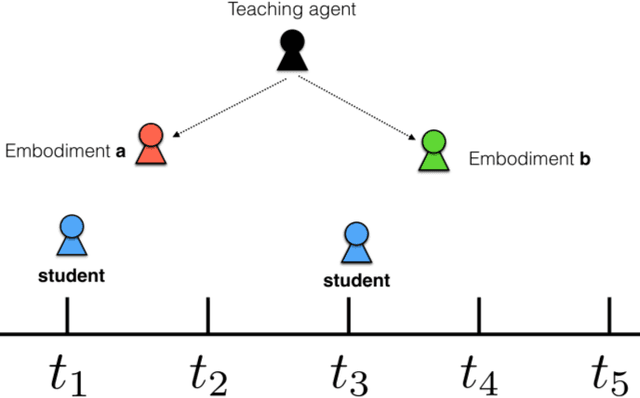

Toward Formalizing Teleportation of Pedagogical Artificial Agents

Apr 10, 2018

Abstract: Our paradigm for the use of artificial agents to teach requires among other things that they persist through time in their interaction with human students, in such a way that they "teleport" or "migrate" from an embodiment at one time t to a different embodiment at later time t'. In this short paper, we report on initial steps toward the formalization of such teleportation, in order to enable an overseeing AI system to establish, mechanically, and verifiably, that the human students in question will likely believe that the very same artificial agent has persisted across such times despite the different embodiments.

Counterfactual Conditionals in Quantified Modal Logic

Nov 02, 2017

Abstract: We present a novel formalization of counterfactual conditionals in a quantified modal logic. Counterfactual conditionals play a vital role in ethical and moral reasoning. Prior work has shown that moral reasoning systems (and more generally, theory-of-mind reasoning systems) should be at least as expressive as first-order (quantified) modal logic (QML) to be well-behaved. While existing work on moral reasoning has focused on counterfactual-free QML moral reasoning, we present a fully specified and implemented formal system that includes counterfactual conditionals. We validate our model with two projects. In the first project, we demonstrate that our system can be used to model a complex moral principle, the doctrine of double effect. In the second project, we use the system to build a data-set with true and false counterfactuals as licensed by our theory, which we believe can be useful for other researchers. This project also shows that our model can be computationally feasible.
