Michael Giancola

AI Can Stop Mass Shootings, and More

Feb 05, 2021

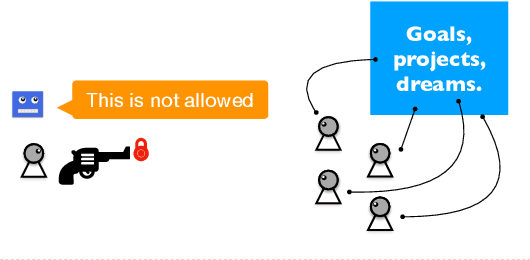

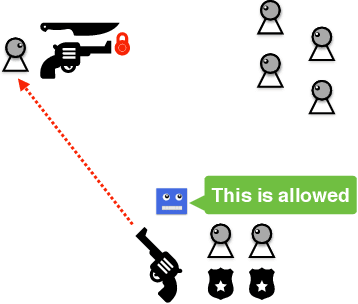

Abstract: We propose to build directly upon our longstanding prior R&D in AI/machine ethics in order to attempt to make real the blue-sky idea of AI that can thwart mass shootings by bringing to bear its ethical reasoning. The R&D in question is overtly and avowedly logicist in form, and since we are hardly the only ones who have established a firm foundation in the attempt to imbue AIs with their own ethical sensibility, the pursuit of our proposal by those in different methodological camps should, we believe, be considered as well. We seek herein to make our vision at least somewhat concrete by anchoring our exposition to two simulations, one in which the AI saves the lives of innocents by locking out a malevolent human's gun, and a second in which this malevolent agent is allowed by the AI to be neutralized by law enforcement. Along the way, some objections are anticipated and rebutted.

A Minimalistic Approach to Segregation in Robot Swarms

Jan 29, 2019

Abstract: We present a decentralized algorithm to achieve segregation into an arbitrary number of groups with swarms of autonomous robots. The distinguishing feature of our approach is the minimalistic set of assumptions on which it is based. Specifically, we assume that (i) each robot is equipped with a ternary sensor capable of detecting the presence of a single nearby robot and, if that robot is present, whether or not it belongs to the same group as the sensing robot; (ii) the robots move according to a differential drive model; and (iii) the structure of the control system is purely reactive, mapping the sensor readings directly to the wheel speeds with a simple 'if' statement. We present a thorough analysis of the parameter space that enables this behavior to emerge, along with conditions for guaranteed convergence and a study of non-ideal aspects in the robot design.
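
The three assumptions in this abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the names `Reading`, `WHEEL_SPEEDS`, `controller`, and `step`, along with every numeric value (wheel speeds, wheel base, time step), are hypothetical placeholders chosen only to show the shape of a ternary-sensor reactive controller driving a standard differential drive kinematic model.

```python
import math
from enum import Enum


class Reading(Enum):
    """Ternary sensor output (assumption i)."""
    NONE = 0   # no robot detected
    SAME = 1   # detected robot belongs to the same group
    OTHER = 2  # detected robot belongs to a different group


# Hypothetical (left, right) wheel speeds per reading.
# These are illustrative placeholders, not values from the paper.
WHEEL_SPEEDS = {
    Reading.NONE: (1.0, 0.6),
    Reading.SAME: (0.6, 1.0),
    Reading.OTHER: (1.0, -1.0),
}


def controller(reading):
    """Purely reactive controller (assumption iii): one 'if' statement,
    no memory, sensor reading mapped directly to wheel speeds."""
    if reading is Reading.NONE:
        return WHEEL_SPEEDS[Reading.NONE]
    elif reading is Reading.SAME:
        return WHEEL_SPEEDS[Reading.SAME]
    else:
        return WHEEL_SPEEDS[Reading.OTHER]


def step(x, y, theta, v_left, v_right, wheel_base=0.1, dt=0.1):
    """One Euler step of the differential drive model (assumption ii).
    wheel_base and dt are assumed values."""
    v = (v_left + v_right) / 2.0              # forward speed
    omega = (v_right - v_left) / wheel_base   # turning rate
    x += v * math.cos(theta) * dt
    y += v * math.sin(theta) * dt
    theta += omega * dt
    return x, y, theta


# Usage: a robot at the origin that senses a robot from another group.
pose = step(0.0, 0.0, 0.0, *controller(Reading.OTHER))
```

With the placeholder speeds above, a reading of `Reading.OTHER` makes the robot turn in place, since the two wheel speeds are equal and opposite. Which speed triples actually produce segregation is the subject of the paper's parameter-space analysis and is not reproduced here.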
