Post-Hoc Methods for Debiasing Neural Networks

Paper and Code

Jun 15, 2020

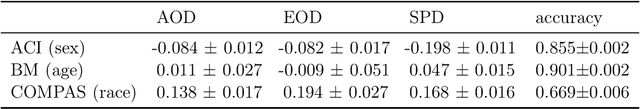

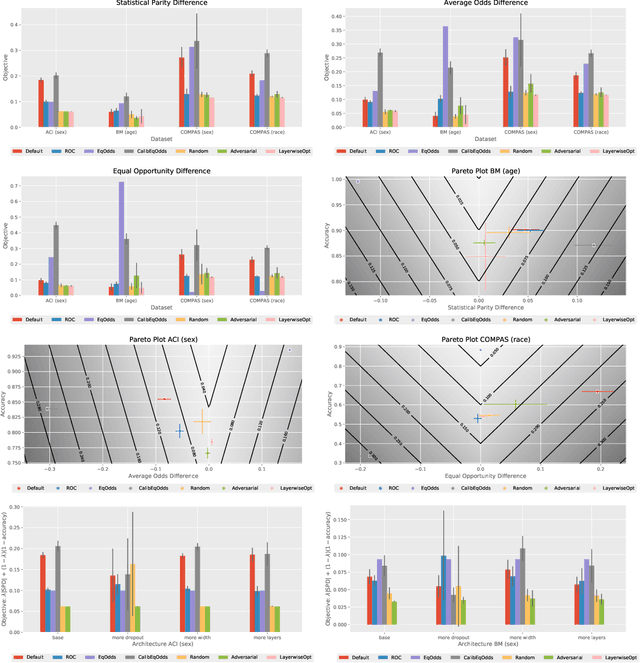

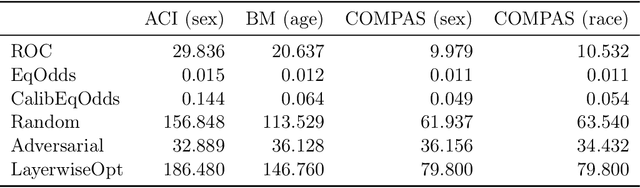

As deep learning models are tasked with more and more decisions that impact human lives, in areas such as hiring, criminal recidivism prediction, and loan repayment, bias is becoming a growing concern. This has led to dozens of definitions of fairness and numerous algorithmic techniques to improve the fairness of neural networks. Most debiasing algorithms require retraining a neural network from scratch. However, this is not feasible in many applications, especially when the model takes days to train or when the full training dataset is no longer available. In this work, we present a study on post-hoc methods for debiasing neural networks. First, we study the nature of the problem, showing that the difficulty of post-hoc debiasing is highly dependent on the initial conditions of the original model. Then we define three new fine-tuning techniques: random perturbation, layer-wise optimization, and adversarial fine-tuning. All three techniques work for any group fairness constraint. We compare six algorithms (three popular post-processing debiasing algorithms and our three proposed methods) across three datasets and three popular bias measures. We show that no post-hoc debiasing technique dominates all others, and we identify settings in which each algorithm performs best. Our code is available at https://github.com/realityengines/post_hoc_debiasing.
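To make the idea of a group fairness measure and a random-perturbation search concrete, here is a minimal sketch in plain Python. It is not the authors' implementation (see the linked repository for that). The choice of statistical parity difference as the bias measure, the multiplicative Gaussian noise, the linear stand-in model, and all function and parameter names (`random_perturbation`, `lam`, `scale`, `trials`) are assumptions made for illustration only.

```python
import random


def statistical_parity_difference(preds, groups):
    """P(pred = 1 | group = 0) - P(pred = 1 | group = 1).

    Assumes binary predictions, a binary protected attribute,
    and at least one sample in each group.
    """
    rates = {}
    for g in (0, 1):
        members = [p for p, gg in zip(preds, groups) if gg == g]
        rates[g] = sum(members) / len(members)
    return rates[0] - rates[1]


def predict(weights, X):
    """Threshold a linear score at 0 (a stand-in for a trained network)."""
    return [1 if sum(w * x for w, x in zip(weights, row)) > 0 else 0
            for row in X]


def accuracy(preds, labels):
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def random_perturbation(weights, X, y, groups,
                        trials=200, scale=0.1, lam=1.0, seed=0):
    """Sample noisy copies of the weights and keep the best one.

    The objective (error + lam * |bias|) and the multiplicative
    Gaussian noise are illustrative assumptions.
    """
    rng = random.Random(seed)

    def objective(w):
        preds = predict(w, X)
        bias = abs(statistical_parity_difference(preds, groups))
        return (1.0 - accuracy(preds, y)) + lam * bias

    best_w, best_obj = list(weights), objective(weights)
    for _ in range(trials):
        # Each candidate perturbs the ORIGINAL weights, not the current best.
        cand = [w * (1.0 + rng.gauss(0.0, scale)) for w in weights]
        obj = objective(cand)
        if obj < best_obj:
            best_w, best_obj = cand, obj
    return best_w, best_obj
```

In practice the objective would be evaluated on a held-out validation set, and any other group fairness measure could replace `statistical_parity_difference` without changing the search loop.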
