Matthew Peveler

Rensselaer Polytechnic Institute

On Quantified Modal Theorem Proving for Modeling Ethics

Dec 30, 2019

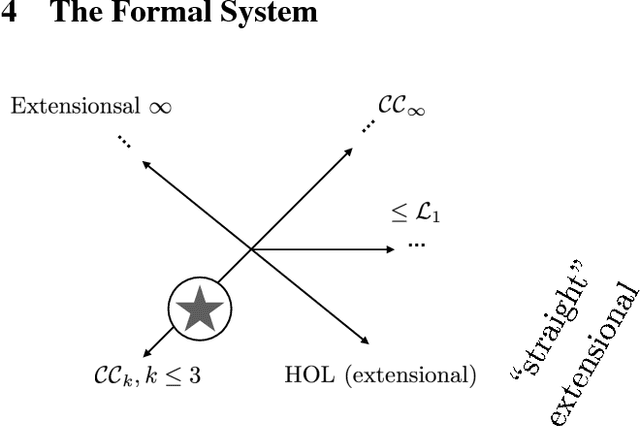

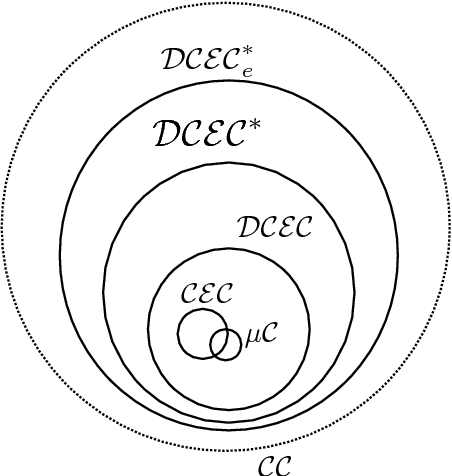

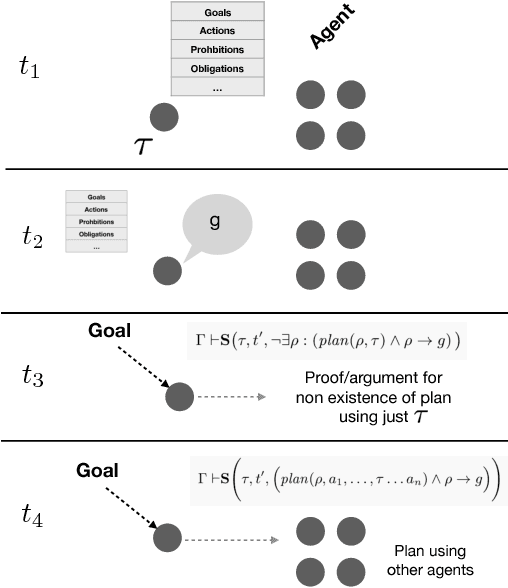

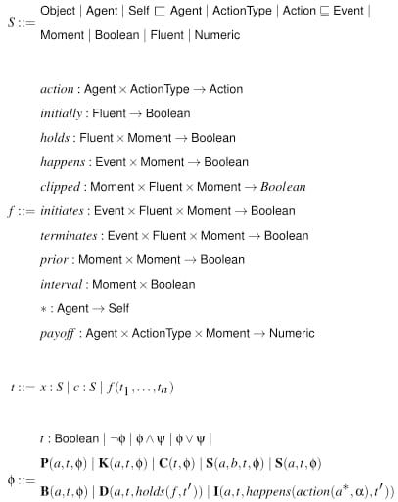

Abstract: In the last decade, formal logics have been used to model a wide range of ethical theories and principles with the goal of using these models within autonomous systems. Logics for modeling ethical theories, and their automated reasoners, have requirements that are different from modal logics used for other purposes, e.g. for temporal reasoning. Meeting these requirements necessitates investigation of new approaches for proof automation. Particularly, a quantified modal logic, the deontic cognitive event calculus (DCEC), has been used to model various versions of the doctrine of double effect, akrasia, and virtue ethics. Using a fragment of DCEC, we outline these distinct characteristics and present a sketch of an algorithm that can help with some aspects of proof automation for DCEC.

* In Proceedings ARCADE 2019, arXiv:1912.11786

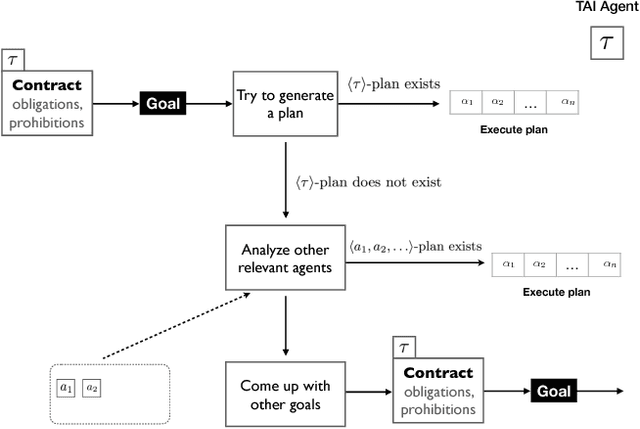

Tentacular Artificial Intelligence, and the Architecture Thereof, Introduced

Oct 14, 2018

Abstract: We briefly introduce herein a new form of distributed, multi-agent artificial intelligence, which we refer to as "tentacular." Tentacular AI is distinguished by six attributes, which among other things entail a capacity for reasoning and planning based in highly expressive calculi (logics), and which enlists subsidiary agents across distances circumscribed only by the reach of one or more given networks.

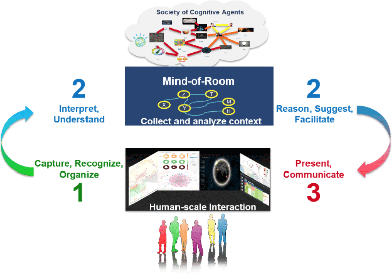

Towards Cognitive-and-Immersive Systems: Experiments in a Shared (or common) Blockworld Framework

Sep 14, 2017

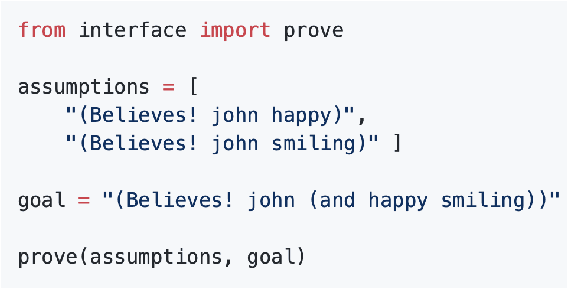

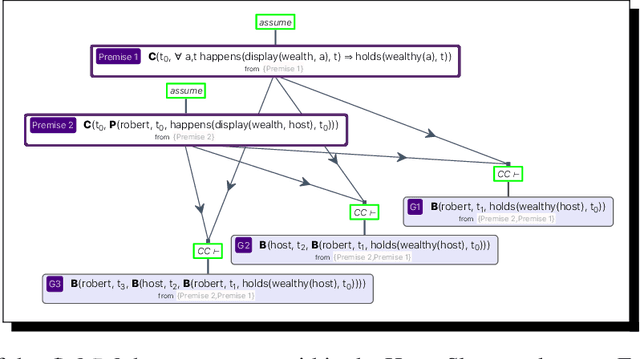

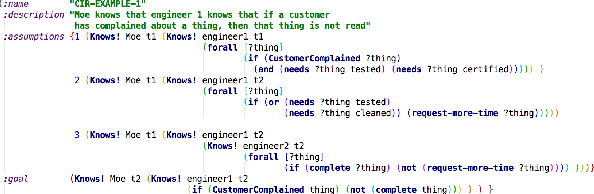

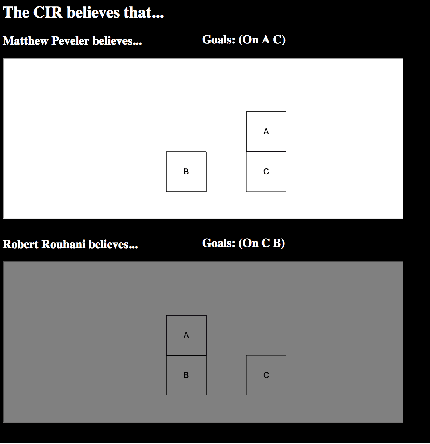

Abstract: As computational power has continued to increase, and sensors have become more accurate, the corresponding advent of systems that are cognitive-and-immersive (CAI) has come to pass. CAI systems fall squarely into the intersection of AI with HCI/HRI: such systems interact with and assist the human agents that enter them, in no small part because such systems are infused with AI able to understand and reason about these humans and their beliefs, goals, and plans. We herein explain our approach to engineering CAI systems. We emphasize the capacity of a CAI system to develop and reason over a "theory of the mind" of its human partners. This capacity means that the AI in question has a sophisticated model of the beliefs, knowledge, goals, desires, emotions, etc. of these humans. To accomplish this engineering, a formal framework of very high expressivity is needed. In our case, this framework is a *cognitive event calculus*, a particular kind of quantified multi-modal logic, and a matching high-expressivity planner. To explain, advance, and to a degree validate our approach, we show that a calculus of this type can enable a CAI system to understand a psychologically tricky scenario couched in what we call the *cognitive blockworld framework* (CBF). CBF includes machinery able to represent and plan over not merely blocks and actions, but also agents and their mental attitudes about other agents.
