Nam Ik Cho

PhaseMark: A Post-hoc, Optimization-Free Watermarking of AI-generated Images in the Latent Frequency Domain

Jan 19, 2026

Abstract: The proliferation of hyper-realistic images from Latent Diffusion Models (LDMs) demands robust watermarking, yet existing post-hoc methods are prohibitively slow due to iterative optimization or inversion processes. We introduce PhaseMark, a single-shot, optimization-free framework that directly modulates the phase in the VAE latent frequency domain. This approach makes PhaseMark thousands of times faster than optimization-based techniques while achieving state-of-the-art resilience against severe attacks, including regeneration, without degrading image quality. We analyze four modulation variants, revealing a clear performance-quality trade-off. PhaseMark demonstrates a new paradigm where efficient, resilient watermarking is achieved by exploiting intrinsic latent properties.

ParallelBench: Understanding the Trade-offs of Parallel Decoding in Diffusion LLMs

Oct 06, 2025

Abstract: While most autoregressive LLMs are constrained to one-by-one decoding, diffusion LLMs (dLLMs) have attracted growing interest for their potential to dramatically accelerate inference through parallel decoding. Despite this promise, the conditional independence assumption in dLLMs causes parallel decoding to ignore token dependencies, inevitably degrading generation quality when these dependencies are strong. However, existing works largely overlook these inherent challenges, and evaluations on standard benchmarks (e.g., math and coding) are not sufficient to capture the quality degradation caused by parallel decoding. To address this gap, we first provide an information-theoretic analysis of parallel decoding. We then conduct case studies on analytically tractable synthetic list operations from both data distribution and decoding strategy perspectives, offering quantitative insights that highlight the fundamental limitations of parallel decoding. Building on these insights, we propose ParallelBench, the first benchmark specifically designed for dLLMs, featuring realistic tasks that are trivial for humans and autoregressive LLMs yet exceptionally challenging for dLLMs under parallel decoding. Using ParallelBench, we systematically analyze both dLLMs and autoregressive LLMs, revealing that: (i) dLLMs under parallel decoding can suffer dramatic quality degradation in real-world scenarios, and (ii) current parallel decoding strategies struggle to adapt their degree of parallelism based on task difficulty, thus failing to achieve meaningful speedup without compromising quality. Our findings underscore the pressing need for innovative decoding methods that can overcome the current speed-quality trade-off. We release our benchmark to help accelerate the development of truly efficient dLLMs.
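The conditional independence issue described in this abstract can be illustrated with a toy example. The sketch below is not taken from the paper or from ParallelBench; the two-token "shuffle" distribution and all function names are assumptions chosen for illustration. It compares a joint distribution over two tokens with the product of its per-position marginals, which is what sampling every position independently in a single step amounts to.

```python
from itertools import product

# Hypothetical target distribution: a shuffled two-item list, so the only
# valid outputs are ("A", "B") and ("B", "A"), each with probability 0.5.
joint = {("A", "B"): 0.5, ("B", "A"): 0.5}


def marginals(joint_dist, length):
    """Per-position marginal distributions of a joint over token sequences."""
    result = []
    for pos in range(length):
        marginal = {}
        for seq, prob in joint_dist.items():
            marginal[seq[pos]] = marginal.get(seq[pos], 0.0) + prob
        result.append(marginal)
    return result


def product_of_marginals(margs):
    """Distribution obtained by sampling each position independently."""
    dist = {}
    for combo in product(*(m.items() for m in margs)):
        seq = tuple(token for token, _ in combo)
        prob = 1.0
        for _, p in combo:
            prob *= p
        dist[seq] = prob
    return dist


# Decoding both positions in one parallel step ignores their dependency.
parallel = product_of_marginals(marginals(joint, 2))

# Probability mass that lands on sequences the true distribution allows.
valid_mass = sum(p for seq, p in parallel.items() if seq in joint)
print(parallel)
print("valid mass:", valid_mass)
```

In this toy setting each position is uniform over the two tokens, so independent sampling puts half of its probability on the invalid outputs ("A", "A") and ("B", "B"). Decoding one token at a time, conditioned on the other, would recover the joint exactly.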

Dataset Distillation for Super-Resolution without Class Labels and Pre-trained Models

Sep 18, 2025

Abstract: Training deep neural networks has become increasingly demanding, requiring large datasets and significant computational resources, especially as model complexity advances. Data distillation methods, which aim to improve data efficiency, have emerged as promising solutions to this challenge. In the field of single image super-resolution (SISR), the reliance on large training datasets highlights the importance of these techniques. Recently, a generative adversarial network (GAN) inversion-based data distillation framework for SR was proposed, showing potential for better data utilization. However, the current method depends heavily on pre-trained SR networks and class-specific information, limiting its generalizability and applicability. To address these issues, we introduce a new data distillation approach for image SR that does not need class labels or pre-trained SR models. In particular, we first extract high-gradient patches and categorize images based on CLIP features, then fine-tune a diffusion model on the selected patches to learn their distribution and synthesize distilled training images. Experimental results show that our method achieves state-of-the-art performance while using significantly less training data and requiring less computational time. Specifically, when we train a baseline Transformer model for SR with only 0.68% of the original dataset, the performance drop is just 0.3 dB. In this case, diffusion model fine-tuning takes 4 hours, and SR model training completes within 1 hour, much shorter than the 11-hour training time with the full dataset.

Semantic Watermarking Reinvented: Enhancing Robustness and Generation Quality with Fourier Integrity

Sep 09, 2025

Abstract: Semantic watermarking techniques for latent diffusion models (LDMs) are robust against regeneration attacks, but often suffer from detection performance degradation due to the loss of frequency integrity. To tackle this problem, we propose a novel embedding method called Hermitian Symmetric Fourier Watermarking (SFW), which maintains frequency integrity by enforcing Hermitian symmetry. Additionally, we introduce a center-aware embedding strategy that reduces the vulnerability of semantic watermarking to cropping attacks by ensuring robust information retention. To validate our approach, we apply these techniques to existing semantic watermarking schemes, enhancing their frequency-domain structures for better robustness and retrieval accuracy. Extensive experiments demonstrate that our methods achieve state-of-the-art verification and identification performance, surpassing previous approaches across various attack scenarios. Ablation studies confirm the impact of SFW on detection capabilities, the effectiveness of the center-aware embedding against cropping, and how message capacity influences identification accuracy. Notably, our method achieves the highest detection accuracy while maintaining superior image fidelity, as evidenced by FID and CLIP scores. In conclusion, the proposed SFW is shown to be an effective framework for balancing robustness and image fidelity, addressing the inherent trade-offs in semantic watermarking. Code is available at https://github.com/thomas11809/SFWMark.
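Why Hermitian symmetry matters for a Fourier-domain signal can be shown with a short NumPy sketch. This is not the authors' SFW implementation (see the linked repository for that); the function name `enforce_hermitian` and the random 8x8 spectrum are assumptions made for illustration. The sketch relies only on a standard property of the discrete Fourier transform: a spectrum F satisfies F[k1, k2] = conj(F[-k1, -k2]) exactly when its inverse transform is real-valued.

```python
import numpy as np


def enforce_hermitian(spectrum: np.ndarray) -> np.ndarray:
    """Project a 2D spectrum onto the Hermitian-symmetric spectra.

    Hypothetical helper for illustration only. The result satisfies
    F[k1, k2] == conj(F[-k1 mod H, -k2 mod W]).
    """
    # Flip both axes, then roll by one so that index k maps to (-k mod N).
    index_reversed = np.roll(np.flip(spectrum, axis=(0, 1)), shift=1, axis=(0, 1))
    return 0.5 * (spectrum + np.conj(index_reversed))


rng = np.random.default_rng(0)

# An arbitrary complex spectrum, standing in for a frequency-domain pattern.
spectrum = rng.standard_normal((8, 8)) + 1j * rng.standard_normal((8, 8))
symmetric = enforce_hermitian(spectrum)

# Without symmetry the spatial signal is complex, so keeping only its real
# part discards information. With symmetry the imaginary part vanishes.
max_imag_before = np.abs(np.fft.ifft2(spectrum).imag).max()
max_imag_after = np.abs(np.fft.ifft2(symmetric).imag).max()
print("max |imag| without symmetry:", max_imag_before)
print("max |imag| with symmetry:   ", max_imag_after)
```

The projection used here is equivalent to transforming the spectrum to the spatial domain, keeping the real part, and transforming back, which is one way to see that a non-symmetric pattern is altered whenever it is forced into a real-valued signal.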

UNCAGE: Contrastive Attention Guidance for Masked Generative Transformers in Text-to-Image Generation

Aug 07, 2025

Abstract: Text-to-image (T2I) generation has been actively studied using Diffusion Models and Autoregressive Models. Recently, Masked Generative Transformers have gained attention as an alternative to Autoregressive Models to overcome the inherent limitations of causal attention and autoregressive decoding through bidirectional attention and parallel decoding, enabling efficient and high-quality image generation. However, compositional T2I generation remains challenging, as even state-of-the-art Diffusion Models often fail to accurately bind attributes and achieve proper text-image alignment. While Diffusion Models have been extensively studied for this issue, Masked Generative Transformers exhibit similar limitations but have not been explored in this context. To address this, we propose Unmasking with Contrastive Attention Guidance (UNCAGE), a novel training-free method that improves compositional fidelity by leveraging attention maps to prioritize the unmasking of tokens that clearly represent individual objects. UNCAGE consistently improves performance in both quantitative and qualitative evaluations across multiple benchmarks and metrics, with negligible inference overhead. Our code is available at https://github.com/furiosa-ai/uncage.

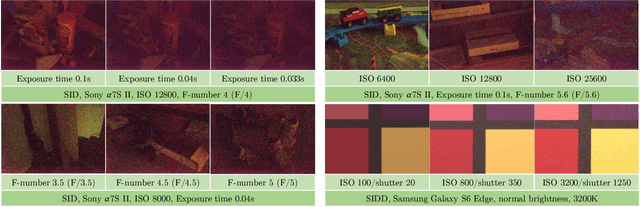

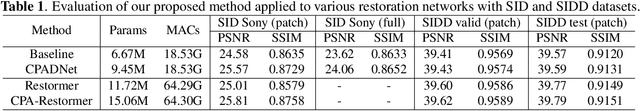

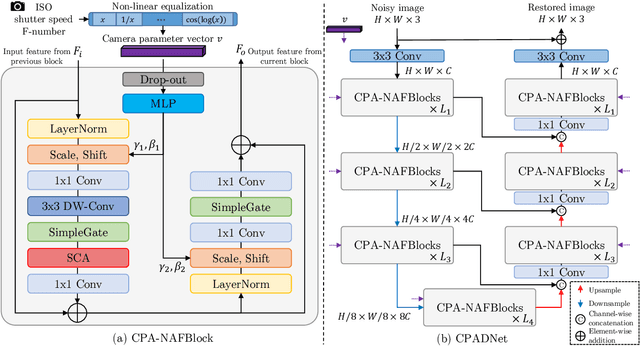

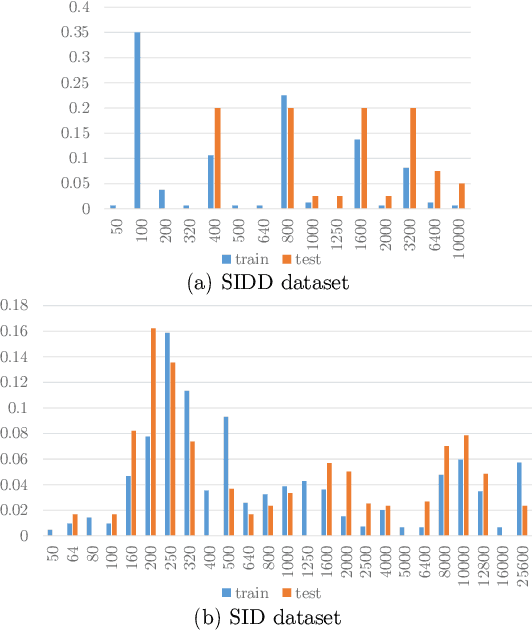

Towards Controllable Real Image Denoising with Camera Parameters

Jul 02, 2025

Abstract: Recent deep learning-based image denoising methods have shown impressive performance; however, many lack the flexibility to adjust the denoising strength based on the noise levels, camera settings, and user preferences. In this paper, we introduce a new controllable denoising framework that adaptively removes noise from images by utilizing information from camera parameters. Specifically, we focus on ISO, shutter speed, and F-number, which are closely related to noise levels. We convert these selected parameters into a vector to control and enhance the performance of the denoising network. Experimental results show that our method seamlessly adds controllability to standard denoising neural networks and improves their performance. Code is available at https://github.com/OBAKSA/CPADNet.

Enhancing Multi-Exposure High Dynamic Range Imaging with Overlapped Codebook for Improved Representation Learning

Jul 02, 2025

Abstract: High dynamic range (HDR) imaging aims to create realistic HDR images from low dynamic range (LDR) inputs. Specifically, multi-exposure HDR imaging uses multiple LDR frames taken from the same scene to improve reconstruction performance. However, there are often discrepancies in motion among the frames, and different exposure settings for each capture can lead to saturated regions. In this work, we first propose an Overlapped codebook (OLC) scheme, which can improve the capability of the VQGAN framework for learning implicit HDR representations by modeling the common exposure bracket process in the shared codebook structure. Further, we develop a new HDR network that utilizes HDR representations obtained from a pre-trained VQ network and OLC. This allows us to compensate for saturated regions and enhance overall visual quality. We have tested our approach extensively on various datasets and have demonstrated that it outperforms previous methods both qualitatively and quantitatively.

RefPose: Leveraging Reference Geometric Correspondences for Accurate 6D Pose Estimation of Unseen Objects

May 16, 2025

Abstract: Estimating the 6D pose of unseen objects from monocular RGB images remains a challenging problem, especially due to the lack of prior object-specific knowledge. To tackle this issue, we propose RefPose, an innovative approach to object pose estimation that leverages a reference image and geometric correspondence as guidance. RefPose first predicts an initial pose by using object templates to render the reference image and establish the geometric correspondence needed for the refinement stage. During the refinement stage, RefPose estimates the geometric correspondence of the query based on the generated references and iteratively refines the pose through a render-and-compare approach. To enhance this estimation, we introduce a correlation volume-guided attention mechanism that effectively captures correlations between the query and reference images. Unlike traditional methods that depend on pre-defined object models, RefPose dynamically adapts to new object shapes by leveraging a reference image and geometric correspondence. This results in robust performance across previously unseen objects. Extensive evaluation on the BOP benchmark datasets shows that RefPose achieves state-of-the-art results while maintaining a competitive runtime.

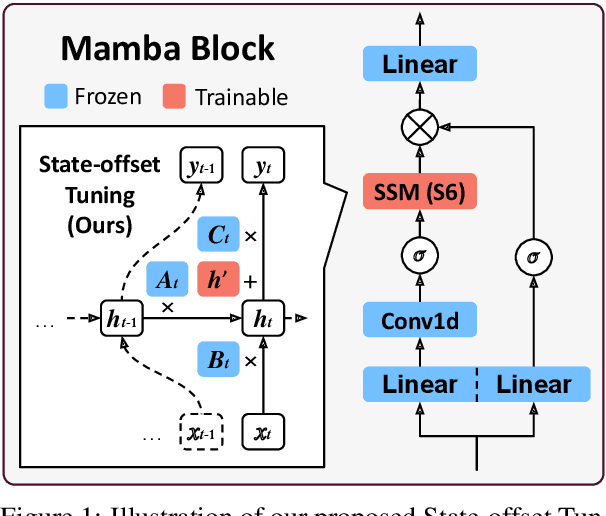

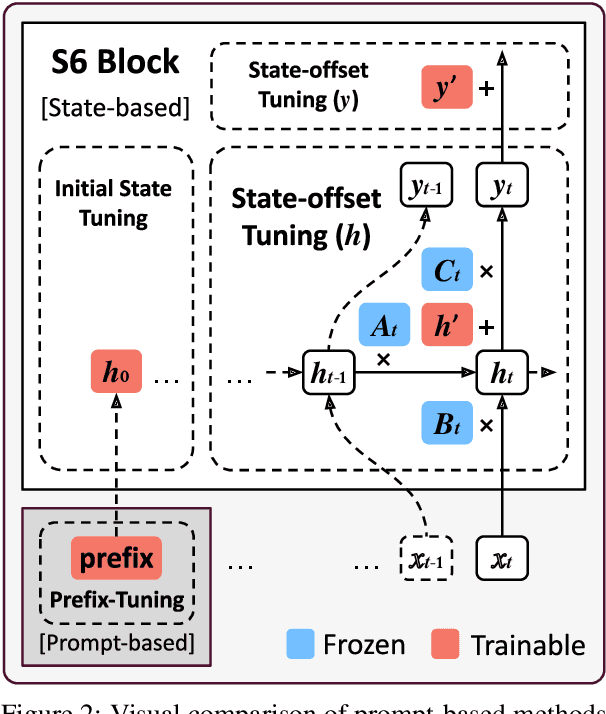

State-offset Tuning: State-based Parameter-Efficient Fine-Tuning for State Space Models

Mar 05, 2025

Abstract: State Space Models (SSMs) have emerged as efficient alternatives to Transformers, mitigating their quadratic computational cost. However, the application of Parameter-Efficient Fine-Tuning (PEFT) methods to SSMs remains largely unexplored. In particular, prompt-based methods like Prompt Tuning and Prefix-Tuning, which are widely used in Transformers, do not perform well on SSMs. To address this, we propose state-based methods as a superior alternative to prompt-based methods. This new family of methods naturally stems from the architectural characteristics of SSMs. State-based methods adjust state-related features directly instead of depending on external prompts. Furthermore, we introduce a novel state-based PEFT method: State-offset Tuning. At every timestep, our method directly affects the state at the current step, leading to more effective adaptation. Through extensive experiments across diverse datasets, we demonstrate the effectiveness of our method. Code is available at https://github.com/furiosa-ai/ssm-state-tuning.

Efficient Attention-Sharing Information Distillation Transformer for Lightweight Single Image Super-Resolution

Jan 27, 2025

Abstract: Transformer-based Super-Resolution (SR) methods have demonstrated superior performance compared to convolutional neural network (CNN)-based SR approaches due to their capability to capture long-range dependencies. However, their high computational complexity necessitates the development of lightweight approaches for practical use. To address this challenge, we propose the Attention-Sharing Information Distillation (ASID) network, a lightweight SR network that integrates attention-sharing and an information distillation structure specifically designed for Transformer-based SR methods. We modify the information distillation scheme, originally designed for efficient CNN operations, to reduce the computational load of stacked self-attention layers, effectively addressing the efficiency bottleneck. Additionally, we introduce attention-sharing across blocks to further minimize the computational cost of self-attention operations. By combining these strategies, ASID achieves competitive performance with existing SR methods while requiring only around 300K parameters, significantly fewer than existing CNN-based and Transformer-based SR models. Furthermore, ASID outperforms state-of-the-art SR methods when the number of parameters is matched, demonstrating its efficiency and effectiveness. The code and supplementary material are available on the project page.
