Nadia Magnenat Thalmann

MIDAS: Multi-sensorial Immersive Dynamic Autonomous System Improves Motivation of Stroke Affected Patients for Hand Rehabilitation

Mar 20, 2022

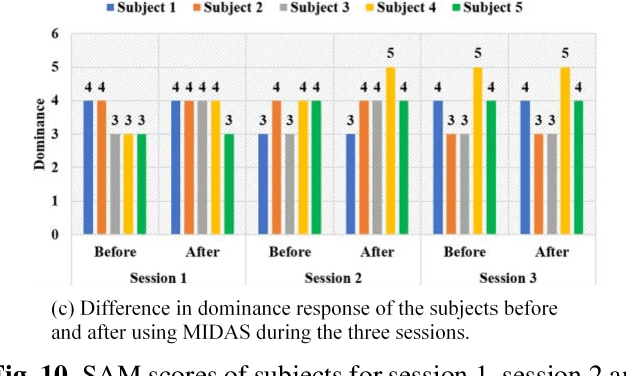

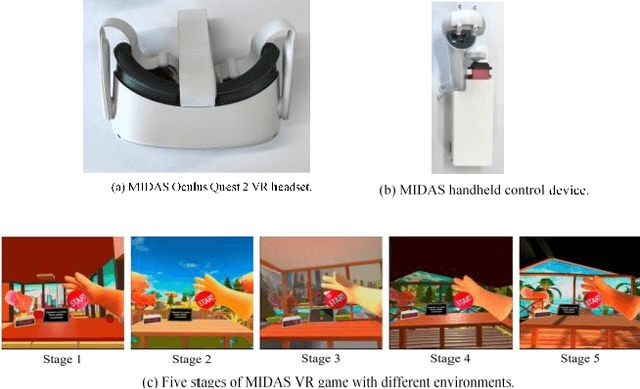

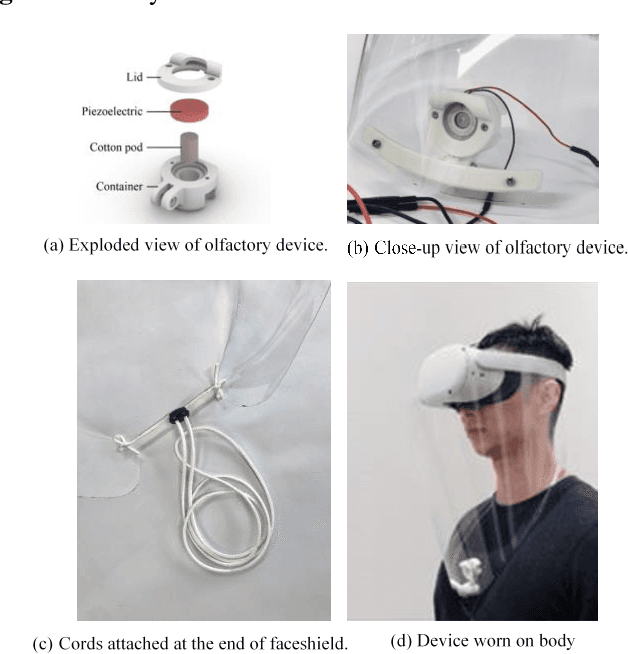

Abstract: The majority of stroke survivors are left with poorly functioning paretic hands. Current rehabilitation devices have failed to motivate patients enough to continue rehabilitation exercises. This project, MIDAS (Multi-sensorial Immersive Dynamic Autonomous System), is a proof of concept that uses an immersive system to improve the motivation of stroke patients for hand rehabilitation. MIDAS is intended for patients who have suffered a light to mild stroke. It is lightweight and portable, and consists of a hand exoskeleton subsystem, a Virtual Reality (VR) subsystem, and an olfactory subsystem. Altogether, MIDAS engages four of the five senses during rehabilitation. To evaluate the efficacy of MIDAS, a pilot study consisting of three sessions was carried out on five stroke-affected patients, with the subsystems of MIDAS added progressively in each session. The game environment, sonic effects, and released scent were carefully chosen to enhance the immersive experience. 60% of the user experience scores are above 40 (out of 56). A 96% Self Rehabilitation Motivation Scale (SRMS) rating shows that the participants are motivated to use MIDAS, and an 87% rating shows that MIDAS is exciting for rehabilitation. Participants experienced elevated motivation to continue stroke rehabilitation using MIDAS, and no undesired side effects were reported.

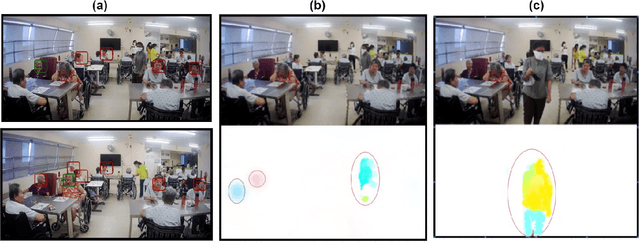

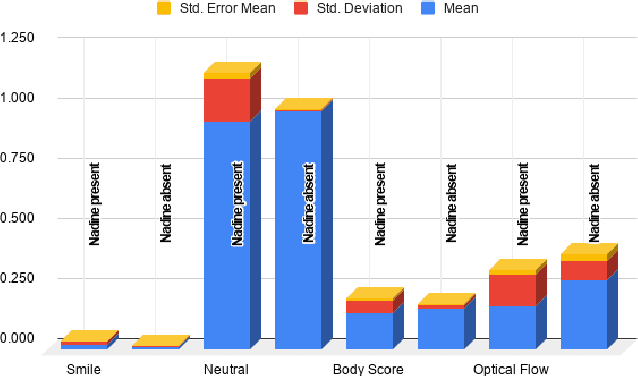

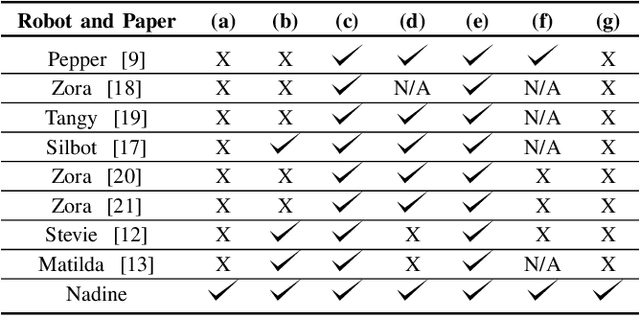

Does elderly enjoy playing Bingo with a robot? A case study with the humanoid robot Nadine

May 05, 2021

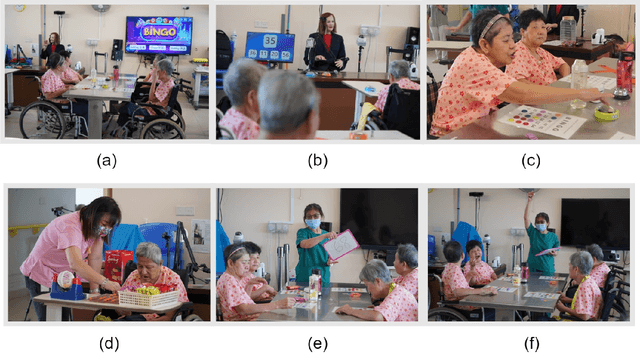

Abstract: Medical health care has advanced considerably in recent years, resulting in a growing older population. As the workforce caring for this population is not keeping pace, there is an urgent need to address the problem. Having robots host stimulating recreational activities for older adults can reduce the workload of caretakers and give them time to address the emotional needs of the elderly. In this paper, we investigate the effects of the humanoid social robot Nadine as an activity host for the elderly. The study aims to analyse whether the elderly feel comfortable and enjoy playing a game or activity with Nadine. We propose to evaluate this by placing Nadine in a nursing home as a caretaker, where she hosts a bingo game. We record sessions with and without Nadine to understand the differences between, and the acceptance of, the two scenarios. We use computer vision methods to analyse the activities of the elderly in order to detect their emotions and their involvement in the game. We envision that such humanoid robots will make recreational activities more readily available for the elderly. Our results present positive enforcement during the recreational activity, Bingo, in the presence of Nadine.

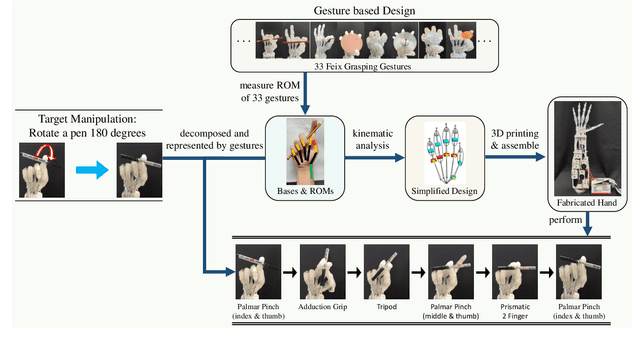

Towards Complex and Continuous Manipulation: A Gesture Based Anthropomorphic Robotic Hand Design

Dec 20, 2020

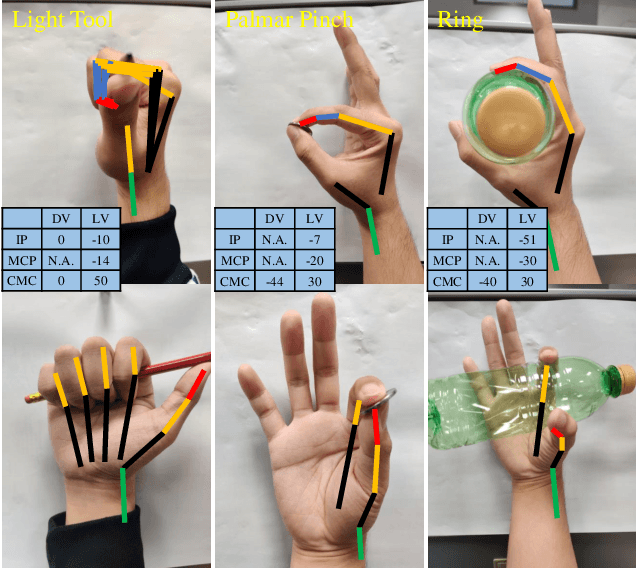

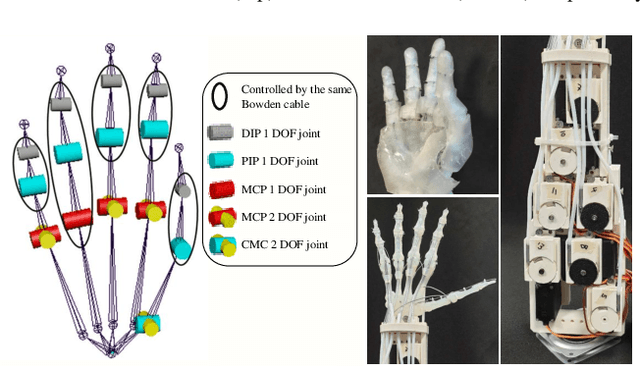

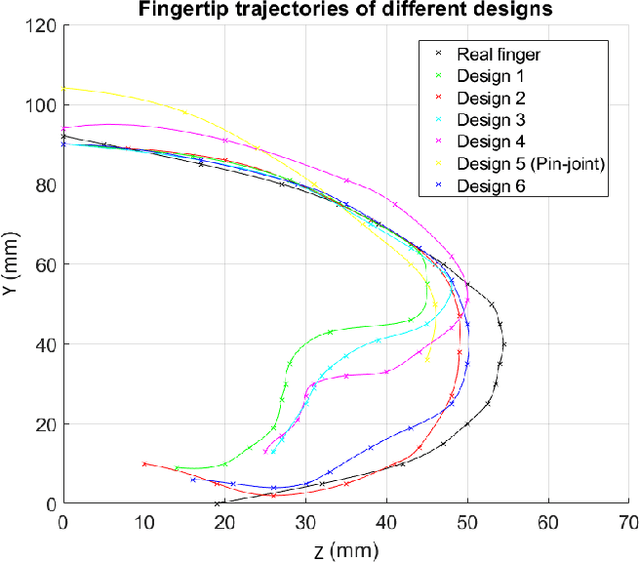

Abstract: Most current anthropomorphic robotic hands can realize part of the human hand's functions, particularly object grasping. However, due to the complexity of the human hand, few current designs target daily object manipulation, even simple actions like rotating a pen. To tackle this problem, we introduce a gesture-based framework, which adopts the widely used 33 grasping gestures of Feix as the bases for hand design and for the implementation of manipulation. In the proposed framework, we first measure the motion ranges of human fingers for each gesture and, based on the results, propose a simple yet dexterous robotic hand design with 13 degrees of freedom. Furthermore, we adopt a frame-interpolation-based method, in which we treat the base gestures as the key frames that represent a manipulation task and use a simple linear interpolation strategy to accomplish the manipulation. To demonstrate the effectiveness of our framework, we define a three-level benchmark, which includes not only 62 test gestures from previous research but also multiple complex and continuous actions. Experimental results on this benchmark validate the dexterity of the proposed design, and our video is available at https://entuedu-my.sharepoint.com/:v:/g/personal/hanhui_li_staff_main_ntu_edu_sg/Ean2GpnFo6JPjIqbKy1KHMEBftgCkcDhnSX-9uLZ6T0rUg?e=ppCGbC
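The key-frame idea described in this abstract can be illustrated with a minimal sketch. It assumes each gesture is stored as a vector of 13 joint values (one per degree of freedom) and that consecutive key frames are blended linearly in joint space. The function names, the `steps` parameter, and the example joint values are hypothetical and are not taken from the paper.

```python
from typing import List, Sequence


def lerp(start: Sequence[float], end: Sequence[float], t: float) -> List[float]:
    """Linearly blend two joint vectors; t=0 gives start, t=1 gives end."""
    return [a + (b - a) * t for a, b in zip(start, end)]


def interpolate_key_frames(
    key_frames: Sequence[Sequence[float]], steps: int
) -> List[List[float]]:
    """Expand a list of key-frame gestures into a dense joint trajectory.

    `steps` is the number of frames generated per pair of consecutive
    key frames (an assumed parameter, not one named in the abstract).
    """
    if len(key_frames) < 2:
        raise ValueError("need at least two key frames")
    if steps < 1:
        raise ValueError("steps must be at least 1")

    trajectory = [list(key_frames[0])]
    for start, end in zip(key_frames, key_frames[1:]):
        if len(start) != len(end):
            raise ValueError("key frames must have the same number of joints")
        for i in range(1, steps + 1):
            trajectory.append(lerp(start, end, i / steps))
    return trajectory


# Hypothetical example: open hand -> closed hand -> open hand, 13 joints each.
OPEN_HAND = [0.0] * 13
CLOSED_HAND = [90.0] * 13
demo = interpolate_key_frames([OPEN_HAND, CLOSED_HAND, OPEN_HAND], steps=2)
```

With two key-frame transitions and `steps=2`, `demo` holds five frames, the second of which is halfway between the open and closed gestures.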

Fast 3D Modeling of Anthropomorphic Robotic Hands Based on A Multi-layer Deformable Design

Nov 07, 2020

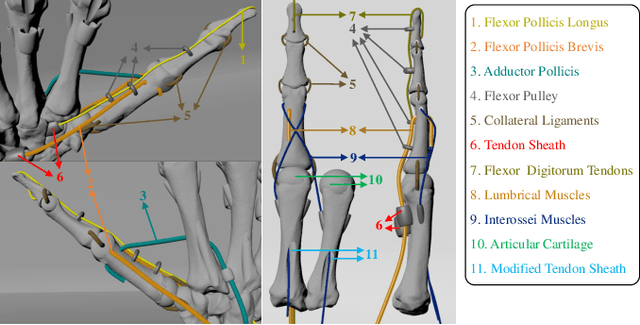

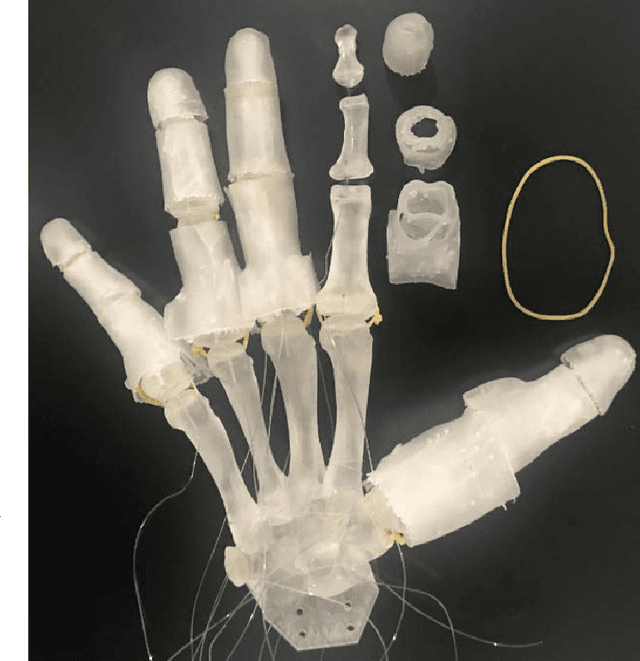

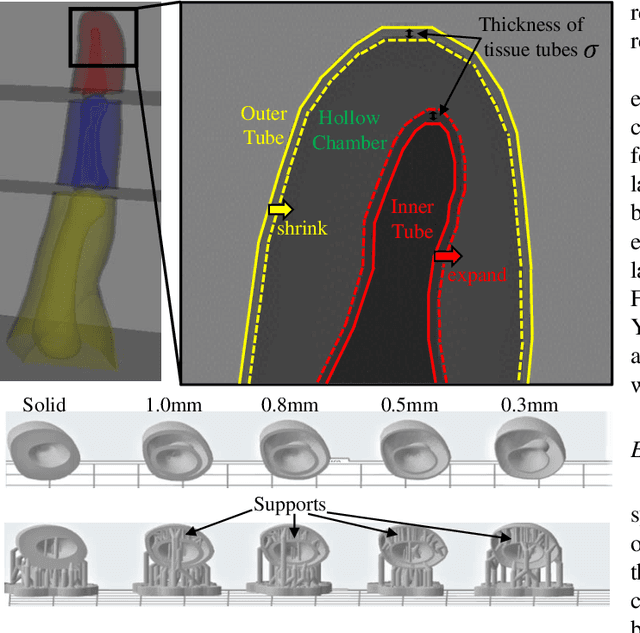

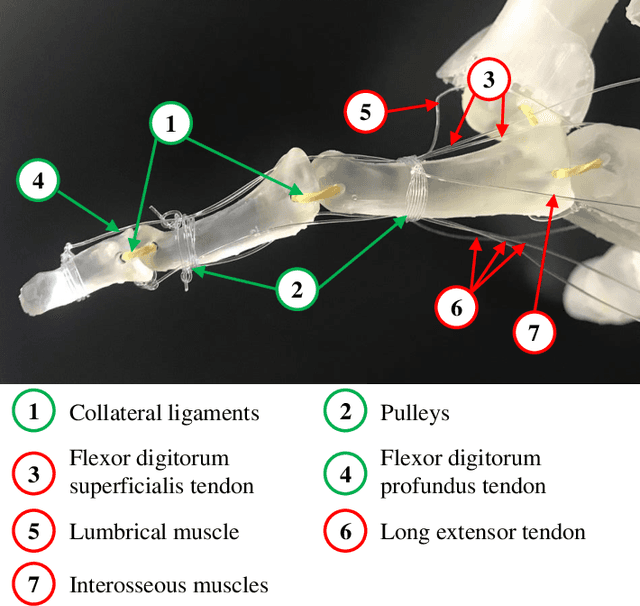

Abstract: Current anthropomorphic robotic hands mainly focus on improving dexterity by devising new mechanical structures and actuation systems. However, most of them rely on a single structure or system (e.g., bone-only) and ignore the fact that the human hand is composed of multiple functional structures (e.g., skin, bones, muscles, and tendons). This not only increases the difficulty of the design process but also lowers the robustness and flexibility of the fabricated hand. In addition, other factors, such as customization, production time and cost, and the degree of resemblance between human and robotic hands, are still overlooked. To tackle these problems, this study proposes a 3D-printable multi-layer design that models the hand with layers of skin, tissues, and bones. The proposed design first obtains the 3D surface model of a target hand via 3D scanning, and then generates the 3D bone models from the surface model using a fast template matching method. To overcome the poor deformability of the rigid bone layer, a tissue layer is introduced and represented by a concentric-tube-based structure whose deformability can be explicitly controlled by a parameter. In addition, a low-cost yet effective underactuated system is adopted to drive the fabricated hand. The proposed design is tested with 33 widely used object grasping types, as well as special objects like fragile silken tofu, and outperforms previous designs remarkably. With the proposed design, anthropomorphic robotic hands can be produced quickly and at low cost, and are customizable and deformable.

Boundary-Aware Feature Propagation for Scene Segmentation

Aug 31, 2019

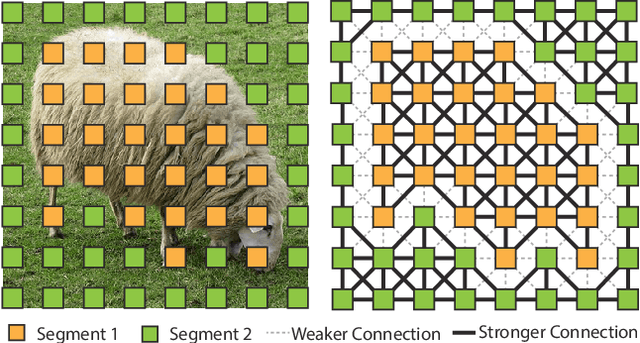

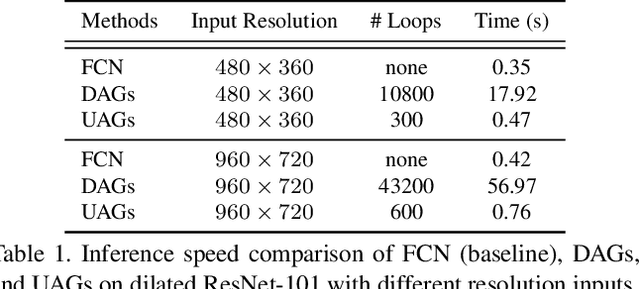

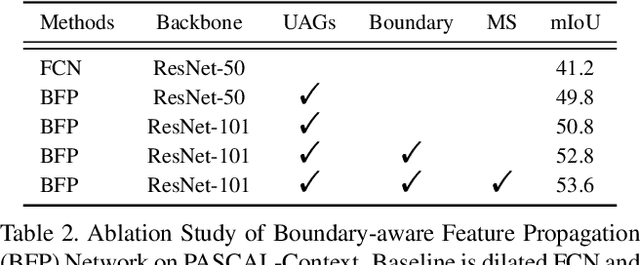

Abstract: In this work, we address the challenging issue of scene segmentation. To increase the feature similarity within the same object while preserving the feature discrimination between different objects, we explore propagating information throughout the image under the control of objects' boundaries. To this end, we first propose to learn the boundary as an additional semantic class so that the network is aware of the boundary layout. Then, we propose unidirectional acyclic graphs (UAGs) to model the function of undirected cyclic graphs (UCGs), which structure the image by building pixel-by-pixel graph connections, in an efficient and effective way. Furthermore, we propose a boundary-aware feature propagation (BFP) module to harvest and propagate the local features within their regions, isolated by the learned boundaries, in the UAG-structured image. The proposed BFP is capable of splitting the feature propagation into a set of semantic groups by building strong connections within the same segment region but weak connections between different segment regions. Without bells and whistles, our approach achieves new state-of-the-art segmentation performance on three challenging semantic segmentation datasets, i.e., PASCAL-Context, CamVid, and Cityscapes.
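The intuition of "strong connections within a region, weak connections across a boundary" can be shown with a toy one-dimensional sketch. This is not the paper's BFP module or its UAG construction: it assumes a single row of scalar features, a per-pixel boundary confidence in [0, 1], and a simple left-to-right recurrence in which the boundary confidence gates how much of the previous pixel's state is passed on. All names are hypothetical.

```python
from typing import List, Sequence


def propagate_row(
    features: Sequence[float], boundary: Sequence[float]
) -> List[float]:
    """Left-to-right propagation gated by boundary confidence.

    h[i] = features[i] + (1 - boundary[i]) * h[i - 1]

    A boundary value of 1 blocks the incoming state completely, so
    information does not leak from one segment into the next.
    """
    if len(features) != len(boundary):
        raise ValueError("features and boundary must have the same length")

    hidden: List[float] = []
    previous = 0.0  # no predecessor for the first pixel
    for value, edge in zip(features, boundary):
        previous = value + (1.0 - edge) * previous
        hidden.append(previous)
    return hidden


# Toy row of four pixels with a boundary detected at the third pixel.
row = propagate_row([1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 1.0, 0.0])
```

In this toy row the accumulated state resets at the third pixel, so the two pixels on either side of the boundary accumulate features only from their own segment.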
