Murhaf Hossari

An Advert Creation System for 3D Product Placements

Jun 26, 2020

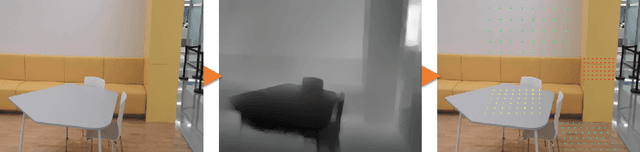

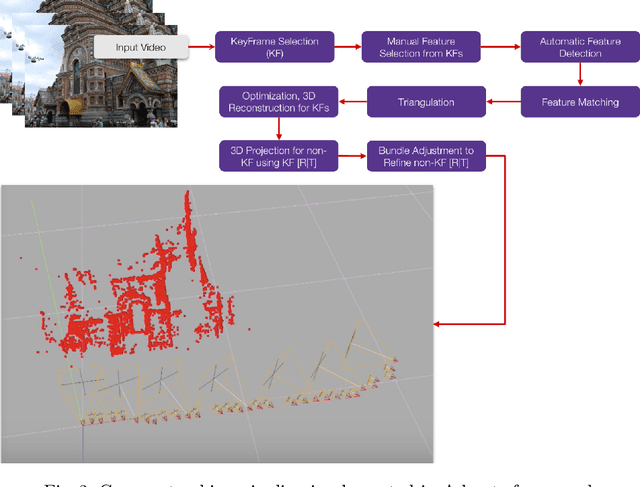

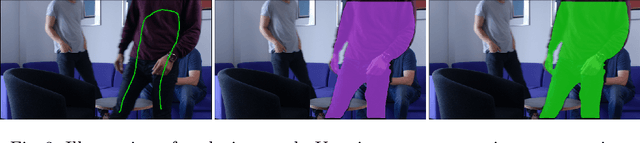

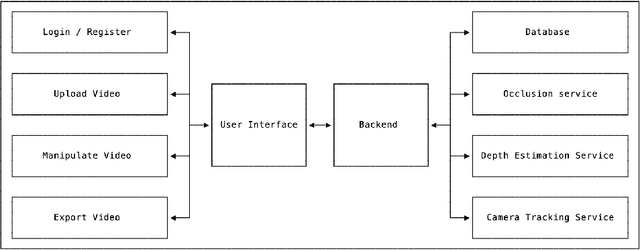

Abstract: Over the past decade, the evolution of video-sharing platforms has attracted significant investment in contextual advertising. Common contextual advertising platforms use information provided by users to integrate 2D visual ads into videos. Existing platforms face many technical challenges, such as integrating ads correctly with respect to occluding objects and placing ads in 3D. This paper presents a Video Advertisement Placement & Integration (Adverts) framework, which perceives the 3D geometry of the scene and the camera motion in order to blend 3D virtual objects into videos and create the illusion of reality. The proposed framework contains several modules, including monocular depth estimation, object segmentation, background-foreground separation, alpha matting and camera tracking. Our experiments with the Adverts framework indicate the significant potential of this system for contextual ad integration and for pushing the limits of the advertising industry using mixed reality technologies.
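
Alpha matting, one of the modules listed above, estimates a per-pixel opacity α so that a rendered object can be blended over a video frame with the standard compositing equation C = αF + (1 − α)B. The sketch below illustrates only that blend step, assuming grayscale frames stored as nested lists with intensities in [0, 1]; the helper `composite` is hypothetical and is not the Adverts implementation.

```python
from typing import List

Image = List[List[float]]  # grayscale image: rows of intensities in [0, 1]


def composite(foreground: Image, background: Image, alpha: Image) -> Image:
    """Blend foreground over background per pixel: C = a * F + (1 - a) * B.

    Hypothetical illustration of alpha compositing; not the Adverts code.
    """
    if not (len(foreground) == len(background) == len(alpha)):
        raise ValueError("images must have the same number of rows")
    result: Image = []
    for f_row, b_row, a_row in zip(foreground, background, alpha):
        if not (len(f_row) == len(b_row) == len(a_row)):
            raise ValueError("images must have the same number of columns")
        # a = 1 keeps the virtual object, a = 0 keeps the original frame pixel
        result.append([a * f + (1.0 - a) * b for f, b, a in zip(f_row, b_row, a_row)])
    return result


if __name__ == "__main__":
    product = [[1.0, 1.0], [1.0, 1.0]]  # rendered 3D object (white)
    frame = [[0.0, 0.2], [0.4, 0.6]]    # original video frame
    matte = [[1.0, 0.5], [0.0, 0.25]]   # soft matte, e.g. from alpha matting
    print(composite(product, frame, matte))
```

One way to respect occluding objects under this scheme would be to set α to 0 wherever a segmented foreground object covers the ad region, so the original pixels stay in front.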

Subjective Quality Assessment of Ground-based Camera Images

Dec 16, 2019

Abstract: Image quality assessment is critical to controlling and maintaining the perceived quality of visual content. Both subjective and objective evaluations can be utilised; however, subjective image quality assessment is currently considered the most reliable approach. Databases containing distorted images and mean opinion scores are needed in the field of atmospheric research in order to improve current state-of-the-art methodologies. In this paper, we focus on using ground-based sky camera images to understand atmospheric events. We present a new image quality assessment dataset containing original and distorted nighttime sky/cloud images from the SWINSEG database. Subjective quality assessment was carried out under controlled conditions, as recommended by the ITU. Statistical analyses of the subjective scores showed the impact of noise type and distortion level on perceived quality.
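
In subjective testing, the ratings that all observers give to one image are usually summarised as a mean opinion score (MOS), often reported with a 95% confidence interval. The abstract does not state which statistics were used, so the sketch below assumes a simple normal approximation (z = 1.96) and an illustrative 5-point rating scale; the helper `mos_with_ci` is hypothetical.

```python
import math
import statistics
from typing import Sequence, Tuple


def mos_with_ci(scores: Sequence[float], z: float = 1.96) -> Tuple[float, float]:
    """Return (MOS, half-width of the confidence interval) for one image.

    MOS is the arithmetic mean of the observers' ratings. The half-width uses
    the normal approximation z * s / sqrt(N), with s the sample standard
    deviation. Hypothetical helper, not the paper's exact analysis.
    """
    if len(scores) < 2:
        raise ValueError("need at least two ratings to estimate spread")
    mos = statistics.mean(scores)
    s = statistics.stdev(scores)  # sample standard deviation (divides by N - 1)
    return mos, z * s / math.sqrt(len(scores))


if __name__ == "__main__":
    # Illustrative ratings on a 1-5 scale from eight observers (not real data)
    ratings = [4, 5, 4, 3, 4, 4, 5, 3]
    mos, half_width = mos_with_ci(ratings)
    print(f"MOS = {mos:.2f} +/- {half_width:.2f}")
```

With a larger panel of observers the interval narrows as 1/sqrt(N), which is one reason controlled subjective tests specify a minimum number of viewers.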

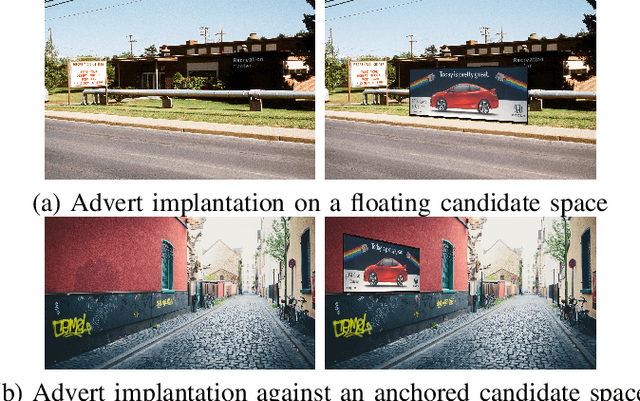

Identifying Candidate Spaces for Advert Implantation

Oct 08, 2019

Abstract: Virtual advertising is an important and promising feature in online advertising. It involves integrating adverts into live or recorded videos for product placement and targeted advertising. Such integration is primarily done by video editors in the post-production stage, which is cumbersome and time-consuming. It is therefore important to automatically identify candidate spaces in a video frame where new adverts can be implanted. A candidate space should match the scene perspective and also yield a high quality of experience according to human subjective judgment. In this paper, we propose a bespoke neural network that can assist video editors in identifying candidate spaces. We benchmark our approach against several deep-learning architectures on a large-scale image dataset of candidate spaces in outdoor scenes. Our work is the first of its kind in this area of multimedia and augmented reality applications, and it achieves the best results among the benchmarked methods.

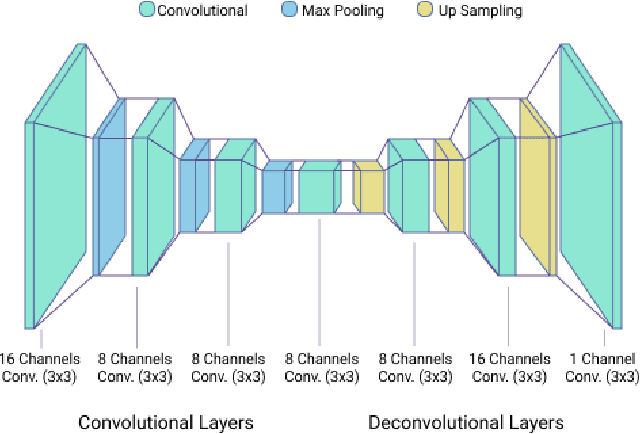

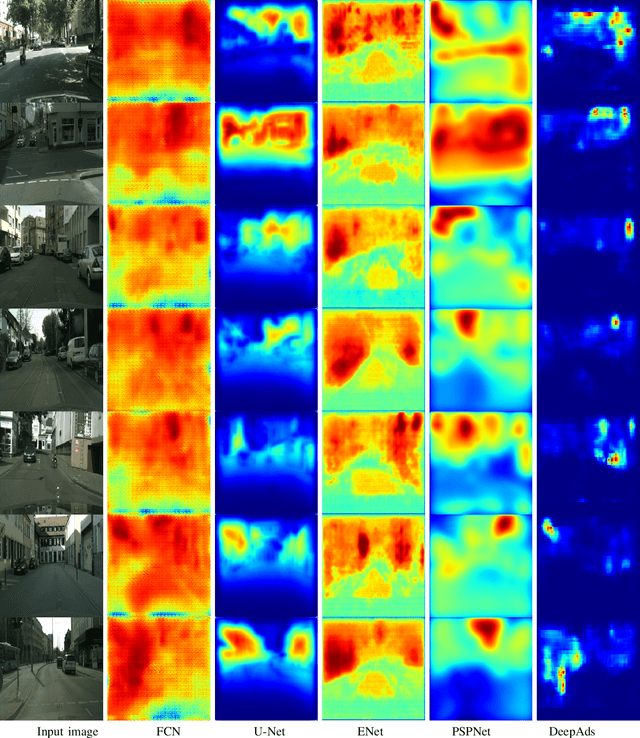

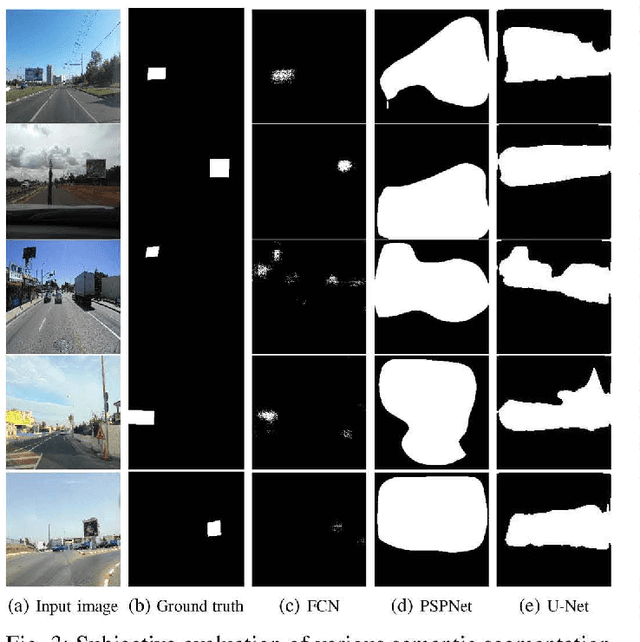

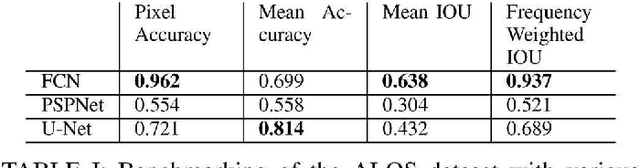

Localizing Adverts in Outdoor Scenes

May 06, 2019

Abstract: Online videos have seen unprecedented growth over the last decade, owing to the wide range of content being created. This gives advertising and marketing agencies a plethora of opportunities for targeted advertising. Such techniques involve replacing an existing advertisement in a video frame with a new advertisement. However, this post-processing of online videos is mostly done manually by video editors, which is cumbersome and time-consuming. In this paper, we propose DeepAds, a deep neural network based on a simple encoder-decoder architecture that can accurately localize the position of an advert in a video frame. Our approach of localizing billboards in outdoor scenes using neural networks is the first of its kind and achieves the best performance. We benchmark our proposed method against other semantic segmentation algorithms on a public dataset of outdoor scenes with manually annotated billboard binary maps.
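
Localization methods of this kind are typically compared by overlaying each predicted billboard mask on the manually annotated binary map. The abstract does not name the evaluation metrics, so the sketch below assumes the common pixel-wise precision, recall, F1 score and intersection-over-union (IoU); masks are nested lists of 0/1 values and `mask_metrics` is a hypothetical helper.

```python
from typing import Dict, List

Mask = List[List[int]]  # binary map: 1 = billboard pixel, 0 = background


def mask_metrics(predicted: Mask, ground_truth: Mask) -> Dict[str, float]:
    """Pixel-wise precision, recall, F1 and IoU between two binary masks.

    Hypothetical evaluation helper; empty denominators are reported as 0.0.
    """
    if len(predicted) != len(ground_truth):
        raise ValueError("masks must have the same shape")
    tp = fp = fn = 0
    for p_row, g_row in zip(predicted, ground_truth):
        if len(p_row) != len(g_row):
            raise ValueError("masks must have the same shape")
        for p, g in zip(p_row, g_row):
            if p and g:
                tp += 1  # billboard pixel correctly found
            elif p:
                fp += 1  # background wrongly marked as billboard
            elif g:
                fn += 1  # billboard pixel missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


if __name__ == "__main__":
    pred = [[1, 1, 0], [0, 1, 0]]
    truth = [[1, 0, 0], [0, 1, 1]]
    print(mask_metrics(pred, truth))
```

IoU penalises both false positives and missed pixels in a single number, which makes it a convenient summary when ranking segmentation algorithms on the same dataset.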

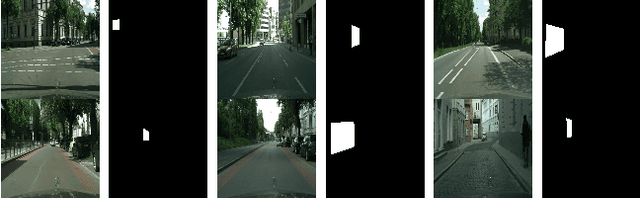

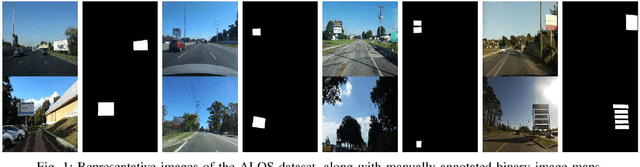

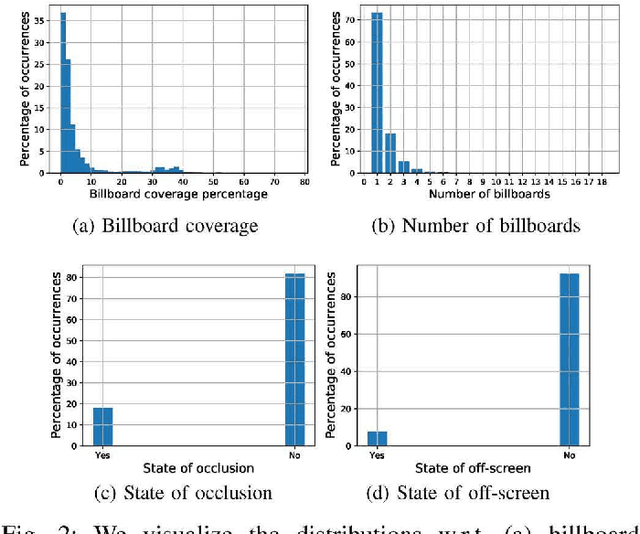

The ALOS Dataset for Advert Localization in Outdoor Scenes

Apr 16, 2019

Abstract: The rapid increase in the number of online videos gives marketing and advertising agents ample opportunities to reach their audience. One of the most widely used strategies is product placement, or embedded marketing, wherein new advertisements are seamlessly integrated into videos in place of existing ones. Such strategies require accurately localizing the position of the advert in the image frame, either manually in the video editing phase or by using machine learning frameworks. However, these machine learning techniques and deep neural networks need a massive amount of training data. In this paper, we propose and release the first large-scale dataset of advertisement billboards captured in outdoor scenes. We also benchmark several state-of-the-art semantic segmentation algorithms on our proposed dataset.

The CASE Dataset of Candidate Spaces for Advert Implantation

Mar 21, 2019

Abstract: With the advent of faster internet services and the growth of multimedia content, we observe a massive increase in the number of online videos. Users generate this video content at an unprecedented rate, owing to the use of smartphones and other hand-held video capturing devices. This creates immense potential for advertising and marketing agencies to create personalized content for users. In this paper, we attempt to assist video editors in generating augmented video content by proposing candidate spaces in video frames. We propose and release a large-scale dataset of outdoor scenes, along with manually annotated maps of candidate spaces. We also benchmark several deep-learning-based semantic segmentation algorithms on this proposed dataset.

ADNet: A Deep Network for Detecting Adverts

Nov 09, 2018

Abstract: Online video advertising gives content providers the ability to deliver compelling content, reach a growing audience, and generate additional revenue from online media. Recently, advertising strategies have been designed to look for original adverts in a video frame and replace them with new adverts. These strategies, popularly known as product placement or embedded marketing, greatly help marketing agencies reach a wider audience. However, in the existing literature, the detection of candidate frames in a video sequence for advert integration is done manually. In this paper, we propose a deep-learning architecture called ADNet that automatically detects the presence of advertisements in video frames. Our approach is the first of its kind to automatically detect the presence of adverts in a video frame, and it achieves state-of-the-art results on a public dataset.
