Identifying Candidate Spaces for Advert Implantation

Paper and Code

Oct 08, 2019

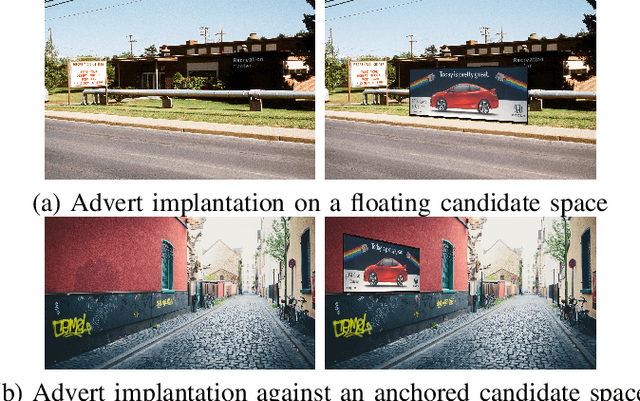

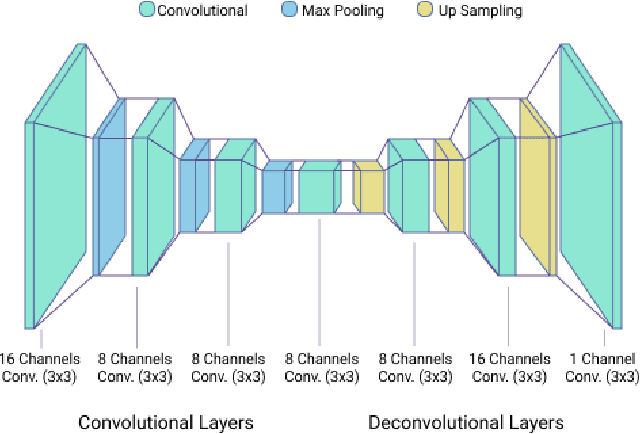

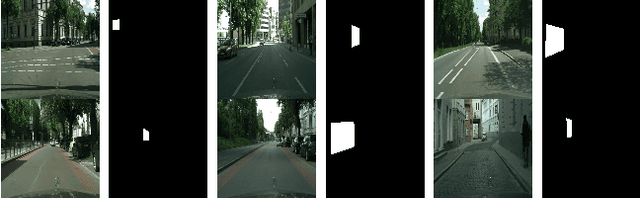

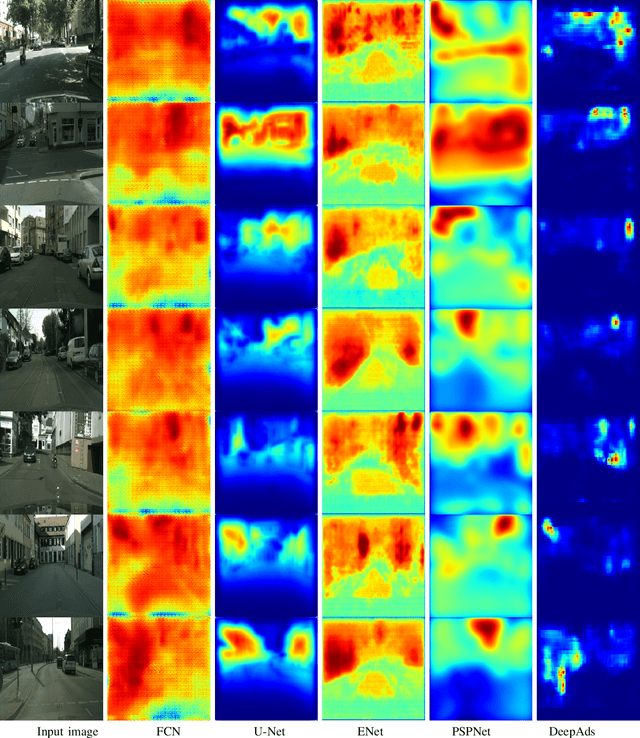

Virtual advertising is an important and promising feature in the area of online advertising. It involves integrating adverts into live or recorded videos for product placement and targeted advertising. Such integration is primarily done by video editors in the post-production stage, which is cumbersome and time-consuming. Therefore, it is important to automatically identify candidate spaces in a video frame where new adverts can be implanted. A candidate space should match the scene perspective and also offer a high quality of experience according to human subjective judgment. In this paper, we propose the use of a bespoke neural net that can assist video editors in identifying candidate spaces. We benchmark our approach against several deep-learning architectures on a large-scale image dataset of candidate spaces in outdoor scenes. Our work is the first of its kind in this area of multimedia and augmented reality applications, and achieves the best results among the benchmarked architectures.
