Motahhare Eslami

MM-SCALE: Grounded Multimodal Moral Reasoning via Scalar Judgment and Listwise Alignment

Feb 03, 2026

Abstract:Vision-Language Models (VLMs) continue to struggle to make morally salient judgments in multimodal and socially ambiguous contexts. Prior work typically relies on binary or pairwise supervision, which often fails to capture the continuous and pluralistic nature of human moral reasoning. We present MM-SCALE (Multimodal Moral Scale), a large-scale dataset for aligning VLMs with human moral preferences through 5-point scalar ratings and explicit modality grounding. Each image-scenario pair is annotated with moral acceptability scores and grounded reasoning labels by humans using an interface we tailored for data collection, enabling listwise preference optimization over ranked scenario sets. By moving from discrete to scalar supervision, our framework provides richer alignment signals and finer calibration of multimodal moral reasoning. Experiments show that VLMs fine-tuned on MM-SCALE achieve higher ranking fidelity and more stable safety calibration than those trained with binary signals.
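To make the phrase "listwise preference optimization over ranked scenario sets" concrete, the sketch below computes a Plackett-Luce (ListMLE-style) negative log-likelihood for a list of scenarios ordered by their human ratings. This is an illustrative assumption about what a listwise objective can look like; the abstract does not specify the loss MM-SCALE uses, and the names `listwise_loss`, `scores`, and `ratings` are hypothetical.

```python
import math

def listwise_loss(scores, ratings):
    """Plackett-Luce negative log-likelihood of the rating-induced order.

    scores  -- model scores, one per scenario (hypothetical input)
    ratings -- human 1-5 acceptability ratings, one per scenario
    """
    # Order scenarios from highest to lowest human rating.
    # sorted() is stable, so tied ratings keep their input order.
    order = sorted(range(len(ratings)), key=lambda i: -ratings[i])
    ranked = [scores[i] for i in order]

    loss = 0.0
    for k in range(len(ranked)):
        tail = ranked[k:]
        # Numerically stable log-sum-exp over the items not yet placed.
        m = max(tail)
        log_z = m + math.log(sum(math.exp(s - m) for s in tail))
        # Negative log-probability of picking the k-th item next.
        loss += log_z - ranked[k]
    return loss

# Scores that agree with the human ordering give a lower loss
# than scores that reverse it.
aligned = listwise_loss([2.0, 1.0, 0.0], [5, 3, 1])
reversed_ = listwise_loss([0.0, 1.0, 2.0], [5, 3, 1])
print(aligned < reversed_)
```

A binary or pairwise signal would only say which of two scenarios is preferred; a loss of this shape uses the full ordering implied by the scalar ratings in one term.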

Critical or Compliant? The Double-Edged Sword of Reasoning in Chain-of-Thought Explanations

Nov 19, 2025

Abstract:Explanations are often promoted as tools for transparency, but they can also foster confirmation bias; users may assume reasoning is correct whenever outputs appear acceptable. We study this double-edged role of Chain-of-Thought (CoT) explanations in multimodal moral scenarios by systematically perturbing reasoning chains and manipulating delivery tones. Specifically, we analyze reasoning errors in vision-language models (VLMs) and how they impact user trust and the ability to detect errors. Our findings reveal two key effects: (1) users often equate trust with outcome agreement, sustaining reliance even when reasoning is flawed, and (2) a confident tone suppresses error detection while maintaining reliance, showing that delivery styles can override correctness. These results highlight how CoT explanations can simultaneously clarify and mislead, underscoring the need for NLP systems to provide explanations that encourage scrutiny and critical thinking rather than blind trust. All code will be released publicly.

PersonaTeaming: Exploring How Introducing Personas Can Improve Automated AI Red-Teaming

Sep 03, 2025

Abstract:Recent developments in AI governance and safety research have called for red-teaming methods that can effectively surface potential risks posed by AI models. Many of these calls have emphasized how the identities and backgrounds of red-teamers can shape their red-teaming strategies, and thus the kinds of risks they are likely to uncover. While automated red-teaming approaches promise to complement human red-teaming by enabling larger-scale exploration of model behavior, current approaches do not consider the role of identity. As an initial step towards incorporating people's background and identities in automated red-teaming, we develop and evaluate a novel method, PersonaTeaming, that introduces personas in the adversarial prompt generation process to explore a wider spectrum of adversarial strategies. In particular, we first introduce a methodology for mutating prompts based on either "red-teaming expert" personas or "regular AI user" personas. We then develop a dynamic persona-generating algorithm that automatically generates various persona types adaptive to different seed prompts. In addition, we develop a set of new metrics to explicitly measure the "mutation distance" to complement existing diversity measurements of adversarial prompts. Our experiments show promising improvements (up to 144.1%) in the attack success rates of adversarial prompts through persona mutation, while maintaining prompt diversity, compared to RainbowPlus, a state-of-the-art automated red-teaming method. We discuss the strengths and limitations of different persona types and mutation methods, shedding light on future opportunities to explore complementarities between automated and human red-teaming approaches.

Cognitive Chain-of-Thought: Structured Multimodal Reasoning about Social Situations

Jul 27, 2025

Abstract:Chain-of-Thought (CoT) prompting helps models think step by step. But what happens when they must see, understand, and judge, all at once? In visual tasks grounded in social context, where bridging perception with norm-grounded judgments is essential, flat CoT often breaks down. We introduce Cognitive Chain-of-Thought (CoCoT), a prompting strategy that scaffolds VLM reasoning through three cognitively inspired stages: perception, situation, and norm. Our experiments show that, across multiple multimodal benchmarks (including intent disambiguation, commonsense reasoning, and safety), CoCoT consistently outperforms CoT and direct prompting (+8% on average). Our findings demonstrate that cognitively grounded reasoning stages enhance interpretability and social awareness in VLMs, paving the way for safer and more reliable multimodal systems.
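As a rough illustration of the three-stage scaffold described above, the sketch below assembles a text prompt that walks a model through perception, situation, and norm in that order. The stage names come from the abstract; the instruction wording, the `STAGES` list, and the `build_cocot_prompt` helper are hypothetical and are not taken from the paper's actual prompts.

```python
# Stage names follow the abstract; the instruction text for each stage
# is an assumed placeholder, not the authors' wording.
STAGES = [
    ("Perception", "Describe what is directly visible in the image."),
    ("Situation", "Infer the social context and what the people involved are likely doing."),
    ("Norm", "Apply relevant social norms to judge the scene and answer the question."),
]

def build_cocot_prompt(question):
    """Return a staged prompt string for a single image-grounded question."""
    lines = [f"Question: {question}", ""]
    # Number the stages so the model addresses them in a fixed order.
    for i, (name, instruction) in enumerate(STAGES, start=1):
        lines.append(f"Step {i} ({name}): {instruction}")
    lines.append("")
    lines.append("Work through the steps in order, then state a final answer.")
    return "\n".join(lines)

print(build_cocot_prompt("Is the behavior shown here acceptable?"))
```

A flat CoT prompt would ask only for step-by-step thinking; the point of a staged layout like this one is that the judgment step is reached only after the visual and contextual steps have been written out.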

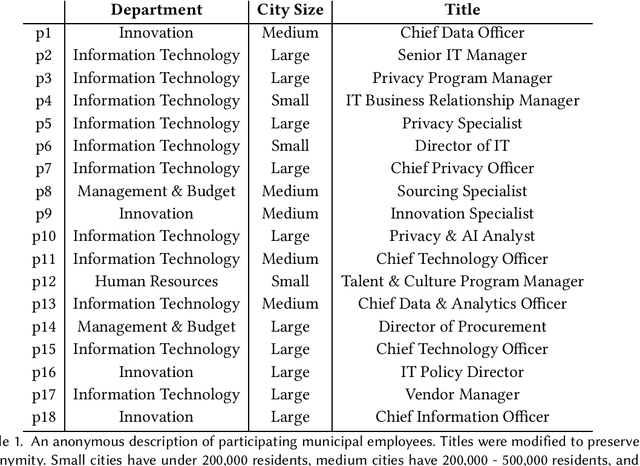

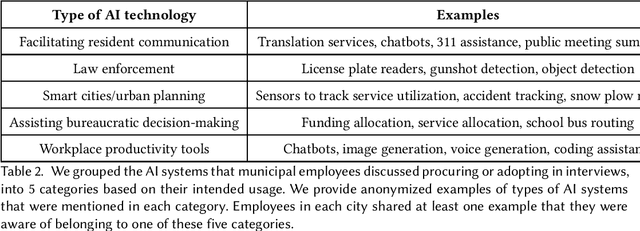

Public Procurement for Responsible AI? Understanding U.S. Cities' Practices, Challenges, and Needs

Nov 07, 2024

Abstract:Most AI tools adopted by governments are not developed internally, but instead are acquired from third-party vendors in a process called public procurement. While scholars and regulatory proposals have recently turned towards procurement as a site of intervention to encourage responsible AI governance practices, little is known about the practices and needs of city employees in charge of AI procurement. In this paper, we present findings from semi-structured interviews with 18 city employees across 7 US cities. We find that AI acquired by cities often does not go through a conventional public procurement process, posing challenges to oversight and governance. We identify five key types of challenges to leveraging procurement for responsible AI that city employees face when interacting with colleagues, AI vendors, and members of the public. We conclude by discussing recommendations and implications for governments, researchers, and policymakers.

'Simulacrum of Stories': Examining Large Language Models as Qualitative Research Participants

Sep 28, 2024

Abstract:The recent excitement around generative models has sparked a wave of proposals suggesting the replacement of human participation and labor in research and development (e.g., through surveys, experiments, and interviews) with synthetic research data generated by large language models (LLMs). We conducted interviews with 19 qualitative researchers to understand their perspectives on this paradigm shift. Initially skeptical, researchers were surprised to see similar narratives emerge in the LLM-generated data when using the interview probe. However, over several conversational turns, they went on to identify fundamental limitations, such as how LLMs foreclose participants' consent and agency, produce responses lacking in palpability and contextual depth, and risk delegitimizing qualitative research methods. We argue that the use of LLMs as proxies for participants enacts the surrogate effect, raising ethical and epistemological concerns that extend beyond the technical limitations of current models to the core of whether LLMs fit within qualitative ways of knowing.

"It Might be Technically Impressive, But It's Practically Useless to Us": Practices, Challenges, and Opportunities for Cross-Functional Collaboration around AI within the News Industry

Sep 18, 2024

Abstract:Recently, an increasing number of news organizations have integrated artificial intelligence (AI) into their workflows, leading to a further influx of AI technologists and data workers into the news industry. This has initiated cross-functional collaborations between these professionals and journalists. While prior research has explored the impact of AI-related roles entering the news industry, there is a lack of studies on how cross-functional collaboration unfolds between AI professionals and journalists. Through interviews with 17 journalists, 6 AI technologists, and 3 AI workers with cross-functional experience from leading news organizations, we investigate the current practices, challenges, and opportunities for cross-functional collaboration around AI in today's news industry. We first study how journalists and AI professionals perceive existing cross-collaboration strategies. We further explore the challenges of cross-functional collaboration and provide recommendations for enhancing future cross-functional collaboration around AI in the news industry.

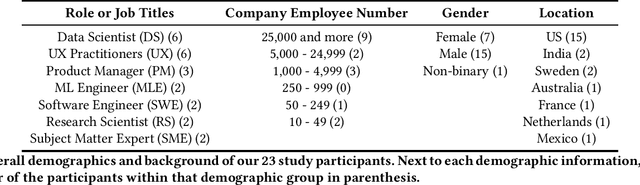

Investigating Practices and Opportunities for Cross-functional Collaboration around AI Fairness in Industry Practice

Jun 10, 2023

Abstract:An emerging body of research indicates that ineffective cross-functional collaboration (the interdisciplinary work done by industry practitioners across roles) represents a major barrier to addressing issues of fairness in AI design and development. In this research, we sought to better understand practitioners' current practices and tactics to enact cross-functional collaboration for AI fairness, in order to identify opportunities to support more effective collaboration. We conducted a series of interviews and design workshops with 23 industry practitioners spanning various roles from 17 companies. We found that practitioners engaged in bridging work to overcome frictions in understanding, contextualization, and evaluation around AI fairness across roles. In addition, in organizational contexts with a lack of resources and incentives for fairness work, practitioners often piggybacked on existing requirements (e.g., for privacy assessments) and AI development norms (e.g., the use of quantitative evaluation metrics), although they worried that these tactics may be fundamentally compromised. Finally, we draw attention to the invisible labor that practitioners take on as part of this bridging and piggybacking work to enact interdisciplinary collaboration for fairness. We close by discussing opportunities for both FAccT researchers and AI practitioners to better support cross-functional collaboration for fairness in the design and development of AI systems.

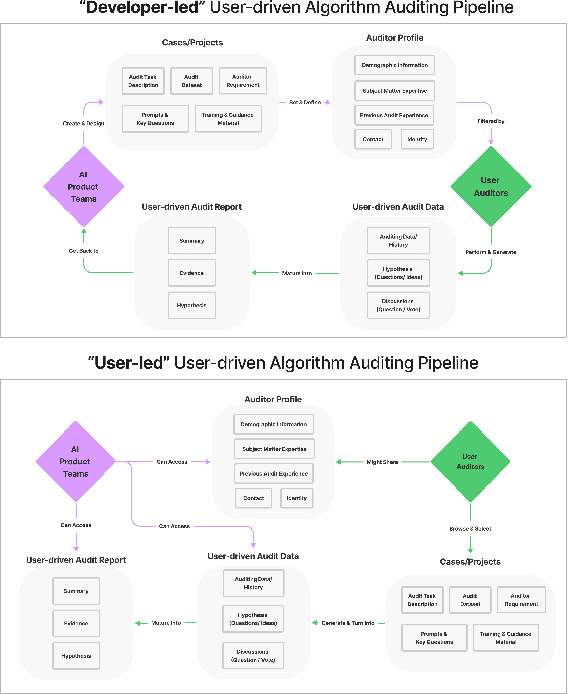

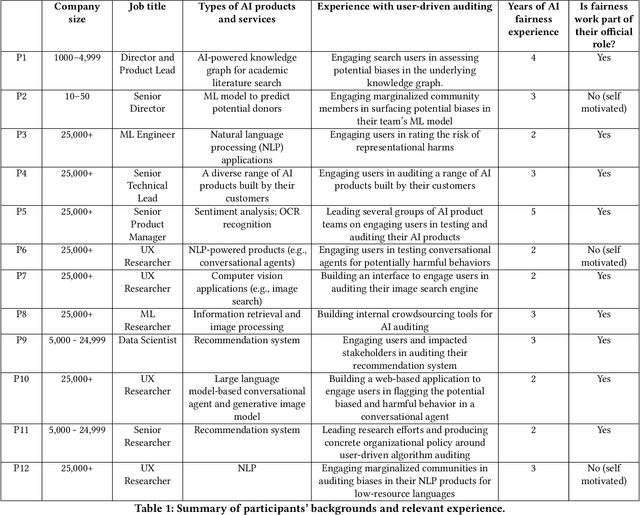

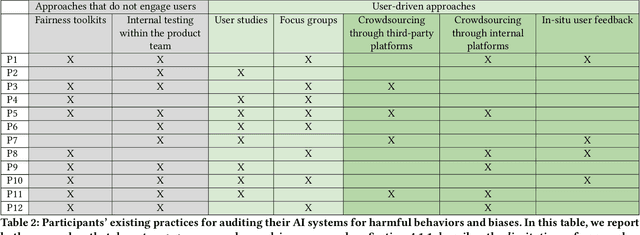

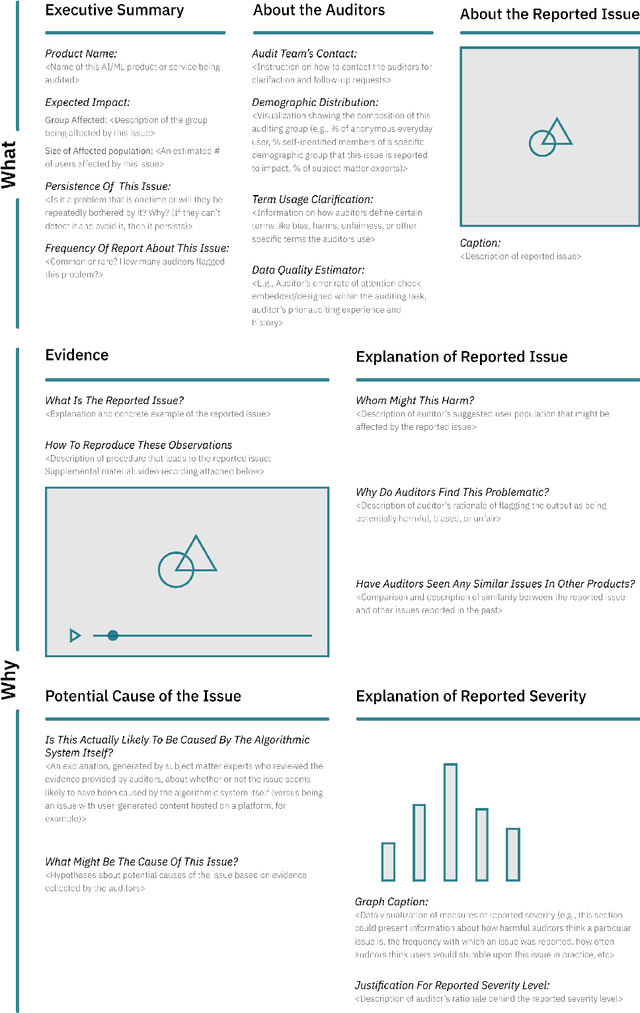

Understanding Practices, Challenges, and Opportunities for User-Driven Algorithm Auditing in Industry Practice

Oct 10, 2022

Abstract:Recent years have seen growing interest among both researchers and practitioners in user-driven approaches to algorithm auditing, which directly engage users in detecting problematic behaviors in algorithmic systems. However, we know little about industry practitioners' current practices and challenges around user-driven auditing, nor what opportunities exist for them to better leverage such approaches in practice. To investigate, we conducted a series of interviews and iterative co-design activities with practitioners who employ user-driven auditing approaches in their work. Our findings reveal several challenges practitioners face in appropriately recruiting and incentivizing user auditors, scaffolding user audits, and deriving actionable insights from user-driven audit reports. Furthermore, practitioners shared organizational obstacles to user-driven auditing, surfacing a complex relationship between practitioners and user auditors. Based on these findings, we discuss opportunities for future HCI research to help realize the potential (and mitigate risks) of user-driven auditing in industry practice.