Mohammed Jouhari

Lightweight Intrusion Detection in IoT via SHAP-Guided Feature Pruning and Knowledge-Distilled Kronecker Networks

Dec 22, 2025

Abstract: The widespread deployment of Internet of Things (IoT) devices requires intrusion detection systems (IDS) that achieve high accuracy while operating under strict resource constraints. Conventional deep learning IDS are often too large and computationally intensive for edge deployment. We propose a lightweight IDS that combines SHAP-guided feature pruning with knowledge-distilled Kronecker networks. A high-capacity teacher model identifies the most relevant features through SHAP explanations, and a compressed student uses Kronecker-structured layers to minimize parameters while preserving the most discriminative inputs. Knowledge distillation transfers softened decision boundaries from teacher to student, improving generalization under compression. Experiments on the TON_IoT dataset show that the student is nearly three orders of magnitude smaller than the teacher yet sustains a macro-F1 above 0.986 with millisecond-level inference latency. The results demonstrate that explainability-driven pruning and structured compression can jointly enable scalable, low-latency, and energy-efficient IDS for heterogeneous IoT environments.
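The abstract above names three generic building blocks: ranking features by SHAP importance, Kronecker-structured layers, and knowledge distillation. The following is a minimal sketch of those textbook techniques, not the paper's implementation. It assumes SHAP values have already been computed (for example with a SHAP library) and are passed in as a plain matrix; the function names, the temperature `T = 4`, and the weight `alpha = 0.5` are hypothetical choices for illustration. The key identity is that a weight matrix `W = A ⊗ B` never needs to be materialized: with `x` reshaped row-major into `X`, `(A ⊗ B) x` equals `A X Bᵀ` flattened, so storage drops from `(m·p)(n·q)` to `m·n + p·q` parameters.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def top_k_features(shap_rows: Sequence[Sequence[float]], names: Sequence[str], k: int) -> List[str]:
    """Keep the k features with the largest mean |SHAP| value across samples."""
    n = len(shap_rows)
    importance = [sum(abs(row[j]) for row in shap_rows) / n for j in range(len(names))]
    order = sorted(range(len(names)), key=lambda j: importance[j], reverse=True)
    return [names[j] for j in order[:k]]


def kron(a: Matrix, b: Matrix) -> Matrix:
    """Dense Kronecker product A (x) B; used here only to check the factored path."""
    return [
        [a[i][j] * b[k][l] for j in range(len(a[0])) for l in range(len(b[0]))]
        for i in range(len(a))
        for k in range(len(b))
    ]


def kron_matvec(a: Matrix, b: Matrix, x: Sequence[float]) -> List[float]:
    """Compute (A (x) B) x without building the large matrix.

    A is m x n, B is p x q, x has n*q entries. Reshaping x row-major into
    X (n x q), the result is A X B^T flattened row-major (m*p entries).
    """
    m, n = len(a), len(a[0])
    p, q = len(b), len(b[0])
    if len(x) != n * q:
        raise ValueError("x must have n*q entries")
    xm = [list(x[j * q:(j + 1) * q]) for j in range(n)]
    # Z = X B^T, shape n x p
    z = [[sum(b[k][l] * xm[j][l] for l in range(q)) for k in range(p)] for j in range(n)]
    # Y = A Z, shape m x p, flattened so index i*p + k matches the dense product
    return [sum(a[i][j] * z[j][k] for j in range(n)) for i in range(m) for k in range(p)]


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    scaled = [v / temperature for v in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(v - top) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """Hinton-style KD: hard-label cross-entropy plus T^2-scaled KL to the softened teacher."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    hard_ce = -math.log(softmax(student_logits)[label])
    return alpha * hard_ce + (1 - alpha) * temperature ** 2 * kl


if __name__ == "__main__":
    a = [[1.0, 2.0], [3.0, 4.0]]
    b = [[0.5, -1.0, 2.0], [1.5, 0.0, -0.5]]
    x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
    reference = [sum(w * v for w, v in zip(row, x)) for row in kron(a, b)]
    print(kron_matvec(a, b, x), reference)
    # A 64x64 layer factored as two 8x8 matrices
    print("dense params:", 64 * 64, "kronecker params:", 8 * 8 + 8 * 8)
    print(distillation_loss([2.0, 0.5, -1.0], [3.0, 0.2, -2.0], label=0))
```

The `T²` factor keeps the gradient scale of the soft term roughly independent of the temperature, which is the usual reason it appears in distillation losses.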

Lightweight CNN-BiLSTM based Intrusion Detection Systems for Resource-Constrained IoT Devices

Jun 04, 2024

Abstract: Intrusion Detection Systems (IDSs) have played a significant role in detecting and preventing cyber-attacks within traditional computing systems. It is not surprising that the same technology is being applied to secure Internet of Things (IoT) networks from cyber threats. The limited computational resources available on IoT devices make it challenging to deploy conventional computing-based IDSs. IDSs designed for IoT environments must also demonstrate high classification performance, use low-complexity models, and be small in size. Despite significant progress in IoT-based intrusion detection, developing models that both achieve high classification performance and maintain reduced complexity remains challenging. In this study, we propose a hybrid architecture composed of a lightweight CNN and a bidirectional LSTM (BiLSTM) to enhance the performance of IDS on the UNSW-NB15 dataset. The proposed model is specifically designed to run onboard resource-constrained IoT devices and meet their computational capability requirements. Although designing a model that both fits the requirements of IoT devices and achieves higher accuracy is difficult, our proposed model outperforms existing research efforts in the literature, achieving an accuracy of 97.28% for binary classification and 96.91% for multiclass classification.
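Since the abstract above stresses that an onboard IDS must stay small, a quick way to reason about a CNN-BiLSTM's footprint is to count its trainable parameters layer by layer. The sketch below uses the standard Keras counting convention (one bias vector per LSTM gate; PyTorch's `nn.LSTM` adds a second bias, i.e. `4 * units` more per direction). The layer sizes in `hypothetical_cnn_bilstm` are illustrative assumptions, not the architecture from the paper; the 10 output classes correspond to normal traffic plus the nine attack categories of UNSW-NB15.

```python
def conv1d_params(in_channels: int, filters: int, kernel_size: int) -> int:
    # Each filter has kernel_size * in_channels weights plus one bias
    return (kernel_size * in_channels + 1) * filters


def lstm_params(input_dim: int, units: int) -> int:
    # Four gates, each with input weights, recurrent weights and one bias (Keras convention)
    return 4 * ((input_dim + units) * units + units)


def bilstm_params(input_dim: int, units: int) -> int:
    # Forward and backward LSTMs have independent weights
    return 2 * lstm_params(input_dim, units)


def dense_params(in_features: int, out_features: int) -> int:
    return (in_features + 1) * out_features


def hypothetical_cnn_bilstm(n_classes: int = 10) -> dict:
    """Parameter budget for hypothetical layer sizes chosen only for illustration."""
    conv = conv1d_params(in_channels=1, filters=32, kernel_size=3)
    # Pooling has no trainable parameters, so the BiLSTM sees 32 channels
    bilstm = bilstm_params(input_dim=32, units=32)
    # The BiLSTM concatenates both directions: 2 * 32 features into the classifier
    head = dense_params(in_features=2 * 32, out_features=n_classes)
    total = conv + bilstm + head
    return {"conv1d": conv, "bilstm": bilstm, "dense": head, "total": total,
            "float32_kib": total * 4 / 1024}


if __name__ == "__main__":
    for name, value in hypothetical_cnn_bilstm().items():
        print(f"{name}: {value}")
```

For these assumed sizes the recurrent part dominates the budget, which is why the hidden size of the BiLSTM is usually the first knob to turn when shrinking such a model.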

Deep Reinforcement Learning-based Energy Efficiency Optimization For Flying LoRa Gateways

Feb 10, 2023

Abstract: A resource-constrained unmanned aerial vehicle (UAV) can be used as a flying LoRa gateway (GW) that moves inside the target area for efficient data collection and LoRa resource management. In this work, we propose deep reinforcement learning (DRL) to optimize the energy efficiency (EE) of wireless LoRa networks composed of LoRa end devices (EDs) and a flying GW, thereby extending the network lifetime. The trained DRL agent can efficiently allocate spreading factors (SFs) and transmission powers (TPs) to EDs while accounting for the air-to-ground wireless link and the availability of SFs. In addition, we allow the flying GW to adjust its optimal policy onboard and perform online resource allocation. This is accomplished by retraining the DRL agent with a reduced action space. Simulation results demonstrate that our proposed DRL-based online resource allocation scheme achieves higher EE in LoRa networks than three benchmark schemes.
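To see why the (SF, TP) choice described above drives energy efficiency, it helps to compute the time on air of a LoRa frame, which grows roughly exponentially with the SF. The sketch below implements the time-on-air formula from Semtech's SX127x documentation and a deliberately simplified EE metric: delivered payload bits per joule of radiated transmit energy, scaled by a caller-supplied success probability. That metric, the power levels in `TX_POWERS_DBM`, and the function names are assumptions for illustration; it is not the paper's reward or DRL agent, only the kind of quantity such an agent could be trained to maximize over the discrete action space.

```python
import math
from itertools import product
from typing import List, Sequence, Tuple


def time_on_air(sf: int, payload_bytes: int, bw_hz: float = 125_000, cr: int = 1,
                n_preamble: int = 8, explicit_header: bool = True, crc: bool = True) -> float:
    """LoRa time on air in seconds (Semtech SX127x formula); cr=1..4 means 4/5..4/8."""
    t_sym = (2 ** sf) / bw_hz
    # Low data rate optimization is required when a symbol lasts longer than 16 ms
    de = 1 if t_sym > 0.016 else 0
    ih = 0 if explicit_header else 1
    num = 8 * payload_bytes - 4 * sf + 28 + 16 * int(crc) - 20 * ih
    n_payload = 8 + max(math.ceil(num / (4 * (sf - 2 * de))) * (cr + 4), 0)
    t_preamble = (n_preamble + 4.25) * t_sym
    return t_preamble + n_payload * t_sym


def dbm_to_watts(dbm: float) -> float:
    return 10 ** ((dbm - 30) / 10)


def energy_efficiency(sf: int, tp_dbm: float, payload_bytes: int, success_prob: float = 1.0) -> float:
    """Simplified EE: delivered bits per joule of radiated energy (ignores circuit power)."""
    energy_j = dbm_to_watts(tp_dbm) * time_on_air(sf, payload_bytes)
    return success_prob * 8 * payload_bytes / energy_j


SPREADING_FACTORS = tuple(range(7, 13))
TX_POWERS_DBM = (2, 5, 8, 11, 14)  # hypothetical discrete power levels


def action_space(available_sfs: Sequence[int] = SPREADING_FACTORS,
                 powers: Sequence[float] = TX_POWERS_DBM) -> List[Tuple[int, float]]:
    """All (SF, TP) pairs; restricting available_sfs yields a reduced action space."""
    return list(product(available_sfs, powers))


if __name__ == "__main__":
    print(f"SF7 ToA: {time_on_air(7, 20) * 1e3:.3f} ms, SF12 ToA: {time_on_air(12, 20) * 1e3:.3f} ms")
    print(len(action_space()), "actions in full space")
    for sf, tp in action_space(available_sfs=(9, 10)):
        print(sf, tp, round(energy_efficiency(sf, tp, payload_bytes=20)))
```

With `success_prob` fixed at 1.0 this metric trivially prefers the lowest SF and power; the trade-off only appears once a link model (here left to the caller) lowers the success probability for weak configurations, which is where the air-to-ground channel enters.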

A Survey on Scalable LoRaWAN for Massive IoT: Recent Advances, Potentials, and Challenges

Feb 22, 2022

Abstract: Long Range (LoRa) is the most widely used technology for enabling Low Power Wide Area Networks (LPWANs) on unlicensed frequency bands. Despite its modest Data Rates (DRs), it provides extensive coverage for low-power devices, making it an ideal communication system for many Internet of Things (IoT) applications. In general, LoRa radio is considered the physical layer, whereas Long Range Wide Area Network (LoRaWAN) is the MAC layer of the LoRa stack, which adopts a star topology to enable communication between multiple End Devices (EDs) and the network Gateway (GW). The Chirp Spread Spectrum (CSS) modulation mitigates interference on LoRa signals and ensures long-range communication. At the same time, the Adaptive Data Rate (ADR) mechanism allows EDs to dynamically alter LoRa parameters such as the Spreading Factor (SF), Code Rate (CR), and carrier frequency to address the time variance of communication conditions in dense networks. Despite the high demand for LoRa connectivity, LoRa signal interference and concurrent transmission collisions are major limitations. Therefore, to enhance LoRaWAN capacity, the LoRa Alliance has released many LoRaWAN versions, and the research community has proposed numerous solutions to develop scalable LoRaWAN technology. Accordingly, we thoroughly examine LoRaWAN scalability challenges and the state-of-the-art solutions in both the PHY and MAC layers. Most of these solutions rely on SF, logical channel, and frequency channel assignment, while others propose new network topologies or implement signal processing schemes to cancel interference and allow LoRaWAN to connect more EDs efficiently. A summary of the existing solutions in the literature is provided at the end of the paper, describing the advantages and drawbacks of each solution and suggesting possible enhancements as future research directions.
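The ADR mechanism mentioned in the abstract above is commonly run on the network server: it compares the best recent uplink SNR against the demodulation floor of the current SF and converts the surplus margin into faster data rates or lower power. The sketch below follows the general shape of Semtech's widely cited recommended algorithm, but it is a simplified assumption rather than a specification: the 10 dB installation margin, 3 dB per step, 2 dB power increments, and the power limits are illustrative defaults, and it only adjusts SF and transmit power (not the CR or carrier frequency changes the abstract also lists). The SNR floors are the SX127x datasheet values for 125 kHz bandwidth.

```python
from typing import Sequence, Tuple

# Demodulation SNR floor per spreading factor in dB (Semtech SX127x datasheet values)
REQUIRED_SNR_DB = {7: -7.5, 8: -10.0, 9: -12.5, 10: -15.0, 11: -17.5, 12: -20.0}


def adr_step(snr_history_db: Sequence[float], sf: int, tp_dbm: int,
             margin_db: float = 10.0, step_db: float = 3.0, tp_step_db: int = 2,
             min_sf: int = 7, min_tp_dbm: int = 2, max_tp_dbm: int = 14) -> Tuple[int, int]:
    """Simplified server-side ADR decision; all default parameters are assumptions."""
    # Surplus link margin using the best SNR over the recent uplink history
    snr_margin = max(snr_history_db) - REQUIRED_SNR_DB[sf] - margin_db
    n_step = int(snr_margin / step_db)  # int() truncates toward zero
    # Spend positive margin on a lower SF first (shorter airtime, fewer collisions)...
    while n_step > 0 and sf > min_sf:
        sf -= 1
        n_step -= 1
    # ...then on lower transmit power
    while n_step > 0 and tp_dbm > min_tp_dbm:
        tp_dbm = max(min_tp_dbm, tp_dbm - tp_step_db)
        n_step -= 1
    # Negative margin: raise power back toward the maximum
    while n_step < 0 and tp_dbm < max_tp_dbm:
        tp_dbm = min(max_tp_dbm, tp_dbm + tp_step_db)
        n_step += 1
    return sf, tp_dbm


if __name__ == "__main__":
    print(adr_step([5.0, 3.2, 4.1], sf=12, tp_dbm=14))
    print(adr_step([-2.0], sf=7, tp_dbm=8))
```

Lowering the SF first is the scalability-relevant choice: it shortens time on air, which reduces the collision exposure the survey identifies as a major limitation in dense networks.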

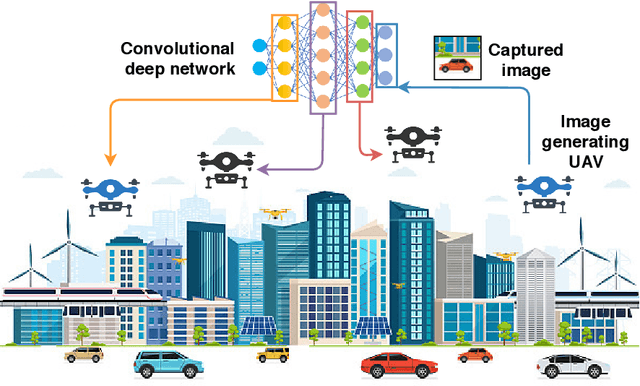

Distributed CNN Inference on Resource-Constrained UAVs for Surveillance Systems: Design and Optimization

May 23, 2021

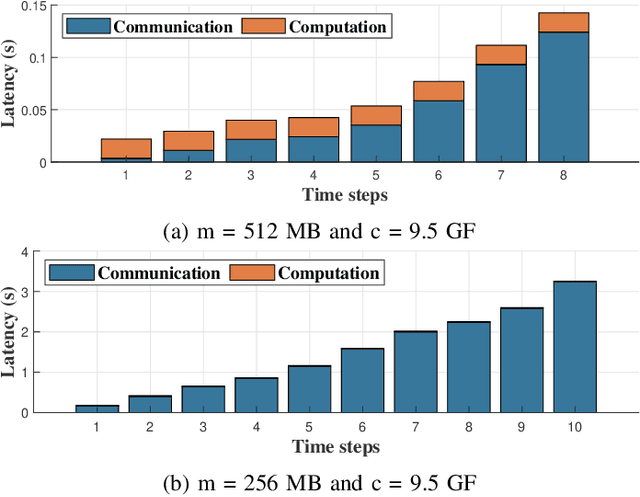

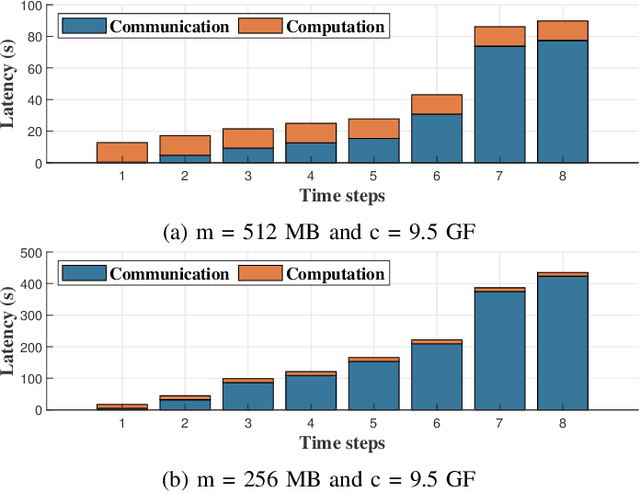

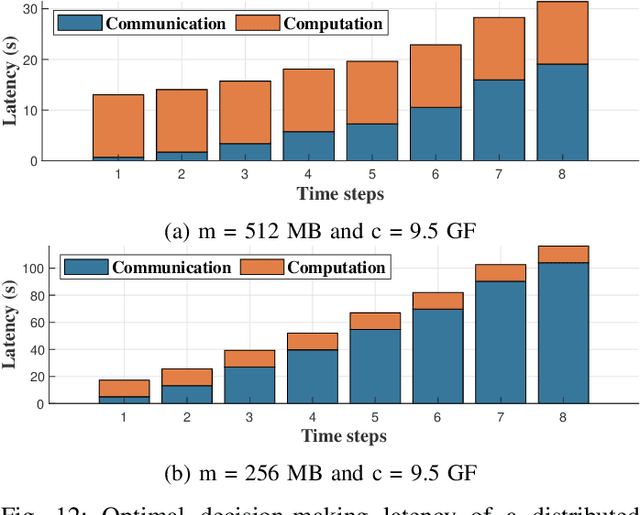

Abstract: Unmanned Aerial Vehicles (UAVs) have attracted great interest in the last few years owing to their ability to cover large areas and access difficult and hazardous target zones, unlike traditional systems that rely on direct observations from fixed cameras and sensors. Furthermore, thanks to advances in computer vision and machine learning, UAVs are being adopted for a broad range of solutions and applications. However, Deep Neural Networks (DNNs) are progressing toward deeper and more complex models that cannot be executed on-board. In this paper, we propose a DNN distribution methodology within UAVs to enable data classification on resource-constrained devices and avoid the extra delays that server-based solutions introduce through data communication over air-to-ground links. The proposed method is formulated as an optimization problem that aims to minimize the latency between data collection and decision-making while considering the mobility model and the resource constraints of the UAVs as part of the air-to-air communication. We also introduce mobility prediction to adapt our system to the dynamics of UAVs and network variations. The simulations conducted to evaluate and benchmark the proposed methods, namely Optimal UAV-based Layer Distribution (OULD) and OULD with Mobility Prediction (OULD-MP), were run on an HPC cluster. The obtained results show that our optimization solution outperforms existing and heuristic-based approaches.
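The layer-distribution idea in the abstract above can be illustrated with a toy version of the problem: a chain of CNN layers must be split across UAVs so that each UAV's memory holds its layers' weights, while end-to-end latency (compute time plus air-to-air transfer of intermediate tensors) is minimized. The sketch below is an exhaustive search under strong simplifying assumptions: UAVs form a fixed relay chain, data only moves forward along it, link rates are constant, and mobility is ignored. It is not OULD or OULD-MP, and all names and numbers are hypothetical; it only makes the latency objective and memory constraint concrete.

```python
import math
from itertools import combinations_with_replacement
from typing import Optional, Sequence, Tuple


def chain_latency(assign: Sequence[int], layer_flops: Sequence[float],
                  layer_out_bits: Sequence[float], input_bits: float,
                  uav_flops_per_s: Sequence[float], link_bits_per_s: Sequence[float]) -> float:
    """Latency when layer i runs on UAV assign[i]; data starts on UAV 0."""
    t, cur, size = 0.0, 0, input_bits
    for i, u in enumerate(assign):
        while cur < u:  # forward the current tensor hop by hop to UAV u
            t += size / link_bits_per_s[cur]
            cur += 1
        t += layer_flops[i] / uav_flops_per_s[u]
        size = layer_out_bits[i]  # the next hop would carry this layer's output
    return t


def best_layer_distribution(layer_flops, layer_weight_bytes, layer_out_bits, input_bits,
                            uav_flops_per_s, uav_memory_bytes,
                            link_bits_per_s) -> Tuple[float, Optional[Tuple[int, ...]]]:
    """Exhaustive search over contiguous, forward-only layer placements."""
    n_uavs = len(uav_flops_per_s)
    best: Tuple[float, Optional[Tuple[int, ...]]] = (math.inf, None)
    # Non-decreasing tuples = contiguous blocks of layers on successive UAVs
    for assign in combinations_with_replacement(range(n_uavs), len(layer_flops)):
        used = [0] * n_uavs
        for i, u in enumerate(assign):
            used[u] += layer_weight_bytes[i]
        if any(used[u] > uav_memory_bytes[u] for u in range(n_uavs)):
            continue  # memory constraint violated
        lat = chain_latency(assign, layer_flops, layer_out_bits, input_bits,
                            uav_flops_per_s, link_bits_per_s)
        if lat < best[0]:
            best = (lat, assign)
    return best


if __name__ == "__main__":
    print(best_layer_distribution(
        layer_flops=[1e9, 1e9, 1e9], layer_weight_bytes=[60, 60, 30],
        layer_out_bits=[4e6, 1e6, 10], input_bits=8e6,
        uav_flops_per_s=[1e9, 1e9], uav_memory_bytes=[130, 130],
        link_bits_per_s=[1e6]))
```

The search space grows combinatorially with the number of layers and UAVs, which is why a formulation as an optimization problem (as the abstract describes) is needed at realistic scales; the toy also shows why split points after layers with small outputs tend to win.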
