Mirko Gschwindt

Autonomous Aerial Cinematography In Unstructured Environments With Learned Artistic Decision-Making

Oct 15, 2019

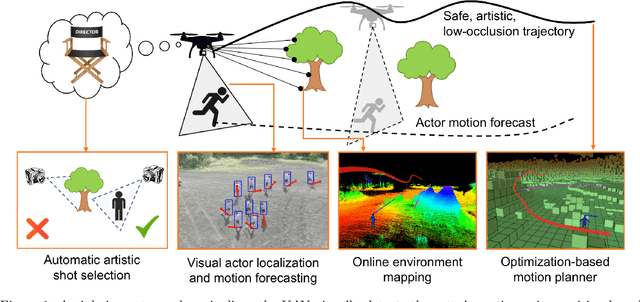

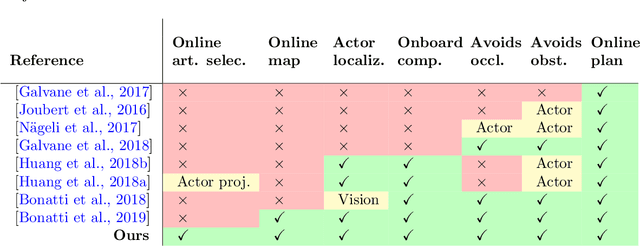

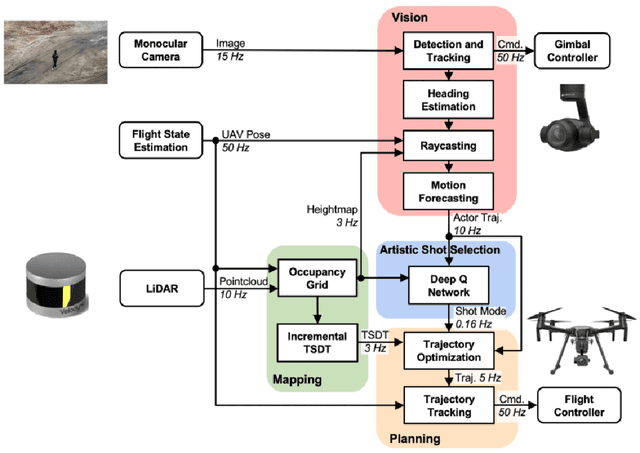

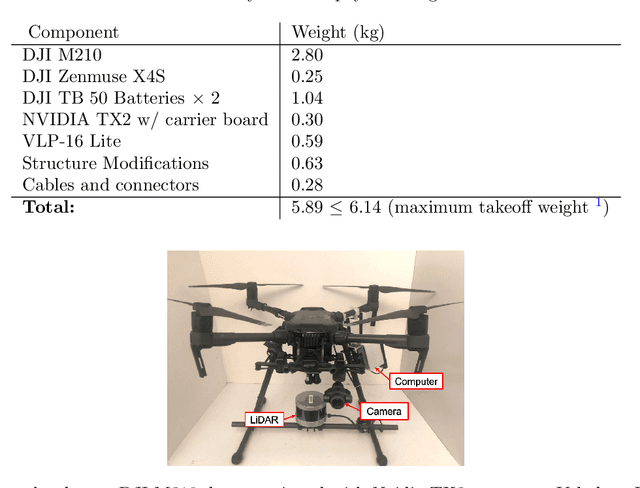

Abstract: Aerial cinematography is revolutionizing industries that require live and dynamic camera viewpoints such as entertainment, sports, and security. However, safely piloting a drone while filming a moving target in the presence of obstacles is immensely taxing, often requiring multiple expert human operators. Hence, there is demand for an autonomous cinematographer that can reason about both geometry and scene context in real time. Existing approaches do not address all aspects of this problem; they either require high-precision motion-capture systems or GPS tags to localize targets, rely on prior maps of the environment, plan for short time horizons, or only follow artistic guidelines specified before flight. In this work, we address the problem in its entirety and propose a complete system for real-time aerial cinematography that for the first time combines: (1) vision-based target estimation; (2) 3D signed-distance mapping for occlusion estimation; (3) efficient trajectory optimization for long time-horizon camera motion; and (4) learning-based artistic shot selection. We extensively evaluate our system both in simulation and in field experiments by filming dynamic targets moving through unstructured environments. Our results indicate that our system can operate reliably in the real world without restrictive assumptions. We also provide in-depth analysis and discussion for each module, with the hope that our design tradeoffs can generalize to other related applications. Videos of the complete system can be found at: https://youtu.be/ookhHnqmlaU.

Can a Robot Become a Movie Director? Learning Artistic Principles for Aerial Cinematography

Apr 04, 2019

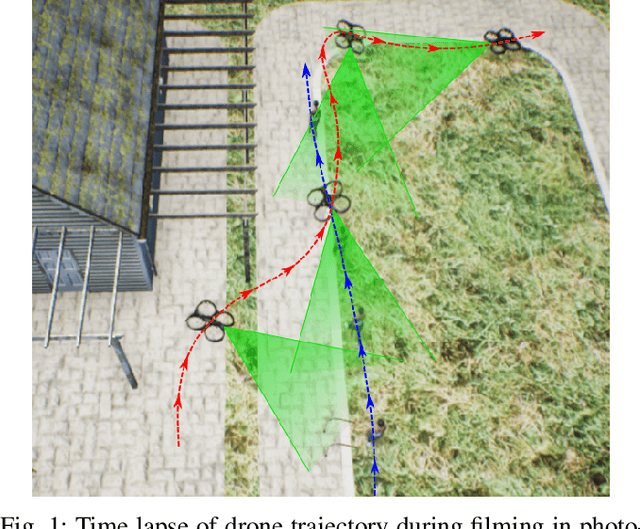

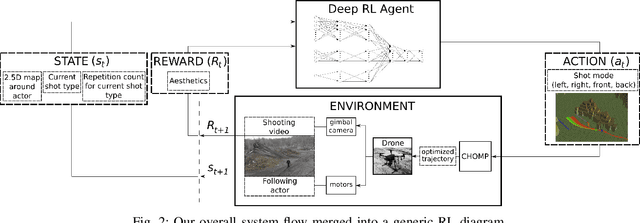

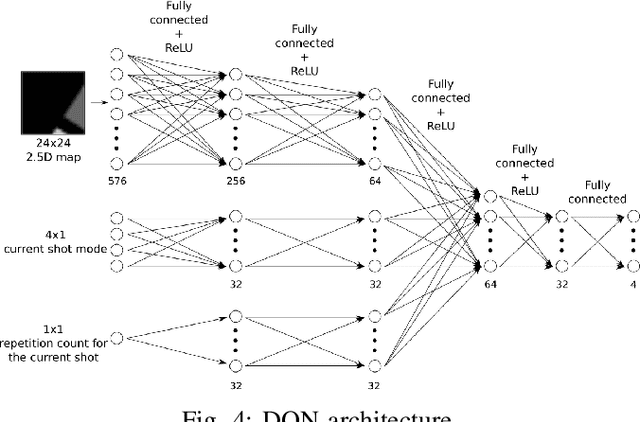

Abstract: Aerial filming is becoming increasingly popular thanks to recent advances in drone technology. It raises many intriguing, unsolved problems at the intersection of aesthetic and scientific challenges. In this work, we propose an intelligent agent that supervises the motion planning of a filming drone based on the aesthetic value of video shots, using deep reinforcement learning. Unlike current state-of-the-art approaches, which mostly require explicit guidance from a human expert, our drone learns to make favorable shot-type selections from experience. We propose a learning scheme that exploits aesthetic features of retrospective shots to extract a desirable policy for better prospective shots. We train our agent in realistic AirSim simulations using both hand-crafted and human reward functions. We deploy the same agent on a real DJI M210 drone to test how well our approach generalizes to real-world conditions. To evaluate our approach, we conduct a comprehensive user study in which participants rate the shots taken using our method and write comments about them.
