Mingxu Feng

Solution for OOD-CV Workshop SSB Challenge 2024 (Open-Set Recognition Track)

Sep 30, 2024

Abstract: This report describes the method we explored and proposed for the OSR Challenge at the OOD-CV Workshop during ECCV 2024. The challenge required identifying whether a test sample belongs to the semantic classes of a classifier's training set, a task known as open-set recognition (OSR). Using the Semantic Shift Benchmark (SSB) for evaluation, we focused on ImageNet1k as the in-distribution (ID) dataset and a subset of ImageNet21k as the out-of-distribution (OOD) dataset. To address this, we proposed a hybrid approach, experimenting with the fusion of various post-hoc OOD detection techniques and different Test-Time Augmentation (TTA) strategies. We also evaluated the impact of several base models on the final performance. Our best-performing method combined TTA with the post-hoc OOD techniques, achieving a strong balance between AUROC and FPR95. It obtained an AUROC of 79.77 (ranked 5th) and an FPR95 of 61.44 (ranked 2nd), securing second place in the overall competition.
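As a rough illustration of the quantities named in this abstract, the sketch below averages softmax outputs over TTA views, takes the maximum softmax probability as a post-hoc ID score, and computes AUROC and FPR95 from ID and OOD scores. It is a minimal sketch under stated assumptions, not the authors' pipeline: the choice of maximum softmax probability, the function names, and the convention that ID samples are the positive class with higher scores are all assumptions made for illustration.

```python
import numpy as np


def tta_msp_score(logits_per_view):
    """ID score per sample: max softmax probability after averaging over views.

    logits_per_view has shape (views, samples, classes). Using max softmax
    probability here is an assumption; the report fuses several post-hoc scores.
    """
    logits = np.asarray(logits_per_view, dtype=float)
    # Subtract the per-row maximum for a numerically stable softmax.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    probs = np.exp(shifted)
    probs = probs / probs.sum(axis=-1, keepdims=True)
    # Average class probabilities across augmented views, then take the max.
    return probs.mean(axis=0).max(axis=-1)


def auroc(id_scores, ood_scores):
    """Probability that a random ID sample outscores a random OOD sample.

    Ties count as one half. Pairwise comparison is O(n * m), so this is
    only meant for small illustrative inputs.
    """
    id_s = np.asarray(id_scores, dtype=float)[:, None]
    ood_s = np.asarray(ood_scores, dtype=float)[None, :]
    return float(np.mean((id_s > ood_s) + 0.5 * (id_s == ood_s)))


def fpr_at_95_tpr(id_scores, ood_scores):
    """Fraction of OOD samples accepted when at least 95% of ID samples are."""
    id_sorted = np.sort(np.asarray(id_scores, dtype=float))
    # Threshold chosen so that at most 5% of ID samples fall below it.
    threshold = id_sorted[id_sorted.size // 20]
    return float(np.mean(np.asarray(ood_scores, dtype=float) >= threshold))
```

Under this convention a higher AUROC and a lower FPR95 are better, which is why the two metrics can rank the same submission differently.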

The Solution for the sequential task continual learning track of the 2nd Greater Bay Area International Algorithm Competition

Jul 06, 2024

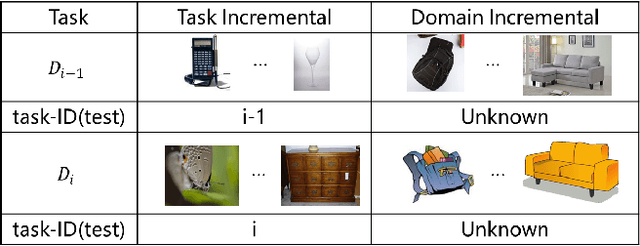

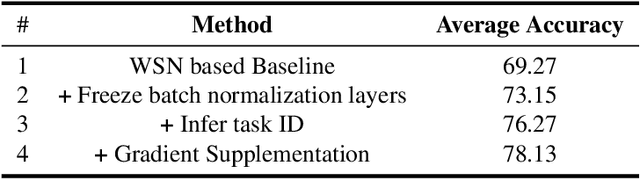

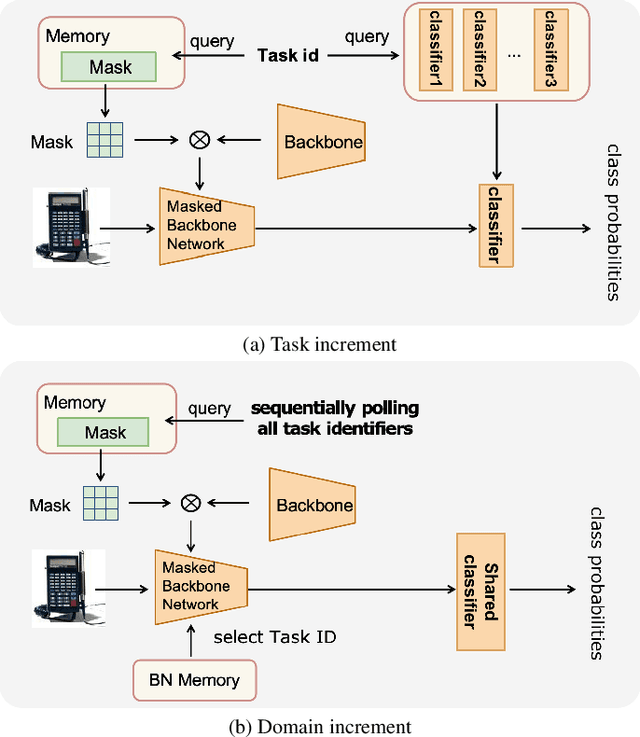

Abstract: This paper presents a data-free, parameter-isolation-based continual learning algorithm that we developed for the sequential task continual learning track of the 2nd Greater Bay Area International Algorithm Competition. The method learns an independent parameter subspace for each task within the network's convolutional and linear layers and freezes the batch normalization layers after the first task. For the domain incremental setting, where all domains share a classification head, we also freeze the shared head once the first task is completed, effectively addressing catastrophic forgetting. To handle domain incremental settings in which no task identity is provided, we designed a task identity inference strategy that selects an appropriate mask matrix for each sample. We further introduced a gradient supplementation strategy that raises the importance of parameters not selected for the current task, facilitating the learning of new tasks. We also implemented an adaptive importance scoring strategy that dynamically adjusts the number of parameters used, optimizing single-task performance while reducing parameter usage. Considering the limits on storage space and inference time, we designed a mask matrix compression strategy that saves storage space and speeds up encryption and decryption of the mask matrix. Our approach does not require expanding the core network or using external auxiliary networks or data, and it performs well under both task incremental and domain incremental settings. This solution ultimately won a second-place prize in the competition.
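To make the idea of per-task mask matrices and their compression more concrete, here is a minimal sketch that applies a binary mask to a layer's weights and stores the mask at one bit per entry. The abstract does not describe the actual compression scheme, so the bit-packing approach and the helper names `pack_mask`, `unpack_mask`, and `apply_task_mask` are assumptions for illustration only.

```python
import numpy as np


def pack_mask(mask):
    """Pack a boolean mask into uint8 bytes, 8 mask entries per byte."""
    return np.packbits(np.asarray(mask, dtype=bool).ravel())


def unpack_mask(packed, shape):
    """Recover the boolean mask of the given shape from its packed bytes."""
    count = int(np.prod(shape))
    # count drops the zero padding added when the size is not a multiple of 8.
    bits = np.unpackbits(packed, count=count)
    return bits.astype(bool).reshape(shape)


def apply_task_mask(weight, mask):
    """Keep only the weights that belong to the selected task's subspace."""
    return weight * mask


# Hypothetical usage: one mask per task for a single linear layer.
rng = np.random.default_rng(0)
weight = rng.standard_normal((3, 5))
task_mask = rng.random((3, 5)) > 0.5
stored = pack_mask(task_mask)
restored = unpack_mask(stored, task_mask.shape)
masked_weight = apply_task_mask(weight, restored)
```

Packing reduces a boolean mask from one byte per entry to one bit per entry, which is one plausible way to meet the storage constraint the abstract mentions.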
