Mingming Ding

Learning to Behave Like Clean Speech: Dual-Branch Knowledge Distillation for Noise-Robust Fake Audio Detection

Oct 13, 2023

Abstract: Most research in fake audio detection (FAD) focuses on improving performance on standard noise-free datasets. However, in real-world conditions noise interference is common and causes significant performance degradation in FAD systems. To improve noise robustness, we propose a dual-branch knowledge distillation fake audio detection (DKDFAD) method. Specifically, a parallel data flow of a clean teacher branch and a noisy student branch is designed, and interactive fusion and response-based teacher-student paradigms are proposed to guide training on noisy data from the data distribution and decision-making perspectives. In the noisy branch, speech enhancement is first introduced for denoising, which reduces the interference of strong noise. The proposed interactive fusion combines denoised features and noisy features to reduce the impact of speech distortion and to seek consistency with the data distribution of the clean branch. The teacher-student paradigm maps the student's decision space to the teacher's decision space, making noisy speech behave like clean speech. In addition, a joint training method is used to optimize the two branches to achieve global optimality. Experimental results on multiple datasets show that the proposed method performs well in noisy environments and maintains performance in cross-dataset experiments.
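
The response-based teacher-student idea mentioned in the abstract can be illustrated with a generic distillation loss. The following is a minimal sketch, not the authors' implementation: it assumes the common formulation in which the student's temperature-softened output distribution is pulled toward the teacher's via KL divergence and combined with an ordinary cross-entropy term. The function names, the `temperature` and `alpha` parameters, and the two-class logits are all illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def cross_entropy(probs, label, eps=1e-12):
    """Negative log-likelihood of the true class."""
    return -math.log(probs[label] + eps)


def distillation_loss(teacher_logits, student_logits, label,
                      temperature=2.0, alpha=0.5):
    """Hypothetical response-based distillation objective.

    teacher_logits: outputs of the clean (teacher) branch
    student_logits: outputs of the noisy (student) branch
    label:          index of the true class (e.g. 0 = real, 1 = fake)
    alpha:          weight of the distillation term (assumed, not from the paper)
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # Scaling by temperature**2 keeps the soft-target term on a scale
    # comparable to the hard-label term, as is conventional.
    kd_term = kl_divergence(p_teacher, p_student) * temperature ** 2
    ce_term = cross_entropy(softmax(student_logits), label)
    return alpha * kd_term + (1.0 - alpha) * ce_term
```

When the noisy branch already produces the same logits as the clean branch, the distillation term vanishes and only the cross-entropy term remains, which is the sense in which the student is trained to "behave like" the teacher.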

The FruitShell French synthesis system at the Blizzard 2023 Challenge

Sep 01, 2023

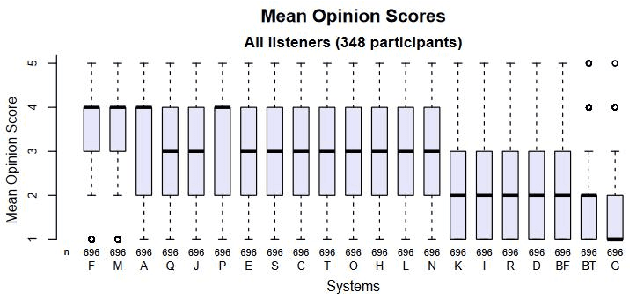

Abstract: This paper presents a French text-to-speech synthesis system for the Blizzard Challenge 2023. The challenge consists of two tasks: generating high-quality speech from female speakers and generating speech that closely resembles specific individuals. Regarding the competition data, we conducted a screening process to remove missing or erroneous text data. We organized all symbols except for phonemes and eliminated symbols that had no pronunciation or zero duration. Additionally, we added word boundary and start/end symbols to the text, which we have found to improve speech quality based on our previous experience. For the Spoke task, we performed data augmentation according to the competition rules. We used an open-source G2P model to transcribe the French texts into phonemes. As the G2P model uses the International Phonetic Alphabet (IPA), we applied the same transcription process to the provided competition data for standardization. However, due to compiler limitations in recognizing special symbols from the IPA chart, we followed the rules to convert all phonemes into the phonetic scheme used in the competition data. Finally, we resampled all competition audio to a uniform sampling rate of 16 kHz. We employed a VITS-based acoustic model with the HiFi-GAN vocoder. For the Spoke task, we trained a multi-speaker model and incorporated speaker information into the duration predictor, vocoder, and flow layers of the model. The evaluation results of our system showed a quality MOS of 3.6 for the Hub task and 3.4 for the Spoke task, placing our system at an average level among all participating teams.
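
The text-side preprocessing described in the abstract (dropping zero-duration symbols, then adding word boundary and start/end symbols) might look roughly like the sketch below. This is an illustration under stated assumptions rather than the system's actual code: the marker strings `<s>`, `</s>`, and `#`, the function name `prepare_sequence`, and the input layout (words as lists of phoneme/duration pairs) are all hypothetical.

```python
def prepare_sequence(words, bos="<s>", eos="</s>", boundary="#"):
    """Build a phoneme sequence with start/end and word boundary symbols.

    words: list of words, each a list of (phoneme, duration_seconds) pairs.
    Symbols with zero duration are dropped; words left empty are skipped.
    The marker strings are assumed placeholders, not the competition's symbols.
    """
    sequence = [bos]
    for word in words:
        kept = [phoneme for phoneme, duration in word if duration > 0]
        if not kept:
            continue  # nothing pronounceable remains in this word
        if len(sequence) > 1:
            sequence.append(boundary)  # separate from the previous word
        sequence.extend(kept)
    sequence.append(eos)
    return sequence


example = [
    [("b", 0.05), ("o~", 0.10)],
    [("sil", 0.0)],
    [("Z", 0.07), ("u", 0.09), ("R", 0.06)],
]
print(prepare_sequence(example))
```

The boundary symbol is inserted only between words that survive filtering, so a word made up entirely of zero-duration symbols does not leave a doubled boundary behind.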
