Min-Fong Hong

Cascaded Local Implicit Transformer for Arbitrary-Scale Super-Resolution

Mar 29, 2023

Abstract:Implicit neural representation has recently shown a promising ability in representing images with arbitrary resolutions. In this paper, we present a Local Implicit Transformer (LIT), which integrates the attention mechanism and frequency encoding technique into a local implicit image function. We design a cross-scale local attention block to effectively aggregate local features. To further improve representative power, we propose a Cascaded LIT (CLIT) that exploits multi-scale features, along with a cumulative training strategy that gradually increases the upsampling scales during training. We have conducted extensive experiments to validate the effectiveness of these components and analyze various training strategies. The qualitative and quantitative results demonstrate that LIT and CLIT achieve favorable results and outperform prior works in arbitrary-scale super-resolution tasks.

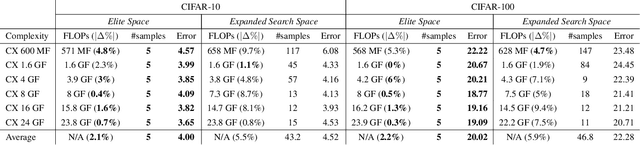

Network Space Search for Pareto-Efficient Spaces

Apr 22, 2021

Abstract:Network spaces are known to be a critical factor both in handcrafted network designs and in defining search spaces for Neural Architecture Search (NAS). However, designing an effective space requires tremendous prior knowledge and/or manual effort, and additional constraints are required to discover efficiency-aware architectures. In this paper, we define a new problem, Network Space Search (NSS), as searching for favorable network spaces instead of a single architecture. We propose an NSS method to directly search for efficiency-aware network spaces automatically, reducing the manual effort and immense cost of discovering satisfactory ones. The resultant network spaces, named Elite Spaces, are discovered from the Expanded Search Space with minimal human expertise imposed. The Pareto-efficient Elite Spaces are aligned with the Pareto front under various complexity constraints and can further serve as NAS search spaces, benefiting differentiable NAS approaches (e.g., on CIFAR-100, an average 2.3% lower error rate and 3.7% closer to the target constraint than the baseline, with around 90% fewer samples required to find satisfactory networks). Moreover, our NSS approach is capable of searching for superior spaces in future unexplored spaces, revealing great potential in searching for network spaces automatically.
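For readers unfamiliar with the term, the Pareto front mentioned in this abstract can be illustrated with a minimal sketch. It is not the authors' NSS method: the function name `pareto_front` and the (complexity, error) pairs are hypothetical, and the sketch only assumes that lower is better on both axes.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (complexity, error rate); both are minimized


def pareto_front(points: List[Point]) -> List[Point]:
    """Return the points that no other point dominates.

    A point is dominated when another point is at least as good on both
    axes and strictly better on at least one.
    """
    # Sorting by complexity (then error) lets a single sweep find the front:
    # a point survives only if its error beats every cheaper point seen so far.
    ordered = sorted(set(points))
    front: List[Point] = []
    best_error = float("inf")
    for complexity, error in ordered:
        if error < best_error:
            front.append((complexity, error))
            best_error = error
    return front


# Hypothetical candidates, made up purely for illustration.
candidates = [
    (100.0, 30.0),
    (200.0, 25.0),
    (150.0, 32.0),  # dominated by (100.0, 30.0)
    (300.0, 26.0),  # dominated by (200.0, 25.0)
    (400.0, 22.0),
]
front = pareto_front(candidates)
```

Under a given complexity budget, the preferred candidate is then the front member with the lowest error whose complexity fits the budget.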

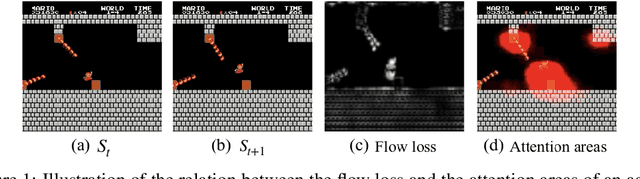

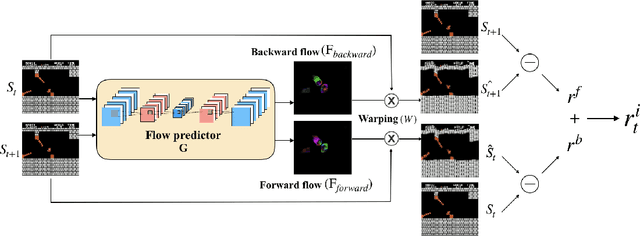

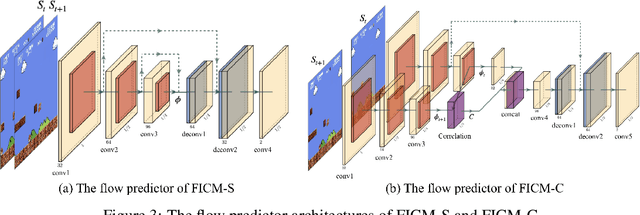

Exploration via Flow-Based Intrinsic Rewards

May 24, 2019

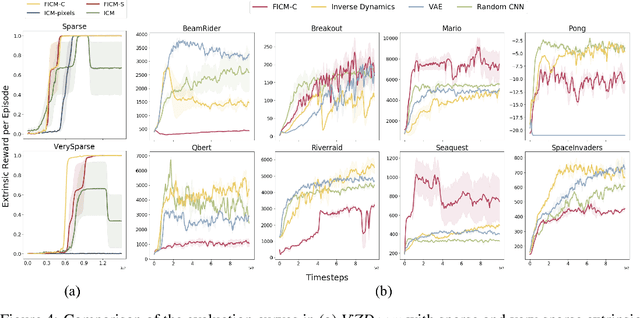

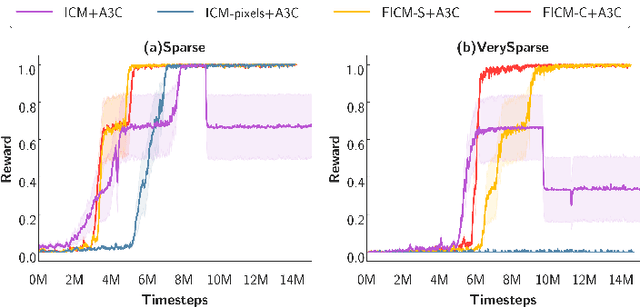

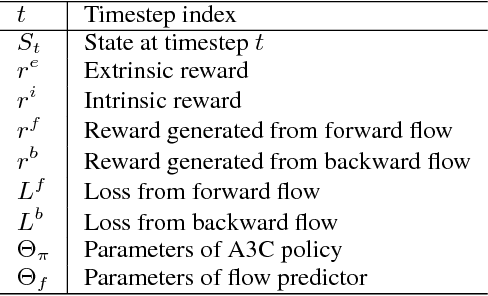

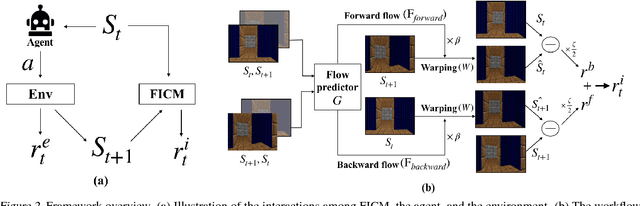

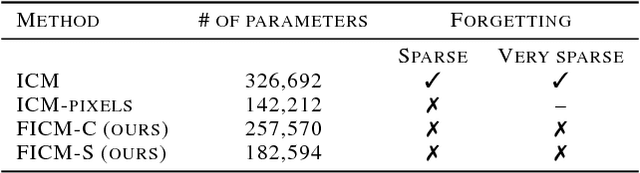

Abstract:Exploration bonuses derived from the novelty of observations in an environment have become a popular approach to motivate exploration for reinforcement learning (RL) agents in the past few years. Recent methods such as curiosity-driven exploration usually estimate the novelty of new observations by the prediction errors of their system dynamics models. In this paper, we introduce the concept of optical flow estimation from the field of computer vision to the RL domain and utilize the errors from optical flow estimation to evaluate the novelty of new observations. We introduce a flow-based intrinsic curiosity module (FICM) capable of learning the motion features and understanding the observations in a more comprehensive and efficient fashion. We evaluate our method and compare it with a number of baselines on several benchmark environments, including Atari games, Super Mario Bros., and ViZDoom. Our results show that the proposed method is superior to the baselines in certain environments, especially for those featuring sophisticated moving patterns or with high-dimensional observation spaces. We further analyze the hyper-parameters used in the training phase and discuss our insights into them.
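The idea of using optical flow estimation errors as a novelty signal can be sketched roughly as follows. This is one plausible reading of the abstract, not the FICM implementation: the flow field is taken as a given input rather than predicted by a learned network, warping uses nearest-neighbour sampling for brevity, and the names `warp_nearest` and `flow_intrinsic_reward` are hypothetical.

```python
import numpy as np


def warp_nearest(frame: np.ndarray, flow: np.ndarray) -> np.ndarray:
    """Backward-warp a 2-D frame with a dense flow field of shape (H, W, 2).

    flow[..., 0] is the horizontal displacement and flow[..., 1] the vertical
    one. Sampling is nearest-neighbour and clamped at the image border.
    """
    h, w = frame.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    src_x = np.clip(np.rint(xs + flow[..., 0]).astype(int), 0, w - 1)
    src_y = np.clip(np.rint(ys + flow[..., 1]).astype(int), 0, h - 1)
    return frame[src_y, src_x]


def flow_intrinsic_reward(frame_t: np.ndarray,
                          frame_t1: np.ndarray,
                          flow: np.ndarray) -> float:
    """Mean squared error between frame_t and frame_t1 warped back by the flow.

    A poor flow estimate (assumed here to signal a novel transition) yields a
    large reconstruction error and therefore a large exploration bonus.
    """
    reconstructed = warp_nearest(frame_t1, flow)
    return float(np.mean((reconstructed - frame_t) ** 2))


# Toy transition: the whole frame shifts one pixel to the right.
rng = np.random.default_rng(0)
frame_t = rng.random((8, 8))
frame_t1 = np.roll(frame_t, 1, axis=1)

accurate_flow = np.zeros((8, 8, 2))
accurate_flow[..., 0] = 1.0            # every pixel moved by +1 in x
inaccurate_flow = np.zeros((8, 8, 2))  # wrongly predicts no motion

reward_accurate = flow_intrinsic_reward(frame_t, frame_t1, accurate_flow)
reward_inaccurate = flow_intrinsic_reward(frame_t, frame_t1, inaccurate_flow)
```

In this toy case the accurate flow leaves only a border artifact, so its reward is much smaller than the reward for the inaccurate flow. In a learned setting the flow would come from a predictor trained on visited transitions, so familiar motion would gradually earn a smaller bonus.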

Never Forget: Balancing Exploration and Exploitation via Learning Optical Flow

Jan 24, 2019

Abstract:Exploration bonus derived from the novelty of the states in an environment has become a popular approach to motivate exploration for deep reinforcement learning (DRL) agents in the past few years. Recent methods such as curiosity-driven exploration usually estimate the novelty of new observations by the prediction errors of their system dynamics models. Due to the capacity limitation of the models and the difficulty of performing next-frame prediction, however, these methods typically fail to balance exploration and exploitation in high-dimensional observation tasks, resulting in the agents forgetting the visited paths and exploring those states repeatedly. Such inefficient exploration behavior causes significant performance drops, especially in large environments with sparse reward signals. In this paper, we propose to introduce the concept of optical flow estimation from the field of computer vision to deal with the above issue. We propose to employ optical flow estimation errors to examine the novelty of new observations, such that agents are able to memorize and understand the visited states in a more comprehensive fashion. We compare our method against the previous approaches in a number of experiments. Our results indicate that the proposed method appears to deliver superior and longer-lasting performance compared with the previous methods. We further provide a comprehensive set of ablative analyses of the proposed method, and investigate the impact of optical flow estimation on the learning curves of the DRL agents.
