Milena Rmus

Automated scientific minimization of regret

May 23, 2025

Abstract: We introduce automated scientific minimization of regret (ASMR) -- a framework for automated computational cognitive science. Building on the principles of scientific regret minimization, ASMR leverages Centaur -- a recently proposed foundation model of human cognition -- to identify gaps in an interpretable cognitive model. These gaps are then addressed through automated revisions generated by a language-based reasoning model. We demonstrate the utility of this approach in a multi-attribute decision-making task, showing that ASMR discovers cognitive models that predict human behavior at noise ceiling while retaining interpretability. Taken together, our results highlight the potential of ASMR to automate core components of the cognitive modeling pipeline.

Towards Automation of Cognitive Modeling using Large Language Models

Feb 02, 2025

Abstract: Computational cognitive models, which formalize theories of cognition, enable researchers to quantify cognitive processes and arbitrate between competing theories by fitting models to behavioral data. Traditionally, these models are handcrafted, which requires significant domain knowledge, coding expertise, and time investment. Previous work has demonstrated that Large Language Models (LLMs) are adept at pattern recognition in-context, solving complex problems, and generating executable code. In this work, we leverage these abilities to explore the potential of LLMs in automating the generation of cognitive models based on behavioral data. We evaluated the LLM in two different tasks: model identification (relating data to a source model), and model generation (generating the underlying cognitive model). We performed these tasks across two cognitive domains - decision making and learning. In the case of data simulated from canonical cognitive models, we found that the LLM successfully identified and generated the ground truth model. In the case of human data, where behavioral noise and lack of knowledge of the true underlying process pose significant challenges, the LLM generated models that are identical or close to the winning model from cognitive science literature. Our findings suggest that LLMs can have a transformative impact on cognitive modeling. With this project, we aim to contribute to an ongoing effort of automating scientific discovery in cognitive science.

Centaur: a foundation model of human cognition

Oct 26, 2024

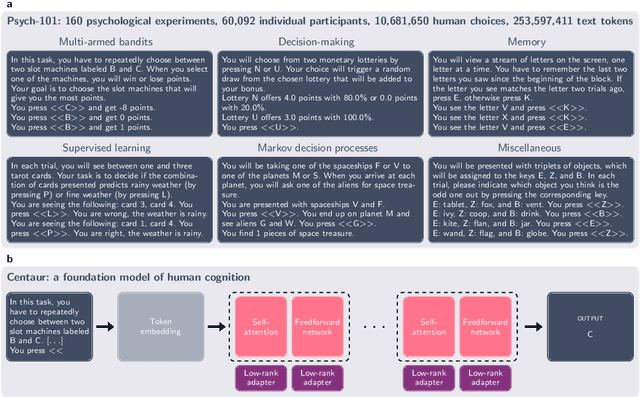

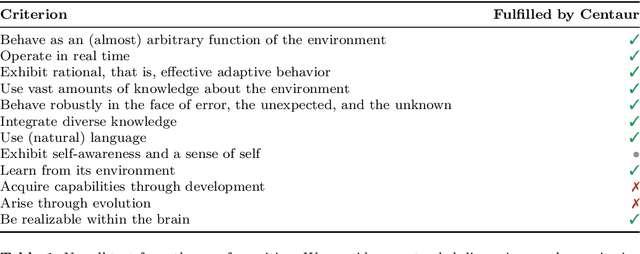

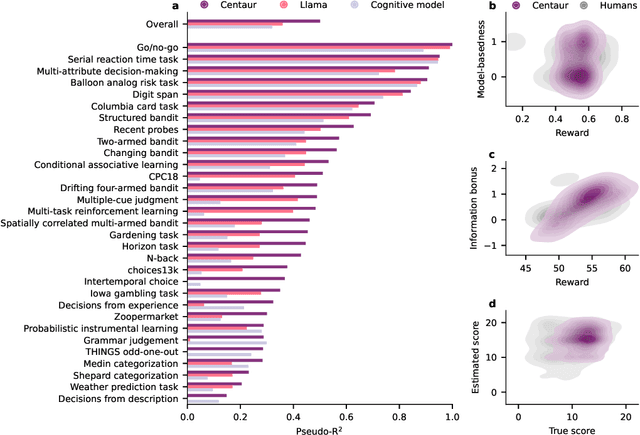

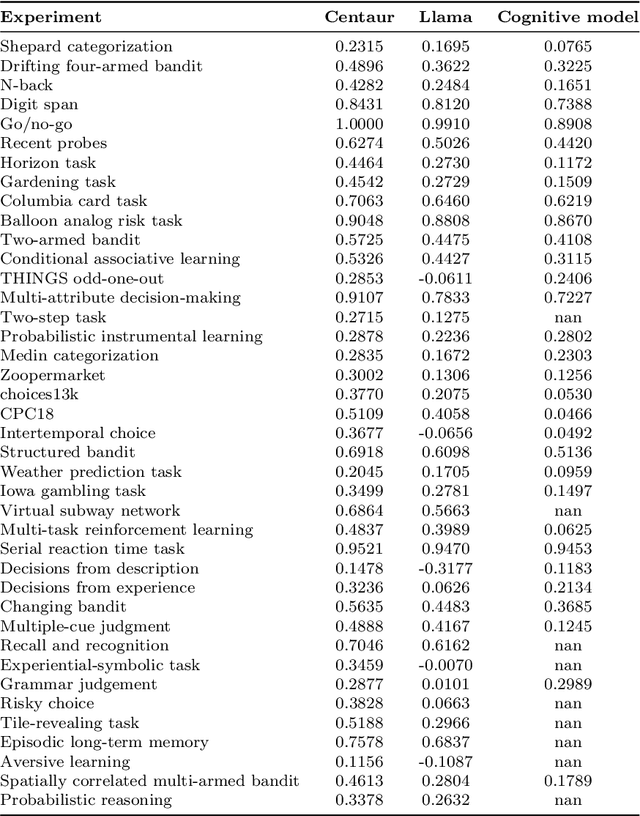

Abstract: Establishing a unified theory of cognition has been a major goal of psychology. While there have been previous attempts to instantiate such theories by building computational models, we currently do not have one model that captures the human mind in its entirety. Here we introduce Centaur, a computational model that can predict and simulate human behavior in any experiment expressible in natural language. We derived Centaur by finetuning a state-of-the-art language model on a novel, large-scale data set called Psych-101. Psych-101 reaches an unprecedented scale, covering trial-by-trial data from over 60,000 participants performing over 10,000,000 choices in 160 experiments. Centaur not only captures the behavior of held-out participants better than existing cognitive models, but also generalizes to new cover stories, structural task modifications, and entirely new domains. Furthermore, we find that the model's internal representations become more aligned with human neural activity after finetuning. Taken together, Centaur is the first real candidate for a unified model of human cognition. We anticipate that it will have a disruptive impact on the cognitive sciences, challenging the existing paradigm for developing computational models.
