Mike E. Davies

A Gaussian Process Regression based Dynamical Models Learning Algorithm for Target Tracking

Nov 25, 2022

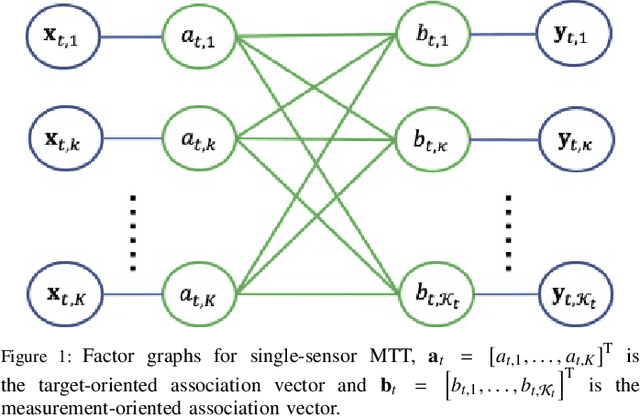

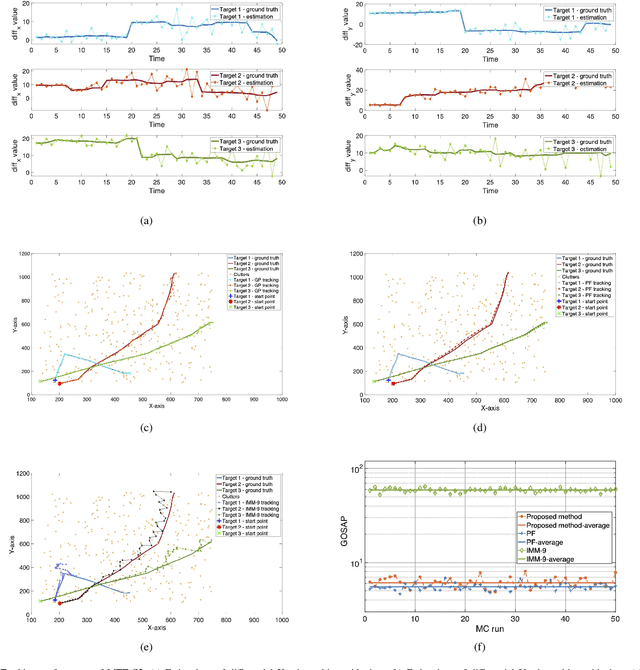

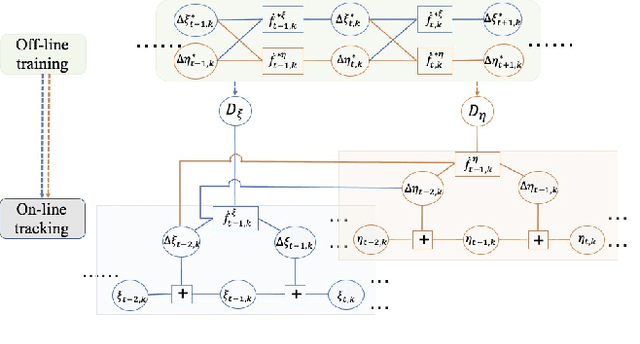

Abstract: Maneuvering target tracking is a challenging problem for sensor systems because target motion is unpredictable. This paper proposes a novel data-driven method for learning the dynamical motion model of a target. Non-parametric Gaussian process regression (GPR) is used to learn a target's naturally shift invariant motion (NSIM) behavior, which is translationally invariant and does not need to be constantly updated as the target moves. Incorporated into a particle filter (PF) implementation, the learned Gaussian processes (GPs) can track targets in surveillance regions different from the one covered by the training data. The proposed approach is evaluated over different maneuvering scenarios against commonly used interacting multiple model (IMM)-PF methods and provides around $90\%$ performance improvement for a highly maneuvering multi-target tracking (MTT) scenario.
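To make the shift-invariance idea concrete, here is a minimal sketch in NumPy. It assumes one simple way of obtaining translation invariance: regressing the next position increment on the previous one, so absolute position never enters the model. Whether this matches the paper's NSIM construction is an assumption; the helper names (`rbf`, `gp_fit`, `gp_predict`, `increments`, `circle`), the kernel length scale, and the noise level are all illustrative, and only the GP posterior mean is computed (no particle filter).

```python
import numpy as np

def rbf(A, B, length=1.0):
    """Squared-exponential kernel between the rows of A and the rows of B."""
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(-1)
    return np.exp(-0.5 * d2 / length**2)

def gp_fit(X, Y, noise=1e-4):
    """Return the weights alpha = (K + noise * I)^{-1} Y of the GP posterior mean."""
    K = rbf(X, X) + noise * np.eye(len(X))
    return np.linalg.solve(K, Y)

def gp_predict(X_train, alpha, X_new):
    """GP posterior mean at the rows of X_new."""
    return rbf(X_new, X_train) @ alpha

def increments(track):
    """Pairs (previous increment, next increment) from an (n, 2) position track."""
    d = np.diff(track, axis=0)
    return d[:-1], d[1:]

def circle(n, phase, centre):
    """Hypothetical constant-turn track: radius 10, 40 steps per full turn."""
    ang = phase + 2 * np.pi * np.arange(n) / 40
    return centre + 10.0 * np.column_stack([np.cos(ang), np.sin(ang)])

# Train on one turn around the origin.
X, Y = increments(circle(42, 0.0, np.array([0.0, 0.0])))
alpha = gp_fit(X, Y)

# Test on the same maneuver in a different, far-away region.
Xt, Yt = increments(circle(10, 0.07, np.array([500.0, -300.0])))
pred = gp_predict(X, alpha, Xt)
err = np.abs(pred - Yt).max()
```

Because the inputs are increments, moving the test track to another region leaves the regression inputs unchanged, so the model learned near the origin applies there without retraining.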

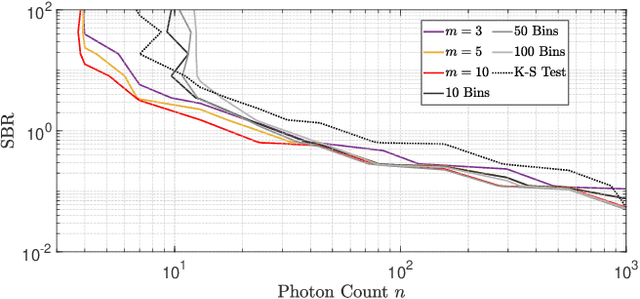

Spline Sketches: An Efficient Approach for Photon Counting Lidar

Oct 13, 2022

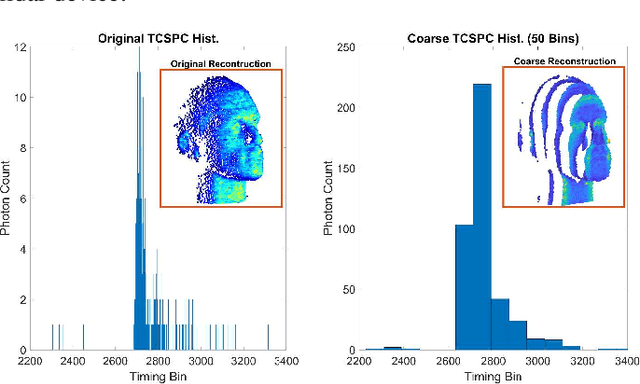

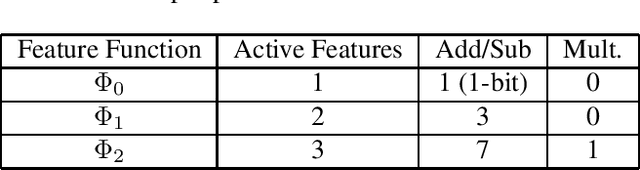

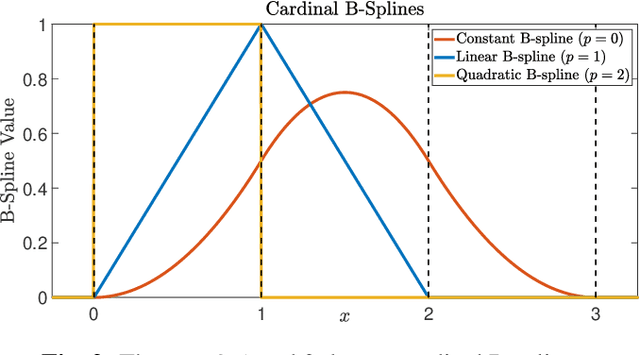

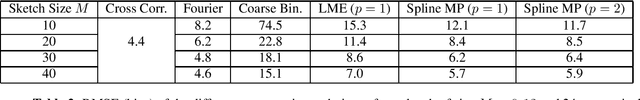

Abstract: Photon counting lidar has become an invaluable tool for 3D depth imaging due to the fine precision it can achieve over long ranges. However, high frame rate, high resolution lidar devices produce an enormous amount of time-of-flight (ToF) data, which can cause a severe data processing bottleneck that hinders the deployment of real-time systems. In this paper, an efficient photon counting approach is proposed that exploits the simplicity of piecewise polynomial splines to form a hardware-friendly compressed statistic, or a so-called spline sketch, of the ToF data without sacrificing the quality of the recovered image. As each piecewise polynomial spline is a simple function with limited support over the timing depth window, the spline sketch can be computed efficiently on-chip with minimal computational overhead. We show that a piecewise linear or quadratic spline sketch, requiring minimal on-chip arithmetic computation per photon detection, can reconstruct real-world depth images with negligible loss of resolution whilst achieving $95\%$ compression compared to the full ToF data, as well as offering multi-peak detection performance. This contrasts with previously proposed coarse binning histograms, which suffer from highly nonuniform accuracy across depth and can fail catastrophically when associated with bright reflectors. Further, by building range-walk correction into the proposed estimation algorithms, it is demonstrated that the spline sketches can be made robust to photon pile-up effects. The computational complexity of both the reconstruction and range-walk correction algorithms scales only with the size of the spline sketch, which is independent of both the photon count and the temporal resolution of the lidar device.
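The accumulation step described above can be illustrated with a minimal NumPy sketch of a piecewise linear spline sketch. It assumes uniformly spaced knots over a timing window `[0, T]` and hat-function basis elements; the function name `linear_spline_sketch` and all parameter values are hypothetical, and the reconstruction and range-walk correction algorithms are not shown.

```python
import numpy as np

def linear_spline_sketch(timestamps, T, K):
    """Accumulate a piecewise linear (hat function) sketch with K knots on [0, T].

    Each photon updates only the two coefficients of its neighbouring knots.
    """
    knots = np.linspace(0.0, T, K)
    width = knots[1] - knots[0]
    pos = np.asarray(timestamps) / width        # position in knot units
    left = np.minimum(pos.astype(int), K - 2)   # index of the knot to the left
    frac = pos - left                           # fractional distance from that knot
    sketch = np.zeros(K)
    np.add.at(sketch, left, 1.0 - frac)         # weight given to the left knot
    np.add.at(sketch, left + 1, frac)           # weight given to the right knot
    return knots, sketch

rng = np.random.default_rng(0)
T, K = 1000.0, 33
timestamps = np.clip(rng.normal(400.0, 20.0, size=5000), 0.0, T)
knots, sketch = linear_spline_sketch(timestamps, T, K)
```

Two properties are easy to check with this basis: the coefficients sum to the photon count, and `sketch @ knots / len(timestamps)` equals the sample mean of the time stamps, since linear interpolation reproduces linear functions exactly. The stored statistic has `K` numbers regardless of how many photons arrive or how fine the time-stamp resolution is.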

Adaptive Kernel Kalman Filter

Mar 15, 2022

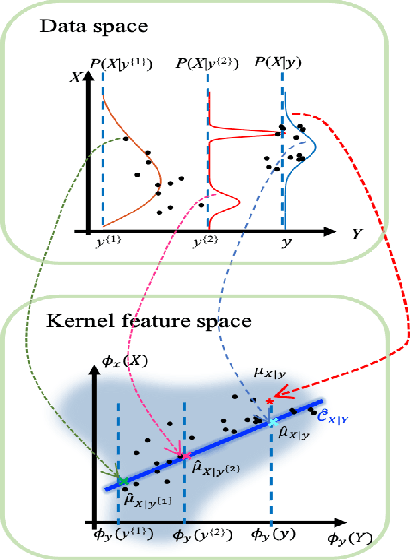

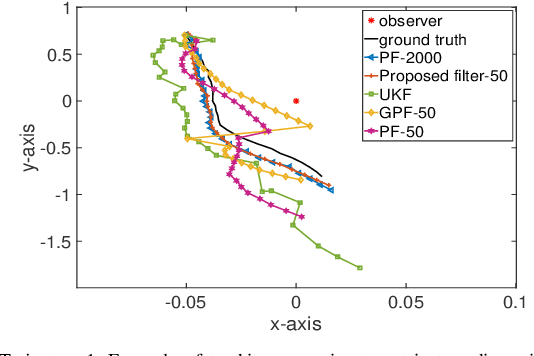

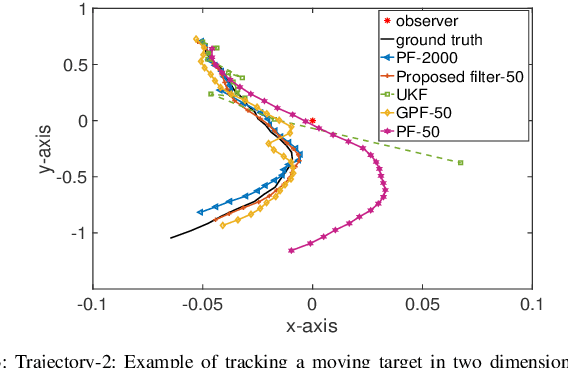

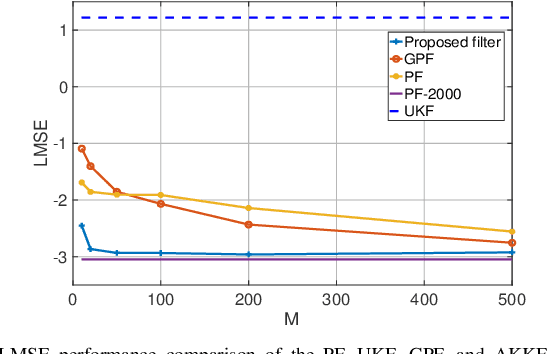

Abstract: Sequential Bayesian filters in non-linear dynamic systems require the recursive estimation of the predictive and posterior distributions. This paper introduces a Bayesian filter called the adaptive kernel Kalman filter (AKKF). With this filter, the arbitrary predictive and posterior distributions of hidden states are approximated using the empirical kernel mean embeddings (KMEs) in reproducing kernel Hilbert spaces (RKHSs). In parallel with the KMEs, particles in the data space are used to capture the properties of the dynamical system model. Specifically, particles are generated and updated in the data space, while the corresponding kernel weight mean vector and covariance matrix associated with the feature mappings of the particles are predicted and updated in the RKHSs based on the kernel Kalman rule (KKR). Simulation results confirm the improved performance of our approach with significantly reduced particle numbers in comparison with the unscented Kalman filter (UKF), particle filter (PF) and Gaussian particle filter (GPF). For example, compared with the GPF, the proposed approach provides around 5% logarithmic mean square error (LMSE) tracking performance improvement in the bearing-only tracking (BOT) system when using 50 particles.

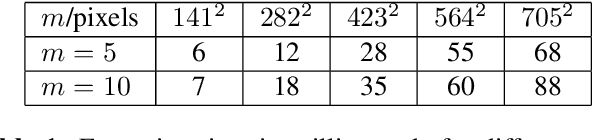

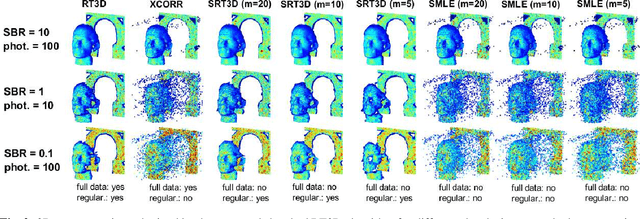

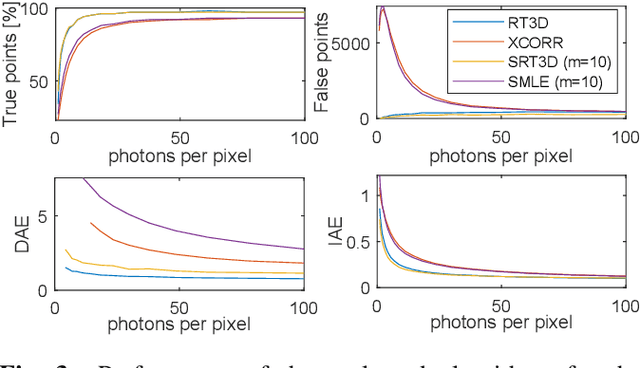

Sketched RT3D: How to reconstruct billions of photons per second

Mar 02, 2022

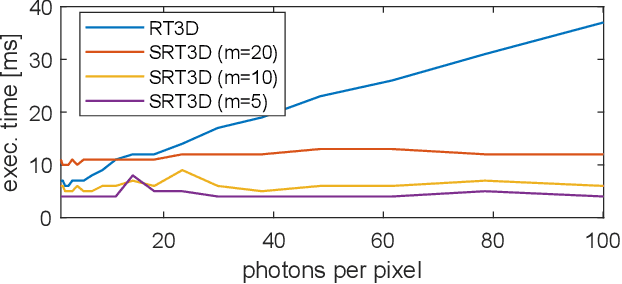

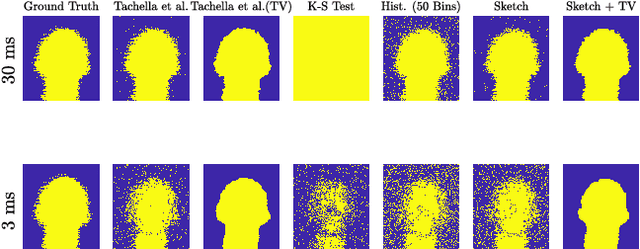

Abstract: Single-photon light detection and ranging (lidar) captures depth and intensity information of a 3D scene. Reconstructing a scene from observed photons is a challenging task due to spurious detections associated with background illumination sources. To tackle this problem, there is a plethora of 3D reconstruction algorithms which exploit the spatial regularity of natural scenes to provide stable reconstructions. However, most existing algorithms have computational and memory complexity proportional to the number of recorded photons. This complexity hinders their real-time deployment on modern lidar arrays, which acquire billions of photons per second. Leveraging a recent lidar sketching framework, we show that it is possible to modify existing reconstruction algorithms such that they only require a small sketch of the photon information. In particular, we propose a sketched version of a recent state-of-the-art algorithm which uses point cloud denoisers to provide spatially regularized reconstructions. A series of experiments performed on real lidar datasets demonstrates a significant reduction of execution time and memory requirements, while achieving the same reconstruction performance as in the full data case.

Deep Unrolling for Magnetic Resonance Fingerprinting

Jan 25, 2022

Abstract: Magnetic Resonance Fingerprinting (MRF) has emerged as a promising quantitative MR imaging approach. Deep learning methods have been proposed for MRF and have demonstrated improved performance over classical compressed sensing algorithms. However, many of these end-to-end models are physics-free, while consistency of the predictions with respect to the physical forward model is crucial for reliably solving inverse problems. To address this, [1] recently proposed a proximal gradient descent framework that directly incorporates the forward acquisition and Bloch dynamic models within an unrolled learning mechanism. However, [1] only evaluated the unrolled model on synthetic data using Cartesian sampling trajectories. In this paper, as a complement to [1], we investigate other choices of encoders to build the proximal neural network, and evaluate the deep unrolling algorithm on real accelerated MRF scans with non-Cartesian k-space sampling trajectories.

Robust Equivariant Imaging: a fully unsupervised framework for learning to image from noisy and partial measurements

Nov 25, 2021

Abstract: Deep networks provide state-of-the-art performance in multiple imaging inverse problems ranging from medical imaging to computational photography. However, most existing networks are trained with clean signals which are often hard or impossible to obtain. Equivariant imaging (EI) is a recent self-supervised learning framework that exploits the group invariance present in signal distributions to learn a reconstruction function from partial measurement data alone. While EI results are impressive, its performance degrades with increasing noise. In this paper, we propose a Robust Equivariant Imaging (REI) framework which can learn to image from noisy partial measurements alone. The proposed method uses Stein's Unbiased Risk Estimator (SURE) to obtain a fully unsupervised training loss that is robust to noise. We show that REI leads to considerable performance gains on linear and nonlinear inverse problems, thereby paving the way for robust unsupervised imaging with deep networks. Code will be available at: https://github.com/edongdongchen/REI.
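As a small illustration of why SURE yields a loss that needs no clean signal, the following NumPy sketch evaluates SURE for plain Gaussian denoising, $\mathrm{SURE} = \frac{1}{n}\|f(y)-y\|^2 - \sigma^2 + \frac{2\sigma^2}{n}\,\mathrm{div} f(y)$, with the divergence approximated by a single Monte Carlo probe. This is the identity-operator case only, not the REI training loss itself; the soft-threshold denoiser, the function names, and all parameter values are assumptions chosen for the example.

```python
import numpy as np

def soft_threshold(y, lam):
    """Simple stand-in denoiser: shrink every entry towards zero by lam."""
    return np.sign(y) * np.maximum(np.abs(y) - lam, 0.0)

def mc_sure(f, y, sigma, rng, tau=1e-3):
    """SURE for y = x + N(0, sigma^2 I), with a Monte Carlo divergence estimate."""
    n = y.size
    b = rng.standard_normal(n)                  # random probe direction
    fy = f(y)
    div = b @ (f(y + tau * b) - fy) / tau       # approximates the divergence of f at y
    return np.sum((fy - y) ** 2) / n - sigma**2 + 2 * sigma**2 * div / n

rng = np.random.default_rng(0)
n, sigma = 200_000, 1.0
x = np.where(rng.random(n) < 0.1, 3.0 * rng.standard_normal(n), 0.0)  # sparse clean signal
y = x + sigma * rng.standard_normal(n)                                # noisy observation

denoise = lambda v: soft_threshold(v, 1.5)
sure = mc_sure(denoise, y, sigma, rng)          # uses y and sigma only
true_mse = np.mean((denoise(y) - x) ** 2)       # needs the clean x, shown for comparison
```

For large `n` the two quantities agree closely even though `mc_sure` never sees `x`, which is the property that makes SURE usable as an unsupervised training objective.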

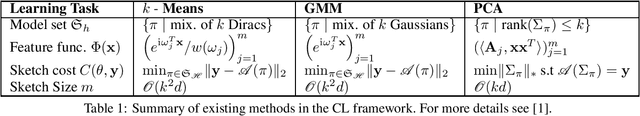

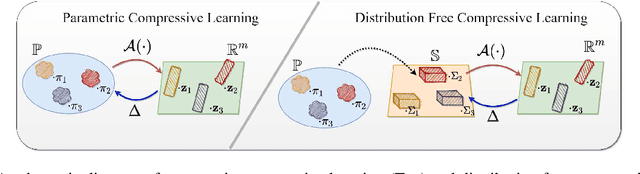

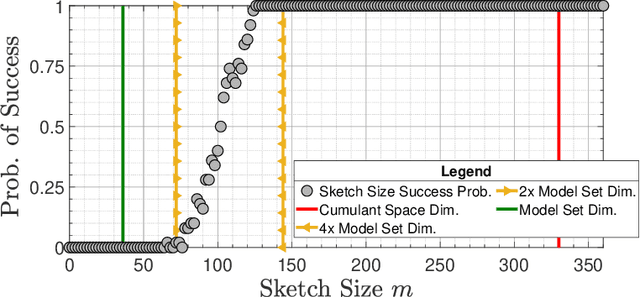

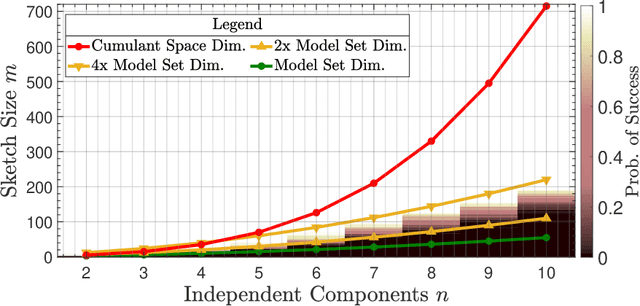

Compressive Independent Component Analysis: Theory and Algorithms

Oct 15, 2021

Abstract: Compressive learning forms the exciting intersection between compressed sensing and statistical learning, where one exploits forms of sparsity and structure to reduce the memory and/or computational complexity of the learning task. In this paper, we look at the independent component analysis (ICA) model through the compressive learning lens. In particular, we show that solutions to the cumulant based ICA model have particular structure that induces a low dimensional model set that resides in the cumulant tensor space. By showing that a restricted isometry property holds for random cumulants, e.g. Gaussian ensembles, we prove the existence of a compressive ICA scheme. Thereafter, we propose two algorithms for compressive ICA, an iterative projection gradient (IPG) algorithm and an alternating steepest descent (ASD) algorithm, where the order of compression asserted from the restricted isometry property is realised through empirical results. We provide analysis of the CICA algorithms, including the effects of finite samples. The effects of compression are characterised by a trade-off between the sketch size and the statistical efficiency of the ICA estimates. By considering synthetic and real datasets, we show the substantial memory gains achieved over well-known ICA algorithms by using one of the proposed CICA algorithms. Finally, we conclude the paper with open problems, including interesting challenges from the emerging field of compressive learning.

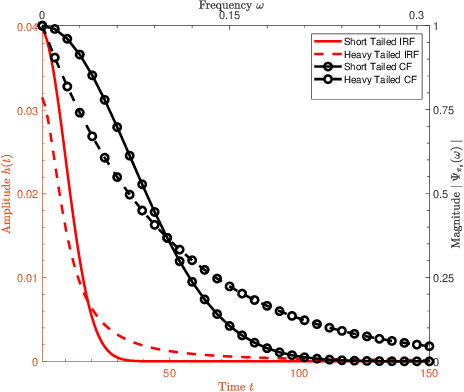

Surface Detection for Sketched Single Photon Lidar

May 14, 2021

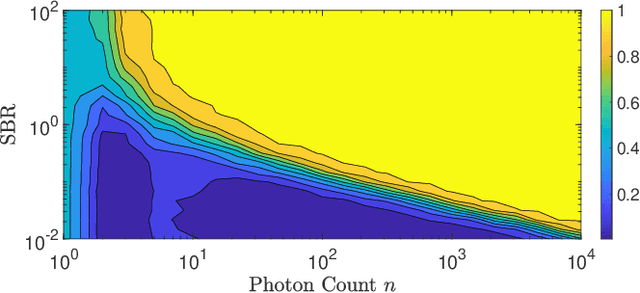

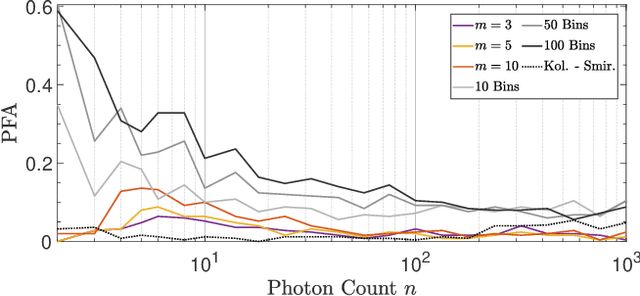

Abstract: Single-photon lidar devices are able to collect an ever-increasing number of time-stamped photons in small time periods due to increasingly large arrays, generating a memory and computational bottleneck on the data processing side. Recently, a sketching technique was introduced to overcome this bottleneck by compressing the amount of information to be stored and processed. The size of the sketch scales with the number of underlying parameters of the time delay distribution and not, fundamentally, with either the number of detected photons or the time-stamp resolution. In this paper, we propose a detection algorithm based solely on a small sketch that determines whether there are surfaces or objects in the scene. If a surface is detected, the depth and intensity of a single object can be computed in closed form directly from the sketch. The computational load of the proposed detection algorithm depends solely on the size of the sketch, in contrast to previous algorithms that depend at least linearly on the number of collected photons or histogram bins, paving the way for fast, accurate and memory-efficient lidar estimation. Our experiments demonstrate the memory and statistical efficiency of the proposed algorithm on both synthetic and real lidar datasets.

Equivariant Imaging: Learning Beyond the Range Space

Mar 26, 2021

Abstract: In various imaging problems, we only have access to compressed measurements of the underlying signals, hindering most learning-based strategies which usually require pairs of signals and associated measurements for training. Learning only from compressed measurements is impossible in general, as the compressed observations do not contain information outside the range of the forward sensing operator. We propose a new end-to-end self-supervised framework that overcomes this limitation by exploiting the equivariances present in natural signals. Our proposed learning strategy performs as well as fully supervised methods. Experiments demonstrate the potential of this framework on inverse problems including sparse-view X-ray computed tomography on real clinical data and image inpainting on natural images. Code will be released.

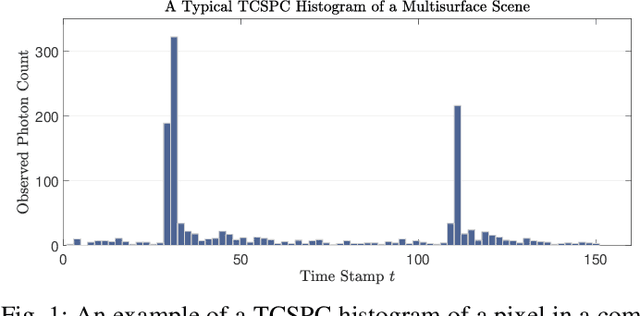

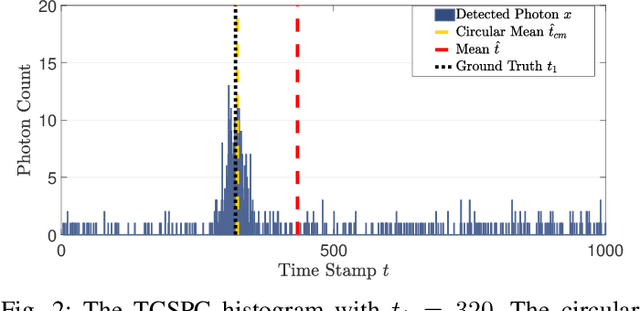

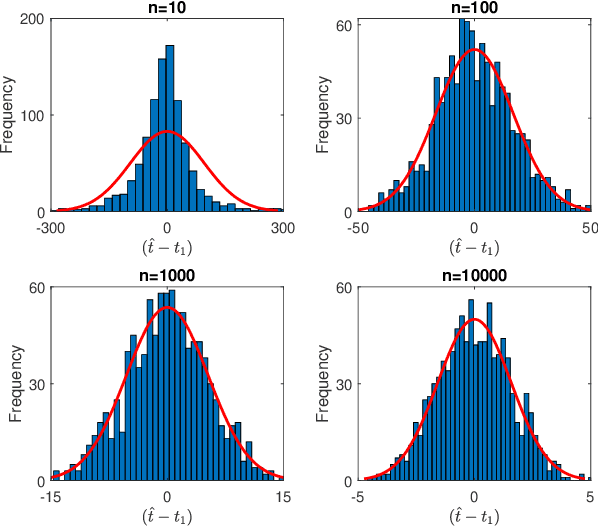

A Sketching Framework for Reduced Data Transfer in Photon Counting Lidar

Feb 17, 2021

Abstract: Single-photon lidar has become a prominent tool for depth imaging in recent years. At the core of the technique, the depth of a target is measured by constructing a histogram of time delays between emitted light pulses and detected photon arrivals. A major data processing bottleneck arises on the device when either the number of photons per pixel is large or the resolution of the time stamp is fine, as both the space requirement and the complexity of the image reconstruction algorithms scale with these parameters. We solve this limiting bottleneck of existing lidar techniques by sampling the characteristic function of the time-of-flight (ToF) model to build a compressive statistic, a so-called sketch of the time delay distribution, which is sufficient to infer the spatial distance and intensity of the object. The size of the sketch scales with the degrees of freedom of the ToF model (number of objects) and not, fundamentally, with the number of photons or the time stamp resolution. Moreover, the sketch is highly amenable to on-chip online processing. We show theoretically that the loss of information due to compression is controlled and that the mean squared error of the inference quickly converges towards the optimal Cramér-Rao bound (i.e. no loss of information) for modest sketch sizes. The proposed compressed single-photon lidar framework is tested and evaluated on real-life datasets of complex scenes, where it is shown that a compression rate of up to 1/150 is achievable in practice without sacrificing the overall resolution of the reconstructed image.
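The idea of sampling the characteristic function can be sketched in a few lines of NumPy. The example below assumes a timing window of length `T`, sketch frequencies $2\pi k/T$ for $k = 1, \dots, m$, a single surface with a symmetric pulse, and uniform background photons. The phase-based depth estimate is a simple illustrative estimator under those assumptions, not necessarily the inference procedure used in the paper; the function names and all numerical values are hypothetical.

```python
import numpy as np

def tof_sketch(timestamps, T, m):
    """Empirical characteristic function of the time stamps at 2*pi*k/T, k = 1..m.

    The result is a mean of per-photon terms, so it can be accumulated online.
    """
    k = np.arange(1, m + 1)
    return np.exp(2j * np.pi * np.outer(k, timestamps) / T).mean(axis=1)

def delay_from_sketch(z, T):
    """Single-surface time-delay estimate from the phase of the first sketch entry."""
    return (np.angle(z[0]) % (2 * np.pi)) * T / (2 * np.pi)

rng = np.random.default_rng(0)
T, t0 = 1000.0, 320.0                                # window length and true delay (bins)
signal = rng.normal(t0, 5.0, size=20_000) % T        # photons reflected by the surface
background = rng.uniform(0.0, T, size=5_000)         # ambient photons
timestamps = np.concatenate([signal, background])

z = tof_sketch(timestamps, T, m=4)                   # 4 complex numbers for 25,000 photons
t_hat = delay_from_sketch(z, T)
```

A uniform background contributes zero in expectation at these frequencies, so under the stated assumptions it lowers the magnitude of the sketch entries without shifting their phase, and the estimate stays close to `t0`.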
