Michel F. Valstar

Applying Cooperative Machine Learning to Speed Up the Annotation of Social Signals in Large Multi-modal Corpora

Feb 07, 2018

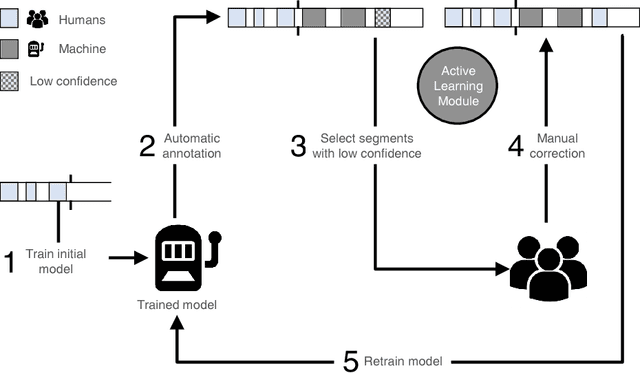

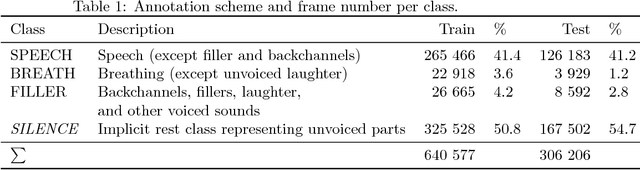

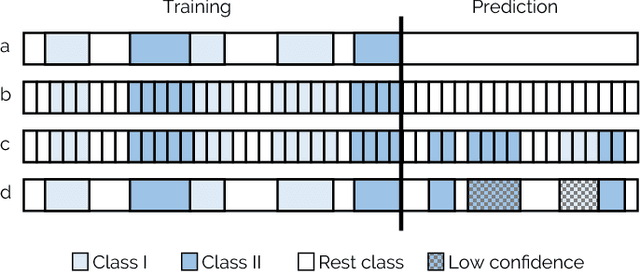

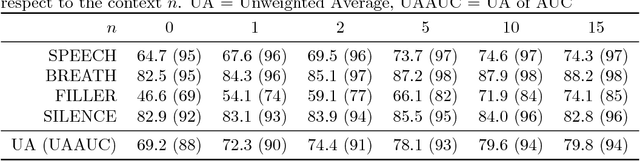

Abstract: Scientific disciplines such as Behavioural Psychology, Anthropology and, more recently, Social Signal Processing are concerned with the systematic exploration of human behaviour. A typical workflow includes the manual annotation (also called coding) of social signals in multi-modal corpora of considerable size. For the annotators involved, this is an exhausting and time-consuming task. In this article we present a novel method, together with the tools, to speed up the coding procedure. To this end, we propose and evaluate the use of Cooperative Machine Learning (CML) techniques to reduce manual labelling effort by combining computational power with human intelligence. The proposed CML strategy starts with a small number of labelled instances and concentrates on predicting local parts first. Afterwards, a session-independent classification model is created to finish the remaining parts of the database. Confidence values are computed to guide the manual inspection and correction of the predictions. To put the proposed approach into practice we introduce NOVA, an open-source tool for collaborative and machine-aided annotation. In particular, it gives labellers immediate access to CML strategies and directly provides visual feedback on the results. Our experiments show that the proposed method has the potential to significantly reduce human labelling effort.
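The confidence-guided step described in the abstract can be illustrated with a small sketch. This is not NOVA's API or the authors' classifier: the nearest-centroid model, the margin-based confidence score, the one-dimensional features, and all names (`train_centroids`, `predict_with_confidence`, `triage`, the `threshold` value) are assumptions chosen only to keep the example short. It shows the general idea of training on a few labelled instances, predicting the rest, and routing low-confidence predictions to a human for correction.

```python
from statistics import mean


def train_centroids(samples):
    """Fit a toy nearest-centroid model from (feature, label) pairs."""
    by_label = {}
    for x, y in samples:
        by_label.setdefault(y, []).append(x)
    return {y: mean(xs) for y, xs in by_label.items()}


def predict_with_confidence(centroids, x):
    """Return (label, confidence); needs at least two classes.

    Confidence is the normalised margin between the two closest
    centroids: 1.0 means unambiguous, 0.0 means a tie.
    """
    dists = sorted((abs(x - c), y) for y, c in centroids.items())
    (d1, best), (d2, _) = dists[0], dists[1]
    total = d1 + d2
    conf = 1.0 if total == 0 else (d2 - d1) / total
    return best, conf


def triage(centroids, unlabelled, threshold=0.5):
    """Split predictions into auto-accepted and needs-manual-review."""
    accepted, review = [], []
    for x in unlabelled:
        label, conf = predict_with_confidence(centroids, x)
        target = accepted if conf >= threshold else review
        target.append((x, label, conf))
    return accepted, review


# A few manually labelled instances (hypothetical feature values).
seed = [(0.0, "neutral"), (0.2, "neutral"), (1.0, "laugh"), (1.2, "laugh")]
model = train_centroids(seed)
accepted, review = triage(model, [0.05, 0.6, 1.15])
print("accepted:", [(x, label) for x, label, _ in accepted])
print("to review:", [x for x, _, _ in review])
```

In a real workflow the corrected items from the review list would be added to the labelled set and the model retrained, so that each round leaves fewer instances for manual inspection.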

FERA 2017 - Addressing Head Pose in the Third Facial Expression Recognition and Analysis Challenge

Feb 14, 2017

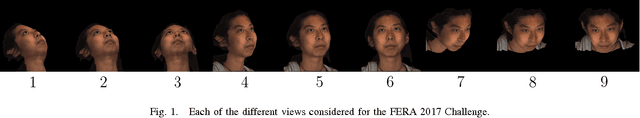

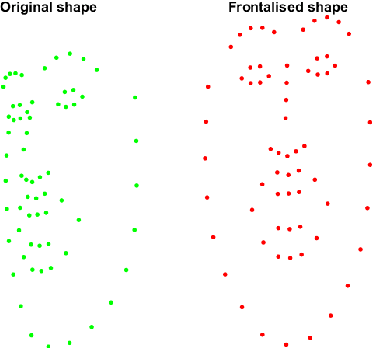

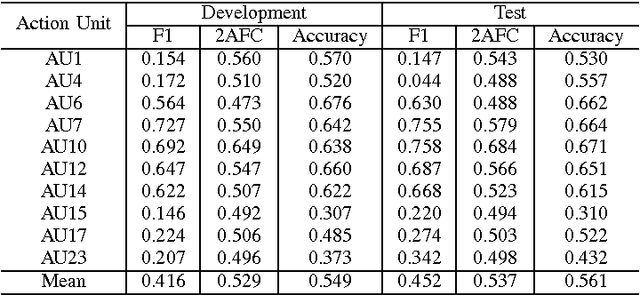

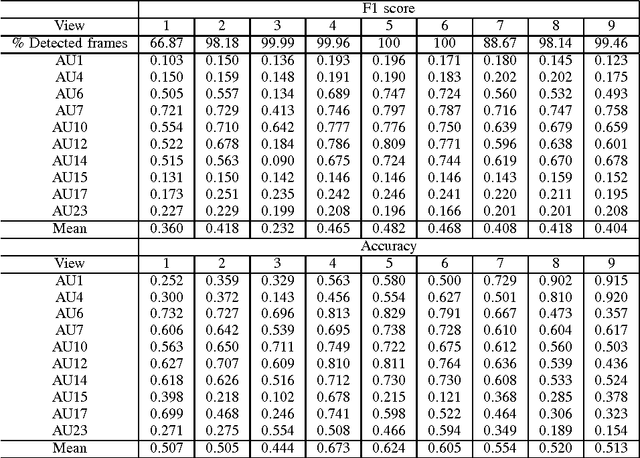

Abstract: The field of Automatic Facial Expression Analysis has grown rapidly in recent years. However, despite progress in new approaches as well as benchmarking efforts, most evaluations still focus on either posed expressions, near-frontal recordings, or both. This makes it hard to tell how existing expression recognition approaches perform under conditions where faces appear in a wide range of poses (or camera views), displaying ecologically valid expressions. The main obstacle to assessing this is the limited availability of suitable data, and the challenge proposed here addresses this limitation. The FG 2017 Facial Expression Recognition and Analysis challenge (FERA 2017) extends FERA 2015 to the estimation of Action Unit (AU) occurrence and intensity under different camera views. In this paper we present the third challenge in automatic recognition of facial expressions, to be held in conjunction with the 12th IEEE conference on Face and Gesture Recognition, May 2017, in Washington, United States. Two sub-challenges are defined: the detection of AU occurrence, and the estimation of AU intensity. In this work we outline the evaluation protocol, the data used, and the results of a baseline method for both sub-challenges.
