Michael Villarreal

Analyzing Emissions and Energy Efficiency in Mixed Traffic Control at Unsignalized Intersections

Nov 20, 2023

Abstract: Greenhouse gas emissions have risen dramatically since the early 1900s, with U.S. transportation generating 28% of U.S. emissions. As such, there is interest in reducing transportation-related emissions. Specifically, sustainability research has sprouted around signalized intersections, as intersections allow different streams of traffic to cross and change directions. Recent research has developed mixed traffic control eco-driving strategies at signalized intersections to decrease emissions. However, the inherent structure of a signalized intersection generates increased emissions by creating frequent acceleration/deceleration events, excessive idling from traffic congestion, and stop-and-go waves. Thus, we believe unsignalized intersections hold potential for further sustainability improvements. In this work, we provide an emissions analysis on unsignalized intersections with complex, real-world topologies and traffic demands where mixed traffic control strategies are employed by robot vehicles (RVs) to reduce waiting times and congestion. We find that, with an RV penetration rate of at least 10%, RVs reduce fuel consumption and NOx emissions by up to 27% and 28%, respectively, compared with signalized intersections. With at least 30% RVs, CO and HC emissions are reduced by up to 42% and 43%, respectively. Additionally, RVs can reduce emissions across the whole network despite only employing their strategies at the intersections.

Can ChatGPT Enable ITS? The Case of Mixed Traffic Control via Reinforcement Learning

Jun 13, 2023

Abstract: The surge in Reinforcement Learning (RL) applications in Intelligent Transportation Systems (ITS) has contributed to its growth as well as highlighted key challenges. However, defining objectives of RL agents in traffic control and management tasks, as well as aligning policies with these goals through an effective formulation of a Markov Decision Process (MDP), can be challenging and often requires domain experts in both RL and ITS. Recent advancements in Large Language Models (LLMs) such as GPT-4 highlight their broad general knowledge, reasoning capabilities, and commonsense priors across various domains. In this work, we conduct a large-scale user study involving 70 participants to investigate whether novices can leverage ChatGPT to solve complex mixed traffic control problems. Three environments are tested: ring road, bottleneck, and intersection. We find ChatGPT has mixed results. For intersection and bottleneck, ChatGPT increases the number of successful policies by 150% and 136%, respectively, compared to solely beginner capabilities, with some of them even outperforming experts. However, ChatGPT does not provide consistent improvements across all scenarios.

Efficient Quality-Diversity Optimization through Diverse Quality Species

Apr 14, 2023

Abstract: A prevalent limitation of optimizing over a single objective is that it can be misguided, becoming trapped in a local optimum. This can be rectified by Quality-Diversity (QD) algorithms, where a population of high-quality and diverse solutions to a problem is preferred. Most conventional QD approaches, for example, MAP-Elites, explicitly manage a behavioral archive where solutions are broken down into predefined niches. In this work, we show that a diverse population of solutions can be found without the limitation of needing an archive or defining the range of behaviors in advance. Instead, we break down solutions into independently evolving species and use unsupervised skill discovery to learn diverse, high-performing solutions. We show that this can be done through gradient-based mutations that take on an information-theoretic perspective of jointly maximizing mutual information and performance. We propose Diverse Quality Species (DQS) as an alternative to archive-based QD algorithms. We evaluate it over several simulated robotic environments and show that it can learn a diverse set of solutions from varying species. Furthermore, our results show that DQS is more sample-efficient and performant when compared to other QD algorithms. Relevant code and hyper-parameters are available at: https://github.com/rwickman/NEAT_RL.

Hybrid Traffic Control and Coordination from Pixels

Feb 17, 2023

Abstract: Traffic congestion is a persistent problem in our society. Existing methods for traffic control have proven futile in alleviating current congestion levels, leading researchers to explore ideas with robot vehicles given the increased emergence of vehicles with different levels of autonomy on our roads. This gives rise to hybrid traffic control, where robot vehicles regulate human-driven vehicles through reinforcement learning (RL). However, most existing studies use precise observations that involve global information, such as network throughput, as well as local information, such as vehicle positions and velocities. Obtaining this information requires updating existing road infrastructure with vast sensor networks and communication to potentially unwilling human drivers. We consider image observations as the alternative for hybrid traffic control via RL: 1) images are readily available through satellite imagery, in-car camera systems, and traffic monitoring systems; 2) images do not require a complete re-imagination of the observation space from network to network; and 3) images only require communication to equipment. In this work, we show that robot vehicles using image observations can achieve similar performance to using precise information on networks, including ring, figure eight, merge, bottleneck, and intersections. We also demonstrate increased performance (up to 26%) in certain cases on tested networks, despite only using local traffic information as opposed to global traffic information.

AutoJoin: Efficient Adversarial Training for Robust Maneuvering via Denoising Autoencoder and Joint Learning

May 22, 2022

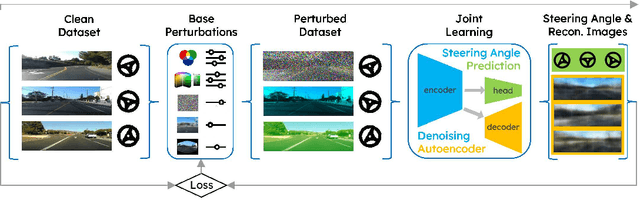

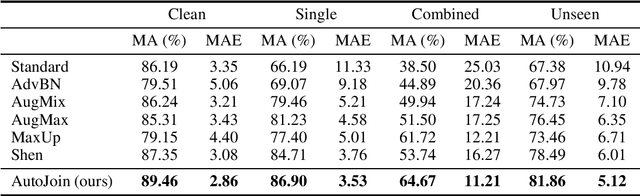

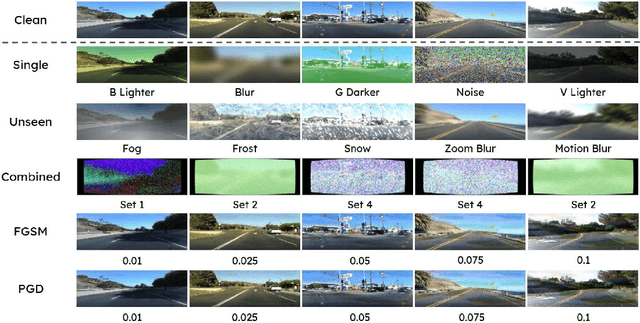

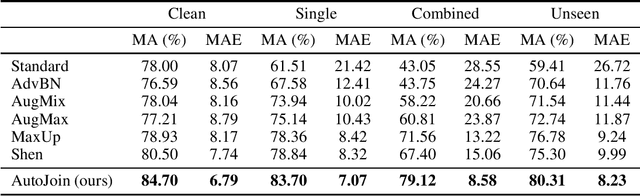

Abstract: As a result of increasingly adopted machine learning algorithms and ubiquitous sensors, many 'perception-to-control' systems have been deployed in various settings. For these systems to be trustworthy, we need to improve their robustness, with adversarial training being one approach. In this work, we propose a gradient-free adversarial training technique called AutoJoin. AutoJoin is a very simple yet effective and efficient approach to produce robust models for image-based autonomous maneuvering. Compared to other SOTA methods with testing on over 5M perturbed and clean images, AutoJoin achieves significant performance increases up to the 40% range under perturbed datasets while improving on clean performance for almost every dataset tested. In particular, AutoJoin can triple the clean performance improvement compared to the SOTA work by Shen et al. Regarding efficiency, AutoJoin demonstrates strong advantages over other SOTA techniques by saving up to 83% time per training epoch and 90% training data. The core idea of AutoJoin is to attach a decoder to the original regression model, creating a denoising autoencoder within the architecture. This allows the tasks 'steering' and 'denoising sensor input' to be jointly learnt and enables the two tasks to reinforce each other's performance.
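The joint learning of 'steering' and 'denoising sensor input' described in the abstract can be illustrated with a minimal sketch. It assumes the two tasks are combined as a weighted sum of a steering regression loss and an image reconstruction loss; the abstract does not state the actual loss, so this form, the function names `mse` and `joint_loss`, and the weights `w_steer` and `w_recon` are all hypothetical.

```python
def mse(pred, target):
    """Mean squared error between two equal-length sequences of floats."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def joint_loss(steer_pred, steer_true, recon, clean_image,
               w_steer=1.0, w_recon=1.0):
    """Hypothetical joint objective (an assumption, not the paper's stated loss).

    steer_pred / steer_true: predicted and ground-truth steering angles.
    recon: decoder output reconstructed from a perturbed input image.
    clean_image: the unperturbed image, flattened to a list of pixel values.
    """
    steering_term = mse(steer_pred, steer_true)   # regression task
    denoising_term = mse(recon, clean_image)      # denoising task
    return w_steer * steering_term + w_recon * denoising_term


# Toy usage with made-up numbers: one steering prediction, a 2-pixel "image".
loss = joint_loss([0.5], [0.0], [1.0, 1.0], [1.0, 0.0])
print(loss)
```

Because both terms depend on the same encoder, minimizing such a sum is one plausible way the two tasks could shape a shared representation; setting `w_recon=0.0` reduces the sketch to plain steering regression.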
