Ryan Wickman

NL2OR: Solve Complex Operations Research Problems Using Natural Language Inputs

Aug 14, 2024

Abstract: Operations research (OR) uses mathematical models to enhance decision-making, but developing these models requires expert knowledge and can be time-consuming. Automated mathematical programming (AMP) has emerged to simplify this process, but existing systems have limitations. This paper introduces a novel methodology that uses recent advances in Large Language Models (LLMs) to create and edit OR solutions from non-expert user queries expressed in natural language. This reduces both the need for domain expertise and the time needed to formulate a problem. The paper presents an end-to-end pipeline, named NL2OR, that generates solutions to OR problems from natural language input, and shares experimental results on several important OR problems.

Efficient Quality-Diversity Optimization through Diverse Quality Species

Apr 14, 2023

Abstract: A prevalent limitation of optimizing over a single objective is that it can be misguided, becoming trapped in a local optimum. This can be rectified by Quality-Diversity (QD) algorithms, where a population of high-quality and diverse solutions to a problem is preferred. Most conventional QD approaches, for example MAP-Elites, explicitly manage a behavioral archive where solutions are broken down into predefined niches. In this work, we show that a diverse population of solutions can be found without needing an archive or defining the range of behaviors in advance. Instead, we break down solutions into independently evolving species and use unsupervised skill discovery to learn diverse, high-performing solutions. We show that this can be done through gradient-based mutations that take an information-theoretic perspective of jointly maximizing mutual information and performance. We propose Diverse Quality Species (DQS) as an alternative to archive-based QD algorithms. We evaluate it over several simulated robotic environments and show that it can learn a diverse set of solutions from varying species. Furthermore, our results show that DQS is more sample-efficient and performant than other QD algorithms. Relevant code and hyper-parameters are available at: https://github.com/rwickman/NEAT_RL.

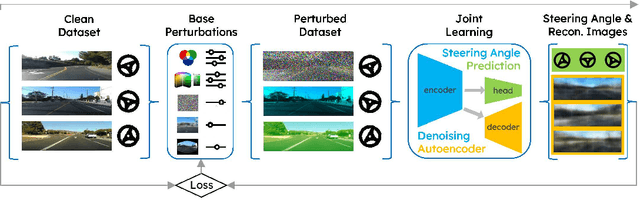

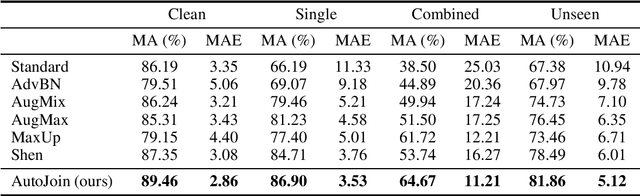

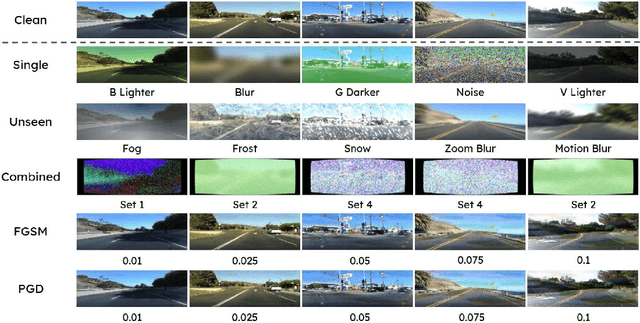

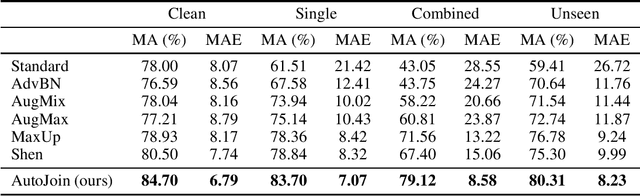

AutoJoin: Efficient Adversarial Training for Robust Maneuvering via Denoising Autoencoder and Joint Learning

May 22, 2022

Abstract: As a result of increasingly adopted machine learning algorithms and ubiquitous sensors, many 'perception-to-control' systems have been deployed in various settings. For these systems to be trustworthy, we need to improve their robustness, and adversarial training is one approach. In this work, we propose a gradient-free adversarial training technique called AutoJoin. AutoJoin is a very simple yet effective and efficient approach to produce robust models for image-based autonomous maneuvering. Compared to other SOTA methods, with testing on over 5M perturbed and clean images, AutoJoin achieves significant performance increases up to the 40% range under perturbed datasets while improving on clean performance for almost every dataset tested. In particular, AutoJoin can triple the clean performance improvement compared to the SOTA work by Shen et al. Regarding efficiency, AutoJoin demonstrates strong advantages over other SOTA techniques by saving up to 83% time per training epoch and 90% training data. The core idea of AutoJoin is to attach a decoder to the original regression model, creating a denoising autoencoder within the architecture. This allows the tasks 'steering' and 'denoising sensor input' to be jointly learnt and enables the two tasks to reinforce each other's performance.
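The joint-learning idea in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the Gaussian-noise perturbation, the tiny linear encoder, steering head, and decoder, the weighting factor `lam`, and all function names are assumptions chosen for illustration. It only shows how a steering regression loss and a denoising reconstruction loss might be combined into one objective over a shared encoding.

```python
import random

def perturb(image, sigma=0.1, rng=None):
    """Gradient-free perturbation (assumed here: Gaussian noise, clamped to [0, 1])."""
    rng = rng or random.Random(0)
    return [min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in image]

def matvec(rows, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in rows]

def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def joint_loss(steer_pred, steer_true, recon, clean_image, lam=1.0):
    """Steering regression loss plus a weighted denoising reconstruction loss."""
    regression = (steer_pred - steer_true) ** 2
    denoising = mse(recon, clean_image)
    return regression + lam * denoising

# Hypothetical toy model: 4-pixel "image", 2-dimensional shared encoding.
w_enc = [[0.5, 0.5, 0.0, 0.0], [0.0, 0.0, 0.5, 0.5]]      # shared encoder
w_head = [1.0, -1.0]                                       # steering head
w_dec = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]   # decoder attachment

clean = [0.2, 0.4, 0.6, 0.8]
noisy = perturb(clean, sigma=0.1)

z = matvec(w_enc, noisy)                        # both tasks share this encoding
steer_pred = sum(w * v for w, v in zip(w_head, z))
recon = matvec(w_dec, z)                        # decoder tries to recover the clean image

loss = joint_loss(steer_pred, steer_true=-0.4, recon=recon, clean_image=clean, lam=0.5)
print(f"joint loss: {loss:.4f}")
```

Because both loss terms depend on the same encoding `z`, a gradient step on the combined loss would update the shared encoder for both tasks at once, which is one plausible reading of how the two tasks could reinforce each other.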

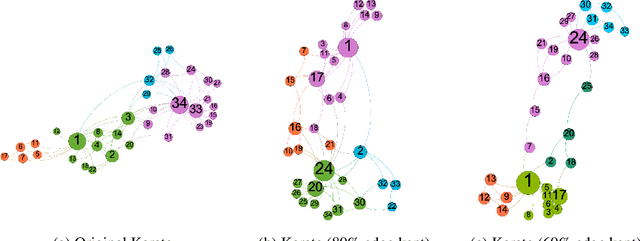

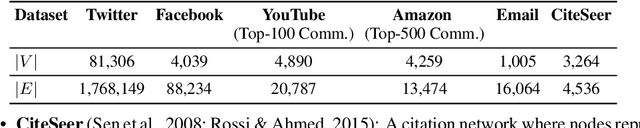

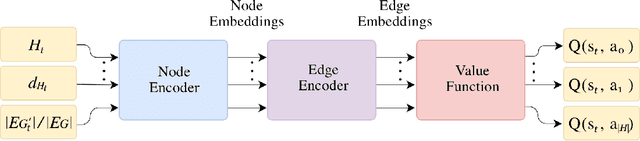

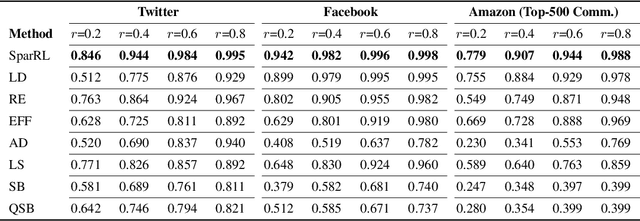

SparRL: Graph Sparsification via Deep Reinforcement Learning

Dec 02, 2021

Abstract: Graph sparsification concerns data reduction where an edge-reduced graph of a similar structure is preferred. Existing methods are mostly sampling-based, which in general introduce high computational complexity and lack flexibility for different reduction objectives. We present SparRL, the first general and effective reinforcement learning-based framework for graph sparsification. SparRL can easily adapt to different reduction goals and promises graph-size-independent complexity. Extensive experiments show that SparRL outperforms all prevailing sparsification methods in producing high-quality sparsified graphs with respect to a variety of objectives.
