Michael Taylor

ADD: Physics-Based Motion Imitation with Adversarial Differential Discriminators

May 08, 2025

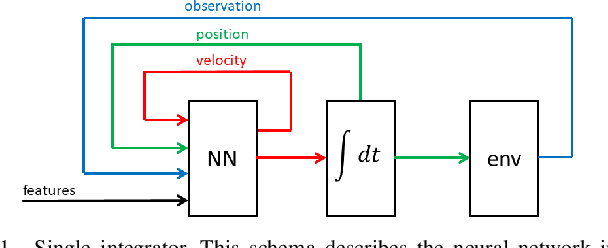

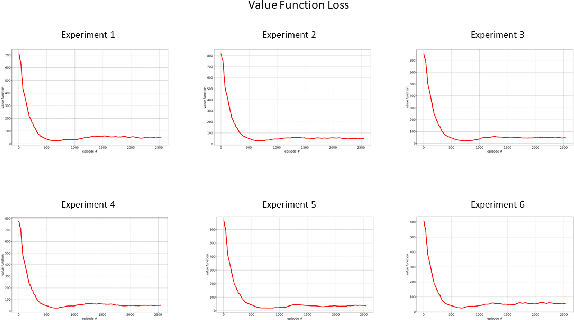

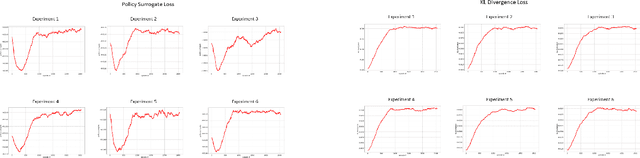

Abstract: Multi-objective optimization problems, which require the simultaneous optimization of multiple terms, are prevalent across numerous applications. Existing multi-objective optimization methods often rely on manually tuned aggregation functions to formulate a joint optimization target. The performance of such hand-tuned methods is heavily dependent on careful weight selection, a time-consuming and laborious process. These limitations also arise in the setting of reinforcement-learning-based motion tracking for physically simulated characters, where intricately crafted reward functions are typically used to achieve high-fidelity results. Such solutions not only require domain expertise and significant manual adjustment, but also limit the applicability of the resulting reward function across diverse skills. To bridge this gap, we present a novel adversarial multi-objective optimization technique that is broadly applicable to a range of multi-objective optimization problems, including motion tracking. The proposed adversarial differential discriminator receives a single positive sample, yet is still effective at guiding the optimization process. We demonstrate that our technique can enable characters to closely replicate a variety of acrobatic and agile behaviors, achieving comparable quality to state-of-the-art motion-tracking methods, without relying on manually tuned reward functions. Results are best visualized through https://youtu.be/rz8BYCE9E2w.
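
The contrast drawn in this abstract can be illustrated with a small sketch. It is not the authors' implementation. It assumes the discriminator scores a vector of per-objective differences between reference and simulated motion, and that the single positive sample is the all-zero difference vector; the abstract states neither detail. The squared-difference features, the logistic model, and all function names are hypothetical choices made to keep the example short.

```python
import math

def weighted_sum_reward(errors, weights):
    """Hand-tuned aggregation: one scalar reward from per-objective errors."""
    return math.exp(-sum(w * e for w, e in zip(weights, errors)))

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def score(delta, w, b):
    """Discriminator output in (0, 1) for a difference vector `delta`.
    Uses squared differences as features (an illustrative assumption)."""
    logit = b + sum(wi * d * d for wi, d in zip(w, delta))
    return sigmoid(logit)

def train_discriminator(negatives, steps=500, lr=0.1):
    """Logistic discriminator trained with one positive sample.
    `negatives` are difference vectors produced by an imperfect policy."""
    dim = len(negatives[0])
    w, b = [0.0] * dim, 0.0
    positive = [0.0] * dim  # assumed single positive sample: zero difference
    data = [(positive, 1.0)] + [(n, 0.0) for n in negatives]
    for _ in range(steps):
        for delta, label in data:
            grad = score(delta, w, b) - label  # gradient of BCE w.r.t. the logit
            w = [wi - lr * grad * d * d for wi, d in zip(w, delta)]
            b -= lr * grad
    return w, b

# Hypothetical difference vectors (reference minus simulated) for two objectives.
negatives = [[0.5, -0.3], [1.0, 0.8], [-0.7, 0.2]]
w, b = train_discriminator(negatives)
zero_score = score([0.0, 0.0], w, b)
```

In this sketch the learned score can stand in for the hand-weighted reward: it is highest when every objective's difference is zero, and the per-objective weights are learned rather than tuned by hand.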

Maximum Solar Energy Tracking Leverage High-DoF Robotics System with Deep Reinforcement Learning

Nov 21, 2024

Abstract: Solar trajectory monitoring is a pivotal challenge in solar energy systems, underpinning applications such as autonomous energy harvesting and environmental sensing. A prevalent failure mode in sustained solar tracking arises when the predictive algorithm diverges from the solar locus and anchors to extraneous celestial or terrestrial features. This phenomenon is attributable to an inadequate assimilation of solar-specific objectness attributes within the tracking paradigm. To mitigate this deficiency in existing methods, we introduce an objectness regularization framework that compels tracking points to remain within the boundaries of the solar entity. By encapsulating solar objectness indicators during the training phase, our approach obviates the need for explicit solar mask computation during operational deployment. Furthermore, we integrate our method with a high-DoF robot arm to improve its robustness and flexibility in different outdoor environments.
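
One way to picture a training-time objectness term is as a penalty on tracked points that leave an object mask. The sketch below is an assumption-laden illustration, not the method from the paper: the binary mask, the integer pixel coordinates, and the function name `objectness_penalty` are all hypothetical, and a real training loss would need a differentiable variant.

```python
def objectness_penalty(points, mask):
    """Fraction of tracked points that fall outside the object mask.

    points: list of (row, col) integer pixel coordinates (hypothetical format).
    mask:   2D list of 0/1 values, 1 marking the object (available only in training).
    """
    if not points:
        return 0.0
    outside = 0
    for r, c in points:
        in_bounds = 0 <= r < len(mask) and 0 <= c < len(mask[0])
        if not (in_bounds and mask[r][c] == 1):
            outside += 1
    return outside / len(points)

# Toy 3x3 mask with the object at the center pixel.
toy_mask = [[0, 0, 0],
            [0, 1, 0],
            [0, 0, 0]]
penalty = objectness_penalty([(1, 1), (0, 0)], toy_mask)
```

Because the penalty is applied only while training, a tracker trained this way would not need the mask at deployment, which matches the claim in the abstract.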

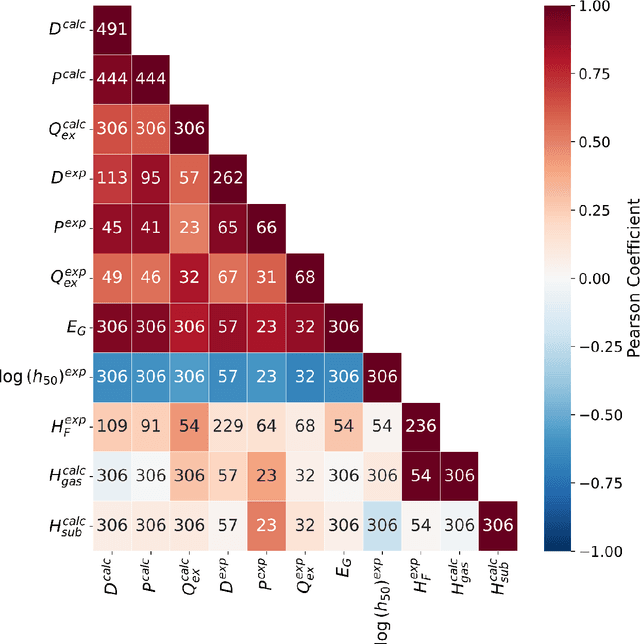

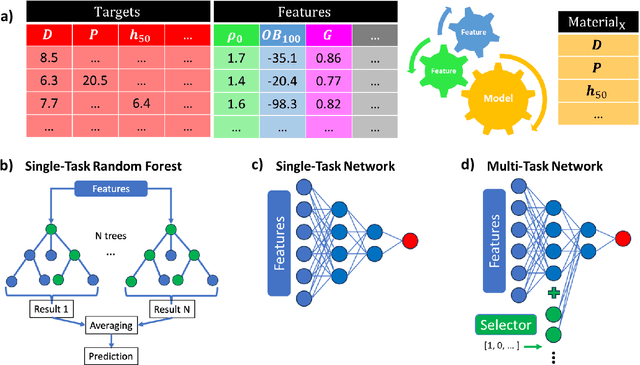

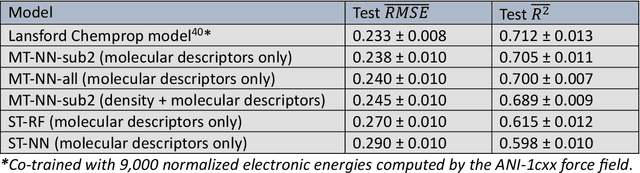

Multi-Task Multi-Fidelity Learning of Properties for Energetic Materials

Aug 21, 2024

Abstract: Data science and artificial intelligence are playing an increasingly important role in the physical sciences. Unfortunately, in the field of energetic materials, data scarcity limits the accuracy and even the applicability of ML tools. To address data limitations, we compiled multi-modal data: both experimental and computational results for several properties. We find that multi-task neural networks can learn from multi-modal data and outperform single-task models trained for specific properties. As expected, the improvement is more significant for data-scarce properties. These models are trained using descriptors built from simple molecular information and can be readily applied for large-scale materials screening to explore multiple properties simultaneously. This approach is widely applicable to fields outside energetic materials.
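
When each molecule has labels for only some properties, a multi-task model is commonly trained with a loss that skips the missing entries. The sketch below shows that idea under stated assumptions: the abstract does not say how missing labels are handled, and the function name and the use of `None` for a missing value are hypothetical.

```python
def masked_multitask_mse(predictions, targets):
    """Mean squared error over all (sample, task) pairs that have a label.

    predictions: list of rows, one predicted value per task.
    targets:     same shape, with None where a property was never measured
                 or computed for that sample.
    """
    total, count = 0.0, 0
    for pred_row, target_row in zip(predictions, targets):
        for pred, target in zip(pred_row, target_row):
            if target is None:  # no label for this task: contributes nothing
                continue
            total += (pred - target) ** 2
            count += 1
    return total / count if count else 0.0

# Two samples, two tasks; each sample is labeled for only one task.
loss = masked_multitask_mse([[1.0, 2.0], [3.0, 4.0]],
                            [[1.0, None], [None, 6.0]])
```

With a loss of this kind, a data-scarce property still benefits from the shared representation learned from the better-covered properties.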

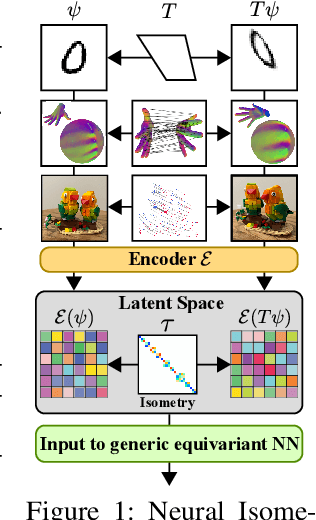

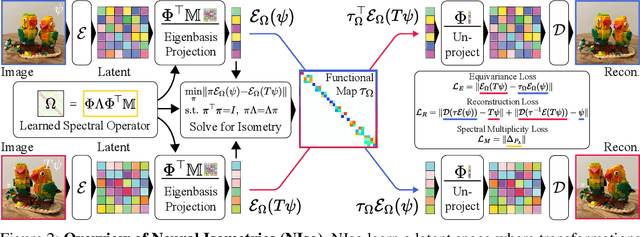

Neural Isometries: Taming Transformations for Equivariant ML

May 29, 2024

Abstract: Real-world geometry and 3D vision tasks are replete with challenging symmetries that defy tractable analytical expression. In this paper, we introduce Neural Isometries, an autoencoder framework which learns to map the observation space to a general-purpose latent space wherein encodings are related by isometries whenever their corresponding observations are geometrically related in world space. Specifically, we regularize the latent space such that maps between encodings preserve a learned inner product and commute with a learned functional operator, in the same manner as rigid-body transformations commute with the Laplacian. This approach forms an effective backbone for self-supervised representation learning, and we demonstrate that a simple off-the-shelf equivariant network operating in the pre-trained latent space can achieve results on par with meticulously-engineered, handcrafted networks designed to handle complex, nonlinear symmetries. Furthermore, isometric maps capture information about the respective transformations in world space, and we show that this allows us to regress camera poses directly from the coefficients of the maps between encodings of adjacent views of a scene.
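
The two conditions named in the abstract can be written as penalties on a linear map between encodings. The sketch below assumes the map is a matrix `tau`, the learned inner product is given by a matrix `mass`, and the learned operator is a matrix `op`. These names and the squared Frobenius-norm penalties are illustrative assumptions rather than the paper's formulation.

```python
def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(a):
    return [list(row) for row in zip(*a)]

def frobenius_sq(a, b):
    """Squared Frobenius norm of (a - b)."""
    return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb))

def isometry_penalty(tau, mass):
    """Zero when tau preserves the inner product <u, v> = u^T mass v."""
    return frobenius_sq(matmul(matmul(transpose(tau), mass), tau), mass)

def commutation_penalty(tau, op):
    """Zero when tau commutes with the operator: tau @ op == op @ tau."""
    return frobenius_sq(matmul(tau, op), matmul(op, tau))

identity = [[1.0, 0.0], [0.0, 1.0]]
rotation = [[0.0, -1.0], [1.0, 0.0]]    # 90-degree rotation
scaling = [[2.0, 0.0], [0.0, 1.0]]      # not an isometry
isotropic_op = [[2.0, 0.0], [0.0, 2.0]]
anisotropic_op = [[1.0, 0.0], [0.0, 3.0]]
```

In this toy setting the rotation satisfies both conditions with the identity inner product and the isotropic operator, the scaling map violates the isometry condition, and the rotation fails to commute with the anisotropic operator.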

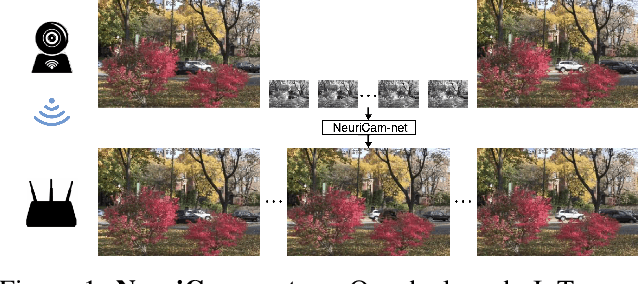

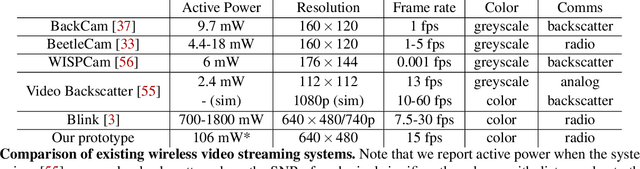

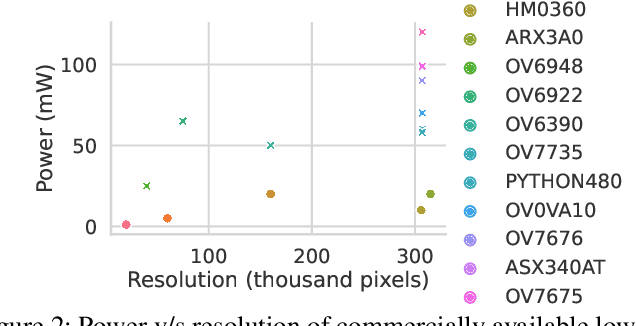

NeuriCam: Video Super-Resolution and Colorization Using Key Frames

Jul 25, 2022

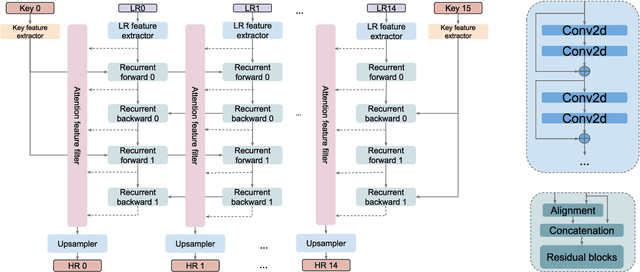

Abstract: We present NeuriCam, a system based on key-frame video super-resolution and colorization, to achieve low-power video capture from dual-mode IoT cameras. Our idea is to design a dual-mode camera system where the first mode is low power (1.1 mW) but only outputs gray-scale, low-resolution, noisy video, and the second mode consumes much higher power (100 mW) but outputs color and higher-resolution images. To reduce total energy consumption, we heavily duty-cycle the high-power mode to output an image only once every second. The data from this camera system is then wirelessly streamed to a nearby plugged-in gateway, where we run our real-time neural network decoder to reconstruct a higher-resolution color video. To achieve this, we introduce an attention feature filter mechanism that assigns different weights to different features, based on the correlation between the feature map and contents of the input frame at each spatial location. We design a wireless hardware prototype using off-the-shelf cameras and address practical issues including packet loss and perspective mismatch. Our evaluation shows that our dual-camera hardware reduces camera energy consumption while achieving an average gray-scale PSNR gain of 3.7 dB over prior video super-resolution methods and 5.6 dB RGB gain over existing color propagation methods. Open-source code: https://github.com/vb000/NeuriCam.
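
To put the reported PSNR gains in perspective, the sketch below uses the standard definition PSNR = 10·log10(MAX² / MSE). The function names, the flat list of pixel values, and the 8-bit peak value of 255 are assumptions for illustration; the abstract does not describe the evaluation code.

```python
import math

def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10.0 * math.log10(max_value ** 2 / mse)

def mse_ratio_for_gain(gain_db):
    """Factor by which MSE shrinks for a given PSNR gain at a fixed peak value."""
    return 10.0 ** (gain_db / 10.0)

gray_ratio = mse_ratio_for_gain(3.7)  # gray-scale gain from the abstract
rgb_ratio = mse_ratio_for_gain(5.6)   # RGB gain from the abstract
```

Under this definition, a 3.7 dB gain corresponds to a mean squared error roughly 2.3 times lower, and a 5.6 dB gain to one roughly 3.6 times lower, assuming the same peak value is used for both methods being compared.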

Learning Bipedal Robot Locomotion from Human Movement

May 26, 2021

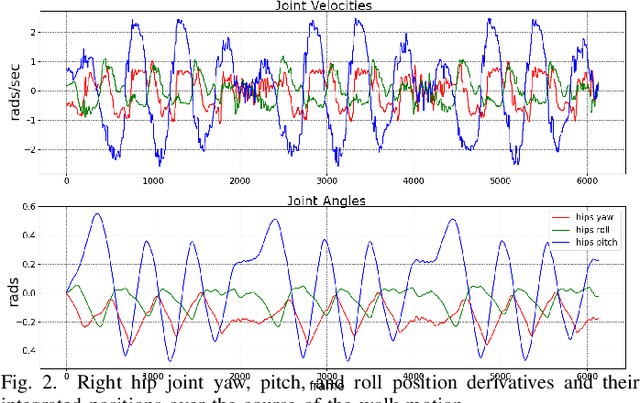

Abstract: Teaching an anthropomorphic robot from human example offers the opportunity to impart humanlike qualities on its movement. In this work we present a reinforcement-learning-based method for teaching a real-world bipedal robot to perform movements directly from human motion capture data. Our method seamlessly transitions from training in a simulation environment to executing on a physical robot without requiring any real-world training iterations or offline steps. To overcome the disparity in joint configurations between the robot and the motion capture actor, our method incorporates motion re-targeting into the training process. Domain randomization techniques are used to compensate for the differences between the simulated and physical systems. We demonstrate our method on an internally developed humanoid robot with movements ranging from a dynamic walk cycle to complex balancing and waving. Our controller preserves the style imparted by the motion capture data and exhibits graceful failure modes resulting in safe operation for the robot. This work was performed for research purposes only.
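
Domain randomization generally means resampling simulator parameters for each training episode so the learned controller does not overfit to one simulated system. The sketch below is a generic illustration only: the parameter names, the ranges, and the function `sample_sim_params` are hypothetical and do not come from the paper.

```python
import random

# Hypothetical simulator parameters and ranges, chosen only for illustration.
RANGES = {
    "ground_friction": (0.5, 1.2),
    "link_mass_scale": (0.8, 1.2),
    "motor_latency_s": (0.0, 0.02),
}

def sample_sim_params(rng, ranges=RANGES):
    """Draw one set of simulator parameters uniformly from the given ranges."""
    return {name: rng.uniform(low, high) for name, (low, high) in ranges.items()}

# One independent draw per training episode; seeded for reproducibility.
rng = random.Random(0)
episode_params = [sample_sim_params(rng) for _ in range(3)]
```

Each episode would then configure the simulator with its own draw before the rollout, so the policy sees a spread of plausible dynamics during training.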
