Michael Herty

Data/moment-driven approaches for fast predictive control of collective dynamics

Feb 23, 2024

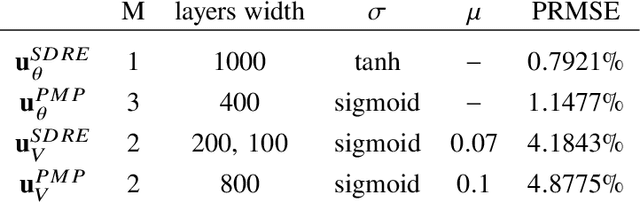

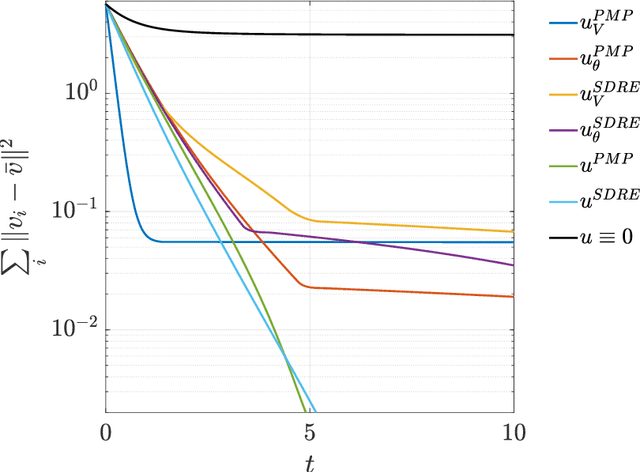

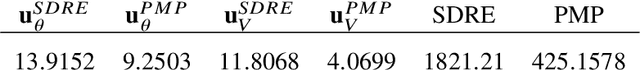

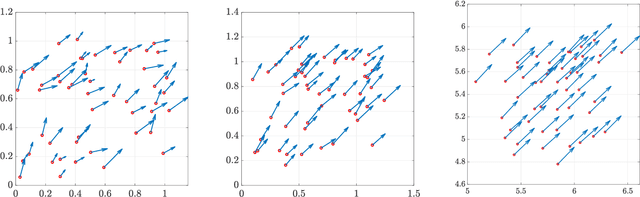

Abstract: Feedback control synthesis for large-scale particle systems is reviewed in the framework of model predictive control (MPC). The high-dimensional character of collective dynamics hampers the performance of traditional MPC algorithms based on fast online dynamic optimization at every time step. Two alternatives to MPC are proposed. First, the use of supervised learning techniques for the offline approximation of optimal feedback laws is discussed. Then, a procedure based on sequential linearization of the dynamics using macroscopic quantities of the particle ensemble is reviewed. Both approaches circumvent the online solution of optimal control problems, enabling fast, real-time feedback synthesis for large-scale particle systems. Numerical experiments assess the performance of the proposed algorithms.
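To make the idea of feedback built from macroscopic quantities concrete, here is a minimal toy sketch. It is not the algorithm of the paper. It assumes a one-dimensional first-order consensus model with a symmetric interaction kernel, so the interaction terms cancel in the ensemble mean and the mean `m` obeys `dm/dt = u`. For that scalar system with running cost `(m - target)^2 + gamma * u^2`, the infinite-horizon LQR feedback has the closed form `u = -(m - target) / sqrt(gamma)`, so no optimization problem is solved online. The function name `simulate`, the kernel `1 / (1 + r^2)`, and all parameter values are illustrative assumptions.

```python
import numpy as np


def simulate(n=200, target=1.0, gamma=0.1, dt=0.01, steps=500, seed=0):
    """Steer the mean of a particle ensemble using only its first moment.

    Toy model (an assumption, not taken from the paper):
        dx_i/dt = (1/n) * sum_j P(x_j - x_i) * (x_j - x_i) + u,
    with symmetric kernel P(r) = 1 / (1 + r^2) and a control u shared
    by all particles.
    """
    rng = np.random.default_rng(seed)
    x = rng.uniform(-2.0, 2.0, size=n)

    # Closed-form LQR gain for dm/dt = u with cost (m - target)^2 + gamma * u^2.
    gain = 1.0 / np.sqrt(gamma)

    for _ in range(steps):
        diff = x[None, :] - x[:, None]       # diff[i, j] = x_j - x_i
        weights = 1.0 / (1.0 + diff**2)      # symmetric interaction kernel
        interaction = (weights * diff).mean(axis=1)

        # Feedback depends on the ensemble only through its mean.
        u = -gain * (x.mean() - target)

        # Explicit Euler step for every particle.
        x = x + dt * (interaction + u)
    return x


final_state = simulate()
print("final mean:", final_state.mean())
print("final spread:", final_state.std())
```

The cost of evaluating the feedback is independent of any optimization horizon; only the pairwise interaction in the simulation itself scales with the number of particles.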

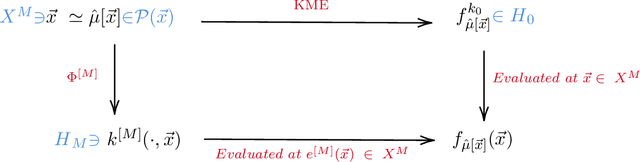

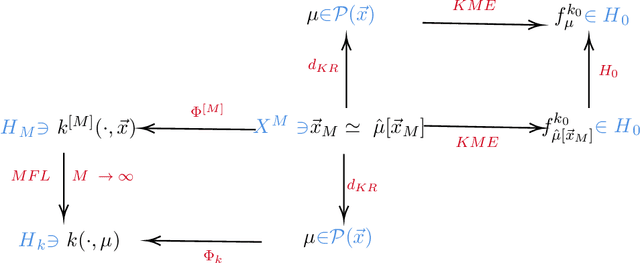

On kernel-based statistical learning in the mean field limit

Oct 27, 2023

Abstract: In many applications of machine learning, a large number of variables are considered. Motivated by machine learning of interacting particle systems, we consider the situation when the number of input variables goes to infinity. First, we continue the recent investigation of the mean field limit of kernels and their reproducing kernel Hilbert spaces, completing the existing theory. Next, we provide results relevant for approximation with such kernels in the mean field limit, including a representer theorem. Finally, we use these kernels in the context of statistical learning in the mean field limit, focusing on Support Vector Machines. In particular, we show mean field convergence of empirical and infinite-sample solutions as well as the convergence of the corresponding risks. On the one hand, our results establish rigorous mean field limits in the context of kernel methods, providing new theoretical tools and insights for large-scale problems. On the other hand, our setting corresponds to a new form of limit of learning problems, which seems not to have been investigated yet in the statistical learning theory literature.
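For orientation, the classical representer theorem for a fixed number of input variables can be stated as below. This is the standard textbook form, not the mean field version established in the paper. The notation is assumed here: $H_k$ is the reproducing kernel Hilbert space of the kernel $k$, $L$ is a convex loss, $\lambda > 0$ is the regularization parameter, and $(x_i, y_i)$, $i = 1, \dots, n$, are the training data.

```latex
\min_{f \in H_k} \; \frac{1}{n} \sum_{i=1}^{n} L\bigl(y_i, f(x_i)\bigr) + \lambda \, \|f\|_{H_k}^2
\quad \Longrightarrow \quad
f^\ast = \sum_{i=1}^{n} \alpha_i \, k(\cdot, x_i),
\qquad \alpha_1, \dots, \alpha_n \in \mathbb{R}.
```

The minimizer is therefore determined by $n$ coefficients, however large the input dimension is.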

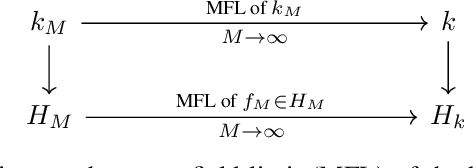

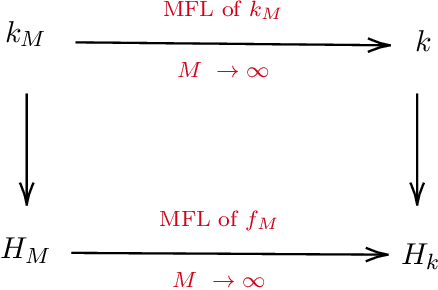

Reproducing kernel Hilbert spaces in the mean field limit

Mar 17, 2023

Abstract: Kernel methods, being supported by a well-developed theory and coming with efficient algorithms, are among the most popular and successful machine learning techniques. From a mathematical point of view, these methods rest on the concept of kernels and function spaces generated by kernels, so-called reproducing kernel Hilbert spaces. Motivated by recent developments of learning approaches in the context of interacting particle systems, we investigate kernel methods acting on data with many measurement variables. We show the rigorous mean field limit of kernels and provide a detailed analysis of the limiting reproducing kernel Hilbert space. Furthermore, several examples of kernels that allow a rigorous mean field limit are presented.
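One standard kernel that depends on its inputs only through their empirical measures is the double-sum kernel, `k_N(x, y) = (1/N^2) * sum_i sum_j kappa(x_i, y_j)`. The sketch below is an illustration and is not claimed to be one of the paper's own examples. It assumes a Gaussian base kernel `kappa` with bandwidth 1 and scalar inputs drawn independently from a standard normal distribution; in that case the limiting value as `N` grows is `1 / sqrt(3)`. The function name `double_sum_kernel` is hypothetical.

```python
import numpy as np


def double_sum_kernel(x, y, bandwidth=1.0):
    """Average a Gaussian base kernel over all pairs (x_i, y_j).

    The value is invariant under permutations of x and of y, so it
    depends on the inputs only through their empirical measures.
    """
    diff = x[:, None] - y[None, :]                       # all pairwise differences
    return np.exp(-diff**2 / (2.0 * bandwidth**2)).mean()


rng = np.random.default_rng(0)
limit = 1.0 / np.sqrt(3.0)  # value for two standard normal measures, bandwidth 1

for n in (10, 100, 1000):
    x = rng.standard_normal(n)
    y = rng.standard_normal(n)
    value = double_sum_kernel(x, y)
    print(f"N = {n:5d}  k_N = {value:.4f}  |k_N - limit| = {abs(value - limit):.4f}")
```

As `N` grows, the printed values settle near the limit, which is the behavior a mean field limit of kernels formalizes.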

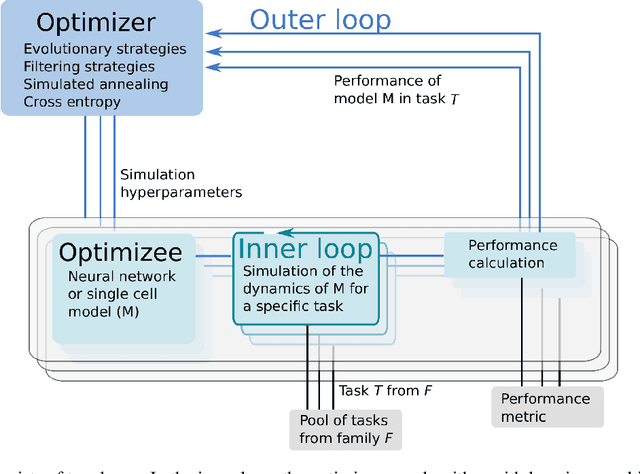

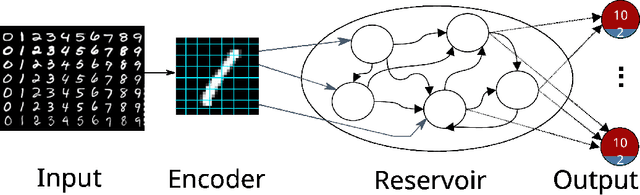

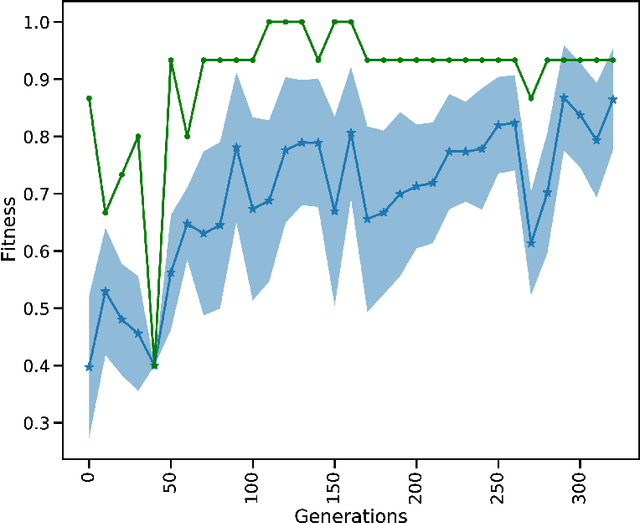

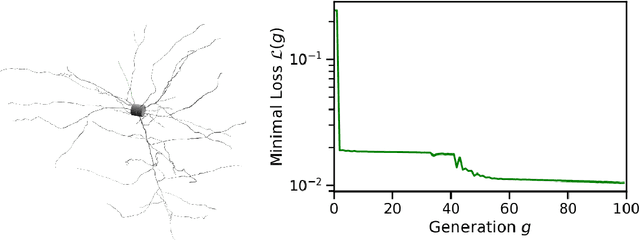

Exploring hyper-parameter spaces of neuroscience models on high performance computers with Learning to Learn

Feb 28, 2022

Abstract: Neuroscience models commonly have a high number of degrees of freedom, and only specific regions within the parameter space are able to produce dynamics of interest. This makes developing tools and strategies to efficiently find these regions highly important for advancing brain research. Exploring the high-dimensional parameter space using numerical simulations has been a frequently used technique in recent years in many areas of computational neuroscience. High performance computing (HPC) can today provide a powerful infrastructure to speed up explorations and increase our general understanding of the model's behavior in reasonable times.
