Michael Forsting

SALT: Introducing a Framework for Hierarchical Segmentations in Medical Imaging using Softmax for Arbitrary Label Trees

Jul 11, 2024

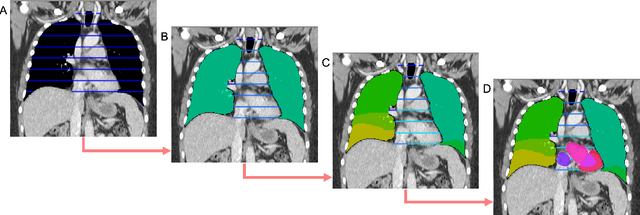

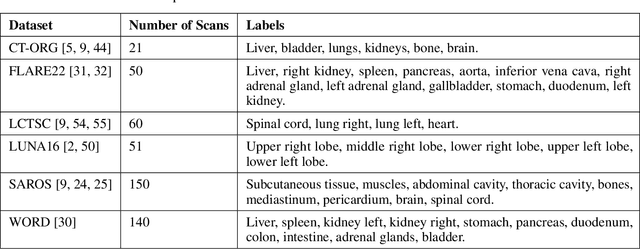

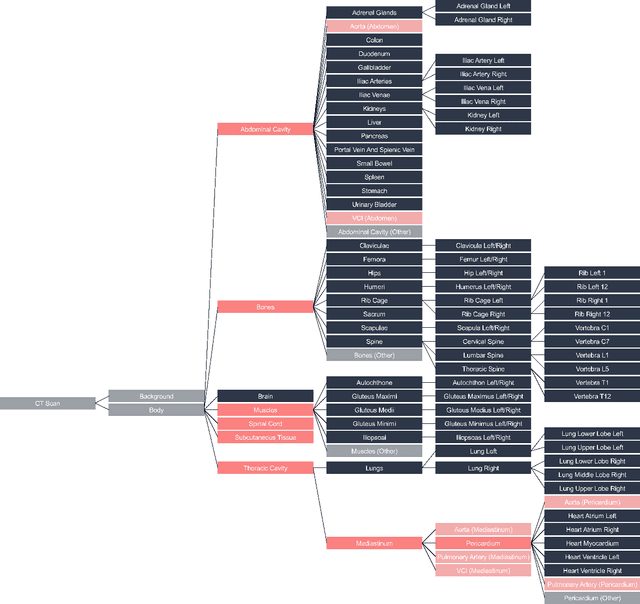

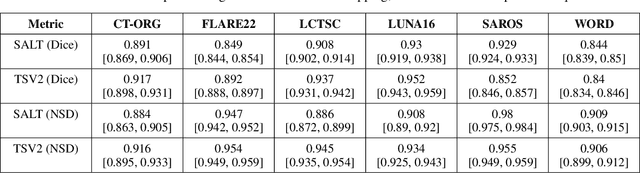

Abstract: Traditional segmentation networks treat anatomical structures as standalone elements, overlooking the intrinsic hierarchical connections among them. This study introduces Softmax for Arbitrary Label Trees (SALT), a novel approach designed to leverage the hierarchical relationships between labels, improving the efficiency and interpretability of the segmentations. The technique is applied to CT imaging and uses conditional probabilities to model the hierarchical structure of anatomical landmarks, such as the spine's division into lumbar, thoracic, and cervical regions and further into individual vertebrae. The model was developed using the SAROS dataset from The Cancer Imaging Archive (TCIA), comprising 900 body region segmentations from 883 patients. The dataset was further enhanced by generating additional segmentations with the TotalSegmentator, for a total of 113 labels. The model was trained on 600 scans, while validation and testing were conducted on 150 CT scans. Performance was assessed using the Dice score across various datasets, including SAROS, CT-ORG, FLARE22, LCTSC, LUNA16, and WORD. Among the evaluated datasets, SALT achieved its best results on the LUNA16 and SAROS datasets, with Dice scores of 0.93 and 0.929, respectively. The model demonstrated reliable accuracy across the other datasets, scoring 0.908 on LCTSC, 0.891 on CT-ORG, 0.849 on FLARE22, and 0.844 on WORD. SALT used the hierarchical structures inherent in the human body to produce whole-body segmentations in an average of 35 seconds per 100 slices. This rapid processing underscores its potential for integration into clinical workflows, facilitating the automatic and efficient computation of full-body segmentations with each CT scan, thus enhancing diagnostic processes and patient care.
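The conditional-probability idea in the abstract above can be illustrated with a minimal sketch. It is not the authors' implementation: the toy label tree, the logit values, and the function names are all hypothetical. It only assumes that a softmax over each group of sibling labels yields P(child | parent), and that a label's overall probability is the product of these conditionals along its path from the root.

```python
import math

# Hypothetical toy label tree (not the 113-label tree from the paper):
# each parent maps to the list of its child labels.
TREE = {
    "body": ["spine", "other"],
    "spine": ["cervical", "thoracic", "lumbar"],
    "lumbar": ["L1", "L2"],
}


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def node_probabilities(tree, logits, root="body"):
    """Return P(label) for every node for one voxel.

    A softmax over each sibling group gives P(child | parent); the
    probability of a node is P(parent) * P(child | parent).
    """
    probs = {root: 1.0}
    stack = [root]
    while stack:
        parent = stack.pop()
        children = tree.get(parent, [])
        if not children:
            continue  # leaf label, nothing to expand
        conditional = softmax([logits[c] for c in children])
        for child, p in zip(children, conditional):
            probs[child] = probs[parent] * p
            stack.append(child)
    return probs


# Made-up logits for a single voxel, one per non-root label.
logits = {
    "spine": 2.0, "other": 0.0,
    "cervical": 0.0, "thoracic": 0.0, "lumbar": 1.0,
    "L1": 0.5, "L2": 0.5,
}
probs = node_probabilities(TREE, logits)
```

In this sketch the probabilities of the leaf labels sum to one, and each parent's probability equals the sum over its children, so a coarse label such as "spine" can be read off at any level of the tree without a separate model.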

MOMO -- Deep Learning-driven classification of external DICOM studies for PACS archivation

Dec 01, 2021

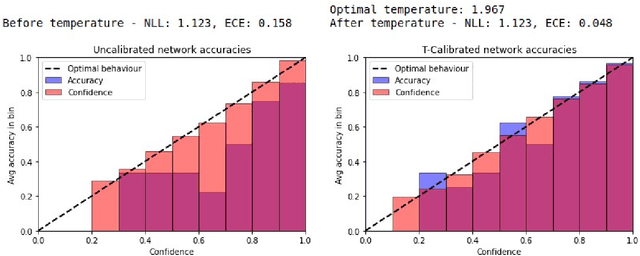

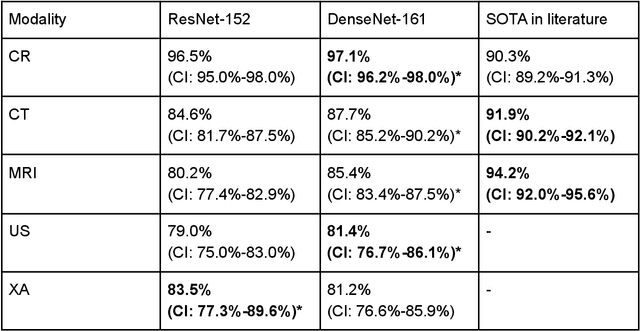

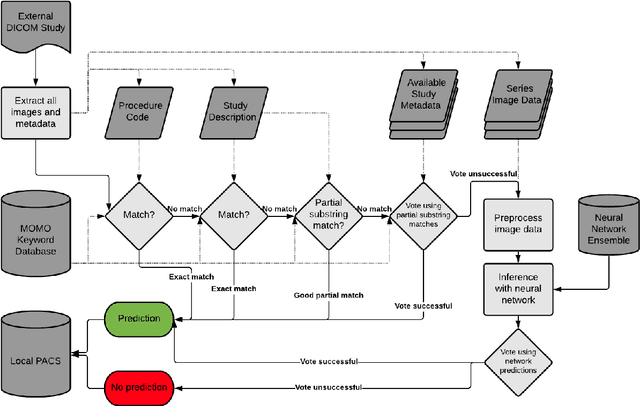

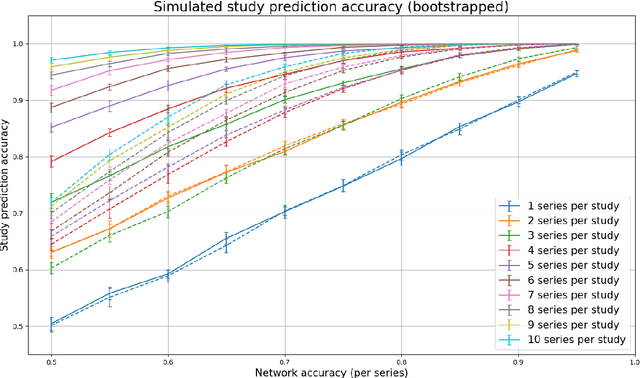

Abstract: Patients regularly continue assessment or treatment at facilities other than the one where they began, receiving their previous imaging studies on a CD-ROM and requiring clinical staff at the new hospital to import these studies into the local database. However, standards for nomenclature, contents, or even medical procedures may vary between facilities, often requiring human intervention to accurately classify the received studies according to the recipient hospital's standards. In this study, the authors present MOMO (MOdality Mapping and Orchestration), a deep learning-based approach that automates this mapping process using metadata substring matching and a neural network ensemble trained to recognize the 76 most common imaging studies across seven different modalities. A retrospective study was performed to measure the accuracy that this algorithm can provide. To this end, a set of 11,934 imaging series with existing labels was retrieved from the local hospital's PACS database to train the neural networks. A set of 843 completely anonymized external studies was hand-labeled to assess the performance of the algorithm. Additionally, an ablation study was performed to measure the performance impact of the network ensemble within the algorithm, and a comparative performance test against a commercial product was conducted. Compared with the commercial product (96.20% predictive power, 82.86% accuracy, 1.36% minor errors), the neural network ensemble alone performs the classification task with lower accuracy (99.05% predictive power, 72.69% accuracy, 10.3% minor errors). However, MOMO outperforms both by a large margin in accuracy and with increased predictive power (99.29% predictive power, 92.71% accuracy, 2.63% minor errors).
