Mi Lu

Lumos3D: A Single-Forward Framework for Low-Light 3D Scene Restoration

Nov 12, 2025

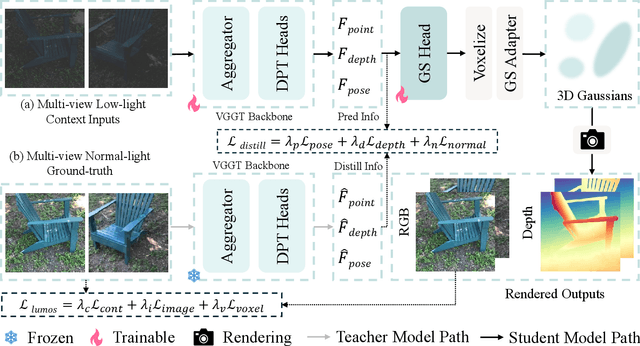

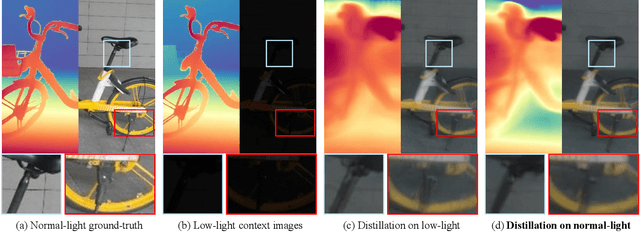

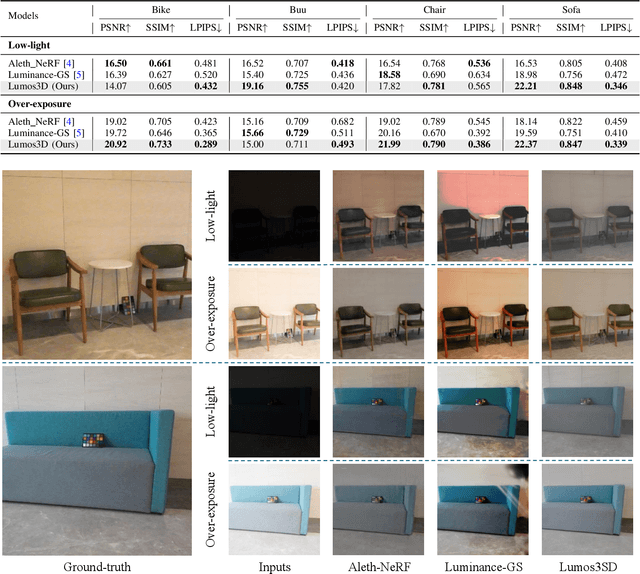

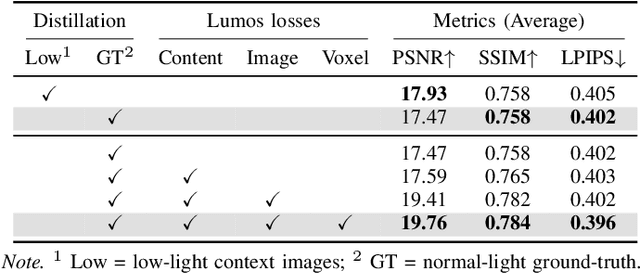

Abstract: Restoring 3D scenes captured under low-light conditions remains a fundamental yet challenging problem. Most existing approaches depend on precomputed camera poses and scene-specific optimization, which greatly restricts their scalability to dynamic real-world environments. To overcome these limitations, we introduce Lumos3D, a generalizable pose-free framework for 3D low-light scene restoration. Trained once on a single dataset, Lumos3D performs inference in a purely feed-forward manner, directly restoring illumination and structure from unposed, low-light multi-view images without any per-scene training or optimization. Built upon a geometry-grounded backbone, Lumos3D reconstructs a normal-light 3D Gaussian representation that restores illumination while faithfully preserving structural details. During training, a cross-illumination distillation scheme is employed, where the teacher network is distilled on normal-light ground truth to transfer accurate geometric information, such as depth, to the student model. A dedicated Lumos loss is further introduced to promote photometric consistency within the reconstructed 3D space. Experiments on real-world datasets demonstrate that Lumos3D achieves high-fidelity low-light 3D scene restoration with accurate geometry and strong generalization to unseen cases. Furthermore, the framework naturally extends to handle over-exposure correction, highlighting its versatility for diverse lighting restoration tasks.

Stylos: Multi-View 3D Stylization with Single-Forward Gaussian Splatting

Sep 30, 2025

Abstract: We present Stylos, a single-forward 3D Gaussian framework for 3D style transfer that operates on unposed content, from a single image to a multi-view collection, conditioned on a separate reference style image. Stylos synthesizes a stylized 3D Gaussian scene without per-scene optimization or precomputed poses, achieving geometry-aware, view-consistent stylization that generalizes to unseen categories, scenes, and styles. At its core, Stylos adopts a Transformer backbone with two pathways: geometry predictions retain self-attention to preserve geometric fidelity, while style is injected via global cross-attention to enforce visual consistency across views. With the addition of a voxel-based 3D style loss that aligns aggregated scene features to style statistics, Stylos enforces view-consistent stylization while preserving geometry. Experiments across multiple datasets demonstrate that Stylos delivers high-quality zero-shot stylization, highlighting the effectiveness of global style-content coupling, the proposed 3D style loss, and the scalability of our framework from single view to large-scale multi-view settings.

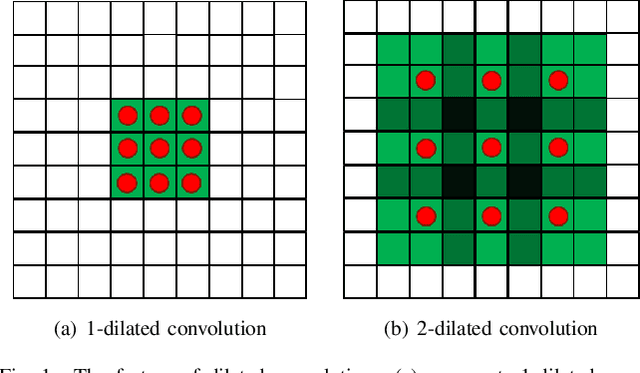

DiNAT-IR: Exploring Dilated Neighborhood Attention for High-Quality Image Restoration

Jul 23, 2025

Abstract: Transformers, with their self-attention mechanisms for modeling long-range dependencies, have become a dominant paradigm in image restoration tasks. However, the high computational cost of self-attention limits scalability to high-resolution images, making efficiency-quality trade-offs a key research focus. To address this, Restormer employs channel-wise self-attention, which computes attention across channels instead of spatial dimensions. While effective, this approach may overlook localized artifacts that are crucial for high-quality image restoration. To bridge this gap, we explore Dilated Neighborhood Attention (DiNA) as a promising alternative, inspired by its success in high-level vision tasks. DiNA balances global context and local precision by integrating sliding-window attention with mixed dilation factors, effectively expanding the receptive field without excessive overhead. However, our preliminary experiments indicate that directly applying this global-local design to the classic deblurring task hinders accurate visual restoration, primarily due to the constrained global context understanding within local attention. To address this, we introduce a channel-aware module that complements local attention, effectively integrating global context without sacrificing pixel-level precision. The proposed DiNAT-IR, a Transformer-based architecture specifically designed for image restoration, achieves competitive results across multiple benchmarks, offering a high-quality solution for diverse low-level computer vision problems.
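The efficiency argument in this abstract can be made concrete by counting attention-map entries for the three layouts it mentions. The sketch below is only illustrative arithmetic: the function name, the feature-map size, the channel count, and the 7x7 neighborhood are all assumed values, not figures from the paper. Dilation does not change the count for neighborhood attention, since each query still attends to the same number of keys, only spaced further apart.

```python
def attention_map_entries(height, width, channels, kernel_size):
    """Count attention-map entries (per head) for three attention layouts.

    All argument values used below are illustrative assumptions.
    """
    tokens = height * width
    return {
        # Every pixel attends to every other pixel: (HW) x (HW).
        "spatial_self_attention": tokens * tokens,
        # Channel-wise attention (as in Restormer): C x C.
        "channel_self_attention": channels * channels,
        # Each pixel attends to a k x k neighborhood: (HW) x (k*k).
        "neighborhood_attention": tokens * kernel_size * kernel_size,
    }


sizes = attention_map_entries(height=256, width=256, channels=48, kernel_size=7)
for name, entries in sizes.items():
    print(f"{name}: {entries:,}")
```

Under these assumed sizes, full spatial attention is several orders of magnitude larger than either alternative, which is the trade-off the abstract describes.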

XYScanNet: An Interpretable State Space Model for Perceptual Image Deblurring

Dec 13, 2024

Abstract: Deep state-space models (SSMs), like recent Mamba architectures, are emerging as a promising alternative to CNN and Transformer networks. Existing Mamba-based restoration methods process visual data with a flatten-and-scan strategy that converts image patches into a 1D sequence before scanning. However, this scanning paradigm ignores local pixel dependencies and introduces spatial misalignment by placing distant pixels next to each other in the sequence, which reduces local noise-awareness and degrades image sharpness in low-level vision tasks. To overcome these issues, we propose a novel slice-and-scan strategy that alternates scanning along intra- and inter-slices. We further design a new Vision State Space Module (VSSM) for image deblurring, and tackle the inefficiency of the current Mamba-based vision module. Building upon this, we develop XYScanNet, an SSM architecture integrated with a lightweight feature fusion module for enhanced image deblurring. XYScanNet maintains competitive distortion metrics and significantly improves perceptual performance. Experimental results show that XYScanNet improves KID by $17\%$ compared to the nearest competitor. Our code will be released soon.
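The spatial misalignment attributed to flatten-and-scan can be seen in a toy example. The sketch below assumes a plain row-major flattening (one common choice, not necessarily the exact scan order of any specific Mamba-based method) and lists the pairs of pixels that end up adjacent in the 1D sequence although they are far apart in the image. It illustrates only the problem; it does not implement the proposed slice-and-scan strategy. The function names are hypothetical.

```python
def row_major_scan(height, width):
    """Return pixel coordinates in the order a row-major flatten visits them."""
    return [(r, c) for r in range(height) for c in range(width)]


def misaligned_neighbors(height, width):
    """Find sequence-adjacent pixels that are not spatial neighbors."""
    order = row_major_scan(height, width)
    jumps = []
    for (r0, c0), (r1, c1) in zip(order, order[1:]):
        # Chebyshev distance: 1 means the pixels touch in the image.
        gap = max(abs(r1 - r0), abs(c1 - c0))
        if gap > 1:
            jumps.append(((r0, c0), (r1, c1), gap))
    return jumps


jumps = misaligned_neighbors(height=3, width=4)
for src, dst, gap in jumps:
    print(f"{src} -> {dst}: spatial gap {gap}")
```

Every row boundary produces one such jump, so the number of misaligned pairs grows with image height and the size of each jump grows with image width.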

DeblurDiNAT: A Lightweight and Effective Transformer for Image Deblurring

Mar 19, 2024

Abstract: Blurry images may contain local and global non-uniform artifacts, which complicate the deblurring process and make it more challenging to achieve satisfactory results. Recently, Transformers have produced better deblurring results than existing CNN architectures. However, their large model size and long inference time remain two issues that have not been fully explored. To this end, we propose DeblurDiNAT, a compact encoder-decoder Transformer which efficiently restores clean images from real-world blurry ones. We adopt an alternating dilation factor structure with the aim of global-local feature learning. Also, we observe that simply using self-attention layers in networks does not always produce good deblurred results. To solve this problem, we propose a channel modulation self-attention (CMSA) block, where a cross-channel learner (CCL) is utilized to capture channel relationships. In addition, we present a divide and multiply feed-forward network (DMFN) allowing fast feature propagation. Moreover, we design a lightweight gated feature fusion (LGFF) module, which performs controlled feature merging. Comprehensive experimental results show that the proposed model, named DeblurDiNAT, provides a favorable performance boost without introducing noticeable computational costs over the baseline, and achieves state-of-the-art (SOTA) performance on several image deblurring datasets. Compared to its nearest competitors, our space-efficient and time-saving method demonstrates a stronger generalization ability with 3%-68% fewer parameters and produces deblurred images that are visually closer to the ground truth.

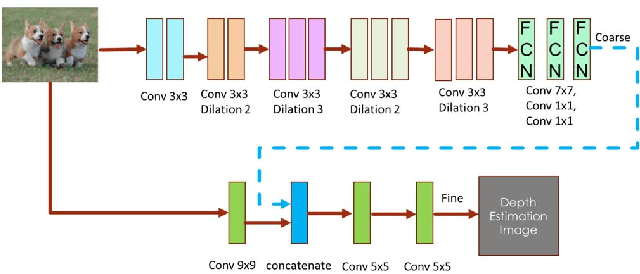

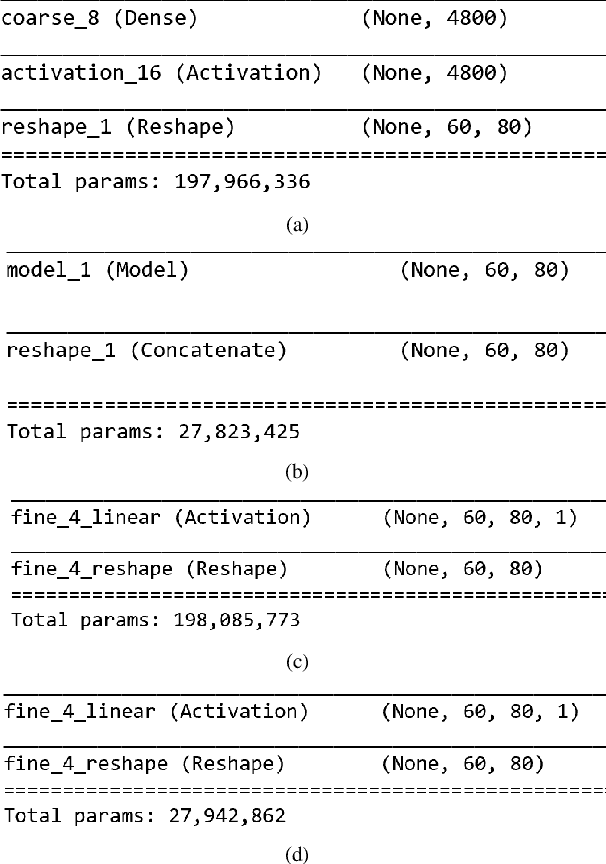

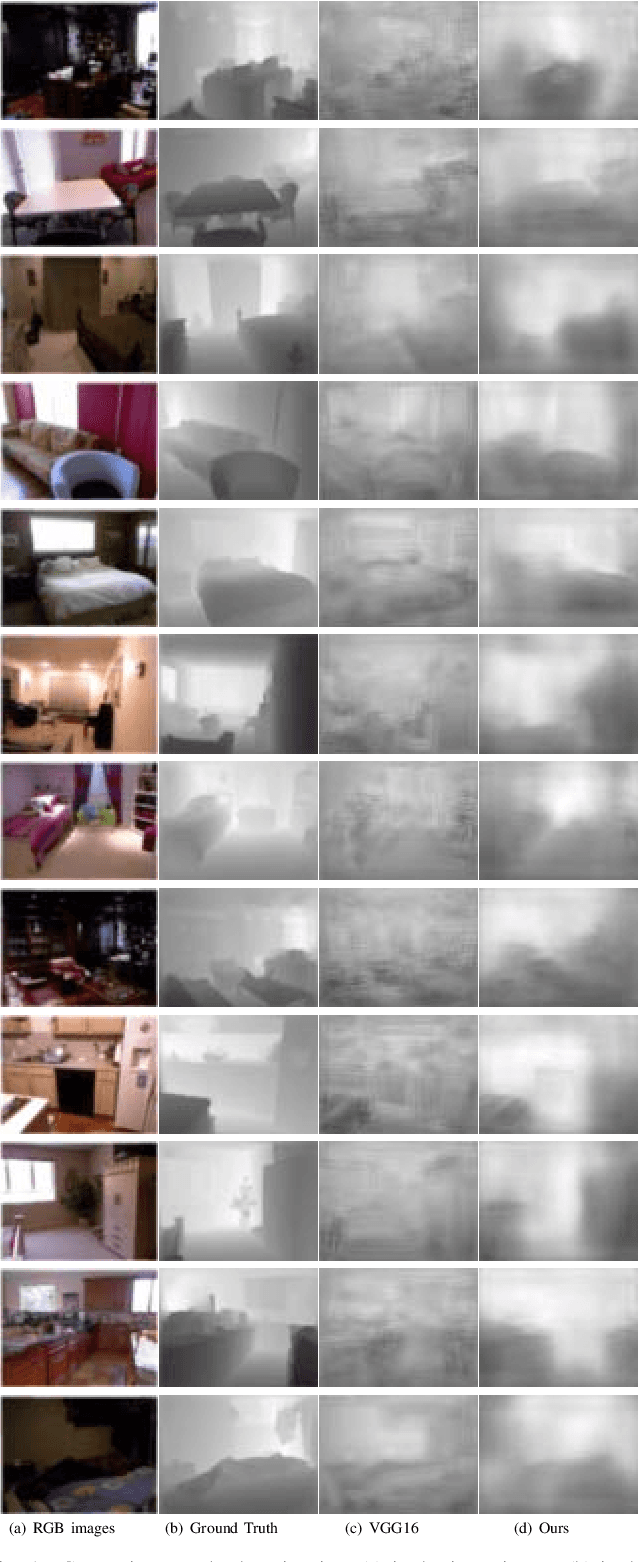

Dilated Fully Convolutional Neural Network for Depth Estimation from a Single Image

Mar 12, 2021

Abstract: Depth prediction plays a key role in understanding a 3D scene. Several techniques have been developed throughout the years, among which Convolutional Neural Networks (CNNs) have recently achieved state-of-the-art performance in estimating depth from a single image. However, traditional CNNs suffer from reduced resolution and information loss caused by pooling layers, and the large number of parameters in fully connected layers often leads to excessive memory usage. In this paper, we present an advanced Dilated Fully Convolutional Neural Network to address these deficiencies. Taking advantage of the exponential expansion of the receptive field in dilated convolutions, our model can minimize the loss of resolution. It also reduces the number of parameters significantly by replacing the fully connected layers with fully convolutional layers. We show experimentally on the NYU Depth V2 dataset that the depth prediction obtained from our model is considerably closer to ground truth than that from traditional CNN techniques.
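The exponential expansion of the receptive field can be checked with the standard formula for stacked stride-1 convolutions, where each layer adds (kernel size - 1) x dilation to the field. The sketch below is a minimal illustration: the four-layer depth, the 3x3 kernel, and the doubling dilation schedule are assumptions chosen for the example, not the architecture described in the paper.

```python
def receptive_field(kernel_size, dilations):
    """Receptive field (along one axis) of stacked stride-1 convolutions.

    Each layer with dilation d widens the field by (kernel_size - 1) * d.
    Assumes no pooling and no striding between layers.
    """
    field = 1
    for dilation in dilations:
        field += (kernel_size - 1) * dilation
    return field


# Four 3x3 layers, same parameter count, different dilation schedules.
plain = receptive_field(3, [1, 1, 1, 1])
dilated = receptive_field(3, [1, 2, 4, 8])
print(f"plain: {plain}, dilated: {dilated}")
```

With the same number of layers and weights, the doubling schedule covers a much wider window while the feature map keeps its full resolution, since no pooling is involved.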

Advanced Multiple Linear Regression Based Dark Channel Prior Applied on Dehazing Image and Generating Synthetic Haze

Mar 12, 2021

Abstract: Haze removal is an extremely challenging task, and object detection in hazy environments has recently gained much attention due to the popularity of autonomous driving and traffic surveillance. In this work, the authors propose a multiple linear regression haze removal model based on a widely adopted dehazing algorithm named Dark Channel Prior. Trained on a synthetic hazy dataset, the proposed model can reduce the unanticipated deviations generated from the rough estimations of the transmission map and atmospheric light in Dark Channel Prior. To increase object detection accuracy in hazy environments, the authors further present an algorithm to build a synthetic hazy COCO training dataset by adding artificial haze to the MS COCO training dataset. The experimental results demonstrate that the proposed model obtains higher image quality and shares more similarity with ground truth images than most conventional pixel-based dehazing algorithms and neural network based haze-removal models. The authors also evaluate the mean average precision of Mask R-CNN when training the network with the synthetic hazy COCO training dataset and when preprocessing the hazy test dataset by removing the haze with the proposed dehazing model. It turns out that both approaches can increase the object detection accuracy significantly and outperform most existing object detection models on hazy images.

Multiple Linear Regression Haze-removal Model Based on Dark Channel Prior

Apr 25, 2019

Abstract: Dark Channel Prior (DCP) is a widely recognized traditional dehazing algorithm. However, it may fail in bright regions, and the restored image is darker than the hazy image. In this paper, we propose an effective method to optimize DCP. We build a multiple linear regression haze-removal model based on the DCP atmospheric scattering model and train it on the RESIDE dataset, aiming to reduce the unexpected errors caused by the rough estimations of the transmission map t(x) and atmospheric light A. The RESIDE dataset provides enough synthetic hazy images and their corresponding ground-truth images for training and testing. We compare the performance of different dehazing algorithms in terms of two important full-reference metrics, the peak signal-to-noise ratio (PSNR) and the structural similarity index measure (SSIM). The experimental results show that our model achieves the highest SSIM value, and its PSNR value is also higher than that of most state-of-the-art dehazing algorithms. Our results also overcome the weakness of DCP on real-world hazy images.
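For orientation, the atmospheric scattering model that DCP relies on is commonly written as I(x) = J(x) t(x) + A (1 - t(x)), where I is the hazy image and J the haze-free scene. The sketch below applies and inverts that model on a few scalar intensities and scores the result with PSNR. It is a minimal illustration under stated assumptions: the function names, the sample intensities, and the values of t and A are made up, t and A are taken as known rather than estimated, and the paper's multiple linear regression model is not reproduced. The lower bound `t_min` follows the usual DCP convention of clamping small transmissions.

```python
import math


def add_haze(clear, transmission, airlight):
    """Scattering model: I = J * t + A * (1 - t)."""
    return clear * transmission + airlight * (1.0 - transmission)


def remove_haze(hazy, transmission, airlight, t_min=0.1):
    """Invert the model: J = (I - A) / max(t, t_min) + A."""
    t = max(transmission, t_min)  # avoid amplifying noise when t is tiny
    return (hazy - airlight) / t + airlight


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB for intensities in [0, peak]."""
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


clear = [0.2, 0.5, 0.8]          # assumed haze-free intensities
t, A = 0.6, 0.9                  # assumed transmission and atmospheric light
hazy = [add_haze(j, t, A) for j in clear]
restored = [remove_haze(i, t, A) for i in hazy]
print(f"PSNR hazy vs clear: {psnr(clear, hazy):.2f} dB")
```

When t and A are exact, the inversion recovers the clear intensities; errors in those two estimates are what the regression model in the abstract is meant to reduce.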

An optimized system to solve text-based CAPTCHA

Jun 11, 2018

Abstract: CAPTCHA (Completely Automated Public Turing test to Tell Computers and Humans Apart) can be used to protect data from automated bots. Countless kinds of CAPTCHAs have thus been designed, and the text-based scheme is the most frequently used because it is the most convenient and user-friendly \cite{bursztein2011text}. Currently, different types of CAPTCHAs each require a corresponding segmentation method to isolate single characters. Our goal is to defeat the CAPTCHA, so the CAPTCHAs first need to be split character by character. There is no general segmentation algorithm that obtains the divided characters for all kinds of examples, which means that we have to treat segmentation case by case. In this paper, we build a complete system to defeat the CAPTCHAs and achieve state-of-the-art performance. In detail, we present our self-adaptive algorithm to segment different kinds of characters optimally, and then utilize both existing methods and our own convolutional neural network as an extra classifier. Results are provided showing how well our system works at defeating these CAPTCHAs.
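To make the segmentation step concrete, the sketch below shows vertical projection segmentation, a common baseline that splits a binarized image wherever a column contains no ink. This is not the self-adaptive algorithm from the paper, whose details the abstract does not give; the function name and the toy image are hypothetical. A baseline like this fails when characters touch or overlap, which is one reason a single fixed method does not cover all CAPTCHA types.

```python
def segment_by_projection(binary):
    """Split a binary image (rows of 0/1) into character column ranges.

    Returns (start, end) column pairs with `end` exclusive. A new segment
    starts at the first inked column and ends at the next empty column.
    """
    column_ink = [sum(column) for column in zip(*binary)]
    segments, start = [], None
    for x, ink in enumerate(column_ink):
        if ink > 0 and start is None:
            start = x                      # entering a character
        elif ink == 0 and start is not None:
            segments.append((start, x))    # leaving a character
            start = None
    if start is not None:                  # character touches right edge
        segments.append((start, len(column_ink)))
    return segments


image = [
    [0, 1, 1, 0, 0, 1, 0, 1],
    [0, 1, 0, 0, 0, 1, 0, 1],
    [0, 1, 1, 0, 0, 1, 0, 1],
]
segments = segment_by_projection(image)
print(segments)
```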

English Out-of-Vocabulary Lexical Evaluation Task

Apr 11, 2018

Abstract: Unlike previous unknown-noun tagging tasks (Curran, 2005; Ciaramita and Johnson, 2003), this is the first attempt to focus on out-of-vocabulary (OOV) lexical evaluation tasks that do not require any prior knowledge. The OOV words are words that only appear in test samples. The goal of the tasks is to provide solutions for OOV lexical classification and prediction. The tasks require annotators to infer the attributes of the OOV words from their related contexts. Then, we utilize unsupervised word embedding methods such as Word2Vec (Mikolov et al., 2013) and Word2GM (Athiwaratkun and Wilson, 2017) to perform the baseline experiments on the categorical classification task and the OOV word attribute prediction tasks.
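The definition of OOV words used here (words that appear only in test samples) amounts to a vocabulary difference. The sketch below is a minimal illustration with whitespace tokenization and made-up sentences; the function name is hypothetical and the task's actual data and preprocessing are not shown in the abstract.

```python
def oov_words(train_tokens, test_tokens):
    """Return test tokens never seen in training, in first-seen order."""
    train_vocab = set(train_tokens)
    seen, result = set(), []
    for token in test_tokens:
        if token not in train_vocab and token not in seen:
            seen.add(token)
            result.append(token)
    return result


# Toy sentences, assumed for illustration only.
train = "the cat sat on the mat".split()
test = "the quokka sat on the futon near the quokka".split()
oov = oov_words(train, test)
print(oov)
```

An embedding model trained only on `train` has no vector for these words, so their attributes have to be inferred from the surrounding context, as the task description states.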
