Mengguang Li

FPGA-Based Neural Thrust Controller for UAVs

Mar 28, 2024

Abstract:The advent of unmanned aerial vehicles (UAVs) has improved a variety of fields by providing a versatile, cost-effective and accessible platform for implementing state-of-the-art algorithms. To accomplish a broader range of tasks, there is a growing need for enhanced on-board computing to cope with increasing complexity and dynamic environmental conditions. Recent advances have seen the application of Deep Neural Networks (DNNs), particularly in combination with Reinforcement Learning (RL), to improve the adaptability and performance of UAVs, especially in unknown environments. However, the computational requirements of DNNs pose a challenge to the limited computing resources available on many UAVs. This work explores the use of Field Programmable Gate Arrays (FPGAs) as a viable solution to this challenge, offering flexibility, high performance, and energy and time efficiency. We propose a novel hardware board equipped with an Artix-7 FPGA for a popular open-source micro-UAV platform. We validate its functionality in real-world experiments by implementing an RL-based low-level controller.

A Modular Aerial System Based on Homogeneous Quadrotors with Fault-Tolerant Control

Feb 02, 2024

Abstract:The standard quadrotor is one of the most popular and widely used aerial vehicles of recent decades, offering great maneuverability with mechanical simplicity. However, its under-actuation limits its applications, especially when it comes to generating a desired wrench with six degrees of freedom (DOF). Therefore, existing work often compromises between mechanical complexity and the controllable DOF of the aerial system. To take advantage of the mechanical simplicity of a standard quadrotor, we propose a modular aerial system, IdentiQuad, that combines only homogeneous quadrotor-based modules. Each IdentiQuad can be operated alone like a standard quadrotor, but at the same time allows task-specific assembly, increasing the controllable DOF of the system. Each module is interchangeable within its assembly. We also propose a general controller for different configurations of assemblies, capable of tolerating rotor failures and balancing the energy consumption of each module. The functionality and robustness of the system and its controller are validated using physics-based simulations for different assembly configurations.

Optimal Collaborative Transportation for Under-Capacitated Vehicle Routing Problems using Aerial Drone Swarms

Oct 04, 2023

Abstract:Swarms of aerial drones have recently been considered for last-mile deliveries in urban logistics or automated construction. At the same time, collaborative transportation of payloads by multiple drones is another important area of recent research. However, efficient coordination algorithms for collaborative transportation of many payloads by many drones remain to be considered. In this work, we formulate the collaborative transportation of payloads by a swarm of drones as a novel, under-capacitated generalization of vehicle routing problems (VRP), which may also be of separate interest. In contrast to standard VRP and capacitated VRP, we must additionally consider waiting times for payloads lifted cooperatively by multiple drones, and the corresponding coordination. Algorithmically, we provide a solution encoding that avoids deadlocks and formulate an appropriate alternating minimization scheme to solve the problem. On the hardware side, we integrate our algorithms with collision avoidance and drone controllers. The approach and the impact of the system integration are verified empirically, both on a swarm of real nano-quadcopters and for large swarms in simulation. Overall, we provide a framework for collaborative transportation with aerial drone swarms that uses only as many drones as necessary for the transportation of any single payload.

UAV Swarms for Joint Data Ferrying and Dynamic Cell Coverage via Optimal Transport Descent and Quadratic Assignment

Jul 06, 2023

Abstract:Both data ferrying with disruption-tolerant networking (DTN) and mobile cellular base stations constitute important techniques for UAV-aided communication in crisis situations where standard communication infrastructure is unavailable. For optimal use of a limited number of UAVs, we propose providing both DTN and a cellular base station on each UAV. Here, DTN is used for large amounts of low-priority data, while capacity-constrained cell coverage remains reserved for emergency calls or command and control. We optimize cell coverage via a novel optimal transport-based formulation using alternating minimization, while for data ferrying we periodically deliver data between dynamic clusters by solving quadratic assignment problems. In our evaluation, we consider different scenarios with varying mobility models and a wide range of flight patterns. Overall, we tractably achieve optimal cell coverage under quality-of-service costs with DTN-based data ferrying, enabling large-scale deployment of UAV swarms for crisis communication.

Scalable Task-Driven Robotic Swarm Control via Collision Avoidance and Learning Mean-Field Control

Sep 15, 2022

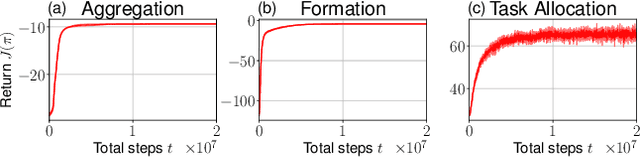

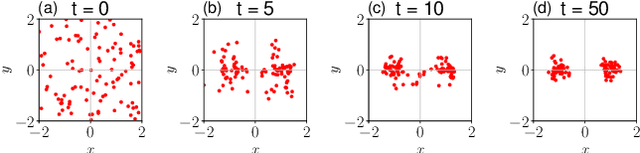

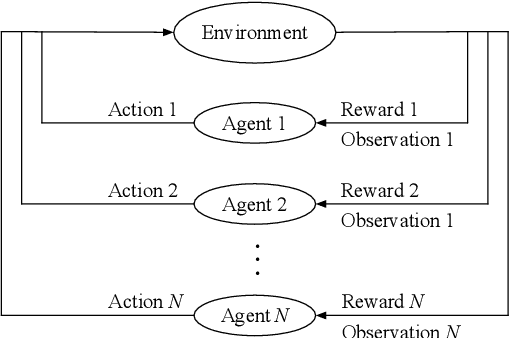

Abstract:In recent years, reinforcement learning and its multi-agent analogue have achieved great success in solving various complex control problems. However, multi-agent reinforcement learning remains challenging both in its theoretical analysis and empirical design of algorithms, especially for large swarms of embodied robotic agents where a definitive toolchain remains part of active research. We use emerging state-of-the-art mean-field control techniques in order to convert many-agent swarm control into more classical single-agent control of distributions. This allows profiting from advances in single-agent reinforcement learning at the cost of assuming weak interaction between agents. As a result, the mean-field model is violated by the nature of real systems with embodied, physically colliding agents. Here, we combine collision avoidance and learning of mean-field control into a unified framework for tractably designing intelligent robotic swarm behavior. On the theoretical side, we provide novel approximation guarantees for both general mean-field control in continuous spaces and with collision avoidance. On the practical side, we show that our approach outperforms multi-agent reinforcement learning and allows for decentralized open-loop application while avoiding collisions, both in simulation and real UAV swarms. Overall, we propose a framework for the design of swarm behavior that is both mathematically well-founded and practically useful, enabling the solution of otherwise intractable swarm problems.

A Survey on Large-Population Systems and Scalable Multi-Agent Reinforcement Learning

Sep 08, 2022

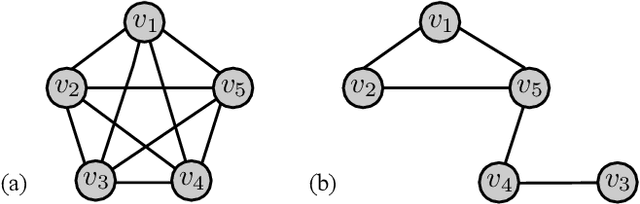

Abstract:The analysis and control of large-population systems is of great interest to diverse areas of research and engineering, ranging from epidemiology and robotic swarms to economics and finance. An increasingly popular and effective approach to realizing sequential decision-making in multi-agent systems is multi-agent reinforcement learning (MARL), as it allows for an automatic and model-free analysis of highly complex systems. However, the key issue of scalability complicates the design of control and reinforcement learning algorithms, particularly in systems with large populations of agents. While reinforcement learning has found resounding empirical success in many scenarios with few agents, problems with many agents quickly become intractable and necessitate special consideration. In this survey, we shed light on current approaches to tractably understanding and analyzing large-population systems, both through multi-agent reinforcement learning and through adjacent areas of research such as mean-field games, collective intelligence, or complex network theory. These classically independent subject areas offer a variety of approaches to understanding or modeling large-population systems, which may be of great use for the formulation of tractable MARL algorithms in the future. Finally, we survey potential areas of application for large-scale control and identify fruitful future applications of learning algorithms in practical systems. We hope that our survey can provide insight and future directions to junior and senior researchers in theoretical and applied sciences alike.
