Meixiang Quan

LOF: Structure-Aware Line Tracking based on Optical Flow

Sep 17, 2021

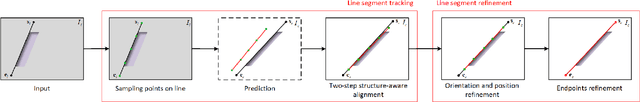

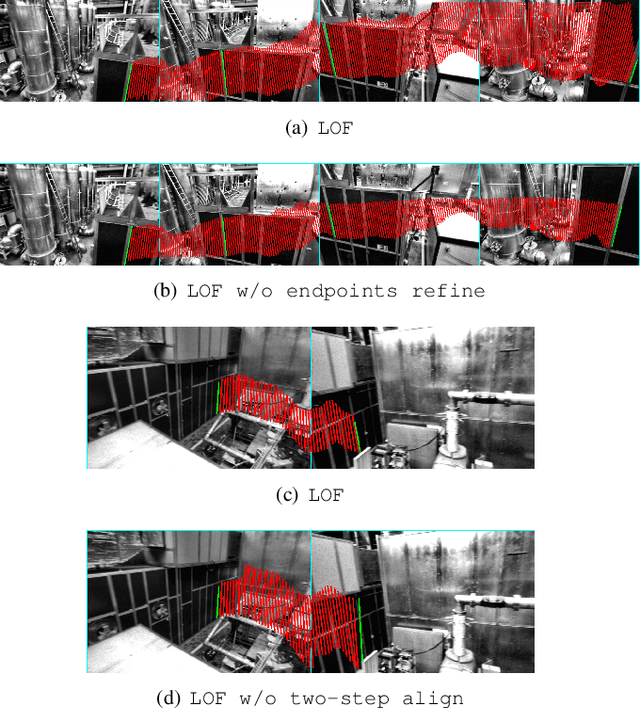

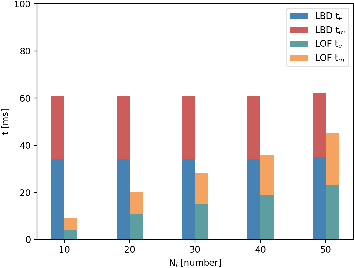

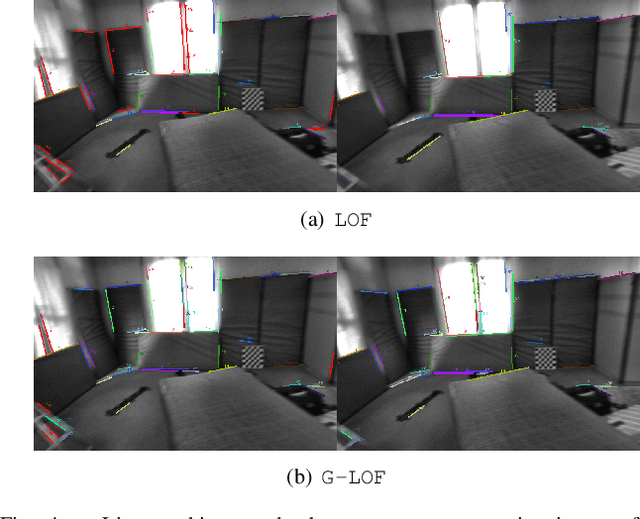

Abstract: Lines provide significantly richer geometric structural information about the environment than points, so lines are widely used in recent Visual Odometry (VO) works. Since VO with lines uses line tracking results for localization and mapping, line tracking is a crucial component of VO. Although state-of-the-art line tracking methods have made great progress, they still depend heavily on line detection or on predicted line segments. To relieve these dependencies and track line segments completely, accurately, and robustly at higher computational efficiency, we propose a structure-aware Line tracking algorithm based entirely on Optical Flow (LOF). First, we propose a gradient-based strategy to sample pixels on lines that are suitable for line optical flow calculation. Then, to align each line by fully using the structural relationship between its sampled points while effectively removing the influence of sampled points occluded by other objects, we propose a two-step structure-aware line segment alignment method. Furthermore, we propose a line refinement method to refine the orientation, position, and endpoints of the aligned line segments. Extensive experimental results demonstrate that the proposed LOF outperforms state-of-the-art methods in line tracking accuracy, robustness, and efficiency, which also improves the localization accuracy and robustness of VO systems with lines.
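As a rough illustration of what gradient-based sampling along a line could look like, the sketch below walks a segment pixel by pixel and keeps only the pixels whose image gradient magnitude exceeds a threshold. This is not the sampling strategy from the paper, whose details the abstract does not give; the function name `sample_line_pixels`, the central-difference gradient, and the `min_grad` threshold are all assumptions made for illustration.

```python
import math


def sample_line_pixels(image, p0, p1, min_grad=10.0):
    """Return pixels (x, y) on the segment p0 -> p1 with strong image gradient.

    image is a list of rows of grayscale intensities, indexed image[y][x].
    Hypothetical helper: the threshold rule is an assumption, not the paper's.
    """
    h, w = len(image), len(image[0])
    (x0, y0), (x1, y1) = p0, p1
    # One sample per pixel step along the dominant axis of the segment.
    n = int(max(abs(x1 - x0), abs(y1 - y0)))
    kept = []
    for i in range(n + 1):
        t = i / n if n else 0.0
        x = round(x0 + t * (x1 - x0))
        y = round(y0 + t * (y1 - y0))
        # Central differences need one neighbour on every side.
        if not (1 <= x < w - 1 and 1 <= y < h - 1):
            continue
        gx = (image[y][x + 1] - image[y][x - 1]) / 2.0
        gy = (image[y + 1][x] - image[y - 1][x]) / 2.0
        if math.hypot(gx, gy) >= min_grad:
            kept.append((x, y))
    return kept


# Synthetic 5x7 image with a vertical intensity edge at x = 3.
edge_image = [[0 if x < 3 else 100 for x in range(7)] for y in range(5)]
on_edge = sample_line_pixels(edge_image, (3, 0), (3, 4))
off_edge = sample_line_pixels(edge_image, (5, 0), (5, 4))
```

In this toy image, samples on the edge column survive the threshold while samples in the flat region are discarded, which is the property that makes the retained pixels usable for optical flow.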

Active collaboration in relative observation for Multi-agent visual SLAM based on Deep Q Network

Sep 23, 2019

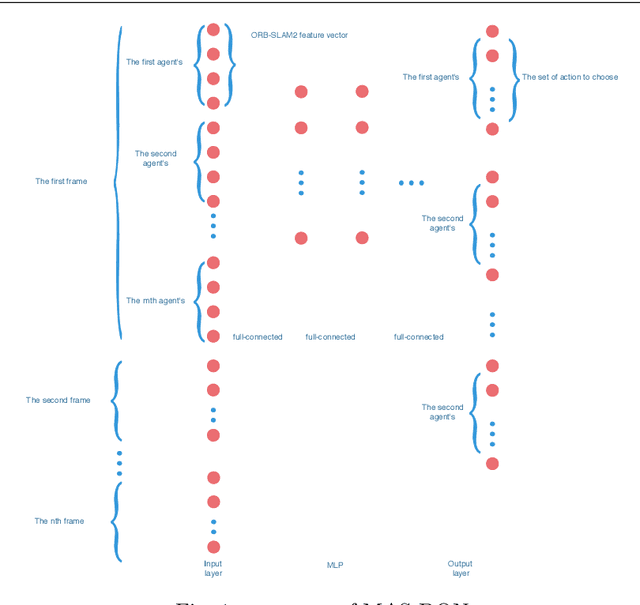

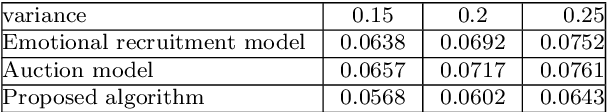

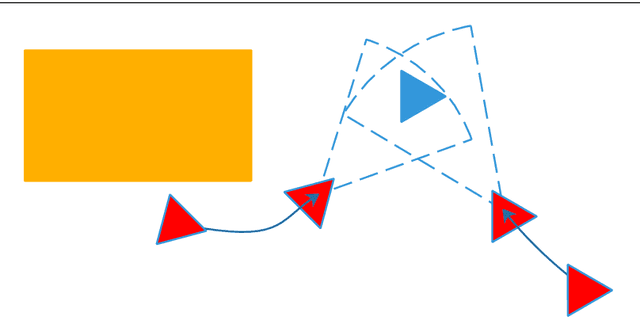

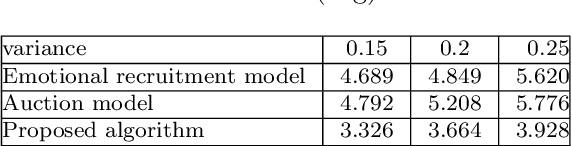

Abstract: This paper proposes a unique active relative localization mechanism for multi-agent Simultaneous Localization and Mapping (SLAM), in which an agent to be observed is considered a task that is performed by other agents assisting that agent through relative observation. A task allocation algorithm based on deep reinforcement learning is proposed for this mechanism. Each agent can choose, on its own initiative, whether to localize other agents or to continue independent SLAM. In this way, the SLAM process of each agent is influenced by the collaboration. First, based on the characteristics of ORB-SLAM, a unique observation function that models the whole multi-agent system (MAS) is obtained. Second, a novel type of Deep Q Network (DQN) called MAS-DQN is deployed to learn the correspondence between Q values and state-action pairs; abstract representations of the agents in the MAS are learned in the process of collaboration among agents. Finally, each agent must act with a certain degree of freedom according to MAS-DQN. The simulation results of comparative experiments demonstrate that this mechanism improves the efficiency of cooperation in multi-agent SLAM.
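For readers unfamiliar with Q-learning, the relation between Q values and state-action pairs that a DQN is trained on can be sketched with the standard one-step temporal-difference target. The snippet below is generic Q-learning, not the MAS-DQN architecture; the function names and the action encoding (index 0 for "continue independent SLAM", higher indices for "observe another agent") are hypothetical.

```python
def td_target(reward, next_q_values, gamma=0.99, done=False):
    """Standard one-step Q-learning target: r + gamma * max_a' Q(s', a')."""
    if done or not next_q_values:
        return reward
    return reward + gamma * max(next_q_values)


def greedy_action(q_values):
    """Index of the action with the highest estimated Q value."""
    return max(range(len(q_values)), key=lambda a: q_values[a])


# Hypothetical action encoding: 0 = continue own SLAM, 1.. = observe agent k.
q_now = [0.1, 0.7, 0.3]
chosen = greedy_action(q_now)
target = td_target(reward=1.0, next_q_values=[0.5, 2.0], gamma=0.9)
```

A network trained on such targets outputs one Q value per action, and each agent can then pick its action from those values.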

Tightly-coupled Monocular Visual-odometric SLAM using Wheels and a MEMS Gyroscope

Apr 13, 2018

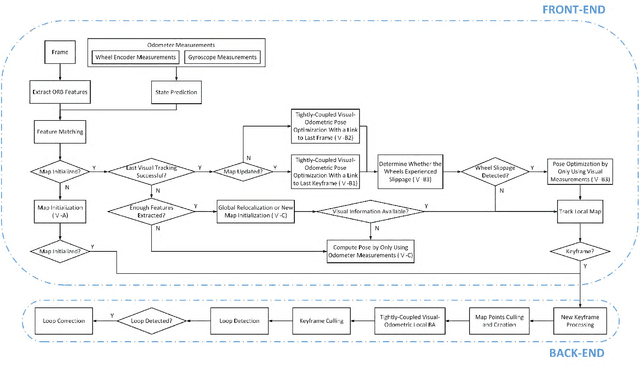

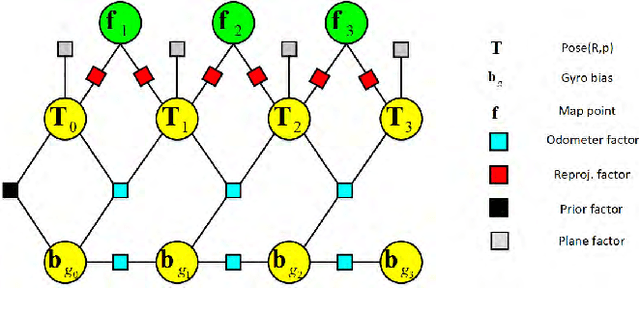

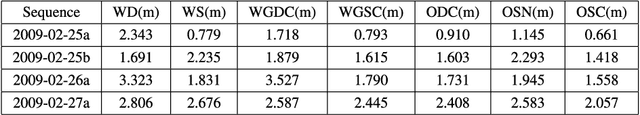

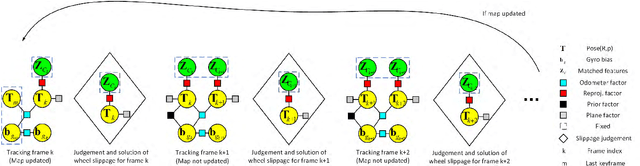

Abstract: In this paper, we present a novel tightly-coupled probabilistic monocular visual-odometric Simultaneous Localization and Mapping algorithm using wheels and a MEMS gyroscope, which can provide accurate, robust, and long-term localization for a ground robot moving on a plane. First, we present an odometer preintegration theory that integrates the wheel encoder measurements and gyroscope measurements into a local frame. The preintegration theory properly addresses the manifold structure of the rotation group SO(3) and carefully deals with uncertainty propagation and bias correction. Then a novel odometer error term is formulated using the odometer preintegration model and is tightly integrated into the visual optimization framework. Furthermore, we introduce a complete tracking framework that provides different strategies for motion tracking when (1) both measurements are available, (2) visual measurements are not available, and (3) the wheel encoder experiences slippage, which makes the system accurate and robust. Finally, the proposed algorithm is evaluated through extensive experiments, and the experimental results demonstrate the superiority of the proposed system.
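The idea of accumulating wheel encoder and gyroscope measurements into a local frame can be sketched in a heavily simplified planar form. The example below integrates in SE(2) rather than on SO(3), omits the uncertainty propagation, and treats the gyro bias as a known constant, so it is only an illustration under those assumptions and not the preintegration theory of the paper. The name `preintegrate_planar` and the sample layout `(ds, omega, dt)` are hypothetical.

```python
import math


def preintegrate_planar(samples, gyro_bias=0.0):
    """Accumulate relative motion (x, y, theta) in the frame of the first sample.

    samples: iterable of (ds, omega, dt), where ds is the wheel-encoder travel
    in metres, omega the gyro yaw rate in rad/s, and dt the interval in seconds.
    """
    x = y = theta = 0.0
    for ds, omega, dt in samples:
        dtheta = (omega - gyro_bias) * dt
        # Use the heading at the middle of the interval for the translation.
        mid_heading = theta + 0.5 * dtheta
        x += ds * math.cos(mid_heading)
        y += ds * math.sin(mid_heading)
        theta += dtheta
    return x, y, theta


# Straight line: ten 0.1 m steps with no rotation.
straight = preintegrate_planar([(0.1, 0.0, 0.01)] * 10)

# Quarter circle of radius 1 m traversed in 1 s, split into 100 steps.
steps = 100
rate = math.pi / 2.0
arc = preintegrate_planar([(rate / steps, rate, 1.0 / steps)] * steps)
```

The accumulated triple depends only on the measurements between two keyframes, which is what allows such a term to be reused as a relative-motion constraint without re-integrating when the keyframe poses change.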

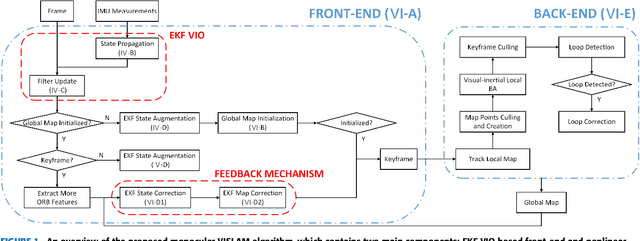

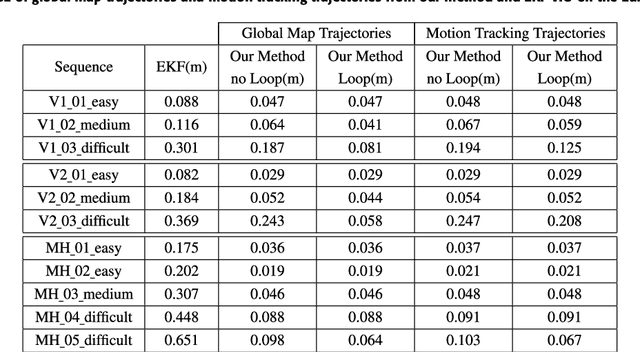

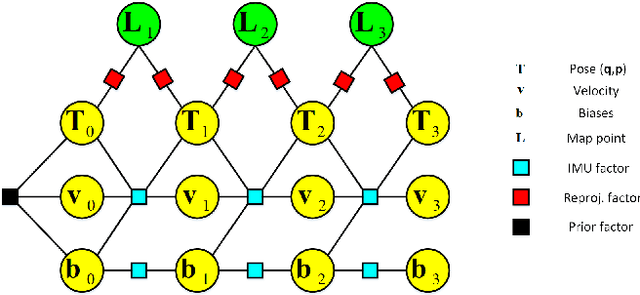

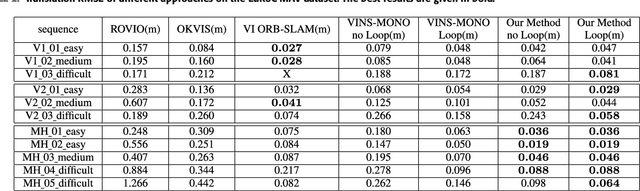

Accurate Monocular Visual-inertial SLAM using a Map-assisted EKF Approach

Mar 31, 2018

Abstract: This paper presents a novel tightly-coupled monocular visual-inertial Simultaneous Localization and Mapping algorithm, which provides accurate and robust localization within a globally consistent map in real time on a standard CPU. This is achieved by first running a visual-inertial extended Kalman filter (EKF) to provide motion estimates at a high rate. However, the filter becomes inconsistent due to the well-known linearization issues, so we perform a keyframe-based visual-inertial bundle adjustment to improve the consistency and accuracy of the system. In addition, a loop closure detection and correction module is added to eliminate the accumulated drift when revisiting an area. Finally, the optimized motion estimates and map are fed back to the EKF-based visual-inertial odometry module, so that the inconsistency and estimation error of the EKF estimator are reduced. In this way, the system can continuously provide reliable motion estimates during long-term operation. The performance of the algorithm is validated on public datasets and in real-world experiments, which demonstrate the superiority of the proposed algorithm.
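The predict and update cycle that a filter-based front end runs at a high rate can be illustrated with a scalar, linear Kalman filter. This is a toy sketch only: the filter in the paper is a visual-inertial EKF over a much larger nonlinear state, and the function names `kf_predict` and `kf_update` are hypothetical. Under the same simplification, feeding back an optimized estimate would amount to overwriting the pair `(x, p)` with the optimized value and its variance, which is an assumption about the mechanism rather than a description of it.

```python
def kf_predict(x, p, u, q):
    """Propagate state x and variance p with motion increment u and process noise q."""
    return x + u, p + q


def kf_update(x, p, z, r):
    """Correct state x with a direct measurement z of variance r."""
    k = p / (p + r)  # Kalman gain
    return x + k * (z - x), (1.0 - k) * p


# One update followed by one prediction, starting from x = 0 with variance 1.
x, p = kf_update(0.0, 1.0, z=2.0, r=1.0)
x_pred, p_pred = kf_predict(x, p, u=0.5, q=0.1)
```

The variance shrinks on every update and grows on every prediction, which is why periodic corrections from a more accurate back end help keep the filter estimate reliable over long runs.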
