Mehrab Hamidi

AI-Med Group, AI Innovation Center, Sharif University of Technology, Tehran, Iran, DML Lab, Department of Computer Engineering, Sharif University of Technology, Tehran, Iran

Interpretability in Action: Exploratory Analysis of VPT, a Minecraft Agent

Jul 16, 2024

Abstract: Understanding the mechanisms behind decisions taken by large foundation models in sequential decision-making tasks is critical to ensuring that such systems operate transparently and safely. In this work, we perform exploratory analysis on the Video PreTraining (VPT) Minecraft-playing agent, one of the largest open-source vision-based agents. We aim to illuminate its reasoning mechanisms by applying various interpretability techniques. First, we analyze the attention mechanism while the agent solves its training task: crafting a diamond pickaxe. The agent pays attention to the last four frames and several key-frames further back in its six-second memory. This is a possible mechanism for maintaining coherence in a task that takes 3-10 minutes, despite the short memory span. Second, we perform various interventions, which help us uncover a worrying case of goal misgeneralization: VPT mistakenly identifies a villager wearing brown clothes as a tree trunk when the villager is positioned stationary under green tree leaves, and punches it to death.

Reverse Engineering Deep ReLU Networks An Optimization-based Algorithm

Dec 07, 2023

Abstract: Reverse engineering deep ReLU networks is a critical problem in understanding the complex behavior and interpretability of neural networks. In this research, we present a novel method for reconstructing deep ReLU networks by leveraging convex optimization techniques and a sampling-based approach. Our method begins by sampling points in the input space and querying the black-box model to obtain the corresponding hyperplanes. We then define a convex optimization problem with carefully chosen constraints and conditions to guarantee its convexity. The objective function is designed to minimize the discrepancy between the reconstructed network's output and the target model's output, subject to the constraints. We employ gradient descent to optimize the objective function, incorporating L1 or L2 regularization as needed to encourage sparse or smooth solutions. Our research contributes to the growing body of work on reverse engineering deep ReLU networks and paves the way for new advancements in neural network interpretability and security.
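The sample, query, and fit loop described in this abstract can be illustrated with a small sketch. This is not the paper's algorithm: the abstract does not give its convex formulation or constraints, so the code below swaps in a plain (non-convex) mean-squared-error fit of a one-hidden-layer student network, trained by gradient descent with an optional L1 penalty. The names `make_black_box` and `fit_student`, the network sizes, and all hyperparameters are assumptions chosen for illustration.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def make_black_box(rng, d_in=2, d_hidden=4):
    """Hypothetical target: a hidden one-hidden-layer ReLU network we may only query."""
    W = rng.normal(size=(d_hidden, d_in))
    b = rng.normal(size=d_hidden)
    v = rng.normal(size=d_hidden)

    def query(X):
        return relu(X @ W.T + b) @ v

    return query


def fit_student(X, y, d_hidden=8, lr=0.02, steps=1000, l1=1e-4, seed=0):
    """Fit a student ReLU network to (X, y) by gradient descent on MSE + L1 penalty."""
    rng = np.random.default_rng(seed)
    n, d_in = X.shape
    W = rng.normal(scale=0.5, size=(d_hidden, d_in))
    b = np.zeros(d_hidden)
    v = rng.normal(scale=0.5, size=d_hidden)
    history = []  # mean squared error before each update
    for _ in range(steps):
        pre = X @ W.T + b                 # pre-activations, shape (n, d_hidden)
        act = relu(pre)
        err = act @ v - y                 # prediction error, shape (n,)
        history.append(float(np.mean(err ** 2)))
        g_out = 2.0 * err / n             # d(MSE)/d(prediction)
        grad_v = act.T @ g_out + l1 * np.sign(v)
        g_pre = np.outer(g_out, v) * (pre > 0)   # backprop through ReLU
        grad_W = g_pre.T @ X + l1 * np.sign(W)
        grad_b = g_pre.sum(axis=0)
        W -= lr * grad_W
        b -= lr * grad_b
        v -= lr * grad_v
    return (W, b, v), history


# Sample points in the input space, query the black box, then fit the student.
black_box = make_black_box(np.random.default_rng(0))
X = np.random.default_rng(1).uniform(-1.0, 1.0, size=(256, 2))
y = black_box(X)
params, history = fit_student(X, y)
print(f"MSE at start: {history[0]:.4f}, at end: {history[-1]:.4f}")
```

The L1 term here plays the role the abstract assigns to regularization (encouraging sparse weights); replacing `l1 * np.sign(...)` with a multiple of the weights themselves would give the L2 variant.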

Accurate and Rapid Diagnosis of COVID-19 Pneumonia with Batch Effect Removal of Chest CT-Scans and Interpretable Artificial Intelligence

Nov 23, 2020

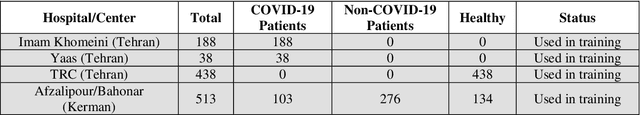

Abstract: Since late 2019, COVID-19 has spread across the world and caused the deaths of many people. The high transmission rate of the virus demands the rapid identification of infected patients to reduce the spread of the disease. The current gold-standard test, Reverse-Transcription Polymerase Chain Reaction (RT-PCR), suffers from a high rate of false negatives. Diagnosis from CT-scan images, an alternative with higher accuracy and sensitivity, faces the challenge of distinguishing COVID-19 from other lung diseases, which demands expert radiologists. In peak times, artificial intelligence (AI) based diagnostic systems can help radiologists accelerate the process of diagnosis, increase its accuracy, and understand the severity of the disease. We designed an interpretable deep neural network to distinguish healthy people, patients with COVID-19, and patients with other lung diseases from chest CT-scan images. Our model also detects the infected areas of the lung and is able to calculate the percentage of the infected volume. We preprocessed the images to eliminate the batch effect related to CT-scan devices and medical centers, and then adopted a weakly supervised method to train the model without any labels for infected regions or any tags for the slices of the CT-scan images that showed signs of disease. We trained and evaluated the model on a large dataset of 3359 CT-scan images from 6 medical centers. The model reached a sensitivity of 97.75% and a specificity of 87% in separating healthy people from the diseased, and a sensitivity of 98.15% and a specificity of 81.03% in distinguishing COVID-19 from other diseases. The model also reached similar metrics on 1435 samples from 6 unseen medical centers, which demonstrates its generalizability. The performance of the model on a large, diverse dataset, together with its generalizability and interpretability, makes it suitable for use as a diagnostic system.
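For readers unfamiliar with the metrics quoted in this abstract, the following minimal sketch shows how sensitivity, specificity, and an infected-volume percentage are conventionally computed. The function names and the toy inputs are hypothetical and are not taken from the paper; the abstract does not describe how the authors implemented these calculations.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels, where 1 = diseased."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    sensitivity = tp / (tp + fn)  # fraction of diseased cases that are detected
    specificity = tn / (tn + fp)  # fraction of healthy cases that are cleared
    return sensitivity, specificity


def infected_volume_percent(lung_mask, infection_mask):
    """Percentage of lung voxels flagged as infected, given flat 0/1 voxel masks."""
    lung_voxels = sum(lung_mask)
    infected = sum(1 for l, i in zip(lung_mask, infection_mask) if l and i)
    return 100.0 * infected / lung_voxels


# Toy data for illustration only (not results from the paper).
sens, spec = sensitivity_specificity([1, 1, 1, 0, 0], [1, 1, 0, 0, 1])
percent = infected_volume_percent([1] * 8, [1, 1, 0, 0, 0, 0, 0, 0])
print(sens, spec, percent)
```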
