Md. Mahfuzur Rahman

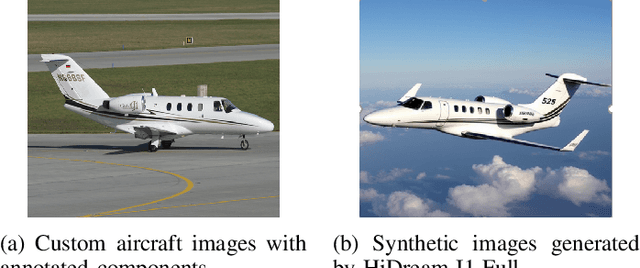

Physics-Based Benchmarking Metrics for Multimodal Synthetic Images

Nov 19, 2025

Abstract: Current state-of-the-art measures such as BLEU, CIDEr, VQA score, SigLIP-2, and CLIPScore often fail to capture semantic or structural accuracy, especially in domain-specific or context-dependent scenarios. To address this, the paper proposes a Physics-Constrained Multimodal Data Evaluation (PCMDE) metric that combines large language models with reasoning, knowledge-based mapping, and vision-language models. The architecture comprises three main stages: (1) extraction of spatial and semantic multimodal features through object detection and vision-language models (VLMs); (2) Confidence-Weighted Component Fusion for adaptive component-level validation; and (3) physics-guided reasoning with large language models to enforce structural and relational constraints (e.g., alignment, position, consistency).
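The abstract does not spell out how Confidence-Weighted Component Fusion is computed. As a minimal sketch, assuming it behaves like a confidence-weighted average of per-component scores, stage (2) could look like the following. The names `ComponentScore` and `confidence_weighted_fusion` are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class ComponentScore:
    """One evaluated component (hypothetical structure, not from the paper)."""
    name: str
    score: float       # component-level score, assumed to lie in [0, 1]
    confidence: float  # confidence in that score, assumed to lie in [0, 1]


def confidence_weighted_fusion(components):
    """Return the confidence-weighted mean of the component scores.

    Assumption: fusion is a simple weighted average. The actual PCMDE
    fusion rule may differ.
    """
    total_confidence = sum(c.confidence for c in components)
    if total_confidence == 0:
        # No component is trusted, so there is nothing to fuse.
        return 0.0
    weighted_sum = sum(c.score * c.confidence for c in components)
    return weighted_sum / total_confidence


if __name__ == "__main__":
    components = [
        ComponentScore("object_detection", score=0.9, confidence=0.8),
        ComponentScore("vlm_caption", score=0.5, confidence=0.2),
    ]
    print(confidence_weighted_fusion(components))
```

In this form a low-confidence component contributes little to the fused score, which is one plausible reading of "adaptive component-level validation".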

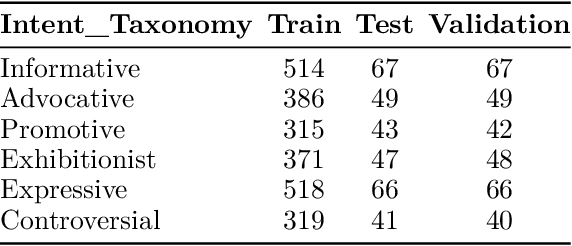

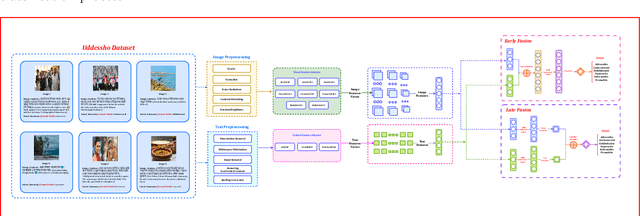

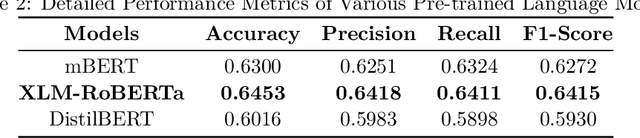

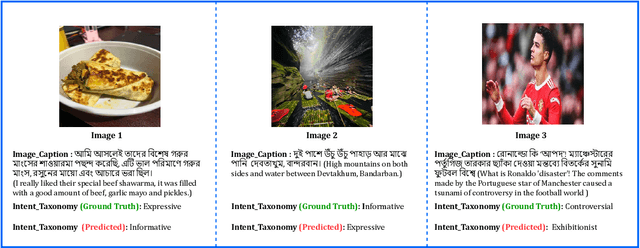

Uddessho: An Extensive Benchmark Dataset for Multimodal Author Intent Classification in Low-Resource Bangla Language

Sep 14, 2024

Abstract: With the increasing popularity of daily information sharing and acquisition on the Internet, this paper introduces an innovative approach for intent classification in the Bangla language, focusing on social media posts where individuals share their thoughts and opinions. The proposed method leverages multimodal data with particular emphasis on authorship identification, aiming to understand the underlying purpose behind textual content, especially in the context of varied user-generated posts on social media. Current methods often face challenges in low-resource languages like Bangla, particularly when author traits are intricately linked with intent, as observed in social media posts. To address this, we present the Multimodal-based Author Bangla Intent Classification (MABIC) framework, which uses text and images to gain deeper insights into the conveyed intentions. We have created a dataset named "Uddessho," comprising 3,048 instances sourced from social media. Our methodology comprises two approaches for classifying textual intent and multimodal author intent, incorporating early fusion and late fusion techniques. In our experiments, the unimodal approach achieved an accuracy of 64.53% in interpreting Bangla textual intent. In contrast, our multimodal approach significantly outperformed traditional unimodal methods, achieving an accuracy of 76.19%. This represents an improvement of 11.66 percentage points. To the best of our knowledge, this is the first research work on multimodal author intent classification for social media posts in the low-resource Bangla language.
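The abstract names early fusion and late fusion without describing them. The sketch below shows the generic distinction, assuming early fusion concatenates text and image features before a single classifier, while late fusion combines the class probabilities of separate per-modality classifiers. The function names and the equal default weighting are illustrative assumptions, not the MABIC implementation.

```python
def early_fusion(text_features, image_features):
    """Concatenate per-modality feature vectors into one joint vector.

    A single downstream classifier (not shown) would consume the result.
    """
    return list(text_features) + list(image_features)


def late_fusion(text_probs, image_probs, text_weight=0.5):
    """Blend class probabilities produced by two separate classifiers.

    text_weight is a hypothetical mixing parameter; 0.5 averages the two.
    """
    if len(text_probs) != len(image_probs):
        raise ValueError("both modalities must score the same classes")
    image_weight = 1.0 - text_weight
    return [
        text_weight * t + image_weight * i
        for t, i in zip(text_probs, image_probs)
    ]


def predict(probs):
    """Return the index of the highest-probability class."""
    return max(range(len(probs)), key=probs.__getitem__)


if __name__ == "__main__":
    # Made-up numbers for two intent classes, for illustration only.
    fused = late_fusion([0.6, 0.4], [0.2, 0.8])
    print(fused, predict(fused))
    print(early_fusion([1.0, 2.0], [3.0]))
```

In the made-up example the text classifier alone prefers class 0, but the image classifier is confident enough in class 1 to change the fused decision, which illustrates why a second modality can help.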

UAV (Unmanned Aerial Vehicles): Diverse Applications of UAV Datasets in Segmentation, Classification, Detection, and Tracking

Sep 05, 2024

Abstract: Unmanned Aerial Vehicles (UAVs) have greatly revolutionized the process of gathering and analyzing data in diverse research domains, providing unmatched adaptability and effectiveness. This paper presents a thorough examination of UAV datasets, emphasizing their wide range of applications and progress. UAV datasets consist of various types of data, such as satellite imagery, images captured by drones, and videos. These datasets can be categorized as either unimodal or multimodal, offering a wide range of detailed and comprehensive information. They play a crucial role in disaster damage assessment, aerial surveillance, object recognition, and tracking, and they facilitate the development of sophisticated models for tasks like semantic segmentation, pose estimation, vehicle re-identification, and gesture recognition. By leveraging UAV datasets, researchers can significantly enhance the capabilities of computer vision models, thereby advancing technology and improving our understanding of complex, dynamic environments from an aerial perspective. This review aims to encapsulate the multifaceted utility of UAV datasets, emphasizing their pivotal role in driving innovation and practical applications in multiple domains.

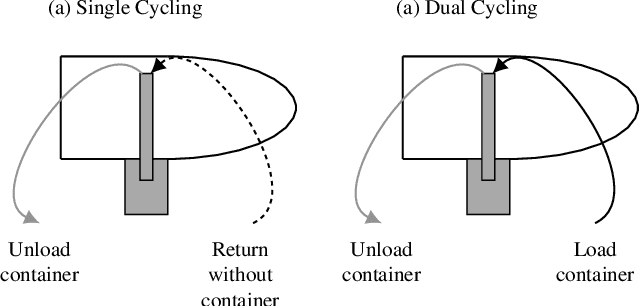

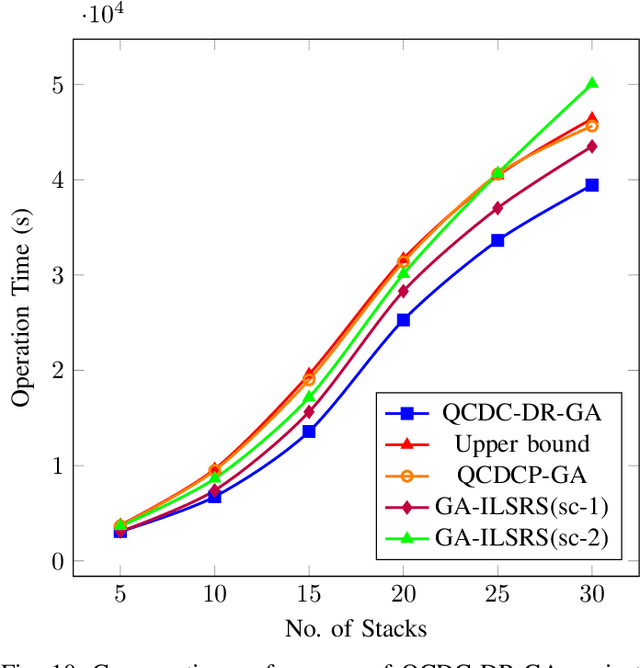

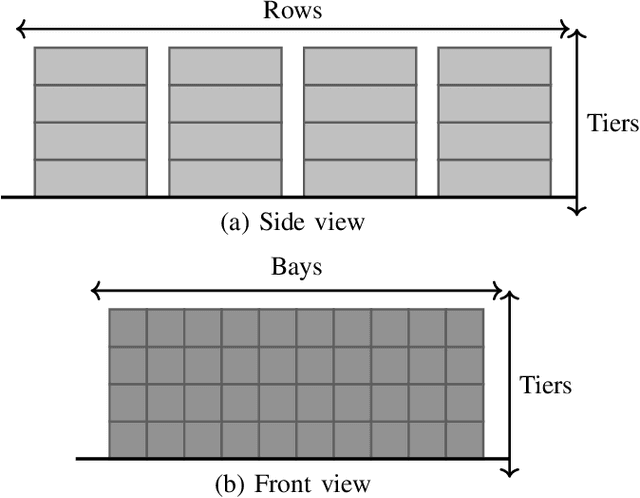

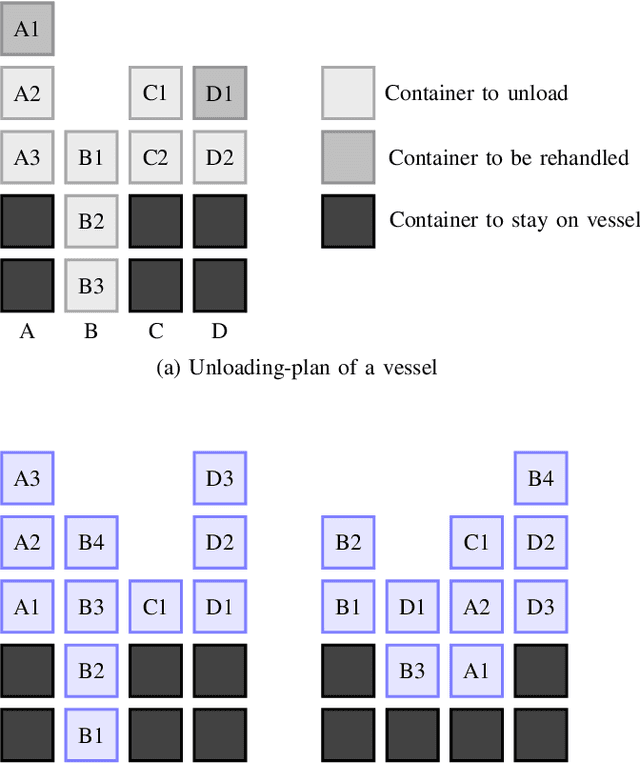

Optimizing Container Loading and Unloading through Dual-Cycling and Dockyard Rehandle Reduction Using a Hybrid Genetic Algorithm

Jun 12, 2024

Abstract: This paper addresses the optimization of container unloading and loading operations at ports, integrating quay-crane dual-cycling (QCDC) with dockyard rehandle minimization. We present a unified model encompassing both operations: unloading and loading ship containers by quay crane, and reducing dockyard rehandles while loading the ship. We recognize that optimizing one aspect in isolation can lead to suboptimal outcomes due to interdependencies. Specifically, optimizing unloading sequences for minimal operation time may inadvertently increase dockyard rehandles during loading, and vice versa. To address this NP-hard problem, we propose a hybrid genetic algorithm (GA), QCDC-DR-GA, comprising one-dimensional and two-dimensional GA components. Our model consistently outperforms four state-of-the-art methods in maximizing dual cycles and minimizing dockyard rehandles. Compared to those methods, it reduced total operation time by 15-20% for large vessels. Statistical validation through a two-tailed paired t-test confirms the superiority of QCDC-DR-GA at a 5% significance level. The approach effectively combines QCDC optimization with dockyard rehandle minimization, optimizing the total unloading-loading time. Results underscore the inefficiency of separately optimizing QCDC and dockyard rehandles. Fragmented approaches, such as QCDC Scheduling Optimized by bi-level GA and GA-ILSRS (Scenario 2), show limited improvement compared to QCDC-DR-GA. As in GA-ILSRS (Scenario 1), neglecting dual-cycle optimization leads to performance inferior to that of QCDC-DR-GA. This emphasizes the necessity of simultaneously considering both aspects for optimal resource utilization and overall operational efficiency.
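The abstract reports a two-tailed paired t-test but does not show the calculation. The sketch below computes the standard paired t statistic from two matched lists of operation times, using only the Python standard library. The function name `paired_t_statistic` and the sample numbers are made up for illustration and are not results from the paper.

```python
import math
import statistics


def paired_t_statistic(baseline, proposed):
    """Return (t, degrees_of_freedom) for paired samples.

    t = mean(d) / (stdev(d) / sqrt(n)), where d holds the per-instance
    differences baseline - proposed and stdev is the sample standard
    deviation. The spread of the differences must be non-zero.
    """
    if len(baseline) != len(proposed):
        raise ValueError("paired samples must have equal length")
    n = len(baseline)
    if n < 2:
        raise ValueError("need at least two pairs")
    diffs = [b - p for b, p in zip(baseline, proposed)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)
    if sd_diff == 0:
        raise ValueError("differences have zero variance")
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


if __name__ == "__main__":
    # Hypothetical operation times on the same three problem instances.
    baseline_times = [11.0, 12.0, 13.0]
    proposed_times = [10.0, 10.0, 10.0]
    t, dof = paired_t_statistic(baseline_times, proposed_times)
    print(f"t = {t:.4f}, degrees of freedom = {dof}")
```

For a two-tailed test at the 5% level, the absolute value of t would then be compared against the critical value of the t distribution with n - 1 degrees of freedom, taken from a table or a statistics library.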

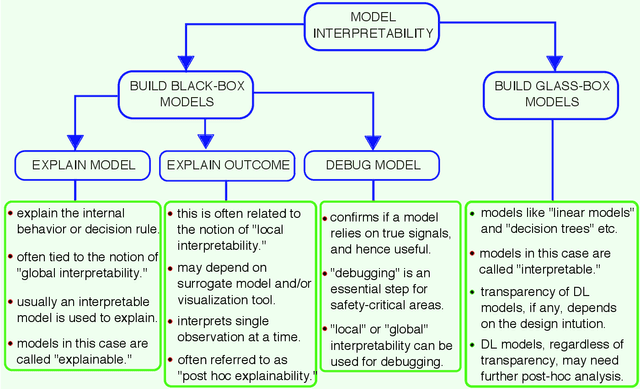

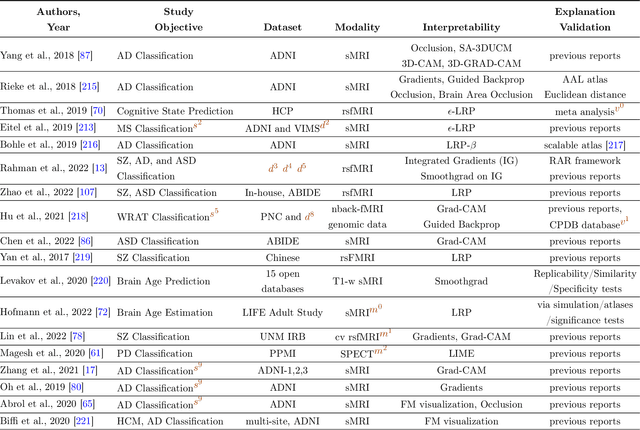

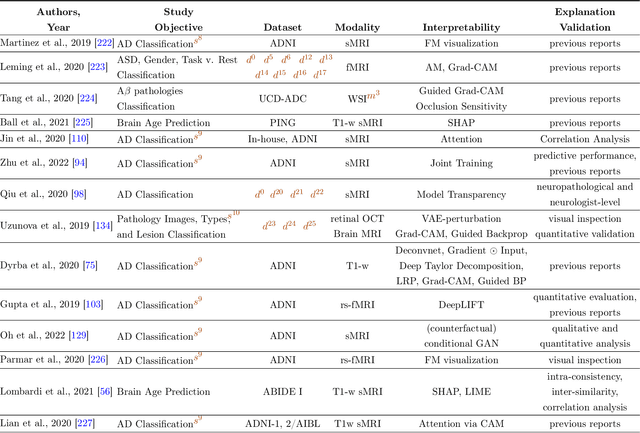

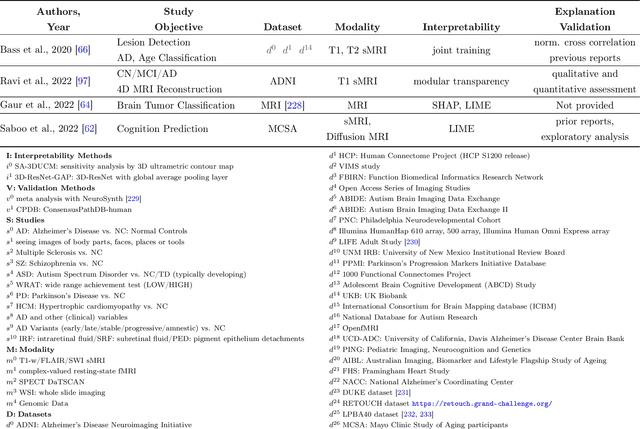

Looking deeper into interpretable deep learning in neuroimaging: a comprehensive survey

Jul 14, 2023

Abstract: Deep learning (DL) models have been popular due to their ability to learn directly from raw data in an end-to-end paradigm, alleviating the concern of a separate, error-prone feature extraction phase. Recent DL-based neuroimaging studies have also shown a noticeable performance improvement over traditional machine learning algorithms. However, the lack of transparency in these models remains an obstacle to their successful deployment in real-world applications. In recent years, Explainable AI (XAI) has undergone a surge of developments, mainly to gain intuition about how models reach their decisions, which is essential for safety-critical domains such as healthcare, finance, and law enforcement. While the interpretability domain is advancing noticeably, researchers are still unclear about what aspect of model learning a post hoc method reveals and how to validate its reliability. This paper comprehensively reviews interpretable deep learning models in the neuroimaging domain. First, we summarize the current status of interpretability resources in general, focusing on the progression of methods, associated challenges, and opinions. Second, we discuss how multiple recent neuroimaging studies leveraged model interpretability to capture the anatomical and functional brain alterations most relevant to model predictions. Finally, we discuss the limitations of current practices and offer insights and guidance on how to steer future research toward making deep learning models substantially interpretable, and thus advance scientific understanding of brain disorders.
