Matthias Rätsch

NVP-HRI: Zero Shot Natural Voice and Posture-based Human-Robot Interaction via Large Language Model

Mar 12, 2025Abstract:Effective Human-Robot Interaction (HRI) is crucial for future service robots in aging societies. Existing solutions are biased toward only well-trained objects, creating a gap when dealing with new objects. Currently, HRI systems using predefined gestures or language tokens for pretrained objects pose challenges for all individuals, especially elderly ones. These challenges include difficulties in recalling commands, memorizing hand gestures, and learning new names. This paper introduces NVP-HRI, an intuitive multi-modal HRI paradigm that combines voice commands and deictic posture. NVP-HRI utilizes the Segment Anything Model (SAM) to analyze visual cues and depth data, enabling precise structural object representation. Through a pre-trained SAM network, NVP-HRI allows interaction with new objects via zero-shot prediction, even without prior knowledge. NVP-HRI also integrates with a large language model (LLM) for multimodal commands, coordinating them with object selection and scene distribution in real time for collision-free trajectory solutions. We also regulate the action sequence with the essential control syntax to reduce LLM hallucination risks. The evaluation of diverse real-world tasks using a Universal Robot showcased up to 59.2\% efficiency improvement over traditional gesture control, as illustrated in the video https://youtu.be/EbC7al2wiAc. Our code and design will be openly available at https://github.com/laiyuzhi/NVP-HRI.git.

NMM-HRI: Natural Multi-modal Human-Robot Interaction with Voice and Deictic Posture via Large Language Model

Jan 01, 2025

Abstract:Translating human intent into robot commands is crucial for the future of service robots in an aging society. Existing Human-Robot Interaction (HRI) systems relying on gestures or verbal commands are impractical for the elderly due to difficulties with complex syntax or sign language. To address the challenge, this paper introduces a multi-modal interaction framework that combines voice and deictic posture information to create a more natural HRI system. The visual cues are first processed by the object detection model to gain a global understanding of the environment, and then bounding boxes are estimated based on depth information. By using a large language model (LLM) with voice-to-text commands and temporally aligned selected bounding boxes, robot action sequences can be generated, while key control syntax constraints are applied to avoid potential LLM hallucination issues. The system is evaluated on real-world tasks with varying levels of complexity using a Universal Robots UR3e manipulator. Our method demonstrates significantly better performance in HRI in terms of accuracy and robustness. To benefit the research community and the general public, we will make our code and design open-source.

Ethically aligned Deep Learning: Unbiased Facial Aesthetic Prediction

Nov 09, 2021

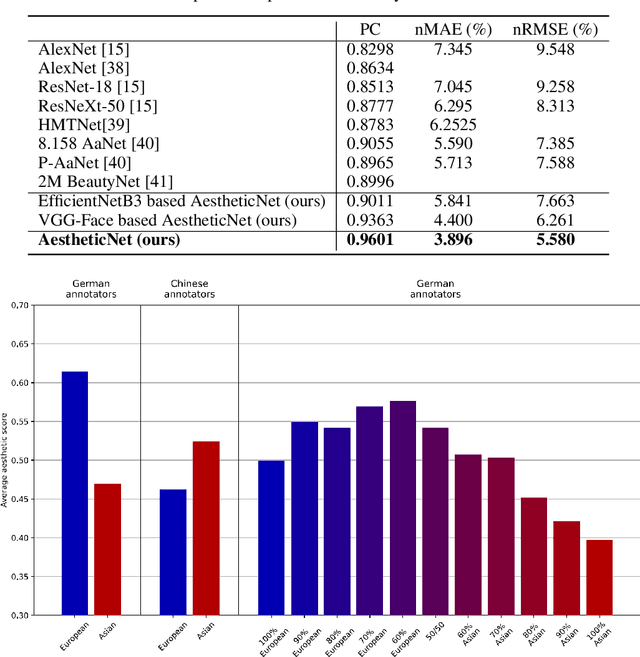

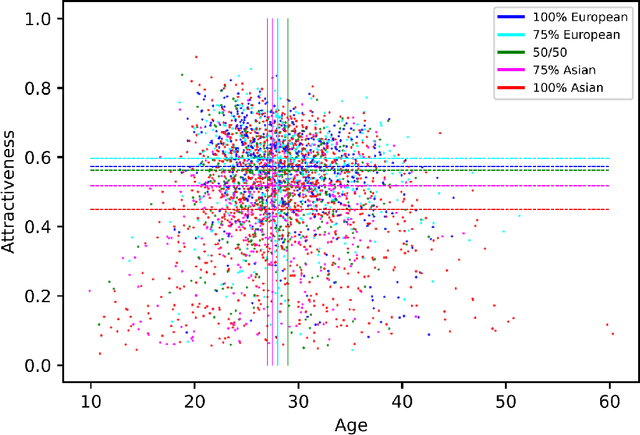

Abstract:Facial beauty prediction (FBP) aims to develop a machine that automatically makes facial attractiveness assessment. In the past those results were highly correlated with human ratings, therefore also with their bias in annotating. As artificial intelligence can have racist and discriminatory tendencies, the cause of skews in the data must be identified. Development of training data and AI algorithms that are robust against biased information is a new challenge for scientists. As aesthetic judgement usually is biased, we want to take it one step further and propose an Unbiased Convolutional Neural Network for FBP. While it is possible to create network models that can rate attractiveness of faces on a high level, from an ethical point of view, it is equally important to make sure the model is unbiased. In this work, we introduce AestheticNet, a state-of-the-art attractiveness prediction network, which significantly outperforms competitors with a Pearson Correlation of 0.9601. Additionally, we propose a new approach for generating a bias-free CNN to improve fairness in machine learning.

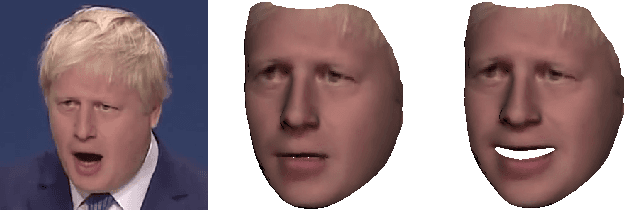

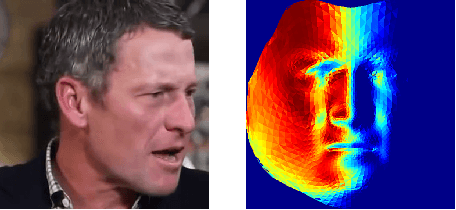

Evaluation of Dense 3D Reconstruction from 2D Face Images in the Wild

Apr 20, 2018

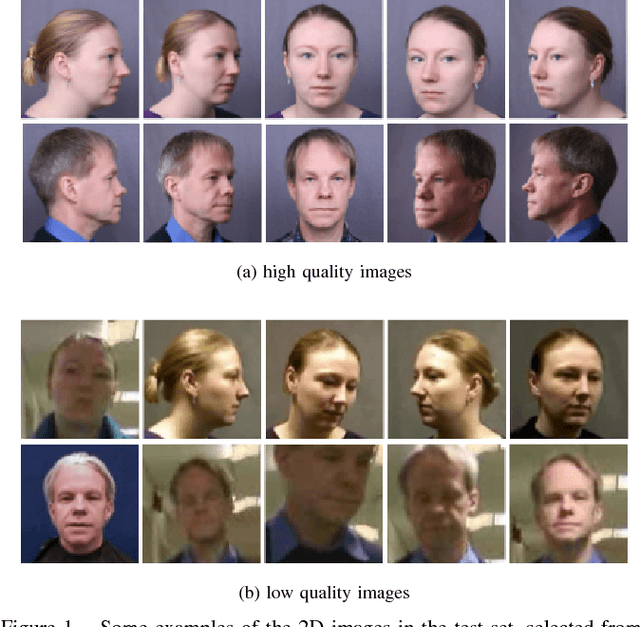

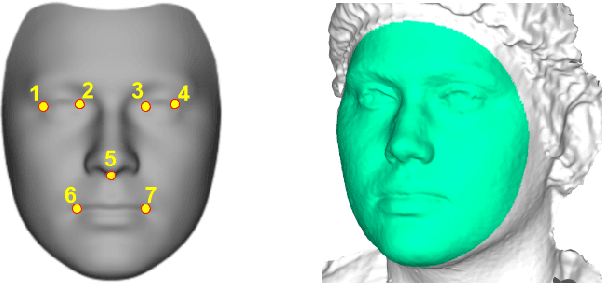

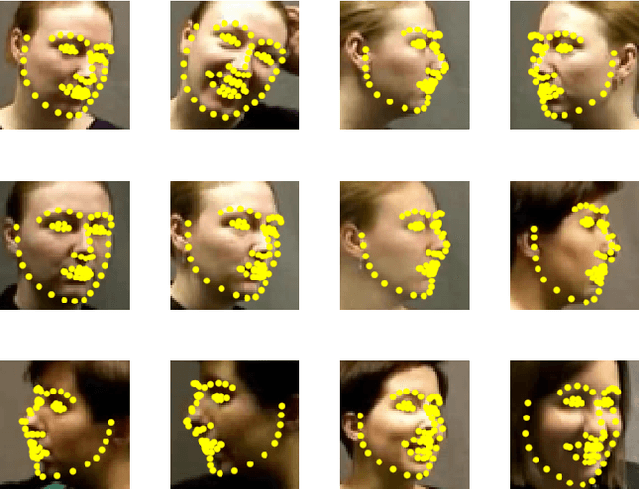

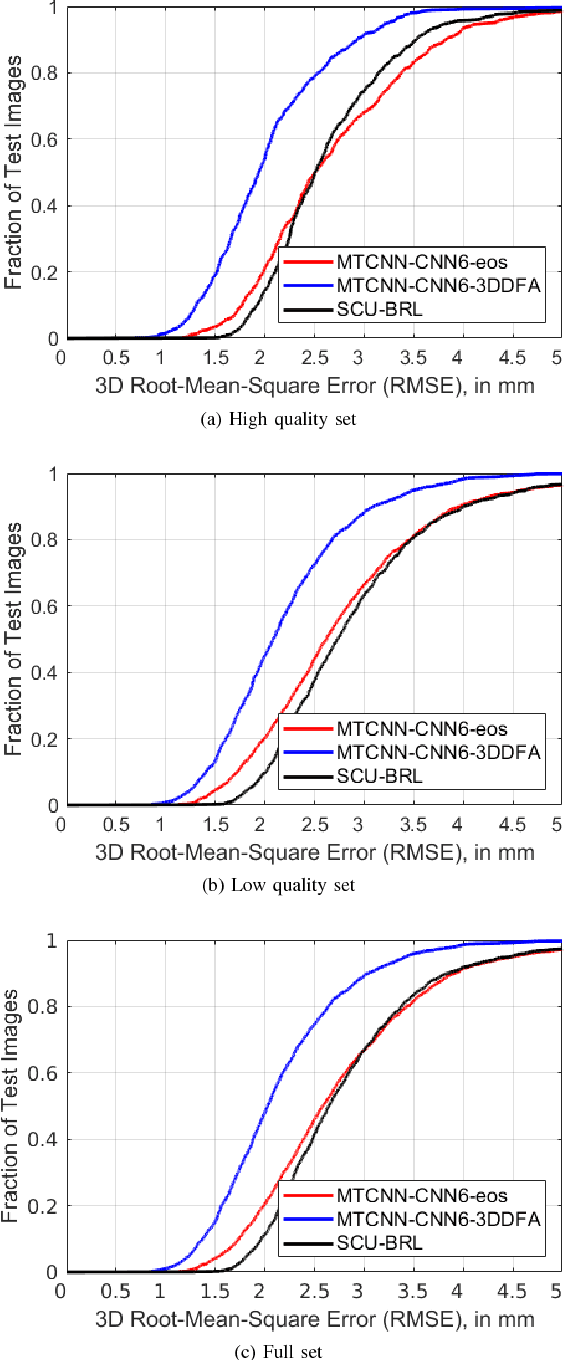

Abstract:This paper investigates the evaluation of dense 3D face reconstruction from a single 2D image in the wild. To this end, we organise a competition that provides a new benchmark dataset that contains 2000 2D facial images of 135 subjects as well as their 3D ground truth face scans. In contrast to previous competitions or challenges, the aim of this new benchmark dataset is to evaluate the accuracy of a 3D dense face reconstruction algorithm using real, accurate and high-resolution 3D ground truth face scans. In addition to the dataset, we provide a standard protocol as well as a Python script for the evaluation. Last, we report the results obtained by three state-of-the-art 3D face reconstruction systems on the new benchmark dataset. The competition is organised along with the 2018 13th IEEE Conference on Automatic Face & Gesture Recognition.

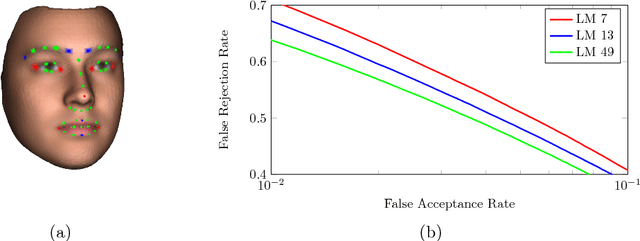

A 3D Face Modelling Approach for Pose-Invariant Face Recognition in a Human-Robot Environment

Jun 01, 2016

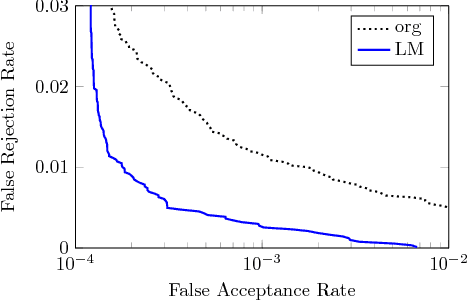

Abstract:Face analysis techniques have become a crucial component of human-machine interaction in the fields of assistive and humanoid robotics. However, the variations in head-pose that arise naturally in these environments are still a great challenge. In this paper, we present a real-time capable 3D face modelling framework for 2D in-the-wild images that is applicable for robotics. The fitting of the 3D Morphable Model is based exclusively on automatically detected landmarks. After fitting, the face can be corrected in pose and transformed back to a frontal 2D representation that is more suitable for face recognition. We conduct face recognition experiments with non-frontal images from the MUCT database and uncontrolled, in the wild images from the PaSC database, the most challenging face recognition database to date, showing an improved performance. Finally, we present our SCITOS G5 robot system, which incorporates our framework as a means of image pre-processing for face analysis.

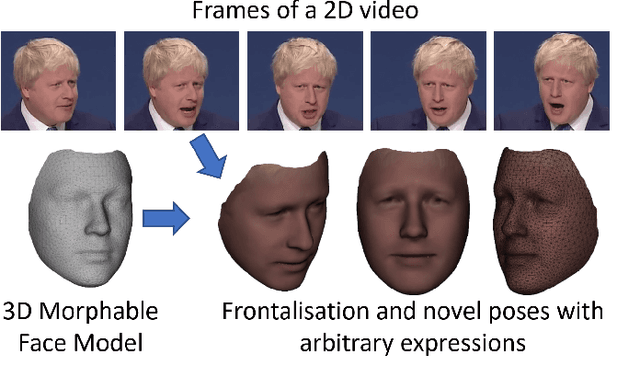

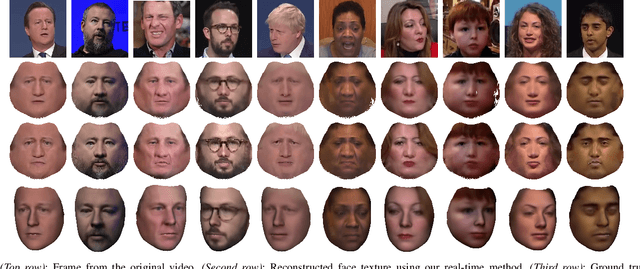

3D Face Tracking and Texture Fusion in the Wild

May 22, 2016

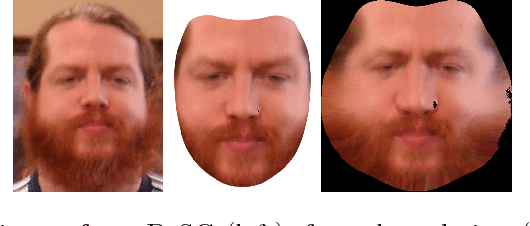

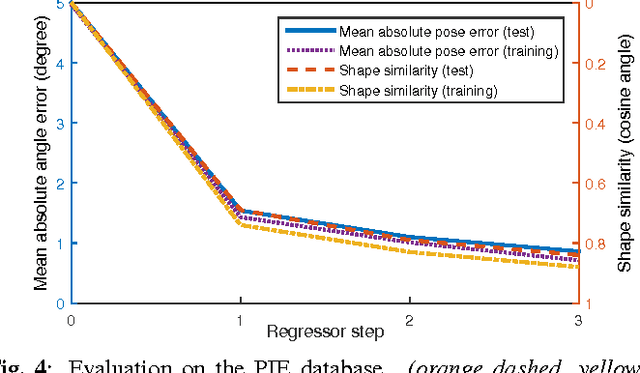

Abstract:We present a fully automatic approach to real-time 3D face reconstruction from monocular in-the-wild videos. With the use of a cascaded-regressor based face tracking and a 3D Morphable Face Model shape fitting, we obtain a semi-dense 3D face shape. We further use the texture information from multiple frames to build a holistic 3D face representation from the video frames. Our system is able to capture facial expressions and does not require any person-specific training. We demonstrate the robustness of our approach on the challenging 300 Videos in the Wild (300-VW) dataset. Our real-time fitting framework is available as an open source library at http://4dface.org.

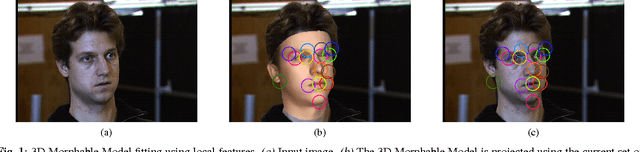

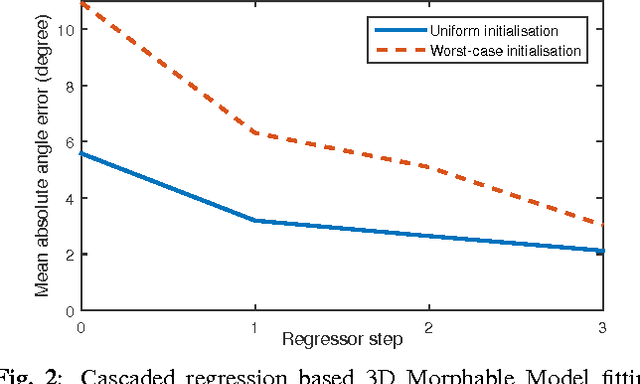

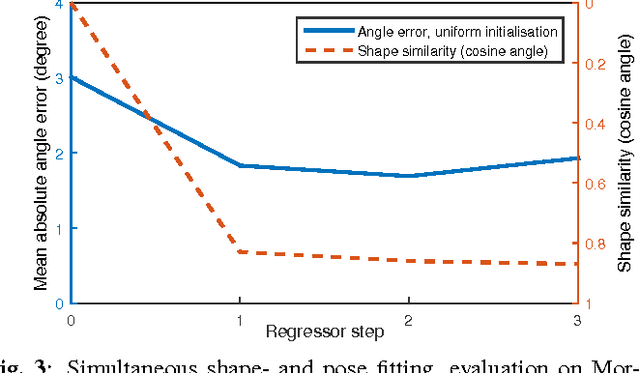

Fitting 3D Morphable Models using Local Features

Mar 08, 2015

Abstract:In this paper, we propose a novel fitting method that uses local image features to fit a 3D Morphable Model to 2D images. To overcome the obstacle of optimising a cost function that contains a non-differentiable feature extraction operator, we use a learning-based cascaded regression method that learns the gradient direction from data. The method allows to simultaneously solve for shape and pose parameters. Our method is thoroughly evaluated on Morphable Model generated data and first results on real data are presented. Compared to traditional fitting methods, which use simple raw features like pixel colour or edge maps, local features have been shown to be much more robust against variations in imaging conditions. Our approach is unique in that we are the first to use local features to fit a Morphable Model. Because of the speed of our method, it is applicable for realtime applications. Our cascaded regression framework is available as an open source library (https://github.com/patrikhuber).

* Submitted to ICIP 2015; 4 pages, 4 figures

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge