Matthew L. Elwin

LeVR: A Modular VR Teleoperation Framework for Imitation Learning in Dexterous Manipulation

Sep 17, 2025

Abstract: We introduce LeVR, a modular software framework designed to bridge two critical gaps in robotic imitation learning. First, it provides robust and intuitive virtual reality (VR) teleoperation for data collection using robot arms paired with dexterous hands, addressing a common limitation in existing systems. Second, it natively integrates with the powerful LeRobot imitation learning (IL) framework, enabling the use of VR-based teleoperation data and streamlining the demonstration collection process. To demonstrate LeVR, we release LeFranX, an open-source implementation for the Franka FER arm and RobotEra XHand, two widely used research platforms. LeFranX delivers a seamless, end-to-end workflow from data collection to real-world policy deployment. We validate our system by collecting a public dataset of 100 expert demonstrations and use it to successfully fine-tune state-of-the-art visuomotor policies. We provide our open-source framework, implementation, and dataset to accelerate IL research for the robotics community.

Cooperative Payload Estimation by a Team of Mocobots

Feb 07, 2025

Abstract: Consider the following scenario: a human guides multiple mobile manipulators to grasp a common payload. For subsequent high-performance autonomous manipulation of the payload by the mobile manipulator team, or for collaborative manipulation with the human, the robots should be able to discover where the other robots are attached to the payload, as well as the payload's mass and inertial properties. In this paper, we describe a method for the robots to autonomously discover this information. The robots cooperatively manipulate the payload, and the twist, twist derivative, and wrench data at their grasp frames are used to estimate the transformation matrices between the grasp frames, the location of the payload's center of mass, and the payload's inertia matrix. The method is validated experimentally with a team of three mobile cobots, or mocobots.
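The paper's estimator uses twist, twist-derivative, and wrench data from several grasp frames. As a much simpler illustration of the center-of-mass part only, the sketch below swaps in a static, single-grasp-frame estimate: with the payload held at rest in several orientations, the weight f_i and moment tau_i measured at the grasp frame satisfy tau_i = r x f_i = -[f_i] r, which is linear in the unknown center-of-mass location r and can be solved by least squares. This is not the paper's method; the function names and the default g = 9.81 are assumptions for illustration.

```python
"""Static center-of-mass estimate from force/torque samples (simplified sketch,
not the dynamic multi-robot estimator described in the abstract)."""
from typing import List, Sequence


def skew(v: Sequence[float]) -> List[List[float]]:
    """Return the 3x3 skew-symmetric matrix [v] such that [v] w == v x w."""
    x, y, z = v
    return [[0.0, -z, y],
            [z, 0.0, -x],
            [-y, x, 0.0]]


def det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system a x = b with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("singular system: need at least two non-parallel force directions")
    out = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        out.append(det3(m) / d)
    return out


def estimate_mass_and_com(forces, torques, g: float = 9.81):
    """Least-squares payload mass and center of mass r in the grasp frame.

    Model (statics only, hypothetical setup): tau_i = r x f_i = -[f_i] r,
    where f_i is the payload weight expressed in the grasp frame and tau_i
    is the moment about the grasp-frame origin.
    """
    ata = [[0.0] * 3 for _ in range(3)]  # accumulates A^T A
    atb = [0.0] * 3                      # accumulates A^T tau
    for f, tau in zip(forces, torques):
        a = [[-e for e in row] for row in skew(f)]  # A_i = -[f_i]
        for i in range(3):
            atb[i] += sum(a[k][i] * tau[k] for k in range(3))
            for j in range(3):
                ata[i][j] += sum(a[k][i] * a[k][j] for k in range(3))
    r = solve3(ata, atb)
    # Mass from the average magnitude of the measured weight.
    mass = sum(sum(c * c for c in f) ** 0.5 for f in forces) / (len(forces) * g)
    return mass, r
```

A single orientation leaves the component of r along the gravity direction unobservable, which is why the solver rejects a rank-deficient system; the dynamic data used in the paper is one way to avoid relying on many static poses.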

Self-Healing Distributed Swarm Formation Control Using Image Moments

Dec 12, 2023

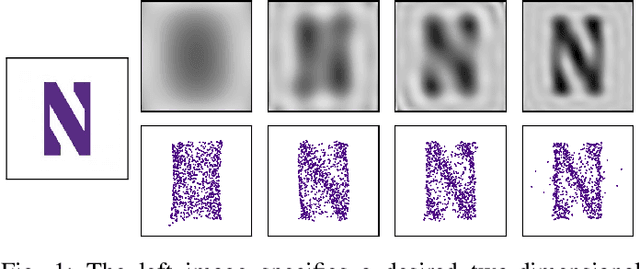

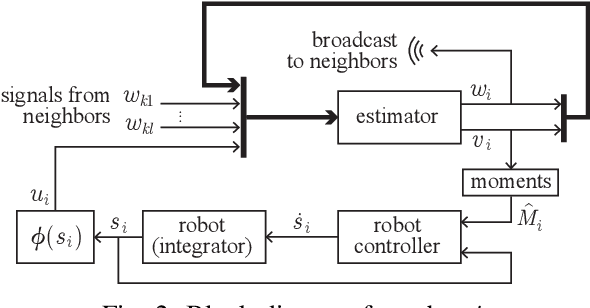

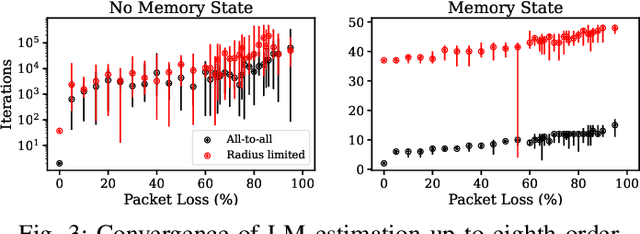

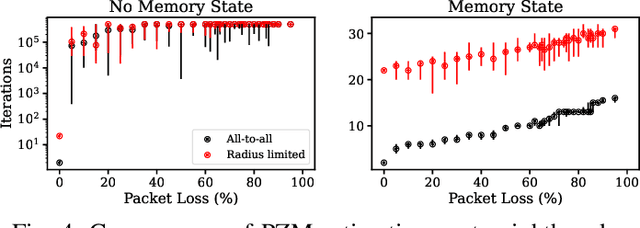

Abstract: Human-swarm interaction is facilitated by a low-dimensional encoding of the swarm formation, independent of the (possibly large) number of robots. We propose using image moments to encode two-dimensional formations of robots. Each robot knows the desired formation moments, and simultaneously estimates the current moments of the entire swarm while controlling its motion to better achieve the desired group moments. The estimator is a distributed optimization, requiring no centralized processing, and self-healing, meaning that the process is robust to initialization errors, packet drops, and robots being added to or removed from the swarm. Our experimental results with a swarm of 50 robots, suffering nearly 50% packet loss, show that distributed estimation and control of image moments effectively achieves desired swarm formations.
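To make the encoding concrete, the sketch below computes low-order image moments of a set of 2-D robot positions in one place: the robot count, the centroid, the normalized central second moments, and the principal-axis angle from the standard image-moment formula. It assumes each robot is a unit point mass and that moments are normalized by the robot count; the exact set of moments used in the paper, and its distributed self-healing estimator, are not reproduced here. The function name is hypothetical.

```python
import math
from typing import Iterable, Tuple


def formation_moments(points: Iterable[Tuple[float, float]]):
    """Return (n, cx, cy, mu20, mu11, mu02, theta) for 2-D robot positions.

    Assumption: each robot is a unit point mass, so the zeroth moment is the
    robot count, (cx, cy) is the centroid, and mu_pq are central second
    moments normalized by the count.
    """
    pts = list(points)
    n = len(pts)
    if n == 0:
        raise ValueError("empty formation")
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    mu20 = sum((x - cx) ** 2 for x, _ in pts) / n
    mu02 = sum((y - cy) ** 2 for _, y in pts) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in pts) / n
    # Orientation of the formation's principal axis.
    theta = 0.5 * math.atan2(2.0 * mu11, mu20 - mu02)
    return n, cx, cy, mu20, mu11, mu02, theta
```

Whether the swarm has 5 or 50 robots, the target (cx, cy, mu20, mu11, mu02) stays five numbers, which is the fixed-size encoding the abstract motivates.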

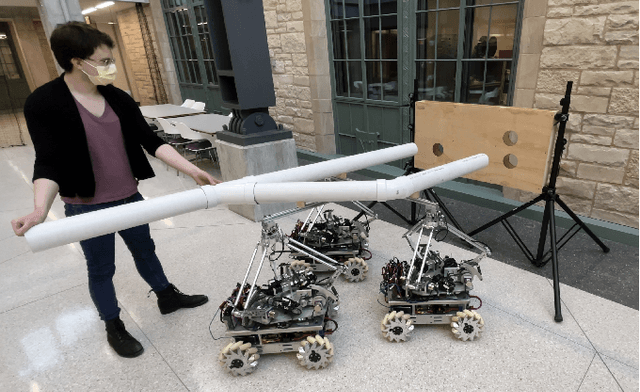

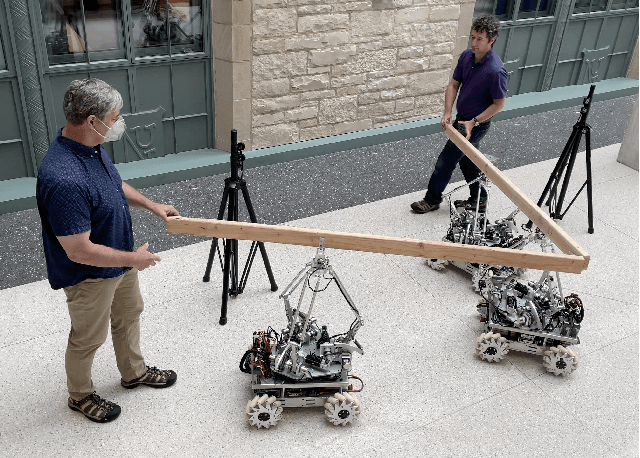

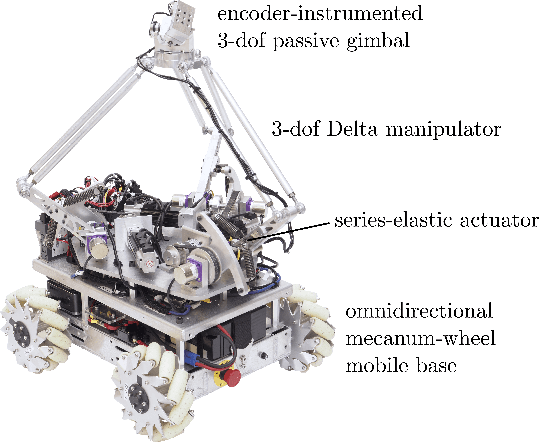

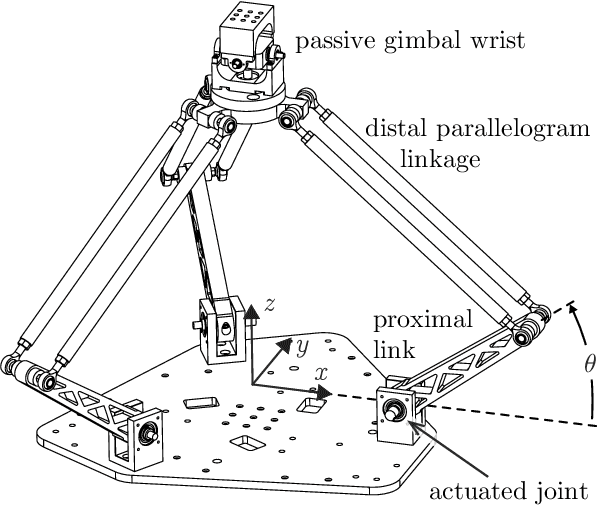

Human-Multirobot Collaborative Mobile Manipulation: the Omnid Mocobots

Jun 28, 2022

Abstract: The Omnid human-collaborative mobile manipulators are an experimental platform for testing control architectures for autonomous and human-collaborative multirobot mobile manipulation. An Omnid consists of a mecanum-wheel omnidirectional mobile base and a series-elastic Delta-type parallel manipulator, and it is a specific implementation of a broader class of mobile collaborative robots ("mocobots") suitable for safe human co-manipulation of delicate, flexible, and articulated payloads. Key features of mocobots include passive compliance, for the safety of the human and the payload, and high-fidelity end-effector force control independent of the potentially imprecise motions of the mobile base. We describe general considerations for the design of teams of mocobots; the design of the Omnids in light of these considerations; manipulator and mobile base controllers to achieve useful multirobot collaborative behaviors; and initial experiments in human-multirobot collaborative mobile manipulation of large, unwieldy payloads. For these experiments, the only communication among the humans and Omnids is mechanical, through the payload.

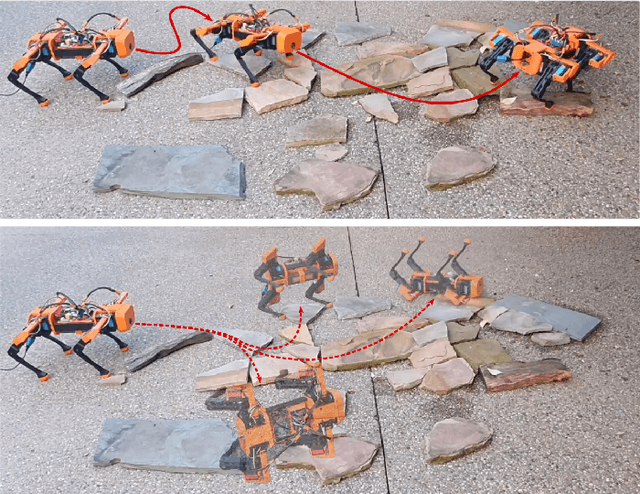

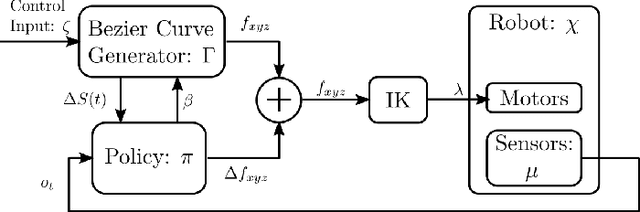

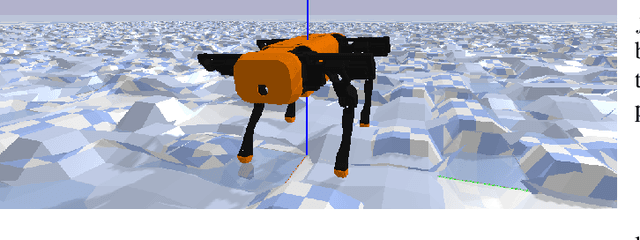

Dynamics and Domain Randomized Gait Modulation with Bezier Curves for Sim-to-Real Legged Locomotion

Oct 22, 2020

Abstract: We present a sim-to-real framework that uses dynamics and domain randomized offline reinforcement learning to enhance open-loop gaits for legged robots, allowing them to traverse uneven terrain without sensing foot impacts. Our approach, D$^2$-Randomized Gait Modulation with Bezier Curves (D$^2$-GMBC), uses augmented random search with randomized dynamics and terrain to train, in simulation, a policy that modifies the parameters and output of an open-loop Bezier curve gait generator for quadrupedal robots. The policy, using only inertial measurements, enables the robot to traverse unknown rough terrain, even when the robot's physical parameters do not match the open-loop model. We compare the resulting policy to hand-tuned Bezier curve gaits and to policies trained without randomization, both in simulation and on a real quadrupedal robot. With D$^2$-GMBC, across a variety of experiments on unobserved and unknown uneven terrain, the robot walks significantly farther than with either hand-tuned gaits or gaits learned without domain randomization. Additionally, using D$^2$-GMBC, the robot can walk laterally and rotate while on the rough terrain, even though it was trained only for forward walking.
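The open-loop gait generator above is built on Bezier curves. The sketch below shows only that building block: evaluating a planar Bezier curve from its control points with de Casteljau's algorithm and sampling it as a foot trajectory. The control points in `SWING` (x forward, z up, in metres) and the helper names are hypothetical; the abstract does not give the paper's gait parameterization or how the learned policy modifies it.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def bezier_point(control: Sequence[Point], t: float) -> Point:
    """Evaluate a Bezier curve at t in [0, 1] with de Casteljau's algorithm."""
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    pts: List[Point] = list(control)
    if not pts:
        raise ValueError("need at least one control point")
    # Repeatedly interpolate between neighbouring points until one remains.
    while len(pts) > 1:
        pts = [((1 - t) * x0 + t * x1, (1 - t) * z0 + t * z1)
               for (x0, z0), (x1, z1) in zip(pts, pts[1:])]
    return pts[0]


# Hypothetical swing-phase control points; not taken from the paper.
SWING: List[Point] = [(-0.05, 0.0), (-0.07, 0.04), (0.0, 0.06), (0.07, 0.04), (0.05, 0.0)]


def foot_trajectory(control: Sequence[Point], samples: int = 11) -> List[Point]:
    """Sample the curve at evenly spaced t values, endpoints included."""
    if samples < 2:
        raise ValueError("samples must be at least 2")
    return [bezier_point(control, i / (samples - 1)) for i in range(samples)]
```

Because a Bezier curve starts and ends at its first and last control points and stays inside their convex hull, shifting interior points changes foot clearance smoothly without moving the touchdown locations, which is one reason such curves are convenient targets for a policy to modulate.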
