C. Lin Liu

Cooperative Payload Estimation by a Team of Mocobots

Feb 07, 2025

Abstract: Consider the following scenario: a human guides multiple mobile manipulators to grasp a common payload. For subsequent high-performance autonomous manipulation of the payload by the mobile manipulator team, or for collaborative manipulation with the human, the robots should be able to discover where the other robots are attached to the payload, as well as the payload's mass and inertial properties. In this paper, we describe a method for the robots to autonomously discover this information. The robots cooperatively manipulate the payload, and the twist, twist derivative, and wrench data at their grasp frames are used to estimate the transformation matrices between the grasp frames, the location of the payload's center of mass, and the payload's inertia matrix. The method is validated experimentally with a team of three mobile cobots, or mocobots.
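The abstract does not spell out the estimator, so the following is only a minimal sketch of one ingredient, under stated assumptions: it recovers the payload mass and center-of-mass location by linear least squares from the net applied force, the proper acceleration of one grasp-frame origin (acceleration minus gravity), the angular velocity, and the angular acceleration, all expressed in that grasp frame. The function names `skew` and `estimate_mass_and_com` are hypothetical, and the sketch leaves out the grasp-frame transformations and the inertia matrix that the paper also estimates.

```python
import numpy as np

def skew(v):
    """Return the 3x3 matrix S such that S @ u equals np.cross(v, u)."""
    x, y, z = v
    return np.array([[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]])

def estimate_mass_and_com(forces, proper_accels, omegas, alphas):
    """Least-squares estimate of mass m and center of mass c (assumed model).

    Rigid-body model, all vectors expressed in one grasp frame:
        f = m * (a + alpha x c + omega x (omega x c))
    which is linear in the unknowns theta = [m, m*c_x, m*c_y, m*c_z].
    """
    rows = []
    for a, w, al in zip(proper_accels, omegas, alphas):
        # Matrix multiplying (m * c) in the force equation.
        K = skew(al) + skew(w) @ skew(w)
        # One 3x4 regressor block per sample: [a | K].
        rows.append(np.hstack([np.reshape(a, (3, 1)), K]))
    A = np.vstack(rows)
    b = np.concatenate([np.asarray(f, dtype=float) for f in forces])
    theta, *_ = np.linalg.lstsq(A, b, rcond=None)
    mass = theta[0]
    return mass, theta[1:] / mass
```

The motion must be rich enough (varied accelerations and rotations) for the stacked regressor to have full column rank; otherwise the estimate is not unique.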

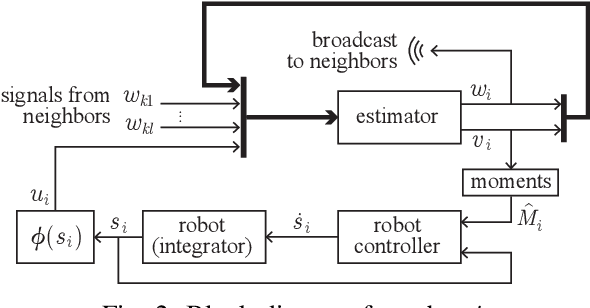

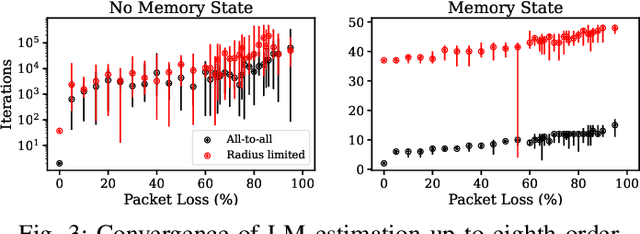

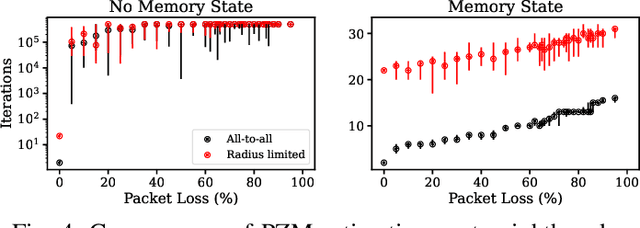

Self-Healing Distributed Swarm Formation Control Using Image Moments

Dec 12, 2023

Abstract: Human-swarm interaction is facilitated by a low-dimensional encoding of the swarm formation, independent of the (possibly large) number of robots. We propose using image moments to encode two-dimensional formations of robots. Each robot knows the desired formation moments, and simultaneously estimates the current moments of the entire swarm while controlling its motion to better achieve the desired group moments. The estimator is a distributed optimization, requiring no centralized processing, and self-healing, meaning that the process is robust to initialization errors, packet drops, and robots being added to or removed from the swarm. Our experimental results with a swarm of 50 robots, suffering nearly 50% packet loss, show that distributed estimation and control of image moments effectively achieves desired swarm formations.
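To illustrate why image moments give an encoding whose size does not depend on the number of robots, here is a minimal centralized sketch. It assumes the formation is summarized by the centroid and the second-order central moments of the robot positions, normalized by the robot count; the exact moment set and normalization used in the paper may differ, and the function name `formation_moments` is hypothetical. The paper's estimator is distributed, which this sketch does not attempt to reproduce.

```python
import numpy as np

def formation_moments(positions):
    """Encode a 2D formation as five numbers, for any number of robots.

    Returns [x_mean, y_mean, mu20, mu11, mu02], where mu_pq are
    second-order central moments averaged over the robots (an assumed
    normalization).
    """
    p = np.asarray(positions, dtype=float)  # shape (N, 2)
    centroid = p.mean(axis=0)
    d = p - centroid                        # offsets from the centroid
    mu20 = np.mean(d[:, 0] ** 2)            # spread along x
    mu11 = np.mean(d[:, 0] * d[:, 1])       # x-y correlation (orientation)
    mu02 = np.mean(d[:, 1] ** 2)            # spread along y
    return np.array([centroid[0], centroid[1], mu20, mu11, mu02])
```

Calling `formation_moments` on 4 positions or on 50 positions returns a vector of the same length, which is what makes the moments usable as a fixed-size target for a human operator to specify.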
