Maurice Rahme

Dynamics and Domain Randomized Gait Modulation with Bezier Curves for Sim-to-Real Legged Locomotion

Oct 22, 2020

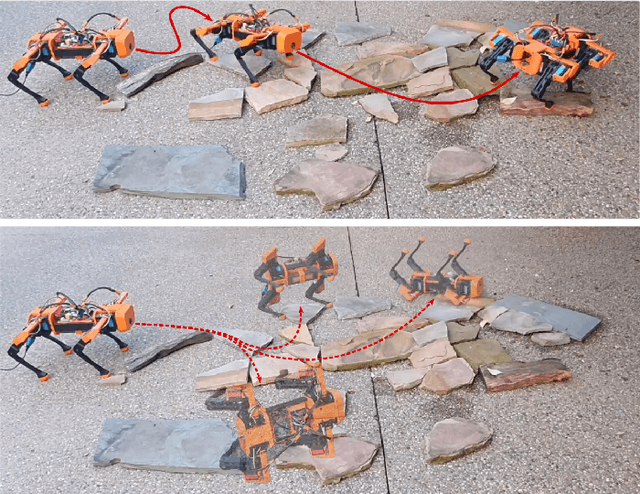

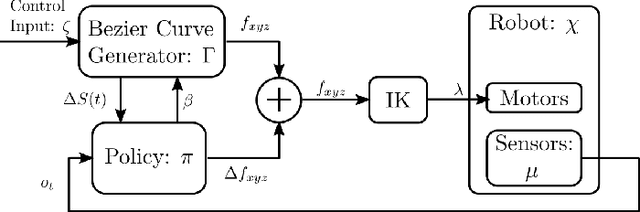

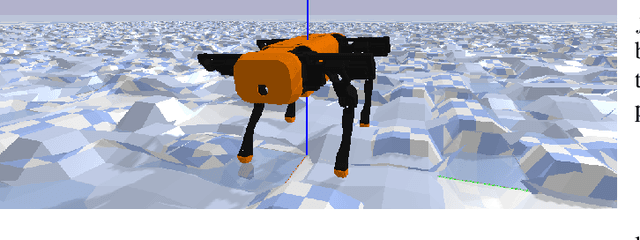

Abstract: We present a sim-to-real framework that uses dynamics and domain randomized offline reinforcement learning to enhance open-loop gaits for legged robots, allowing them to traverse uneven terrain without sensing foot impacts. Our approach, D$^2$-Randomized Gait Modulation with Bezier Curves (D$^2$-GMBC), uses augmented random search with randomized dynamics and terrain to train, in simulation, a policy that modifies the parameters and output of an open-loop Bezier curve gait generator for quadrupedal robots. The policy, using only inertial measurements, enables the robot to traverse unknown rough terrain, even when the robot's physical parameters do not match the open-loop model. We compare the resulting policy to hand-tuned Bezier curve gaits and to policies trained without randomization, both in simulation and on a real quadrupedal robot. With D$^2$-GMBC, across a variety of experiments on unobserved and unknown uneven terrain, the robot walks significantly farther than with either hand-tuned gaits or gaits learned without domain randomization. Additionally, using D$^2$-GMBC, the robot can walk laterally and rotate while on the rough terrain, even though it was trained only for forward walking.
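To make the idea of modulating an open-loop Bezier curve gait concrete, here is a minimal sketch, not the authors' implementation. It evaluates a Bezier curve with the Bernstein basis and adds a correction to the resulting foot target, loosely mirroring a policy that modifies the generator's output. The control points, the 2D (x, z) layout, and the names `bezier_point`, `modulated_foot_position`, and `residual` are all assumptions made for illustration; the abstract does not specify them.

```python
from math import comb


def bezier_point(control_points, t):
    """Evaluate a Bezier curve at t in [0, 1] using the Bernstein basis."""
    n = len(control_points) - 1          # curve degree
    dim = len(control_points[0])
    point = [0.0] * dim
    for i, cp in enumerate(control_points):
        # Bernstein weight of the i-th control point
        w = comb(n, i) * (t ** i) * ((1.0 - t) ** (n - i))
        for d in range(dim):
            point[d] += w * cp[d]
    return tuple(point)


def modulated_foot_position(control_points, t, residual=(0.0, 0.0)):
    """Open-loop foot target plus an additive correction.

    `residual` stands in for a learned policy output (hypothetical here).
    """
    x, z = bezier_point(control_points, t)
    return (x + residual[0], z + residual[1])


# Hypothetical swing-phase control points in metres (x forward, z up).
swing = [(-0.05, 0.0), (-0.05, 0.06), (0.05, 0.06), (0.05, 0.0)]

open_loop = bezier_point(swing, 0.5)
corrected = modulated_foot_position(swing, 0.5, residual=(0.0, 0.01))
```

With a zero residual, the foot follows the open-loop curve unchanged; a nonzero residual shifts the target, which is one simple way a trained policy could adapt the gait to terrain the generator knows nothing about.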
