Matthew Howard

Training People to Reward Robots

May 15, 2025

Abstract:Learning from demonstration (LfD) is a technique that allows expert teachers to teach task-oriented skills to robotic systems. However, the most effective way of guiding novice teachers to approach expert-level demonstrations quantitatively for specific teaching tasks remains an open question. To this end, this paper investigates the use of machine teaching (MT) to guide novice teachers to improve their teaching skills based on reinforcement learning from demonstration (RLfD). The paper reports an experiment in which novices receive MT-derived guidance to train their ability to teach a given motor skill with only 8 demonstrations and generalise this to previously unseen ones. Results indicate that the MT-guidance not only enhances robot learning performance by 89% on the training skill but also causes a 70% improvement in robot learning performance on skills not seen by subjects during training. These findings highlight the effectiveness of MT-guidance in upskilling human teaching behaviours, ultimately improving demonstration quality in RLfD.

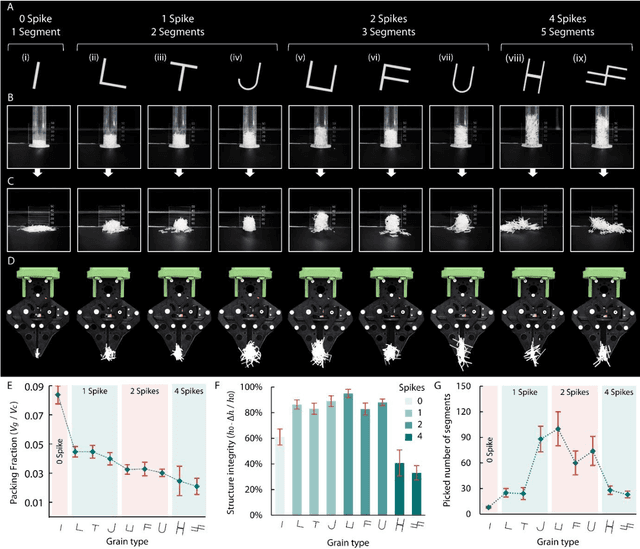

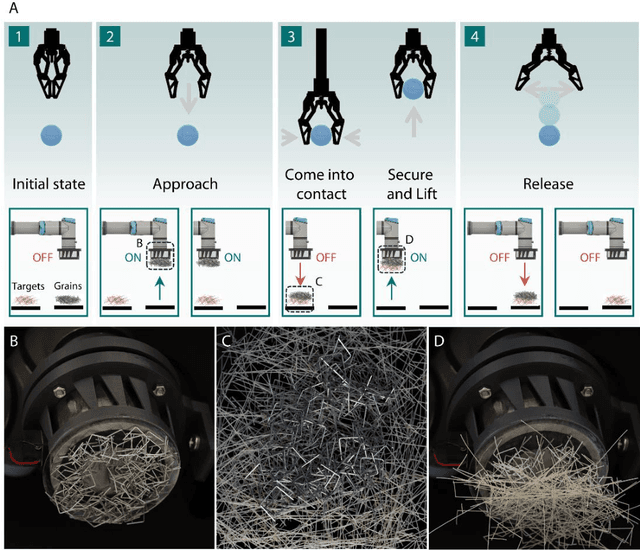

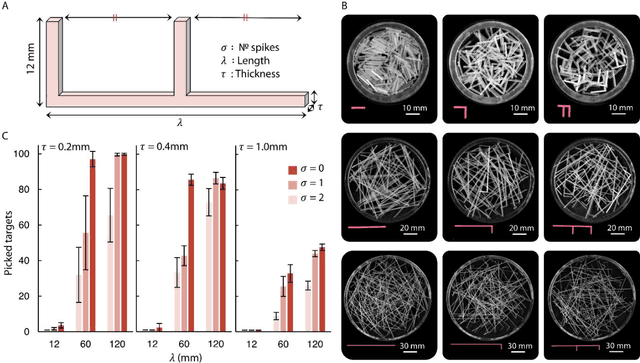

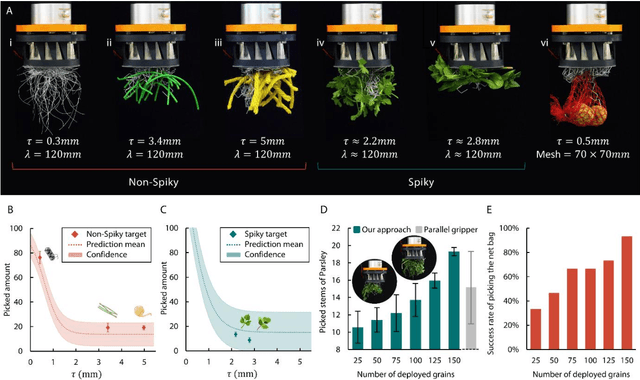

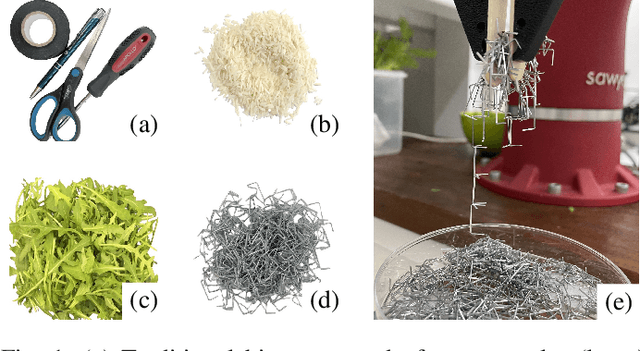

Complex picking via entanglement of granular mechanical metamaterials

Jul 25, 2024

Abstract:When objects are packed in a cluster, physical interactions are unavoidable. Such interactions emerge because of the objects' geometric features; some of these features promote entanglement, while others create repulsion. When entanglement occurs, the cluster exhibits a global, complex behaviour, which arises from the stochastic interactions between objects. We hereby refer to such a cluster as an entangled granular metamaterial. We investigate the geometrical features of the objects which make up the cluster, henceforth referred to as grains, that maximise entanglement. We hypothesise that a cluster composed of grains with a high propensity to tangle will also show a propensity to interact with a second cluster of tangled objects. To demonstrate this, we use the entangled granular metamaterials to perform complex robotic picking tasks, where conventional grippers struggle. We employ an electromagnet to attract the metamaterial (ferromagnetic) and drop it onto a second cluster of objects (targets, non-ferromagnetic). When the electromagnet is re-activated, the entanglement ensures that both the metamaterial and the targets are picked, with varying degrees of physical engagement that strongly depend on geometric features. Interestingly, although the metamaterial's structural arrangement is random, it creates repeatable and consistent interactions with a second tangled medium, enabling robust picking of the latter.

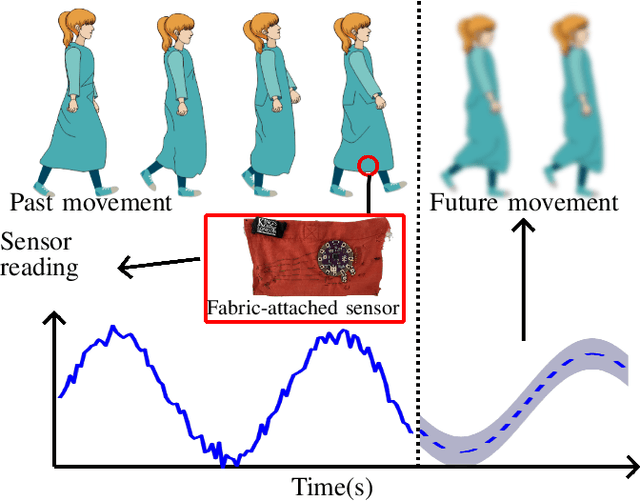

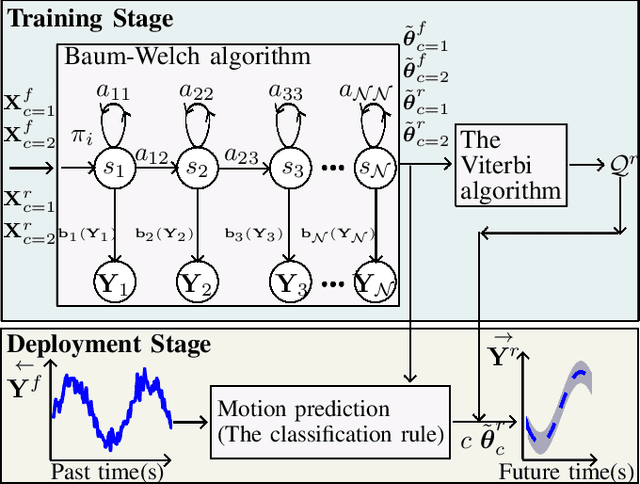

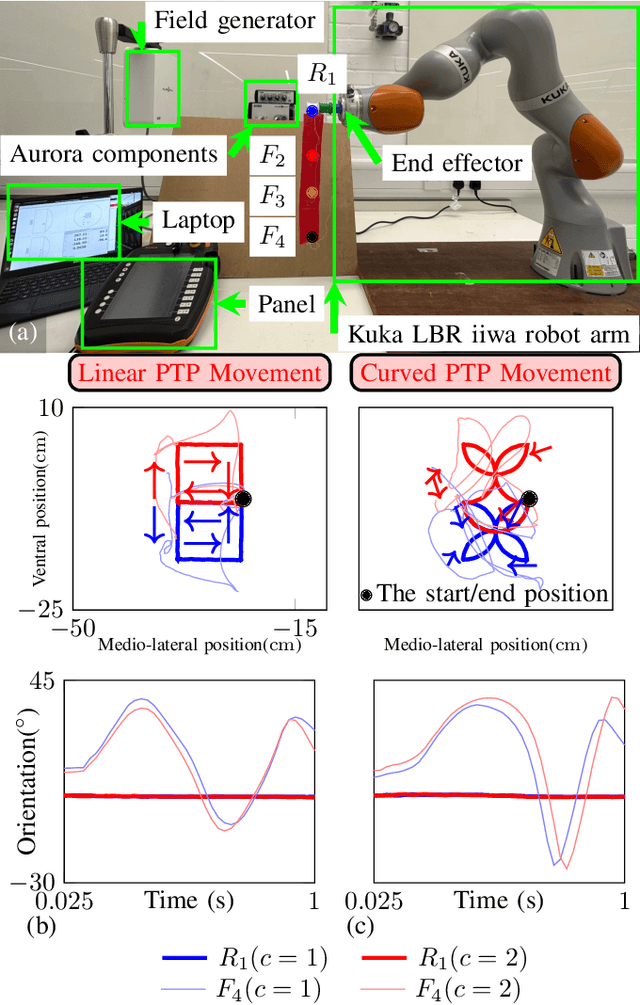

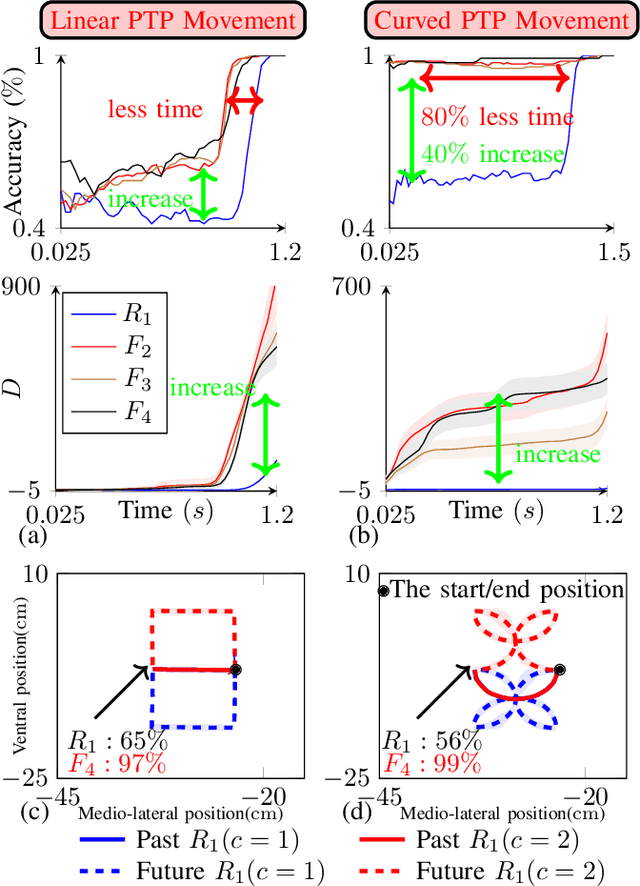

Trajectory Forecasting with Loose Clothing Using Left-to-Right Hidden Markov Model

Sep 17, 2023

Abstract:Trajectory forecasting has become an interesting research area driven by advancements in wearable sensing technology. Sensors can be seamlessly integrated into clothing using cutting-edge electronic textiles technology, allowing long-term recording of human movements outside the laboratory. Motivated by the recent findings that clothing-attached sensors can achieve higher activity recognition accuracy than body-attached sensors, this work investigates motion prediction and trajectory forecasting using rigid-attached and clothing-attached sensors. The future trajectory is forecasted from a probabilistic trajectory model formulated as a left-to-right hidden Markov model (LR-HMM), and motion prediction accuracy is computed by the classification rule. Surprisingly, the results show that clothing-attached sensors can forecast the future trajectory and perform better than body-attached sensors in terms of motion prediction accuracy. In some cases, the clothing-attached sensor can enhance accuracy by 45% compared to the body-attached sensor and requires approximately 80% less of the historical trajectory to achieve the same level of accuracy as the body-attached sensor.
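
As a rough illustration of the left-to-right structure named in this abstract, the sketch below builds a transition matrix in which each hidden state can only persist or advance to the next state. The function name `left_to_right_transitions`, the `stay_prob` parameter, and the choice of a strict one-step (no-skip) topology are assumptions made for illustration; the abstract does not specify the topology or parameters used in the paper.

```python
def left_to_right_transitions(n_states, stay_prob=0.6):
    """Build a left-to-right transition matrix as nested lists.

    Assumption: each state either stays put (stay_prob) or moves to the
    next state (1 - stay_prob); the final state is absorbing.
    """
    if n_states < 1:
        raise ValueError("n_states must be at least 1")
    if not 0.0 <= stay_prob <= 1.0:
        raise ValueError("stay_prob must lie in [0, 1]")

    A = [[0.0] * n_states for _ in range(n_states)]
    for i in range(n_states - 1):
        A[i][i] = stay_prob            # remain in the current state
        A[i][i + 1] = 1.0 - stay_prob  # advance one state to the right
    A[-1][-1] = 1.0                    # last state has nowhere to go
    return A


A = left_to_right_transitions(4, stay_prob=0.6)
for row in A:
    print(row)
```

Because every entry below the diagonal is zero, the hidden state can never move backwards, which is what makes this family of models a natural fit for movements that unfold in a fixed temporal order.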

Approximate, Adapt, Anonymize (3A): a Framework for Privacy Preserving Training Data Release for Machine Learning

Jul 04, 2023

Abstract:The availability of large amounts of informative data is crucial for successful machine learning. However, in domains with sensitive information, the release of high-utility data which protects the privacy of individuals has proven challenging. Despite progress in differential privacy and generative modeling for privacy-preserving data release in the literature, only a few approaches optimize for machine learning utility: most approaches only take into account statistical metrics on the data itself and fail to explicitly preserve the loss metrics of machine learning models that are to be subsequently trained on the generated data. In this paper, we introduce a data release framework, 3A (Approximate, Adapt, Anonymize), to maximize data utility for machine learning, while preserving differential privacy. We also describe a specific implementation of this framework that leverages mixture models to approximate, kernel-inducing points to adapt, and Gaussian differential privacy to anonymize a dataset, in order to ensure that the resulting data is both privacy-preserving and high utility. We present experimental evidence showing minimal discrepancy between performance metrics of models trained on real versus privatized datasets, when evaluated on held-out real data. We also compare our results with several privacy-preserving synthetic data generation models (such as differentially private generative adversarial networks), and report significant increases in classification performance metrics compared to state-of-the-art models. These favorable comparisons show that the presented framework is a promising direction of research, increasing the utility of low-risk synthetic data release for machine learning.

* 10 pages, 3 figures, AAAI Workshop

A Probabilistic Model of Activity Recognition with Loose Clothing

Sep 23, 2022

Abstract:Human activity recognition has become an attractive research area with the development of on-body wearable sensing technology. With comfortable electronic textiles, sensors can be embedded into clothing so that it is possible to record human movement outside the laboratory for long periods. However, a long-standing issue is how to deal with motion artefacts introduced by movement of clothing with respect to the body. Surprisingly, recent empirical findings suggest that cloth-attached sensors can actually achieve higher activity recognition accuracy than rigid-attached sensors, particularly when predicting from short time-windows. In this work, a probabilistic model is introduced in which this improved accuracy and responsiveness is explained by the increased statistical distance between movements recorded via fabric sensing. The predictions of the model are verified in simulated and real human motion capture experiments, where it is evident that this counterintuitive effect is closely captured.

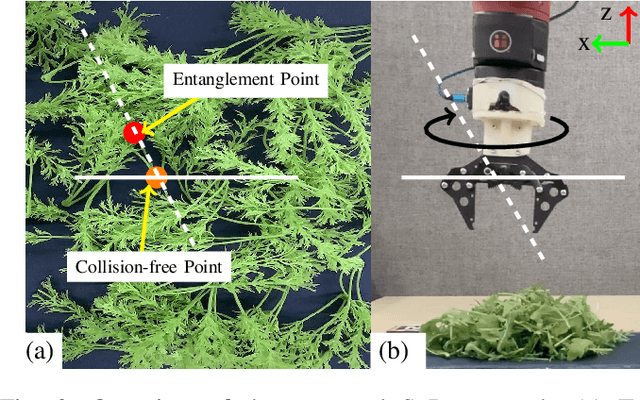

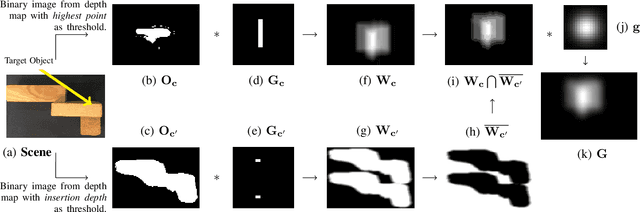

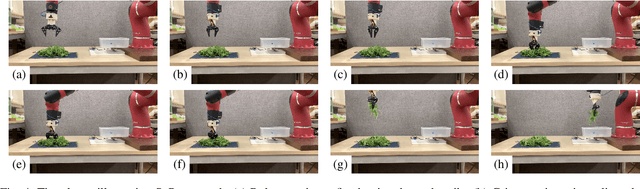

Robotic Untangling of Herbs and Salads with Parallel Grippers

Aug 09, 2022

Abstract:The picking of one or more objects from an unsorted pile continues to be non-trivial for robotic systems. This is especially so when the pile consists of a granular material (GM) containing individual items that tangle with one another, causing more to be picked out than desired. One of the key features of such tangle-prone GMs is the presence of protrusions extending out from the main body of items in the pile. This work characterises the role the latter play in causing mechanical entanglement and their impact on picking consistency. It reports experiments in which picking GMs with different protrusion lengths (PLs) results in up to 76% increase in picked mass variance, suggesting PL to be an informative feature in the design of picking strategies. Moreover, to counter this effect, it proposes a new spread-and-pick (SnP) approach that significantly reduces tangling, making picking more consistent. Compared to prior approaches that seek to pick from a tangle-free point in the pile, the proposed method results in a decrease in picking error (PE) of up to 51%, and shows good generalisation to previously unseen GMs.

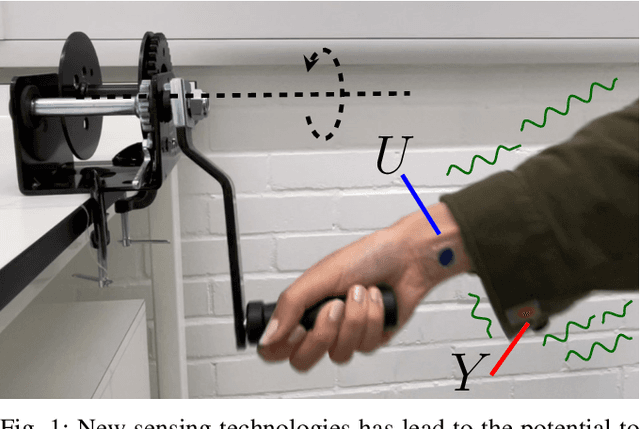

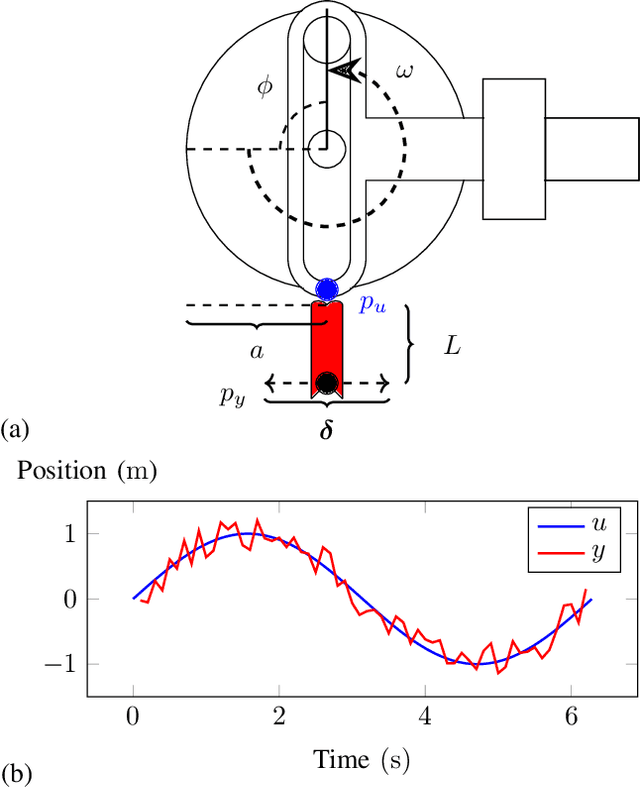

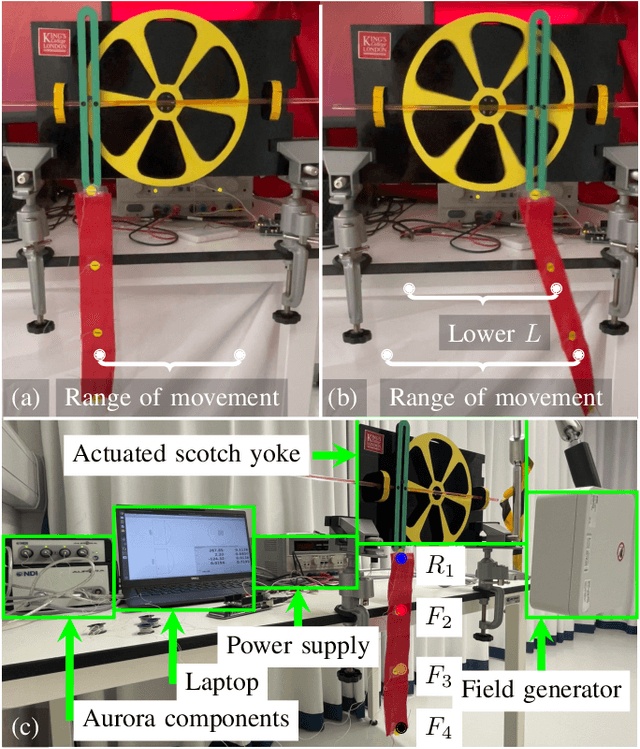

Training Humans to Train Robots Dynamic Motor Skills

May 13, 2021

Abstract:Learning from demonstration (LfD) is commonly considered to be a natural and intuitive way to allow novice users to teach motor skills to robots. However, it is important to acknowledge that the effectiveness of LfD is heavily dependent on the quality of teaching, something that may not be assured with novices. It remains an open question as to the most effective way of guiding demonstrators to produce informative demonstrations beyond ad hoc advice for specific teaching tasks. To this end, this paper investigates the use of machine teaching to derive an index for determining the quality of demonstrations and evaluates its use in guiding and training novices to become better teachers. Experiments with a simple learner robot suggest that guidance and training of teachers through the proposed approach can lead to up to 66.5% decrease in error in the learnt skill.

Exploiting Ergonomic Priors in Human-to-Robot Task Transfer

Mar 01, 2020

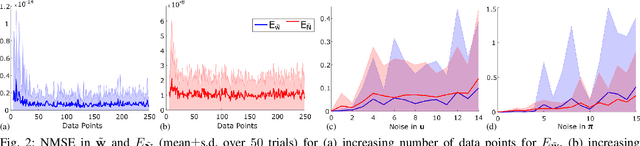

Abstract:In recent years, there has been a booming shift in the development of versatile, autonomous robots by introducing means to intuitively teach robots task-oriented behaviour by demonstration. In this paper, a method based on programming by demonstration is proposed to learn null space policies from constrained motion data. The main advantage of this approach is generalisation of a task by retargeting a system's redundancy, as well as the capability to fully replace an entire system with another of varying link number and lengths while still accurately repeating a task subject to the same constraints. The effectiveness of the method has been demonstrated in a 3-link simulation and a real world experiment using a human subject as the demonstrator and is verified through task reproduction on a 7DoF physical robot. In simulation, the method works accurately with as few as five data points, producing errors less than 10^-14. The approach is shown to outperform the current state-of-the-art approach in a simulated 3DoF robot manipulator control problem where motions are reproduced using learnt constraints. Retargeting of a system's null space component is also demonstrated in a task where controlling how redundancy is resolved allows for obstacle avoidance. Finally, the approach is verified in a real world experiment using demonstrations from a human subject where the learnt task space trajectory is transferred onto a 7DoF physical robot of a different embodiment.

Exploiting Variable Impedance for Energy Efficient Sequential Movements

Feb 27, 2020

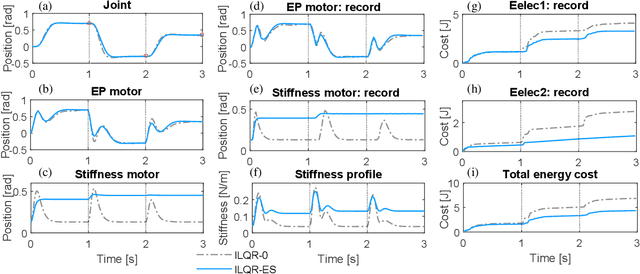

Abstract:Compliant robotics has seen successful applications in energy efficient locomotion and cyclic manipulation. However, full exploitation of variable physical impedance for energy efficient sequential movements has not been extensively addressed. This work employs a hierarchical approach to encapsulate low-level optimal control for sub-movement generation into an outer loop of iterative policy improvement, thereby leveraging the benefits of both optimal control and reinforcement learning. The framework enables optimizing the efficiency trade-off for minimal energy expenses in a model-free manner, by taking account of cost function weighting, variable impedance exploitation, and transition timing, which are associated with the skill of compliance. The effectiveness of the proposed method is evaluated using two consecutive reaching tasks on a variable impedance actuator. The results demonstrate significant energy saving by improving the skill of compliance, with a 30% electrical consumption reduction measured on hardware.

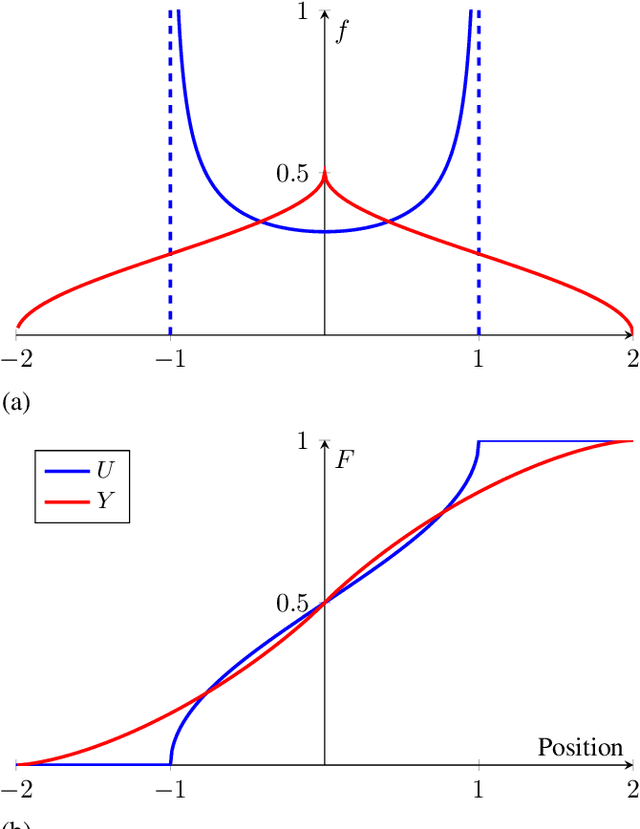

Learning Singularity Avoidance

Mar 25, 2019

Abstract:With the increase in complexity of robotic systems and the rise in non-expert users, it can be assumed that task constraints are not explicitly known. In tasks where avoiding singularity is critical to success, this paper provides an approach, especially for non-expert users, for the system to learn the constraints contained in a set of demonstrations, such that they can be used to optimise an autonomous controller to avoid singularity, without having to explicitly know the task constraints. The proposed approach avoids singularity, and thereby unpredictable behaviour when carrying out a task, by maximising the learnt manipulability throughout the motion of the constrained system, and is not limited to kinematic systems. Its benefits are demonstrated through comparisons with other control policies which show that the constrained manipulability of a system learnt through demonstration can be used to avoid singularities in cases where these other policies would fail. In the absence of the system's manipulability subject to a task's constraints, the proposed approach can be used instead to infer these with results showing errors less than 10^-5 in 3DOF simulated systems as well as 10^-2 using a 7DOF real world robotic system.
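
For readers unfamiliar with manipulability, the sketch below computes the standard Yoshikawa measure, sqrt(det(J Jᵀ)), for a planar two-link arm and shows that it drops to zero when the arm is fully stretched (a singular configuration). This is the textbook, unconstrained measure computed from a known Jacobian, not the learnt, constraint-aware quantity described in the abstract; the two-link arm, unit link lengths, and function names are all assumptions chosen for illustration.

```python
import math


def planar_2link_jacobian(q1, q2, l1=1.0, l2=1.0):
    """End-effector position Jacobian of a planar two-link arm (2 x 2)."""
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [
        [-l1 * s1 - l2 * s12, -l2 * s12],
        [l1 * c1 + l2 * c12, l2 * c12],
    ]


def manipulability(J):
    """Yoshikawa manipulability sqrt(det(J J^T)) for a 2 x n Jacobian."""
    a = sum(x * x for x in J[0])               # (J J^T)[0][0]
    d = sum(y * y for y in J[1])               # (J J^T)[1][1]
    b = sum(x * y for x, y in zip(J[0], J[1]))  # off-diagonal term
    det = a * d - b * b
    return math.sqrt(max(det, 0.0))            # clamp round-off below zero


# Elbow bent at 90 degrees versus arm fully stretched out.
w_bent = manipulability(planar_2link_jacobian(0.3, math.pi / 2))
w_straight = manipulability(planar_2link_jacobian(0.3, 0.0))
print(w_bent, w_straight)
```

For this arm the measure reduces analytically to l1 · l2 · |sin(q2)|, so a controller that keeps it large keeps the elbow away from the fully stretched or fully folded poses.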
