Matthew B. Luebbers

Proceedings of 1st Workshop on Advancing Artificial Intelligence through Theory of Mind

Apr 28, 2025

Abstract: This volume includes a selection of papers presented at the Workshop on Advancing Artificial Intelligence through Theory of Mind, held at AAAI 2025 in Philadelphia, US, on 3rd March 2025. The purpose of this volume is to provide an open-access, curated anthology for the ToM and AI research community.

Bi-Directional Mental Model Reconciliation for Human-Robot Interaction with Large Language Models

Mar 10, 2025

Abstract: In human-robot interactions, human and robot agents maintain internal mental models of their environment, their shared task, and each other. The accuracy of these representations depends on each agent's ability to perform theory of mind, i.e., to understand the knowledge, preferences, and intentions of their teammate. When mental models diverge to the extent that the divergence affects task execution, reconciliation becomes necessary to prevent the degradation of interaction. We propose a framework for bi-directional mental model reconciliation, leveraging large language models to facilitate alignment through semi-structured natural language dialogue. Our framework relaxes the assumption of prior model reconciliation work that either the human or robot agent begins with a correct model for the other agent to align to. Through our framework, both humans and robots are able to identify and communicate missing task-relevant context during interaction, iteratively progressing toward a shared mental model.

Workspace Optimization Techniques to Improve Prediction of Human Motion During Human-Robot Collaboration

Jan 23, 2024

Abstract: Understanding human intentions is critical for safe and effective human-robot collaboration. While state-of-the-art methods for human goal prediction utilize learned models to account for the uncertainty of human motion data, that data is inherently stochastic and high-variance, hindering those models' utility for interactions requiring coordination, including safety-critical or close-proximity tasks. Our key insight is that robot teammates can deliberately configure shared workspaces prior to interaction in order to reduce the variance in human motion, realizing classifier-agnostic improvements in goal prediction. In this work, we present an algorithmic approach for a robot to arrange physical objects and project "virtual obstacles" using augmented reality in shared human-robot workspaces, optimizing for human legibility over a given set of tasks. We compare our approach against other workspace arrangement strategies using two human-subjects studies, one in a virtual 2D navigation domain and the other in a live tabletop manipulation domain involving a robotic manipulator arm. We evaluate the accuracy of human motion prediction models learned from each condition, demonstrating that our workspace optimization technique with virtual obstacles leads to higher robot prediction accuracy using less training data.

Improving Human Legibility in Collaborative Robot Tasks through Augmented Reality and Workspace Preparation

Nov 09, 2023

Abstract: Understanding the intentions of human teammates is critical for safe and effective human-robot interaction. The canonical approach for human-aware robot motion planning is to first predict the human's goal or path, and then construct a robot plan that avoids collision with the human. This method can generate unsafe interactions if the human model and subsequent predictions are inaccurate. In this work, we present an algorithmic approach for both arranging the configuration of objects in a shared human-robot workspace, and projecting "virtual obstacles" in augmented reality, optimizing for legibility in a given task. These changes to the workspace result in more legible human behavior, improving robot predictions of human goals, thereby improving task fluency and safety. To evaluate our approach, we propose two user studies involving a collaborative tabletop task with a manipulator robot, and a warehouse navigation task with a mobile robot.

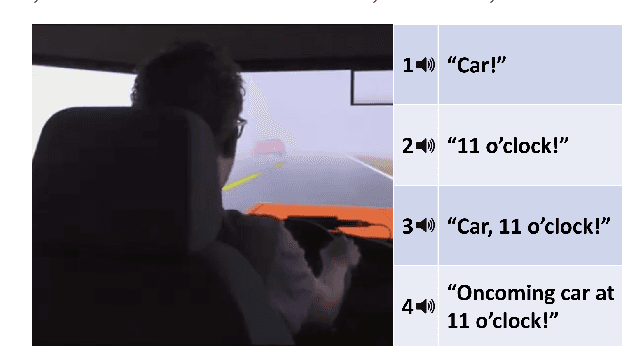

Automated Failure-Mode Clustering and Labeling for Informed Car-To-Driver Handover in Autonomous Vehicles

May 09, 2020

Abstract: The car-to-driver handover is a critically important component of safe autonomous vehicle operation when the vehicle is unable to safely proceed on its own. Current implementations of this handover in automobiles take the form of a generic alarm indicating an imminent transfer of control back to the human driver. However, certain levels of vehicle autonomy may allow the driver to engage in other, non-driving-related tasks prior to a handover, leading to substantial difficulty in quickly regaining situational awareness. This delay in re-orientation could potentially lead to life-threatening failures unless mitigating steps are taken. Explainable AI has been shown to improve fluency and teamwork in human-robot collaboration scenarios. Therefore, we hypothesize that by utilizing autonomous explanation, these car-to-driver handovers can be performed more safely and reliably. The rationale is that, given additional situational knowledge, the driver will more rapidly focus on the relevant parts of the driving environment. To this end, we propose an algorithmic failure-mode identification and explanation approach to enable informed handovers from vehicle to driver. Furthermore, we propose a set of human-subjects driving-simulator studies to determine the appropriate form of explanation during handovers, as well as to validate our framework.
