Massimo Mischi

Active Inference for Closed-loop transmit beamsteering in Fetal Doppler Ultrasound

Oct 07, 2024

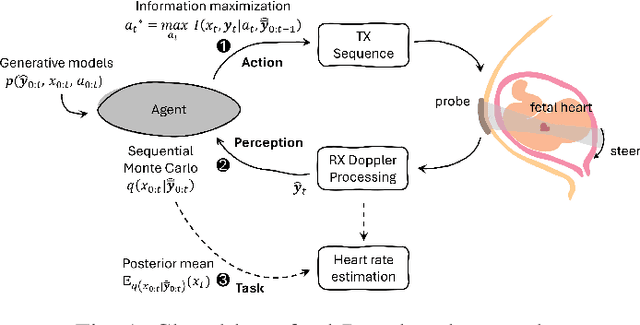

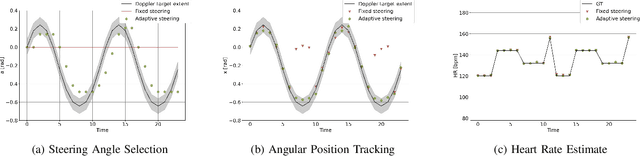

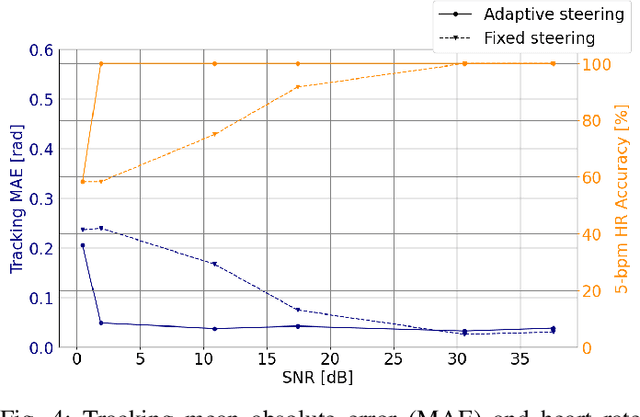

Abstract: Doppler ultrasound is widely used to monitor fetal heart rate during labor and pregnancy. Unfortunately, it is highly sensitive to fetal and maternal movements, which can cause the displacement of the fetal heart with respect to the ultrasound beam, in turn reducing the Doppler signal-to-noise ratio and leading to erratic, noisy, or missing heart rate readings. To tackle this issue, we augment the conventional Doppler ultrasound system with a rational agent that autonomously steers the ultrasound beam to track the position of the fetal heart. The proposed cognitive ultrasound system leverages a sequential Monte Carlo method to infer the fetal heart position from the power Doppler signal, and employs a greedy information-seeking criterion to select the steering angle that minimizes the positional uncertainty for future timesteps. The fetal heart rate is then calculated using the Doppler signal at the estimated fetal heart position. Our results show that the system can accurately track the fetal heart position across challenging signal-to-noise ratio scenarios, mainly thanks to its dynamic transmit beam steering capability. Additionally, we find that optimizing the transmit beamsteering to minimize positional uncertainty also optimizes downstream heart rate estimation performance. In conclusion, this work showcases the power of closed-loop cognitive ultrasound in boosting the capabilities of traditional systems.
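
The closed loop described in this abstract (infer position with a sequential Monte Carlo method, then greedily pick the next steering angle) can be illustrated with a toy one-dimensional sketch. Everything below is an assumption made for illustration and is not taken from the paper: the Gaussian beam profile, the noise level, the random-walk motion model, the candidate angle grid, and the use of expected posterior variance as a simple stand-in for the information-seeking criterion.

```python
import math
import random

def beam_power(steer, pos, width=0.3):
    """Hypothetical Gaussian beam profile: expected Doppler power when the
    beam points at angle `steer` and the heart sits at angle `pos`."""
    return math.exp(-0.5 * ((steer - pos) / width) ** 2)

def likelihood(obs, steer, pos, noise=0.1):
    """Unnormalized Gaussian likelihood of an observed power value."""
    return math.exp(-0.5 * ((obs - beam_power(steer, pos)) / noise) ** 2)

def reweight(particles, weights, obs, steer):
    """Bayes update of the particle weights; returns normalized weights."""
    new = [w * likelihood(obs, steer, p) for p, w in zip(particles, weights)]
    total = sum(new)
    if total == 0.0:  # every particle is inconsistent: fall back to uniform
        return [1.0 / len(new)] * len(new)
    return [w / total for w in new]

def variance(particles, weights):
    """Weighted variance of the particle cloud (the uncertainty proxy)."""
    mean = sum(w * p for p, w in zip(particles, weights))
    return sum(w * (p - mean) ** 2 for p, w in zip(particles, weights))

def choose_steer(particles, weights, candidates, rng, n_sim=8):
    """Greedy one-step lookahead: pick the candidate angle with the lowest
    expected posterior variance (assumed proxy for information gain)."""
    truths = rng.choices(particles, weights=weights, k=n_sim)

    def expected_var(steer):
        total = 0.0
        for t in truths:
            obs = beam_power(steer, t)  # noise-free simulated observation
            total += variance(particles, reweight(particles, weights, obs, steer))
        return total / n_sim

    return min(candidates, key=expected_var)

def track(true_pos, n_particles=200, n_steps=15, seed=0):
    """Run the steer / observe / update loop against a static target."""
    rng = random.Random(seed)
    particles = [rng.uniform(-1.0, 1.0) for _ in range(n_particles)]
    weights = [1.0 / n_particles] * n_particles
    candidates = [i / 5.0 - 1.0 for i in range(11)]  # grid from -1.0 to 1.0
    for _ in range(n_steps):
        steer = choose_steer(particles, weights, candidates, rng)
        obs = beam_power(steer, true_pos) + rng.gauss(0.0, 0.1)
        weights = reweight(particles, weights, obs, steer)
        # Resample, then jitter each particle (random-walk motion model).
        resampled = rng.choices(particles, weights=weights, k=n_particles)
        particles = [p + rng.gauss(0.0, 0.02) for p in resampled]
        weights = [1.0 / n_particles] * n_particles
    return sum(particles) / n_particles, particles

estimate, cloud = track(true_pos=0.2)
print(f"estimated heart angle: {estimate:.3f}")
```

Because a symmetric beam profile cannot tell whether the target lies left or right of the beam from a single measurement, varying the steering angle over time is what lets the particle cloud resolve the position in this toy setting.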

Ultrasound Signal Processing: From Models to Deep Learning

Apr 09, 2022

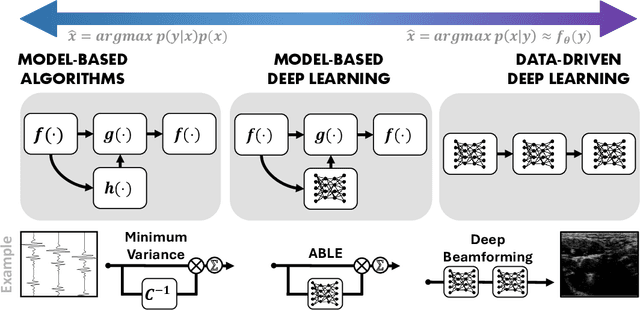

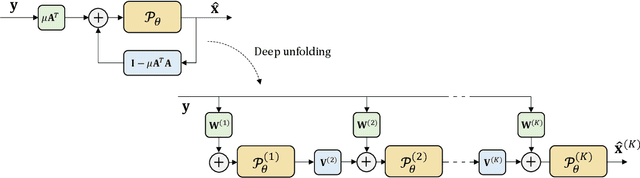

Abstract: Medical ultrasound imaging relies heavily on high-quality signal processing algorithms to provide reliable and interpretable image reconstructions. Hand-crafted reconstruction methods, often based on approximations of the underlying measurement model, are useful in practice, but notoriously fall behind in terms of image quality. More sophisticated solutions, based on statistical modelling, careful parameter tuning, or increased model complexity, can be sensitive to different environments. Recently, deep-learning-based methods, which are optimized in a data-driven fashion, have gained popularity. These model-agnostic methods often rely on generic model structures, and require vast training data to converge to a robust solution. A relatively new paradigm combines the power of the two: leveraging data-driven deep learning, as well as exploiting domain knowledge. These model-based solutions yield high robustness, and require fewer trainable parameters and less training data than conventional neural networks. In this work we provide an overview of these methods from the recent literature, and discuss a wide variety of ultrasound applications. We aim to inspire the reader to further research in this area, and to address the opportunities within the field of ultrasound signal processing. We conclude with a future perspective on these model-based deep learning techniques for medical ultrasound applications.

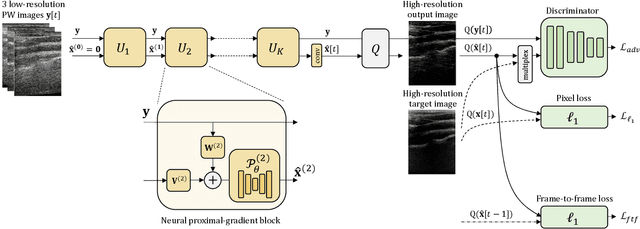

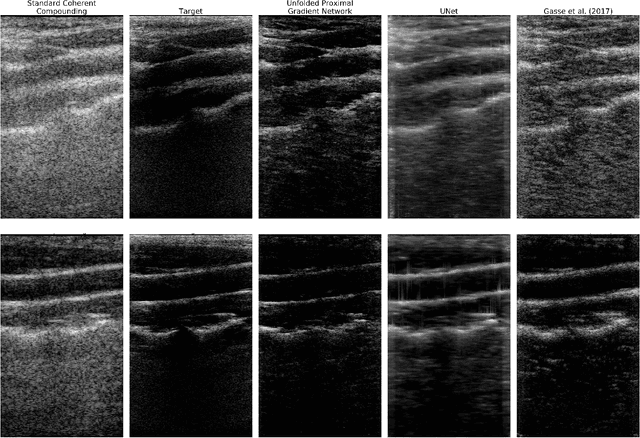

Deep Proximal Learning for High-Resolution Plane Wave Compounding

Dec 23, 2021

Abstract: Plane wave imaging enables many applications that require high frame rates, including localisation microscopy, shear wave elastography, and ultra-sensitive Doppler. To alleviate the degradation of image quality with respect to conventional focused acquisition, typically, multiple acquisitions from distinctly steered plane waves are coherently (i.e. after time-of-flight correction) compounded into a single image. This poses a trade-off between image quality and achievable frame rate. To that end, we propose a new deep learning approach, derived by formulating plane wave compounding as a linear inverse problem, that attains high-resolution, high-contrast images from just 3 plane wave transmissions. Our solution unfolds the iterations of a proximal gradient descent algorithm as a deep network, thereby directly incorporating the physics-based generative acquisition model into the neural network design. We train our network in a greedy manner, i.e. layer-by-layer, using a combination of pixel, temporal, and distribution (adversarial) losses to achieve both perceptual fidelity and data consistency. Through the strong model-based inductive bias, the proposed architecture outperforms several standard benchmark architectures in terms of image quality, with a low computational and memory footprint.
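
For readers unfamiliar with the proximal gradient descent iteration that this abstract says is unfolded into network layers, the following minimal sketch shows the classical, non-learned version on a tiny linear inverse problem y = A x. The l1 sparsity prior, the soft-threshold proximal operator, the conservative step size, and all function names are assumptions chosen for illustration. The paper's network replaces such fixed components with learned ones, which this sketch does not attempt.

```python
import math

def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1, applied element-wise."""
    return [math.copysign(max(abs(x) - tau, 0.0), x) for x in v]

def matvec(A, x):
    """Compute A x for a matrix stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def rmatvec(A, r):
    """Compute A^T r for a matrix stored as a list of rows."""
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(A[0]))]

def objective(A, y, x, lam):
    """0.5 * ||A x - y||^2 + lam * ||x||_1"""
    residual = [p - q for p, q in zip(matvec(A, x), y)]
    return 0.5 * sum(e * e for e in residual) + lam * sum(abs(v) for v in x)

def ista(A, y, lam=0.05, n_iters=100):
    """Proximal gradient descent (ISTA) on the objective above.

    Each pass of the loop is one 'iteration'; an unfolded network would
    treat a fixed number of such passes as its layers.
    """
    # The squared Frobenius norm upper-bounds the Lipschitz constant of the
    # gradient, so this step size is safe (if conservative).
    step = 1.0 / sum(a * a for row in A for a in row)
    x = [0.0] * len(A[0])
    for _ in range(n_iters):
        residual = [p - q for p, q in zip(matvec(A, x), y)]
        grad = rmatvec(A, residual)  # gradient of the data-fidelity term
        x = soft_threshold([xi - step * g for xi, g in zip(x, grad)], step * lam)
    return x

# Toy problem: two measurements of a three-element sparse signal.
A = [[1.0, 0.5, 0.0],
     [0.0, 1.0, 0.5]]
y = matvec(A, [0.0, 2.0, 0.0])
x_hat = ista(A, y)
print([round(v, 3) for v in x_hat])
```

The gradient step enforces consistency with the measurement model, and the proximal step applies the prior. Keeping the first and learning the second is one common way such model-based networks are built.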

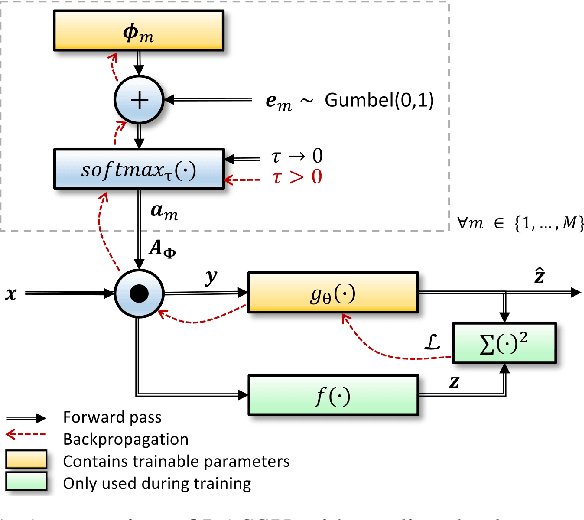

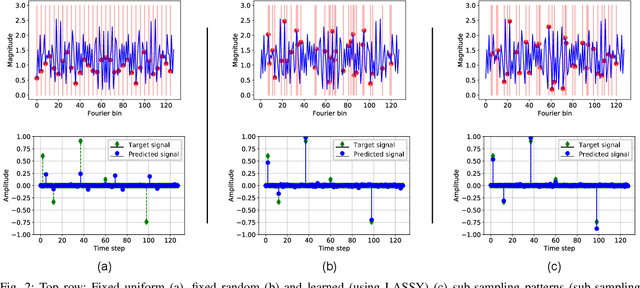

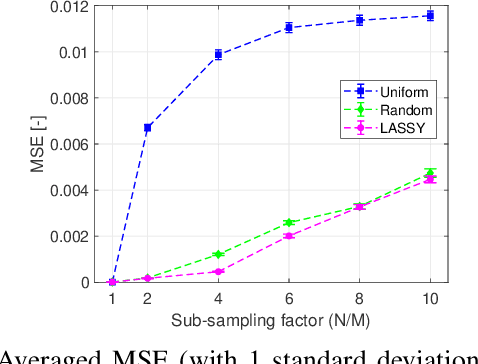

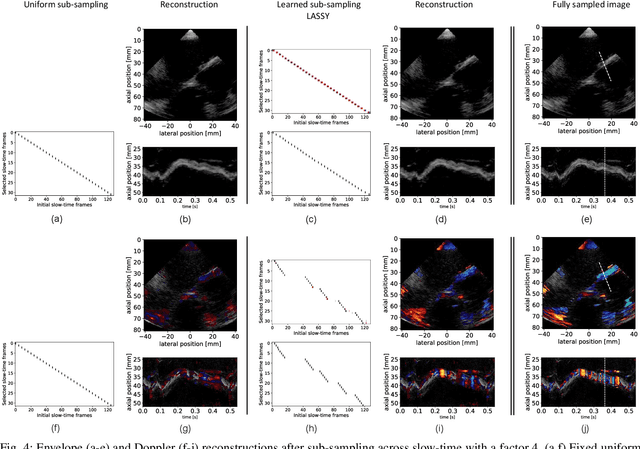

Learning Sub-Sampling and Signal Recovery with Applications in Ultrasound Imaging

Aug 15, 2019

Abstract: Limitations on bandwidth and power consumption impose strict bounds on data rates of diagnostic imaging systems. Consequently, the design of suitable (i.e. task- and data-aware) compression and reconstruction techniques has attracted considerable attention in recent years. Compressed sensing emerged as a popular framework for sparse signal reconstruction from a small set of compressed measurements. However, typical compressed sensing designs measure a (non)linearly weighted combination of all input signal elements, which poses practical challenges. These designs are also not necessarily task-optimal. In addition, real-time recovery is hampered by the iterative and time-consuming nature of sparse recovery algorithms. Recently, deep learning methods have shown promise for fast recovery from compressed measurements, but the design of adequate and practical sensing strategies remains a challenge. Here, we propose a deep learning solution, termed LASSY (LeArning Sub-Sampling and recoverY), that jointly learns a task-driven sub-sampling pattern and subsequent reconstruction model. The learned sub-sampling patterns are straightforwardly implementable, and based on the task at hand. LASSY's effectiveness is demonstrated in-silico for sparse signal recovery from partial Fourier measurements, and in-vivo for both anatomical-image and motion (Doppler) reconstruction from sub-sampled medical ultrasound imaging data.

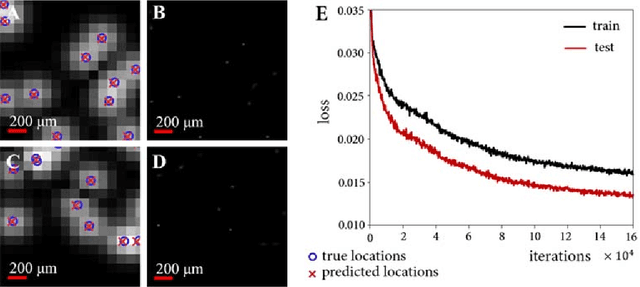

Super-resolution Ultrasound Localization Microscopy through Deep Learning

Apr 20, 2018

Abstract: Ultrasound localization microscopy has enabled super-resolution vascular imaging in laboratory environments through precise localization of individual ultrasound contrast agents across numerous imaging frames. However, analysis of high-density regions with significant overlaps among the agents' point spread responses yields high localization errors, constraining the technique to low-concentration conditions. As such, long acquisition times are required to sufficiently cover the vascular bed. In this work, we present a fast and precise method for obtaining super-resolution vascular images from high-density contrast-enhanced ultrasound imaging data. This method, which we term Deep Ultrasound Localization Microscopy (Deep-ULM), exploits modern deep learning strategies and employs a convolutional neural network to perform localization microscopy in dense scenarios. This end-to-end fully convolutional neural network architecture is trained effectively using on-line synthesized data, enabling robust inference in-vivo under a wide variety of imaging conditions. We show that deep learning attains super-resolution with challenging contrast-agent concentrations (microbubble densities), both in-silico and in-vivo, as we go from ultrasound scans of a rodent spinal cord in an experimental setting to standard clinically-acquired recordings in a human prostate. Deep-ULM achieves high-quality sub-diffraction recovery, and is suitable for real-time applications, resolving about 135 high-resolution 64x64 patches per second on a standard PC. Exploiting GPU computation, this number increases to 2500 patches per second.
