Ruud JG van Sloun

Active inference and deep generative modeling for cognitive ultrasound

Oct 17, 2024

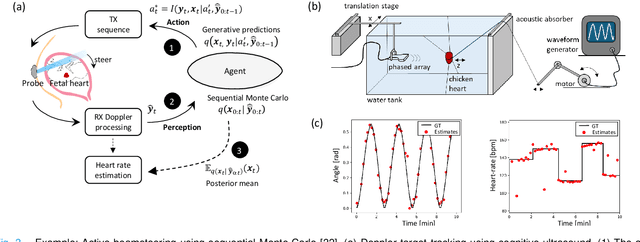

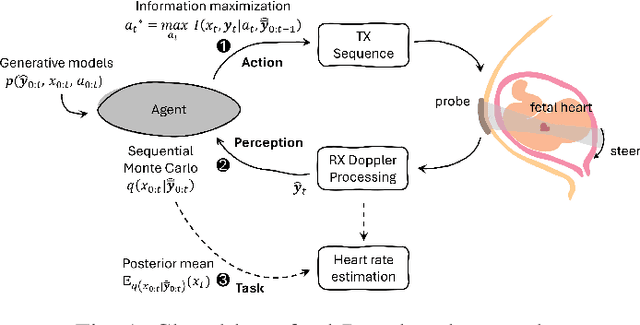

Abstract: Ultrasound (US) has the unique potential to offer access to medical imaging to anyone, everywhere. Devices have become ultra-portable and cost-effective, akin to the stethoscope. Nevertheless, US image quality and diagnostic efficacy are still highly operator- and patient-dependent. In difficult-to-image patients, image quality is often insufficient for reliable diagnosis. In this paper, we put forth that US imaging systems can be recast as information-seeking agents that engage in reciprocal interactions with their anatomical environment. Such agents autonomously adapt their transmit-receive sequences to fully personalize imaging and actively maximize information gain in-situ. To that end, we will show that the sequence of pulse-echo experiments that a US system performs can be interpreted as a perception-action loop: the action is the data acquisition, probing tissue with acoustic waves and recording reflections at the detection array, and perception is the inference of the anatomical and/or functional state, potentially including associated diagnostic quantities. We then equip systems with a mechanism to actively reduce uncertainty and maximize diagnostic value across a sequence of experiments, treating action and perception jointly using Bayesian inference given generative models of the environment and action-conditional pulse-echo observations. Since the representation capacity of the generative models dictates both the quality of inferred anatomical states and the effectiveness of inferred sequences of future imaging actions, we draw heavily on the recent advances in deep generative modelling that are currently disrupting many fields and society at large. Finally, we show some examples of cognitive, closed-loop US systems that perform active beamsteering and adaptive scanline selection, based on deep generative models that track anatomical belief states.
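
The perception-action loop described in this abstract can be written down in a generic form. The notation below is an assumption made for illustration and is not taken from the paper: $x_t$ is the anatomical state, $a_t$ the imaging action (for example a transmit-receive setting), $y_t$ the resulting pulse-echo observation, and $h_{t-1} = (a_{1:t-1}, y_{1:t-1})$ the history. It also assumes that $y_t$ depends on the history only through $x_t$ and $a_t$.

$$
\begin{aligned}
\text{perception:}\quad & p(x_t \mid h_t) \;\propto\; p(y_t \mid x_t, a_t)\, p(x_t \mid h_{t-1}) \\
\text{action:}\quad & a_t^{\ast} = \arg\max_{a}\; I(x_t; y_t \mid a, h_{t-1}) \\
& \phantom{a_t^{\ast}} = \arg\max_{a}\; \Big( H\big[p(y_t \mid a, h_{t-1})\big] - \mathbb{E}_{p(x_t \mid h_{t-1})}\, H\big[p(y_t \mid x_t, a)\big] \Big)
\end{aligned}
$$

The first line is a standard Bayesian belief update, and the second is the usual decomposition of mutual information into marginal entropy minus expected conditional entropy. Whether the paper uses exactly this objective is not stated in the abstract.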

Active Inference for Closed-loop transmit beamsteering in Fetal Doppler Ultrasound

Oct 07, 2024

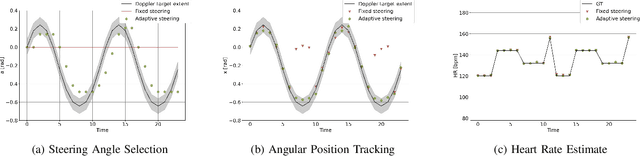

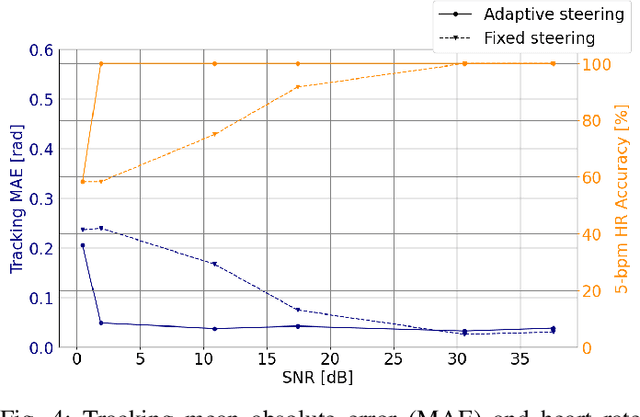

Abstract: Doppler ultrasound is widely used to monitor fetal heart rate during labor and pregnancy. Unfortunately, it is highly sensitive to fetal and maternal movements, which can displace the fetal heart with respect to the ultrasound beam, in turn reducing the Doppler signal-to-noise ratio and leading to erratic, noisy, or missing heart rate readings. To tackle this issue, we augment the conventional Doppler ultrasound system with a rational agent that autonomously steers the ultrasound beam to track the position of the fetal heart. The proposed cognitive ultrasound system leverages a sequential Monte Carlo method to infer the fetal heart position from the power Doppler signal, and employs a greedy information-seeking criterion to select the steering angle that minimizes the positional uncertainty for future timesteps. The fetal heart rate is then calculated using the Doppler signal at the estimated fetal heart position. Our results show that the system can accurately track the fetal heart position across challenging signal-to-noise ratio scenarios, mainly thanks to its dynamic transmit beam steering capability. Additionally, we find that optimizing the transmit beamsteering to minimize positional uncertainty also optimizes downstream heart rate estimation performance. In conclusion, this work showcases the power of closed-loop cognitive ultrasound in boosting the capabilities of traditional systems.
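
The combination of sequential Monte Carlo tracking and greedy steering described above can be illustrated with a toy sketch. This is not the paper's implementation. Everything specific here is an assumption made for illustration: a one-dimensional angular heart position, a Gaussian beam profile for the expected power Doppler signal, random-walk motion, Gaussian measurement noise, expected posterior variance as the uncertainty measure, and all constants and function names.

```python
import math
import random

# All constants below are illustrative assumptions, not values from the paper.
BEAM_WIDTH = 0.15  # width of the assumed Gaussian beam profile (rad)
NOISE_STD = 0.1    # std of the assumed additive measurement noise
DRIFT_STD = 0.02   # std of the assumed random-walk motion per timestep


def beam_response(position, angle):
    """Expected power Doppler signal when steering at `angle` (assumed model)."""
    return math.exp(-0.5 * ((position - angle) / BEAM_WIDTH) ** 2)


def likelihood(observation, position, angle):
    """Unnormalized Gaussian likelihood of an observation given a position."""
    residual = observation - beam_response(position, angle)
    return math.exp(-0.5 * (residual / NOISE_STD) ** 2)


def weighted_variance(particles, weights):
    """Variance of the particle set under the given (unnormalized) weights."""
    total = sum(weights)
    if total <= 0.0:  # degenerate weights: fall back to uniform
        weights, total = [1.0] * len(particles), float(len(particles))
    mean = sum(w * p for w, p in zip(weights, particles)) / total
    return sum(w * (p - mean) ** 2 for w, p in zip(weights, particles)) / total


def expected_posterior_variance(particles, angle, rng, n_sim=8):
    """Monte Carlo estimate of the posterior variance after steering at `angle`."""
    total = 0.0
    for _ in range(n_sim):
        # Draw a plausible heart position from the belief and simulate a reading.
        hypothetical = rng.choice(particles)
        obs = beam_response(hypothetical, angle) + rng.gauss(0.0, NOISE_STD)
        weights = [likelihood(obs, p, angle) for p in particles]
        total += weighted_variance(particles, weights)
    return total / n_sim


def choose_angle(particles, candidates, rng):
    """Greedy one-step choice: the angle with the lowest expected uncertainty."""
    return min(candidates,
               key=lambda a: expected_posterior_variance(particles, a, rng))


def filter_step(particles, angle, observation, rng):
    """Bootstrap particle filter update: predict, weight, resample."""
    moved = [p + rng.gauss(0.0, DRIFT_STD) for p in particles]
    weights = [likelihood(observation, p, angle) for p in moved]
    if sum(weights) <= 0.0:
        weights = [1.0] * len(moved)
    return rng.choices(moved, weights=weights, k=len(moved))


def simulate(n_steps=40, n_particles=300, seed=0):
    """Run the closed loop on synthetic data; returns (true, estimated) position."""
    rng = random.Random(seed)
    candidates = [-0.6 + 0.1 * i for i in range(13)]
    particles = [rng.uniform(-0.6, 0.6) for _ in range(n_particles)]
    heart = 0.25
    for _ in range(n_steps):
        heart += rng.gauss(0.0, DRIFT_STD)
        angle = choose_angle(particles, candidates, rng)
        obs = beam_response(heart, angle) + rng.gauss(0.0, NOISE_STD)
        particles = filter_step(particles, angle, obs, rng)
    return heart, sum(particles) / len(particles)
```

Calling `simulate()` returns the synthetic true position and the particle-mean estimate after the final step. The variance criterion is only one possible stand-in for "positional uncertainty"; an entropy-based measure would fit the same loop.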

Deep Learning for Ultrasound Beamforming

Sep 23, 2021

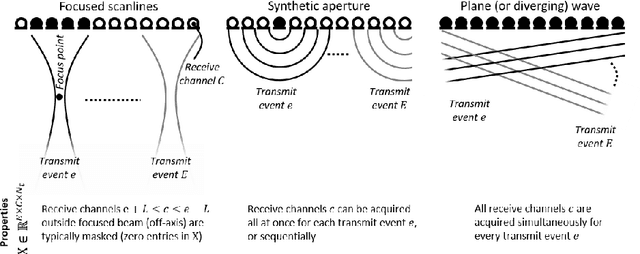

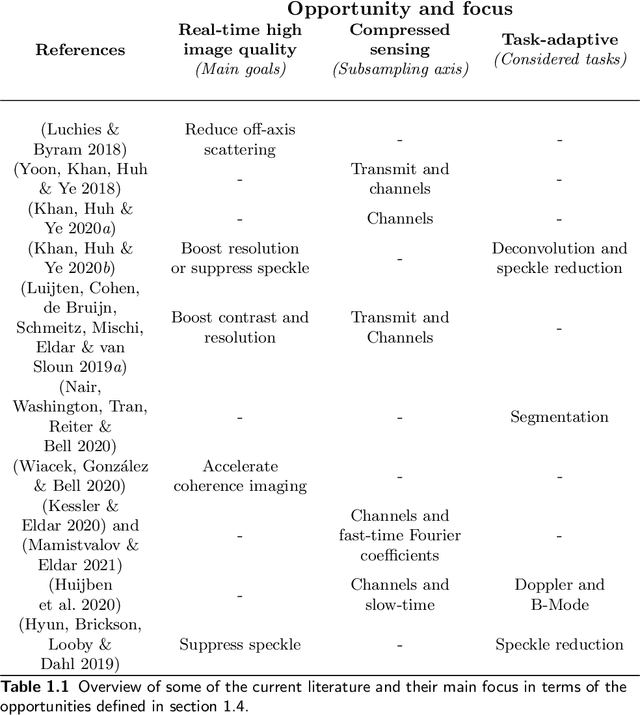

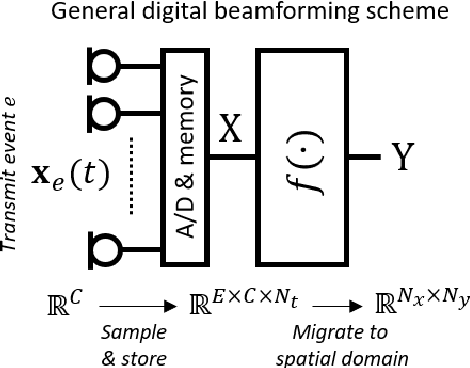

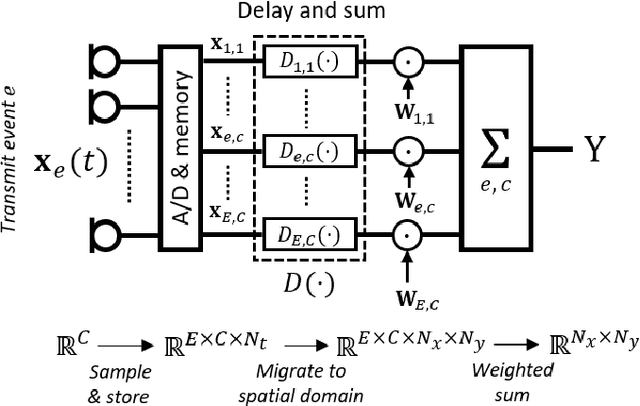

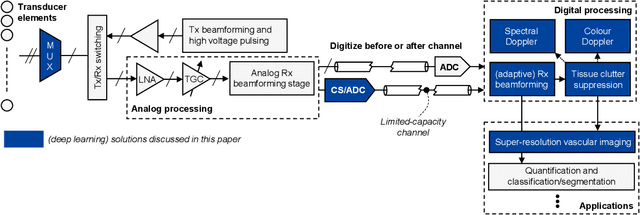

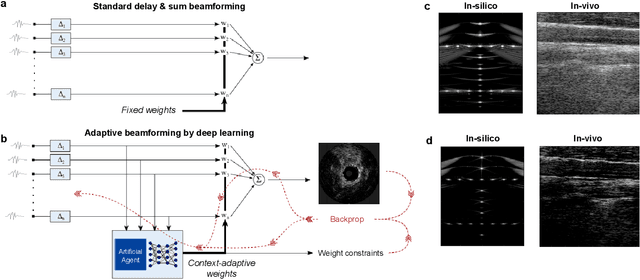

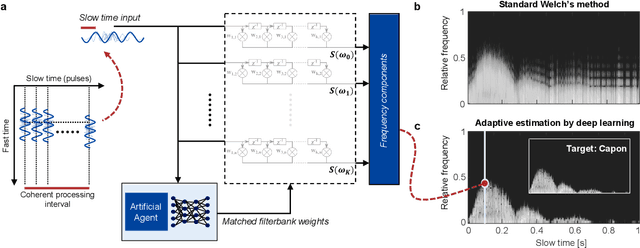

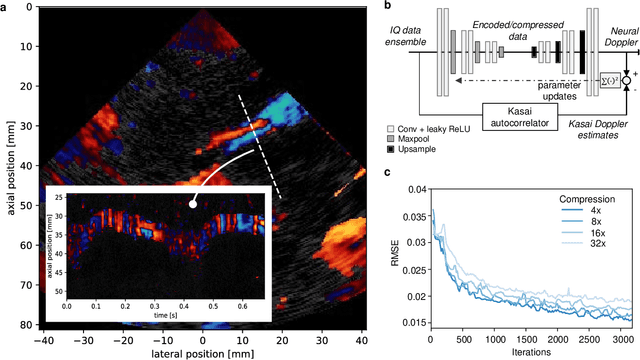

Abstract: Diagnostic imaging plays a critical role in healthcare, serving as a fundamental asset for timely diagnosis, disease staging and management as well as for treatment choice, planning, guidance, and follow-up. Among the diagnostic imaging options, ultrasound imaging is uniquely positioned, being a highly cost-effective modality that offers the clinician an unmatched and invaluable level of interaction, enabled by its real-time nature. Ultrasound probes are becoming increasingly compact and portable, with the market demand for low-cost pocket-sized and (in-body) miniaturized devices expanding. At the same time, there is a strong trend towards 3D imaging and the use of high-frame-rate imaging schemes, both accompanied by dramatically increasing data rates that pose a heavy burden on the probe-system communication and subsequent image reconstruction algorithms. With the demand for high-quality image reconstruction and signal extraction from fewer (e.g., unfocused or parallel) transmissions that facilitate fast imaging, and a push towards compact probes, modern ultrasound imaging leans heavily on innovations in powerful digital receive channel processing. Beamforming, the process of mapping received ultrasound echoes to the spatial image domain, naturally lies at the heart of the ultrasound image formation chain. In this chapter on Deep Learning for Ultrasound Beamforming, we discuss why and when deep learning methods can play a compelling role in the digital beamforming pipeline, and then show how these data-driven systems can be leveraged for improved ultrasound image reconstruction.
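
To make "mapping received ultrasound echoes to the spatial image domain" concrete, here is a minimal sketch of conventional delay-and-sum beamforming for a single pixel. This is the classical baseline, not a deep learning method from the chapter. The setup is an assumption for illustration: a linear array, one unfocused plane-wave transmit at zero angle, nearest-sample delays without interpolation or apodization, and hypothetical constants and function names.

```python
import math

SPEED_OF_SOUND = 1540.0  # m/s, a common soft-tissue assumption
SAMPLING_RATE = 40e6     # Hz, assumed for this example


def round_trip_delay(x, z, element_x):
    """Plane wave travels depth z to the pixel; the echo returns to the element."""
    return (z + math.hypot(x - element_x, z)) / SPEED_OF_SOUND


def delay_and_sum(channel_data, element_positions, x, z):
    """Sum every channel at the sample matching the pixel's round-trip delay."""
    value = 0.0
    for samples, element_x in zip(channel_data, element_positions):
        index = round(round_trip_delay(x, z, element_x) * SAMPLING_RATE)
        if 0 <= index < len(samples):
            value += samples[index]
    return value


def simulate_point_scatterer(element_positions, x, z, n_samples):
    """Synthetic channel data: one unit impulse per channel from a point target."""
    data = []
    for element_x in element_positions:
        samples = [0.0] * n_samples
        index = round(round_trip_delay(x, z, element_x) * SAMPLING_RATE)
        if 0 <= index < n_samples:
            samples[index] = 1.0
        data.append(samples)
    return data


# Usage: 32 elements at 0.3 mm pitch, point target at 20 mm depth on the axis.
elements = [(i - 15.5) * 0.3e-3 for i in range(32)]
data = simulate_point_scatterer(elements, 0.0, 0.02, 2048)
on_target = delay_and_sum(data, elements, 0.0, 0.02)
off_target = delay_and_sum(data, elements, 0.005, 0.02)
```

At the target location the delayed samples align across all channels, so `on_target` collects every impulse, while `off_target` picks up far fewer. Learned beamformers of the kind the chapter discusses typically replace or augment parts of this fixed summation.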

Deep learning in ultrasound imaging

Jul 29, 2019

Abstract: We consider deep learning strategies in ultrasound systems, from the front-end to advanced applications. Our goal is to provide the reader with a broad understanding of the possible impact of deep learning methodologies on many aspects of ultrasound imaging. In particular, we discuss methods that lie at the interface of signal acquisition and machine learning, exploiting both data structure (e.g., sparsity in some domain) and data dimensionality (big data) already at the raw radio-frequency channel stage. As some examples, we outline efficient and effective deep learning solutions for adaptive beamforming and adaptive spectral Doppler through artificial agents, learn compressive encodings for color Doppler, and provide a framework for structured signal recovery by learning fast approximations of iterative minimization problems, with applications to clutter suppression and super-resolution ultrasound. These emerging technologies may have considerable impact on ultrasound imaging, showing promise across key components in the receive processing chain.
