Martin Fischer

SYNBUILD-3D: A large, multi-modal, and semantically rich synthetic dataset of 3D building models at Level of Detail 4

Aug 28, 2025

Abstract:3D building models are critical for applications in architecture, energy simulation, and navigation. Yet, generating accurate and semantically rich 3D buildings automatically remains a major challenge due to the lack of large-scale annotated datasets in the public domain. Inspired by the success of synthetic data in computer vision, we introduce SYNBUILD-3D, a large, diverse, and multi-modal dataset of over 6.2 million synthetic 3D residential buildings at Level of Detail (LoD) 4. In the dataset, each building is represented through three distinct modalities: a semantically enriched 3D wireframe graph at LoD 4 (Modality I), the corresponding floor plan images (Modality II), and a LiDAR-like roof point cloud (Modality III). The semantic annotations for each building wireframe are derived from the corresponding floor plan images and include information on rooms, doors, and windows. Through its tri-modal nature, future work can use SYNBUILD-3D to develop novel generative AI algorithms that automate the creation of 3D building models at LoD 4, subject to predefined floor plan layouts and roof geometries, while enforcing semantic-geometric consistency. Dataset and code samples are publicly available at https://github.com/kdmayer/SYNBUILD-3D.

A Mobile Robotic Approach to Autonomous Surface Scanning in Legal Medicine

Feb 20, 2025

Abstract:Purpose: Comprehensive legal medicine documentation includes both an internal and an external examination of the corpse. Typically, this documentation is conducted manually during conventional autopsy. A systematic digital documentation would be desirable, especially for the external examination of wounds, which is becoming more relevant for legal medicine analysis. For this purpose, RGB surface scanning has been introduced. While a manual full surface scan using a handheld camera is time-consuming and operator-dependent, floor- or ceiling-mounted robotic systems require substantial space and a dedicated room. Hence, we consider whether a mobile robotic system can be used for external documentation. Methods: We develop a mobile robotic system that enables full-body RGB-D surface scanning. Our work includes a detailed configuration space analysis to identify the environmental parameters that need to be considered to successfully perform a surface scan. We validate our findings through an experimental study in the lab and demonstrate the system's application in a legal medicine environment. Results: Our configuration space analysis shows that a good trade-off between coverage and time is reached with three robot base positions, leading to a coverage of 94.96 %. Experiments validate the effectiveness of the system in accurately capturing body surface geometry with an average surface coverage of 96.90 ± 3.16 % and 92.45 ± 1.43 % for a body phantom and actual corpses, respectively. Conclusion: This work demonstrates the potential of a mobile robotic system to automate RGB-D surface scanning in legal medicine, complementing the use of post-mortem CT scans for inner documentation. Our results indicate that the proposed system can contribute to more efficient and autonomous legal medicine documentation, reducing the need for manual intervention.

A Modified da Vinci Surgical Instrument for OCE based Elasticity Estimation with Deep Learning

Mar 14, 2024

Abstract:Robot-assisted surgery has advantages compared to conventional laparoscopic procedures, e.g., precise movement of the surgical instruments, improved dexterity, and high-resolution visualization of the surgical field. However, mechanical tissue properties may provide additional information, e.g., on the location of lesions or vessels. While elastographic imaging has been proposed, it is not readily available as an online modality during robot-assisted surgery. We propose modifying a da Vinci surgical instrument to realize optical coherence elastography (OCE) for quantitative elasticity estimation. The modified da Vinci instrument is equipped with piezoelectric elements for shear wave excitation, and we employ fast optical coherence tomography (OCT) imaging to track propagating wave fields, which are directly related to biomechanical tissue properties. All high-voltage components are mounted at the proximal end outside the patient. We demonstrate that external excitation at the instrument shaft can effectively stimulate shear waves, even when considering damping. Comparing conventional and deep learning-based signal processing yields mean absolute errors of 19.27 kPa and 6.29 kPa, respectively. These results illustrate that precise quantitative elasticity estimates can be obtained. We also demonstrate quantitative elasticity estimation on ex-vivo tissue samples of heart, liver, and stomach, and show that the measurements can be used to distinguish soft and stiff tissue types.

LeanAI: A method for AEC practitioners to effectively plan AI implementations

Jun 29, 2023

Abstract:Recent developments in Artificial Intelligence (AI) provide unprecedented automation opportunities in the Architecture, Engineering, and Construction (AEC) industry. However, despite the enthusiasm regarding the use of AI, 85% of current big data projects fail. One of the main reasons for AI project failures in the AEC industry is the disconnect between those who plan or decide to use AI and those who implement it. AEC practitioners often lack a clear understanding of the capabilities and limitations of AI, leading to a failure to distinguish between what AI should solve, what it can solve, and what it will solve, treating these categories as if they are interchangeable. This lack of understanding results in the disconnect between AI planning and implementation because the planning is based on a vision of what AI should solve without considering if it can or will solve it. To address this challenge, this work introduces the LeanAI method. The method has been developed using data from several ongoing longitudinal studies analyzing AI implementations in the AEC industry, which involved 50+ hours of interview data. The LeanAI method delineates what AI should solve, what it can solve, and what it will solve, forcing practitioners to clearly articulate these components early in the planning process itself by involving the relevant stakeholders. By utilizing the method, practitioners can effectively plan AI implementations, thus increasing the likelihood of success and ultimately speeding up the adoption of AI. A case example illustrates the usefulness of the method.

Points for Energy Renovation: A LiDAR-Derived Point Cloud Dataset of One Million English Buildings Linked to Energy Characteristics

Jun 28, 2023

Abstract:Rapid renovation of Europe's inefficient buildings is required to reduce climate change. However, analyzing and evaluating buildings at scale is challenging because every building is unique. In current practice, the energy performance of buildings is assessed during on-site visits, which are slow, costly, and local. This paper presents a building point cloud dataset that promotes a data-driven, large-scale understanding of the 3D representation of buildings and their energy characteristics. We generate building point clouds by intersecting building footprints with geo-referenced LiDAR data and link them with attributes from the UK's energy performance database via the Unique Property Reference Number (UPRN). To achieve a representative sample, we select one million buildings from a range of rural and urban regions across England, of which half a million are linked to energy characteristics. Building point clouds in new regions can be generated with the open-source code published alongside the paper. The dataset enables novel research in building energy modeling and can be easily expanded to other research fields by adding building features via the UPRN or geo-location.
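The footprint intersection and UPRN linkage described in this abstract can be illustrated with a small sketch. It is not the authors' published code: it assumes each footprint is a simple polygon in the same coordinate system as the LiDAR points, uses a standard ray-casting point-in-polygon test, and the names `clip_points_to_footprint` and `link_by_uprn` as well as the sample records are hypothetical.

```python
from typing import Dict, List, Tuple

Point2D = Tuple[float, float]
Point3D = Tuple[float, float, float]


def point_in_polygon(x: float, y: float, polygon: List[Point2D]) -> bool:
    """Ray-casting test: count edge crossings of a ray going right from (x, y)."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def clip_points_to_footprint(
    points: List[Point3D], footprint: List[Point2D]
) -> List[Point3D]:
    """Keep the LiDAR points whose (x, y) position falls inside the footprint."""
    return [p for p in points if point_in_polygon(p[0], p[1], footprint)]


def link_by_uprn(
    clouds: Dict[str, List[Point3D]], energy: Dict[str, dict]
) -> Dict[str, dict]:
    """Join point clouds with energy attributes on a shared UPRN key.

    Buildings without a matching energy record are simply left out, which
    mirrors the fact that only part of the dataset is linked.
    """
    return {
        uprn: {"points": pts, "energy": energy[uprn]}
        for uprn, pts in clouds.items()
        if uprn in energy
    }


# Hypothetical example data: a 10 m x 10 m footprint and three LiDAR returns.
footprint = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
lidar = [(5.0, 5.0, 3.0), (15.0, 5.0, 2.0), (1.0, 9.0, 7.5)]
building = clip_points_to_footprint(lidar, footprint)
linked = link_by_uprn({"uprn-a": building, "uprn-b": []}, {"uprn-a": {"rating": "D"}})
```

A production pipeline would more likely rely on a geospatial library and spatial indexing; the plain-Python version only makes the two steps (clip, then join) explicit.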

BIM-GPT: a Prompt-Based Virtual Assistant Framework for BIM Information Retrieval

Apr 18, 2023

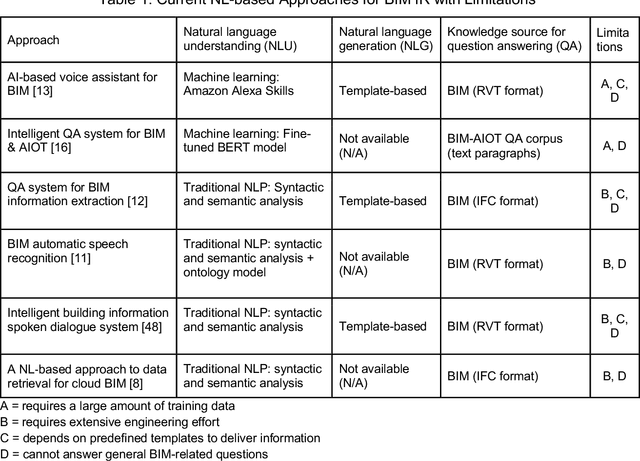

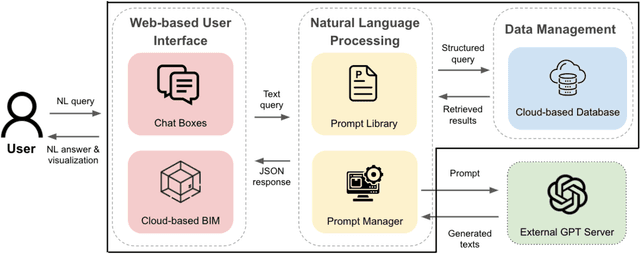

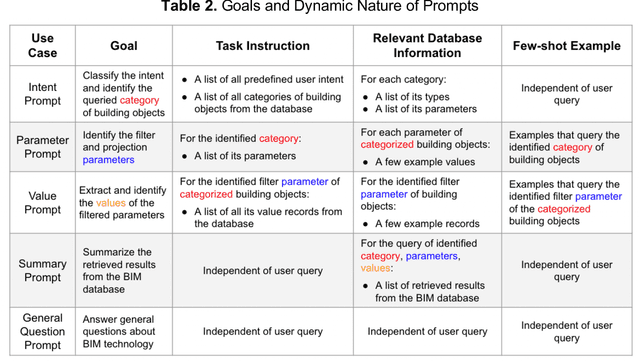

Abstract:Efficient information retrieval (IR) from building information models (BIMs) poses significant challenges due to the necessity for deep BIM knowledge or extensive engineering efforts for automation. We introduce BIM-GPT, a prompt-based virtual assistant (VA) framework integrating BIM and generative pre-trained transformer (GPT) technologies to support natural language (NL)-based IR. A prompt manager and dynamic template generate prompts for GPT models, enabling interpretation of NL queries, summarization of retrieved information, and answering BIM-related questions. In tests on a BIM IR dataset, our approach achieved 83.5% and 99.5% accuracy rates for classifying NL queries with no data and 2% data incorporated in prompts, respectively. Additionally, we validated the functionality of BIM-GPT through a VA prototype for a hospital building. This research contributes to the development of effective and versatile VAs for BIM IR in the construction industry, significantly enhancing BIM accessibility and reducing engineering efforts and training data requirements for processing NL queries.

Digital Twin: Where do humans fit in?

Jan 08, 2023

Abstract:Digital Twin (DT) technology is far from being comprehensive and mature, resulting in its piecemeal implementation in practice, where some functions are automated by DTs and others are still performed by humans. This piecemeal implementation of DTs often leaves practitioners wondering what roles (or functions) to allocate to DTs in a work system, and how this might impact humans. A lack of knowledge about the roles that humans and DTs play in a work system can result in significant costs, misallocation of resources, unrealistic expectations from DTs, and strategic misalignments. To alleviate this challenge, this paper answers the research question: When humans work with DTs, what types of roles can a DT play, and to what extent can those roles be automated? Specifically, we propose a two-dimensional conceptual framework, Levels of Digital Twin (LoDT). The framework is an integration of the types of roles a DT can play, broadly categorized under (1) Observer, (2) Analyst, (3) Decision Maker, and (4) Action Executor, and the extent of automation for each of these roles, divided into five different levels ranging from completely manual to fully automated. A particular DT can play any number of roles at varying levels. The framework can help practitioners systematically plan DT deployments, clearly communicate goals and deliverables, and lay out a strategic vision. A case study illustrates the usefulness of the framework.
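One way to picture the two dimensions of LoDT is as a mapping from role to automation level. The sketch below is only an illustration: the four role names come from the abstract, but the integer scale 0 to 4 (0 as completely manual, 4 as fully automated), the class `DigitalTwinProfile`, and the example assignment are assumptions, since the abstract does not name or number the five levels.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class Role(Enum):
    # Role names as listed in the abstract.
    OBSERVER = "Observer"
    ANALYST = "Analyst"
    DECISION_MAKER = "Decision Maker"
    ACTION_EXECUTOR = "Action Executor"


# Assumed numbering of the five levels; the abstract gives only the endpoints.
MANUAL = 0
FULLY_AUTOMATED = 4


@dataclass
class DigitalTwinProfile:
    """Hypothetical record of which roles a DT plays and how automated each is."""

    name: str
    levels: Dict[Role, int] = field(default_factory=dict)

    def assign(self, role: Role, level: int) -> None:
        if not MANUAL <= level <= FULLY_AUTOMATED:
            raise ValueError(f"level must be in {MANUAL}..{FULLY_AUTOMATED}")
        self.levels[role] = level

    def human_led_roles(self) -> List[Role]:
        """Roles that remain completely manual, i.e. performed by humans."""
        return [r for r in Role if self.levels.get(r, MANUAL) == MANUAL]


# Hypothetical deployment: monitoring is fully automated, analysis partly so.
profile = DigitalTwinProfile("building-hvac-twin")
profile.assign(Role.OBSERVER, FULLY_AUTOMATED)
profile.assign(Role.ANALYST, 2)
remaining = profile.human_led_roles()
```

Treating unassigned roles as manual reflects the abstract's point that a DT can play any number of roles, with the rest left to humans.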

Vitruvio: 3D Building Meshes via Single Perspective Sketches

Oct 24, 2022

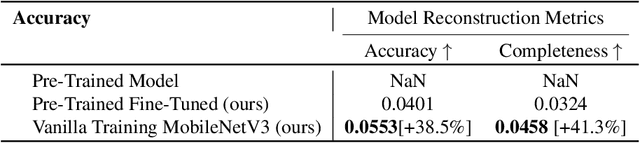

Abstract:Today's architecture, engineering, and construction (AEC) software requires a learning curve to generate a three-dimensional building representation. This limits the ability to quickly validate the volumetric implications of an initial design idea communicated via a single sketch. Allowing designers to translate a single sketch to a 3D building will enable owners to instantly visualize 3D project information without the cognitive load required. Although previous state-of-the-art (SOTA) data-driven methods for single view reconstruction (SVR) showed outstanding results in the reconstruction process from a single image or sketch, they lacked specific applications, analysis, and experiments in the AEC domain. Therefore, this research addresses this gap by introducing a deep learning method: Vitruvio. Vitruvio adapts Occupancy Network for SVR tasks on a specific building dataset (Manhattan 1K). This adaptation brings two main improvements. First, it accelerates the inference process by more than 26% (from 0.5s to 0.37s). Second, it increases the reconstruction accuracy (measured by the Chamfer Distance) by 18%. During this adaptation in the AEC domain, we evaluate the effect of the building orientation in the learning procedure since it constitutes an important design factor. While aligning all the buildings to a canonical pose improved the overall quantitative metrics, it did not capture fine-grained details in more complex building shapes (as shown in our qualitative analysis). Finally, Vitruvio outputs a 3D-printable building mesh with arbitrary topology and genus from a single perspective sketch, providing a step forward to allow owners and designers to communicate 3D information via a 2D, effective, intuitive, and universal communication medium: the sketch.
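For readers unfamiliar with the Chamfer Distance used as the accuracy metric here, a minimal brute-force sketch follows. Definitions vary between papers (squared or unsquared distances, sums or means), and the abstract does not say which variant Vitruvio uses; this version assumes the symmetric mean of unsquared nearest-neighbor distances, and the function name `chamfer_distance` is chosen for illustration.

```python
import math
from typing import List, Sequence

Point = Sequence[float]


def chamfer_distance(a: List[Point], b: List[Point]) -> float:
    """Symmetric Chamfer distance between two point sets.

    For each point in one set, take the Euclidean distance to its nearest
    neighbor in the other set, average these, and add both directions.
    Brute force, O(len(a) * len(b)); fine for small illustrative inputs.
    """
    if not a or not b:
        raise ValueError("both point sets must be non-empty")

    def mean_nearest(src: List[Point], dst: List[Point]) -> float:
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)

    return mean_nearest(a, b) + mean_nearest(b, a)


# Hypothetical point samples from a predicted and a reference mesh surface.
predicted = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]
score = chamfer_distance(predicted, reference)
```

Lower values mean the two surfaces are closer, so an accuracy gain corresponds to a reduction in this score. In practice the point sets are sampled from the meshes and a spatial index replaces the brute-force search.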

A new perspective on Digital Twins: Imparting intelligence and agency to entities

Oct 11, 2022

Abstract:Although the Digital Twin (DT) concept has been in the industry for a long time, it remains ambiguous and hard to differentiate from information models, general computing, and simulation technologies. Part of this confusion stems from previous studies overlooking the DT's bidirectional nature, which enables the shift of agency (delegating control) from humans to physical elements, something that was not possible with earlier technologies. Thus, we present DTs in a new light by viewing them as a means of imparting intelligence and agency to entities, emphasizing that DTs are not just expert-centric tools but are active systems that extend the capabilities of the entities being twinned. This new perspective on DTs can help reduce confusion and humanize the concept by starting discussions about how intelligent a DT should be, and its roles and responsibilities, as well as setting a long-term direction for DTs.

Digital Twin: From Concept to Practice

Jan 14, 2022

Abstract:Recent technological developments and advances in Artificial Intelligence (AI) have enabled sophisticated capabilities to be a part of Digital Twin (DT), virtually making it possible to introduce automation into all aspects of work processes. Given these possibilities that DT can offer, practitioners are facing increasingly difficult decisions regarding what capabilities to select while deploying a DT in practice. The lack of research in this field has not helped either. It has resulted in the rebranding and reuse of emerging technological capabilities like prediction, simulation, AI, and Machine Learning (ML) as necessary constituents of DT. Inappropriate selection of capabilities in a DT can result in missed opportunities, strategic misalignments, inflated expectations, and risk of it being rejected as just hype by the practitioners. To alleviate this challenge, this paper proposes the digitalization framework, designed and developed by following a Design Science Research (DSR) methodology over a period of 18 months. The framework can help practitioners select an appropriate level of sophistication in a DT by weighing the pros and cons for each level, deciding evaluation criteria for the digital twin system, and assessing the implications of the selected DT on the organizational processes and strategies, and value creation. Three real-life case studies illustrate the application and usefulness of the framework.
