Markus Hecher

Structure-Aware Encodings of Argumentation Properties for Clique-width

Nov 13, 2025

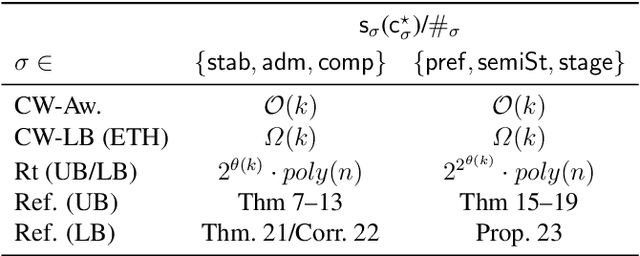

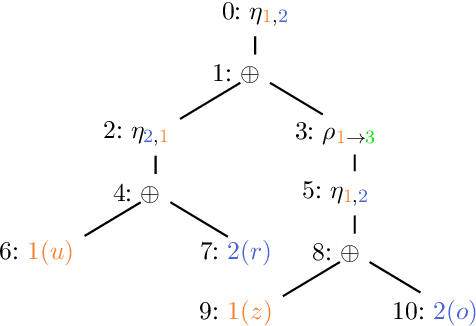

Abstract: Structural measures of graphs, such as treewidth, are central tools in computational complexity, yielding efficient algorithms when the parameter is exploited. It is even known that modern SAT solvers work efficiently on instances of small treewidth. Since these solvers are widely applied, research is interested in compact encodings into (Q)SAT, both for solving and for understanding encoding limitations. Even more general is the graph parameter clique-width, which unlike treewidth can be small for dense graphs. Although algorithms are available for clique-width, little is known about encodings. We initiate the quest to understand encoding capabilities with clique-width by considering abstract argumentation, which is a robust framework for reasoning with conflicting arguments. It is based on directed graphs and asks for computationally challenging properties, making it a natural candidate to study computational properties. We design novel reductions from argumentation problems to (Q)SAT. Our reductions linearly preserve the clique-width, resulting in directed decomposition-guided (DDG) reductions. We establish novel results for all argumentation semantics, including counting. Notably, the overhead caused by our DDG reductions cannot be significantly improved under reasonable assumptions.

Automated Hybrid Grounding Using Structural and Data-Driven Heuristics

Jul 23, 2025

Abstract: The grounding bottleneck is one of the key challenges hindering the widespread adoption of Answer Set Programming in industry. Hybrid Grounding is a step toward alleviating the bottleneck by combining the strength of standard bottom-up grounding with recently proposed techniques where rule bodies are decoupled during grounding. However, it has remained unclear when hybrid grounding should use body-decoupled grounding and when it should use standard bottom-up grounding. In this paper, we address this issue by developing automated hybrid grounding: we introduce a splitting algorithm based on data-structural heuristics that detects when to use body-decoupled grounding and when standard grounding is beneficial. We base our heuristics on the structure of rules and an estimation procedure that incorporates the data of the instance. The experiments conducted on our prototypical implementation demonstrate promising results, which show an improvement on hard-to-ground scenarios, whereas on hard-to-solve instances we approach state-of-the-art performance.

Facets in Argumentation: A Formal Approach to Argument Significance

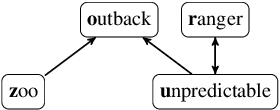

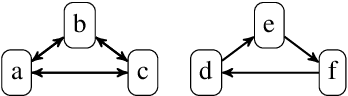

May 16, 2025

Abstract: Argumentation is a central subarea of Artificial Intelligence (AI) for modeling and reasoning about arguments. The semantics of abstract argumentation frameworks (AFs) is given by sets of arguments (extensions) and conditions on the relationship between them, such as stable or admissible. Today's solvers implement tasks such as finding extensions, deciding credulous or skeptical acceptance, counting, or enumerating extensions. While these tasks are well charted, the area between decision, counting/enumeration and fine-grained reasoning requires expensive reasoning so far. We introduce a novel concept (facets) for reasoning between decision and enumeration. Facets are arguments that belong to some extensions (credulous) but not to all extensions (skeptical). They are most natural when a user aims to navigate, filter, or comprehend the significance of specific arguments, according to their needs. We study the complexity and show that tasks involving facets are much easier than counting extensions. Finally, we provide an implementation, and conduct experiments to demonstrate feasibility.
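
To make the definition of facets concrete, the following is a minimal brute-force sketch for stable semantics on a tiny framework. It is only an illustration of the definition, not the implementation mentioned in the abstract; the function names and the example framework are assumptions made here.

```python
from itertools import combinations

def stable_extensions(arguments, attacks):
    """Enumerate stable extensions by brute force (illustrative only).

    A set S is stable if it is conflict-free and attacks every
    argument outside S. `attacks` is a set of (attacker, target) pairs.
    """
    args = sorted(arguments)
    result = []
    for size in range(len(args) + 1):
        for subset in combinations(args, size):
            s = set(subset)
            conflict_free = not any((a, b) in attacks for a in s for b in s)
            attacks_rest = all(
                any((a, b) in attacks for a in s) for b in set(args) - s
            )
            if conflict_free and attacks_rest:
                result.append(frozenset(s))
    return result

def facets(extensions):
    """Arguments in some extension (credulous) but not in all (skeptical)."""
    if not extensions:
        return set()
    credulous = set().union(*extensions)
    skeptical = set.intersection(*map(set, extensions))
    return credulous - skeptical

# Hypothetical example: a and b attack each other, b attacks c, d is isolated.
af_args = {"a", "b", "c", "d"}
af_attacks = {("a", "b"), ("b", "a"), ("b", "c")}
exts = stable_extensions(af_args, af_attacks)
print(sorted(facets(exts)))  # ['a', 'b', 'c']
```

Here the stable extensions are {a, c, d} and {b, d}, so d is skeptically accepted and is not a facet, while a, b, and c each appear in exactly one of the two extensions.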

Rejection in Abstract Argumentation: Harder Than Acceptance?

Aug 20, 2024

Abstract: Abstract argumentation is a popular toolkit for modeling, evaluating, and comparing arguments. Relationships between arguments are specified in argumentation frameworks (AFs), and conditions are placed on sets (extensions) of arguments that allow AFs to be evaluated. For more expressiveness, AFs are augmented with acceptance conditions on directly interacting arguments or a constraint on the admissible sets of arguments, resulting in dialectic frameworks or constrained argumentation frameworks. In this paper, we consider flexible conditions for rejecting an argument from an extension, which we call rejection conditions (RCs). On the technical level, we associate each argument with a specific logic program. We analyze the resulting complexity, including the structural parameter treewidth. Rejection AFs are highly expressive, giving rise to natural problems on higher levels of the polynomial hierarchy.

Extended Version of: On the Structural Hardness of Answer Set Programming: Can Structure Efficiently Confine the Power of Disjunctions?

Feb 05, 2024

Abstract: Answer Set Programming (ASP) is a generic problem modeling and solving framework with a strong focus on knowledge representation and a rapid growth of industrial applications. So far, the study of complexity resulted in characterizing hardness and determining its sources, fine-grained insights in the form of dichotomy-style results, as well as detailed parameterized complexity landscapes. Unfortunately, for the well-known parameter treewidth, disjunctive programs require double-exponential runtime under reasonable complexity assumptions. This quickly becomes out of reach. We deal with the classification of structural parameters for disjunctive ASP on the program's rule structure (incidence graph). First, we provide a polynomial kernel to obtain single-exponential runtime in terms of vertex cover size, despite subset-minimization not being represented in the program's structure. Then we turn our attention to strictly better structural parameters between vertex cover size and treewidth. Here, we provide double-exponential lower bounds for the most prominent parameters in that range: treedepth, feedback vertex size, and cliquewidth. Based on this, we argue that unfortunately our options beyond vertex cover size are limited. Our results provide an in-depth hardness study, relying on a novel reduction from normal to disjunctive programs, trading the increase of complexity for an exponential parameter compression.

IASCAR: Incremental Answer Set Counting by Anytime Refinement

Nov 13, 2023

Abstract: Answer set programming (ASP) is a popular declarative programming paradigm with various applications. Programs can easily have many answer sets that cannot be enumerated in practice, but counting still allows quantifying solution spaces. If one counts under assumptions on literals, one obtains a tool to comprehend parts of the solution space, so-called answer set navigation. However, navigating through parts of the solution space requires counting many times, which is expensive in theory. Knowledge compilation compiles instances into representations on which counting works in polynomial time. However, these techniques exist only for CNF formulas, and compiling ASP programs into CNF formulas can introduce an exponential overhead. This paper introduces a technique to iteratively count answer sets under assumptions on knowledge compilations of CNFs that encode supported models. Our anytime technique uses the inclusion-exclusion principle to improve bounds by over- and undercounting systematically. In a preliminary empirical analysis, we demonstrate promising results. After compiling the input (offline phase), our approach quickly (re)counts.
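
The general idea of alternating over- and undercounts via inclusion-exclusion can be sketched on plain finite sets. This is a generic illustration of truncated inclusion-exclusion (the Bonferroni inequalities), not the IASCAR procedure itself; the function name and the example sets are assumptions made here.

```python
from itertools import combinations

def bonferroni_bounds(sets):
    """Partial inclusion-exclusion sums for the size of a union of sets.

    The k-th entry truncates the inclusion-exclusion formula after the
    k-fold intersections. Entries at odd k are upper bounds, entries at
    even k are lower bounds, and the final entry is the exact size.
    """
    total = 0
    bounds = []
    for k in range(1, len(sets) + 1):
        term = sum(
            len(set.intersection(*combo)) for combo in combinations(sets, k)
        )
        total += term if k % 2 == 1 else -term
        bounds.append(total)
    return bounds

# Hypothetical example: three overlapping sets whose union has 5 elements.
example_sets = [{1, 2, 3}, {2, 3, 4}, {3, 4, 5}]
print(bonferroni_bounds(example_sets))  # [9, 4, 5]
```

Stopping early gives a bound rather than the exact count, which is what makes a scheme of this kind usable in an anytime fashion: each further level tightens the estimate from the opposite side.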

Solving Projected Model Counting by Utilizing Treewidth and its Limits

May 31, 2023

Abstract: In this paper, we introduce a novel algorithm to solve projected model counting (PMC). PMC asks to count solutions of a Boolean formula with respect to a given set of projection variables, where multiple solutions that are identical when restricted to the projection variables count as only one solution. Inspired by the observation that the so-called "treewidth" is one of the most prominent structural parameters, our algorithm utilizes small treewidth of the primal graph of the input instance. More precisely, it runs in time $O(2^{2^{k+4}} n^2)$ where k is the treewidth and n is the input size of the instance. In other words, we obtain that the problem PMC is fixed-parameter tractable when parameterized by treewidth. Further, we take the exponential time hypothesis (ETH) into consideration and establish lower bounds of bounded treewidth algorithms for PMC, yielding asymptotically tight runtime bounds of our algorithm. While the algorithm above serves as a first theoretical upper bound and although it might be quite appealing for small values of k, unsurprisingly a naive implementation adhering to this runtime bound suffers already from instances of relatively small width. Therefore, we turn our attention to several measures in order to resolve this issue towards exploiting treewidth in practice: We present a technique called nested dynamic programming, where different levels of abstractions of the primal graph are used to (recursively) compute and refine tree decompositions of a given instance. Finally, we provide a nested dynamic programming algorithm and an implementation that relies on database technology for PMC and a prominent special case of PMC, namely model counting (#Sat). Experiments indicate that the advancements are promising, allowing us to solve instances of treewidth upper bounds beyond 200.
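
The problem statement of PMC can be illustrated with a naive enumeration over all assignments. This sketch only demonstrates what is being counted; it is exponential in the number of variables and is unrelated to the treewidth-based or nested dynamic programming algorithms described in the abstract. The clause encoding (signed integers, as in DIMACS) and the function name are assumptions made here.

```python
from itertools import product

def projected_model_count(clauses, num_vars, projection):
    """Count models of a CNF formula up to their projection (brute force).

    `clauses` is a list of clauses, each a list of nonzero integers:
    v means variable v is true, -v means variable v is false.
    Two models that agree on all variables in `projection` count once.
    """
    proj_vars = sorted(projection)
    seen = set()
    for bits in product([False, True], repeat=num_vars):
        satisfied = all(
            any(bits[abs(lit) - 1] == (lit > 0) for lit in clause)
            for clause in clauses
        )
        if satisfied:
            seen.add(tuple(bits[v - 1] for v in proj_vars))
    return len(seen)

# Hypothetical example: (x1 or x2) and (not x1 or x3).
cnf = [[1, 2], [-1, 3]]
print(projected_model_count(cnf, 3, {1, 2, 3}))  # 4, ordinary model counting
print(projected_model_count(cnf, 3, {1, 3}))     # 3, projected onto x1 and x3
```

Projecting onto all variables recovers ordinary model counting, the special case mentioned in the abstract. Projecting onto x1 and x3 merges the two models that differ only in x2 while x1 and x3 are both true.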

Characterizing Structural Hardness of Logic Programs: What makes Cycles and Reachability Hard for Treewidth?

Jan 18, 2023

Abstract: Answer Set Programming (ASP) is a problem modeling and solving framework for several problems in KR with growing industrial applications. Also for studies of computational complexity and deeper insights into the hardness and its sources, ASP has been attracting researchers for many years. These studies resulted in fruitful characterizations in terms of complexity classes, fine-grained insights in the form of dichotomy-style results, as well as detailed parameterized complexity landscapes. Recently, this led to a novel result establishing that for the measure treewidth, which captures structural density of a program, the evaluation of the well-known class of normal programs is expected to be slightly harder than deciding satisfiability (SAT). However, it is unclear how to utilize this structural power of ASP. This paper deals with a novel reduction from SAT to normal ASP that goes beyond well-known encodings: We explicitly utilize the structural power of ASP, whereby we sublinearly decrease the treewidth, which probably cannot be significantly improved. Then, compared to existing results, this characterizes hardness in a fine-grained way by establishing the required functional dependency of the dependency graph's cycle length (SCC size) on the treewidth.

Treewidth-aware Reductions of Normal ASP to SAT -- Is Normal ASP Harder than SAT after All?

Oct 07, 2022

Abstract: Answer Set Programming (ASP) is a paradigm for modeling and solving problems for knowledge representation and reasoning. There are plenty of results dedicated to studying the hardness of (fragments of) ASP. So far, these studies resulted in characterizations in terms of computational complexity as well as in fine-grained insights presented in the form of dichotomy-style results, lower bounds when translating to other formalisms like propositional satisfiability (SAT), and even detailed parameterized complexity landscapes. A generic parameter in parameterized complexity originating from graph theory is the so-called treewidth, which in a sense captures structural density of a program. Recently, there was an increase in the number of treewidth-based solvers related to SAT. While there are translations from (normal) ASP to SAT, no reduction that preserves treewidth or at least keeps track of the treewidth increase is known. In this paper we propose a novel reduction from normal ASP to SAT that is aware of the treewidth, and guarantees that a slight increase of treewidth is indeed sufficient. Further, we show a new result establishing that, when considering treewidth, already the fragment of normal ASP is slightly harder than SAT (under reasonable assumptions in computational complexity). This also confirms that our reduction probably cannot be significantly improved and that the slight increase of treewidth is unavoidable. Finally, we present an empirical study of our novel reduction from normal ASP to SAT, where we compare treewidth upper bounds that are obtained via known decomposition heuristics. Overall, our reduction works better with these heuristics than existing translations.

Advanced Tools and Methods for Treewidth-Based Problem Solving -- Extended Abstract

Aug 24, 2022

Abstract: Computer programs, so-called solvers, for solving the well-known Boolean satisfiability problem (Sat) have been improving for decades. Among the reasons why these solvers are so fast is the implicit usage of the formula's structural properties during solving. One such structural indicator is the so-called treewidth, which tries to measure how close a formula instance is to being easy (tree-like). This work focuses on logic-based problems and treewidth-based methods and tools for solving them. Many of these problems are also relevant for knowledge representation and reasoning (KR) as well as artificial intelligence (AI) in general. We present a new type of problem reduction, which is referred to as decomposition-guided (DG). This reduction type forms the basis to solve a problem for quantified Boolean formulas (QBFs) of bounded treewidth that has been open since 2004. The solution of this problem then gives rise to a new methodology for proving precise lower bounds for a range of further formalisms in logic, KR, and AI. Despite the established lower bounds, we implement an algorithm for solving extensions of Sat efficiently, by directly using treewidth. Our implementation is based on finding abstractions of instances, which are then incrementally refined in the process. Thereby, our observations confirm that treewidth is an important measure that should be considered in the design of modern solvers.
