Mark Cheung

Reconstructing Fine-Grained Network Data using Autoencoder Architectures with Domain Knowledge Penalties

Apr 15, 2025

Abstract: The ability to reconstruct fine-grained network session data, including individual packets, from coarse-grained feature vectors is crucial for improving network security models. However, the large-scale collection and storage of raw network traffic pose significant challenges, particularly for capturing rare cyberattack samples. These challenges hinder the ability to retain comprehensive datasets for model training and future threat detection. To address this, we propose a machine learning approach guided by formal methods to encode and reconstruct network data. Our method employs autoencoder models with domain-informed penalties to impute PCAP session headers from structured feature representations. Experimental results demonstrate that incorporating domain knowledge through constraint-based loss terms significantly improves reconstruction accuracy, particularly for categorical features with session-level encodings. By enabling efficient reconstruction of detailed network sessions, our approach facilitates data-efficient model training while preserving privacy and storage efficiency.
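The idea of a constraint-based loss term can be illustrated with a minimal sketch. The abstract does not state which domain rules the paper uses, so the rule below (reconstructed packet lengths should sum to a session-level byte count) and the names `byte_count_penalty`, `total_loss`, and `weight` are hypothetical and chosen only for illustration.

```python
def reconstruction_loss(pred, target):
    """Mean squared error between reconstructed and true values."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def byte_count_penalty(packet_lengths, session_total_bytes):
    """Hypothetical domain rule: packet lengths should add up to the
    byte count recorded in the coarse-grained session features."""
    return abs(sum(packet_lengths) - session_total_bytes)


def total_loss(pred, target, session_total_bytes, weight=0.1):
    """Reconstruction error plus a weighted penalty for breaking the rule."""
    return (reconstruction_loss(pred, target)
            + weight * byte_count_penalty(pred, session_total_bytes))


# A reconstruction that is off by 10 bytes in one packet pays twice:
# once in the reconstruction term and once in the constraint term.
loss = total_loss([100, 200, 310], [100, 200, 300], session_total_bytes=600)
```

In a real autoencoder the penalty would need to be differentiable with respect to the decoder output; the absolute difference used here is only one simple choice.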

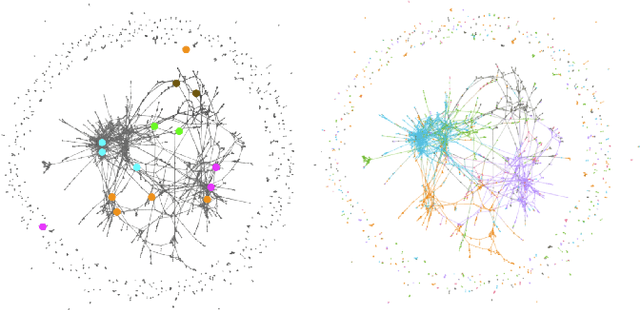

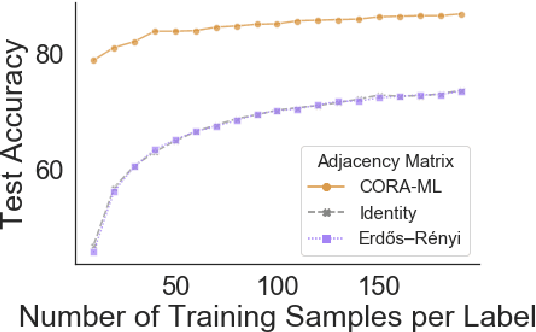

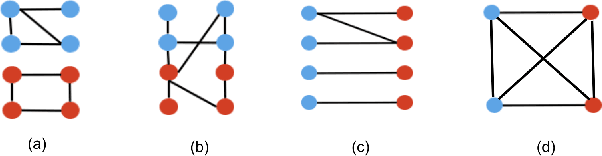

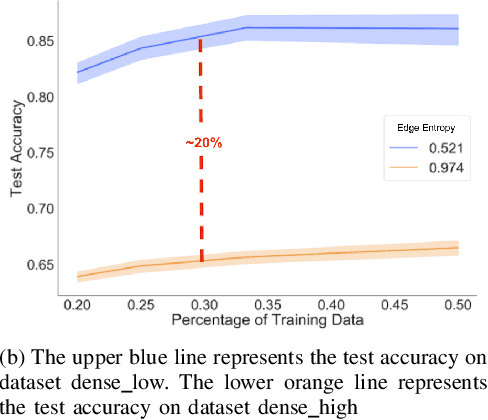

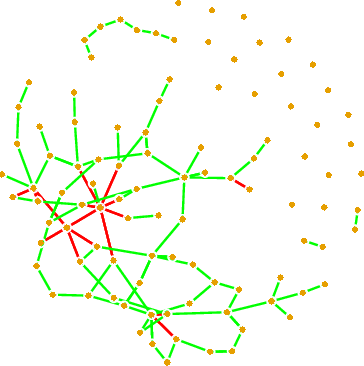

Edge Entropy as an Indicator of the Effectiveness of GNNs over CNNs for Node Classification

Dec 16, 2020

Abstract: Graph neural networks (GNNs) extend convolutional neural networks (CNNs) to graph-based data. A question that arises is how much performance improvement the underlying graph structure gives the GNN over the CNN, which ignores this graph structure. To address this question, we introduce edge entropy and evaluate how good an indicator it is of the possible performance improvement of GNNs over CNNs. Our results on node classification with synthetic and real datasets show that lower values of edge entropy predict larger expected performance gains of GNNs over CNNs and, conversely, that higher edge entropy predicts smaller expected gains.
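The abstract does not give the formula for edge entropy, so the following is only one plausible reading, stated as an assumption: for each class, take the distribution of class labels found at the other end of its edges, compute the Shannon entropy of that distribution (log base equal to the number of classes), and average over classes. The function name and this definition are illustrative and may differ from the paper's.

```python
import math
from collections import Counter, defaultdict


def edge_entropy(edges, labels, num_classes):
    """Average per-class entropy of neighbor-class distributions.

    Assumed definition for illustration; undirected edges are counted
    from both endpoints. Requires num_classes >= 2.
    """
    counts = defaultdict(Counter)
    for u, v in edges:
        counts[labels[u]][labels[v]] += 1
        counts[labels[v]][labels[u]] += 1
    entropies = []
    for neighbor_counts in counts.values():
        total = sum(neighbor_counts.values())
        h = -sum((n / total) * math.log(n / total, num_classes)
                 for n in neighbor_counts.values())
        entropies.append(h)
    return sum(entropies) / len(entropies)


labels = {0: 0, 1: 0, 2: 1, 3: 1}
within_class = edge_entropy([(0, 1), (2, 3)], labels, num_classes=2)
mixed = edge_entropy([(0, 1), (0, 2), (2, 3), (1, 3)], labels, num_classes=2)
```

Under this reading, a graph whose edges stay within classes has entropy near 0, and a graph whose edges are spread evenly across classes has entropy near 1.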

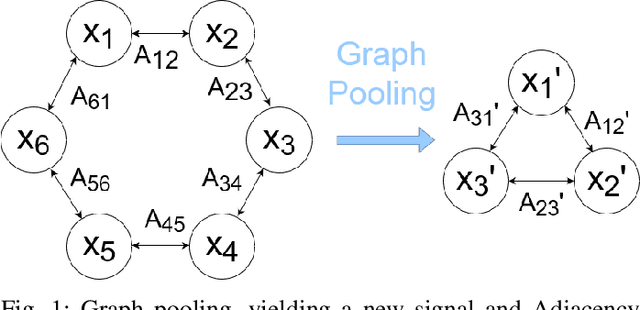

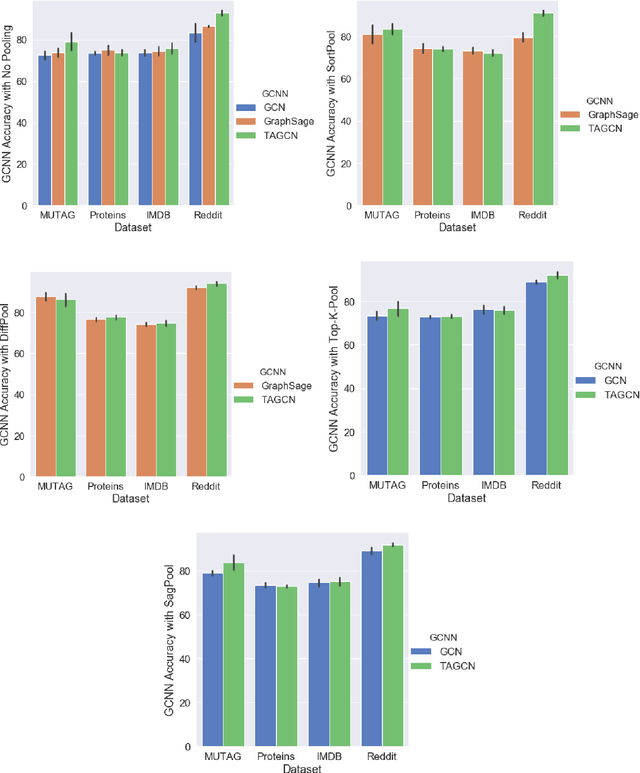

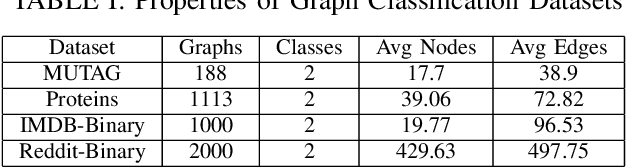

Pooling in Graph Convolutional Neural Networks

Apr 07, 2020

Abstract: Graph convolutional neural networks (GCNNs) are a powerful extension of deep learning techniques to graph-structured data problems. We empirically evaluate several pooling methods for GCNNs, and combinations of those graph pooling methods with three different architectures: GCN, TAGCN, and GraphSAGE. We confirm that graph pooling, especially DiffPool, improves classification accuracy on popular graph classification datasets and find that, on average, TAGCN achieves comparable or better accuracy than GCN and GraphSAGE, particularly for datasets with larger and sparser graph structures.
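As a rough illustration of what a DiffPool-style layer does, the sketch below applies the standard coarsening step: given an adjacency matrix A, node features Z, and a cluster assignment matrix S, the pooled graph is A' = SᵀAS with features X' = SᵀZ. In DiffPool the assignment S is produced by a learned GNN followed by a softmax; here it is a fixed hard assignment supplied by hand, which is a simplification for illustration.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def transpose(m):
    return [list(row) for row in zip(*m)]


def diffpool_coarsen(adj, feats, assign):
    """Return (S^T A S, S^T Z) for assignment matrix S."""
    s_t = transpose(assign)
    pooled_feats = matmul(s_t, feats)
    pooled_adj = matmul(matmul(s_t, adj), assign)
    return pooled_adj, pooled_feats


# Path graph 0-1-2-3; nodes {0, 1} go to cluster 0, nodes {2, 3} to cluster 1.
adj = [[0, 1, 0, 0],
       [1, 0, 1, 0],
       [0, 1, 0, 1],
       [0, 0, 1, 0]]
feats = [[1.0], [2.0], [3.0], [4.0]]
assign = [[1, 0], [1, 0], [0, 1], [0, 1]]
pooled_adj, pooled_feats = diffpool_coarsen(adj, feats, assign)
# pooled_adj   -> [[2, 1], [1, 2]]
# pooled_feats -> [[3.0], [7.0]]
```

The diagonal of the pooled adjacency counts edge endpoints inside each cluster, and the off-diagonal entries count edges between clusters.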

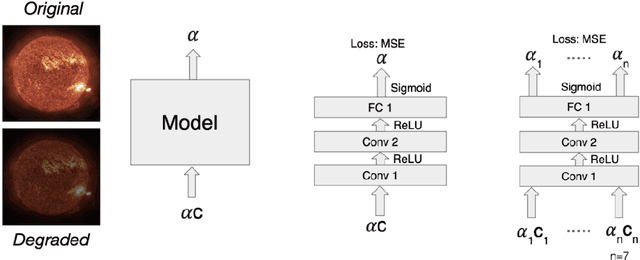

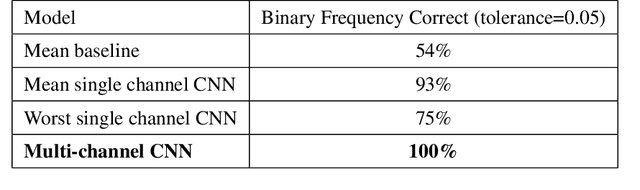

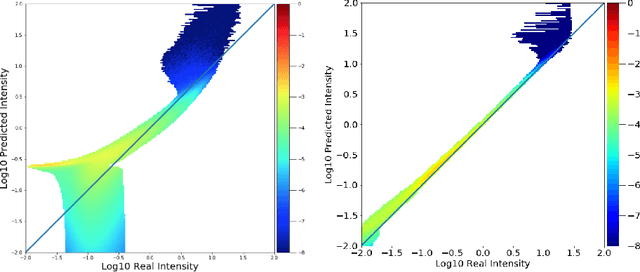

Auto-Calibration of Remote Sensing Solar Telescopes with Deep Learning

Nov 10, 2019

Abstract: As a part of NASA's Heliophysics System Observatory (HSO) fleet of satellites, the Solar Dynamics Observatory (SDO) has continuously monitored the Sun since 2010. Ultraviolet (UV) and Extreme UV (EUV) instruments in orbit, such as SDO's Atmospheric Imaging Assembly (AIA) instrument, suffer time-dependent degradation which reduces instrument sensitivity. Accurate calibration for (E)UV instruments currently depends on periodic sounding rockets, which are infrequent and not practical for heliophysics missions in deep space. In the present work, we develop a Convolutional Neural Network (CNN) that auto-calibrates SDO/AIA channels and corrects sensitivity degradation by exploiting spatial patterns in multi-wavelength observations to arrive at a self-calibration of (E)UV imaging instruments. Our results remove a major impediment to developing future HSO missions of the same scientific caliber as SDO but in deep space, able to observe the Sun from more vantage points than just SDO's current geosynchronous orbit. This approach can be adopted to perform autocalibration of other imaging systems exhibiting similar forms of degradation.

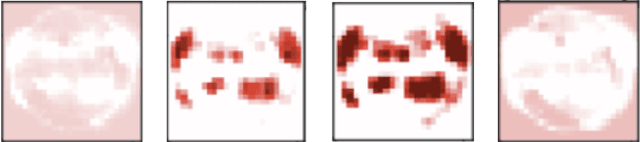

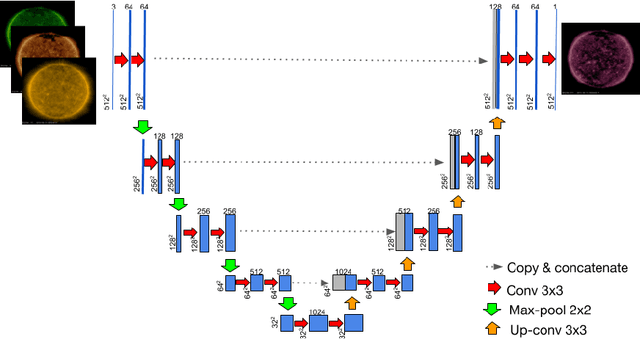

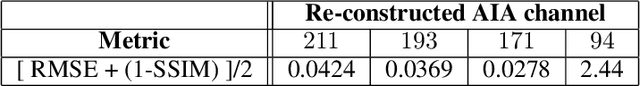

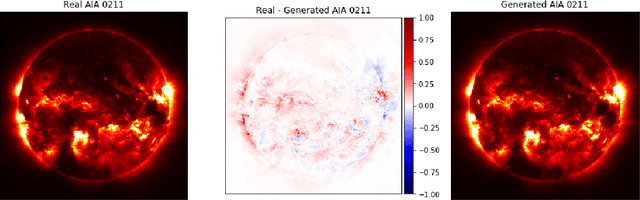

Using U-Nets to Create High-Fidelity Virtual Observations of the Solar Corona

Nov 10, 2019

Abstract: Understanding and monitoring the complex and dynamic processes of the Sun is important for a number of human activities on Earth and in space. For this reason, NASA's Solar Dynamics Observatory (SDO) has been continuously monitoring the Sun's multi-layered atmosphere in high resolution since its launch in 2010, generating terabytes of observational data every day. The synergy between machine learning and this enormous amount of data has the potential, still largely unexploited, to advance our understanding of the Sun and extend the capabilities of heliophysics missions. In the present work, we show that deep learning applied to SDO data can be successfully used to create a high-fidelity virtual telescope that generates synthetic observations of the solar corona by image translation. Towards this end, we developed a deep neural network, structured as an encoder-decoder with skip connections (U-Net), that reconstructs the Sun's image in one instrument channel given temporally aligned images in three other channels. The approach we present has the potential to reduce the telemetry needs of SDO, enhance the capabilities of missions that have fewer observing channels, and transform the concept development of future missions.

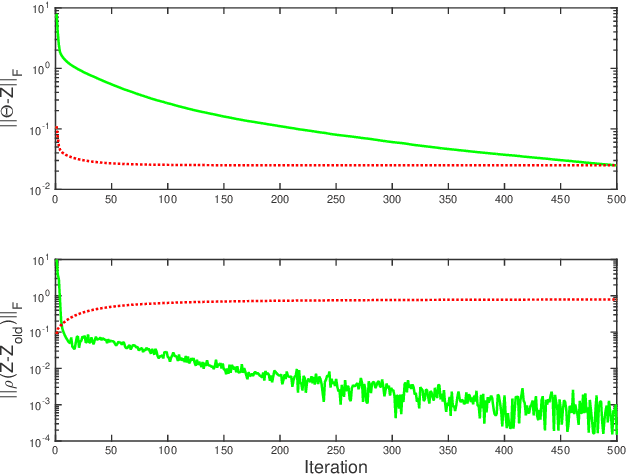

Contrastive Structured Anomaly Detection for Gaussian Graphical Models

May 02, 2016

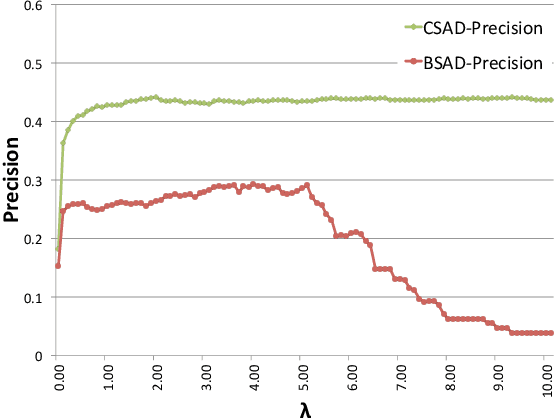

Abstract: Gaussian graphical models (GGMs) are probabilistic tools of choice for analyzing conditional dependencies between variables in complex systems. Finding changepoints in the structural evolution of a GGM is therefore essential to detecting anomalies in the underlying system modeled by the GGM. In order to detect structural anomalies in a GGM, we consider the problem of estimating changes in the precision matrix of the corresponding Gaussian distribution. We take a two-step approach to solving this problem: (i) estimating a background precision matrix using system observations from the past without any anomalies, and (ii) estimating a foreground precision matrix using a sliding temporal window during anomaly monitoring. Our primary contribution is in estimating the foreground precision using a novel contrastive inverse covariance estimation procedure. In order to accurately learn only the structural changes to the GGM, we maximize a penalized log-likelihood where the penalty is the $\ell_1$ norm of the difference between the foreground precision being estimated and the already learned background precision. We modify the alternating direction method of multipliers (ADMM) algorithm for sparse inverse covariance estimation to perform contrastive estimation of the foreground precision matrix. Our results on simulated GGM data show significant improvement in precision and recall for detecting structural changes to the GGM, compared to a non-contrastive sliding window baseline.
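Written out, the penalized log-likelihood described above plausibly takes the following form. The symbols are assumptions introduced here, not notation from the paper: $\Theta$ is the foreground precision matrix being estimated, $\hat{\Theta}_b$ is the previously learned background precision, $S_f$ is the sample covariance of the current sliding window, and $\lambda > 0$ is the penalty weight.

$$
\hat{\Theta}_f = \arg\max_{\Theta \succ 0} \; \log\det\Theta - \operatorname{tr}(S_f \Theta) - \lambda \,\lVert \Theta - \hat{\Theta}_b \rVert_1
$$

The first two terms are the zero-mean Gaussian log-likelihood up to constants and scaling. The last term differs from the usual sparse inverse covariance penalty $\lambda \lVert \Theta \rVert_1$ in that it encourages sparsity in the change from the background rather than in $\Theta$ itself.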
