Mario Botsch

GarmentCodeData: A Dataset of 3D Made-to-Measure Garments With Sewing Patterns

May 27, 2024

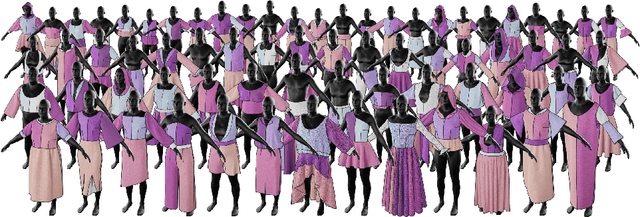

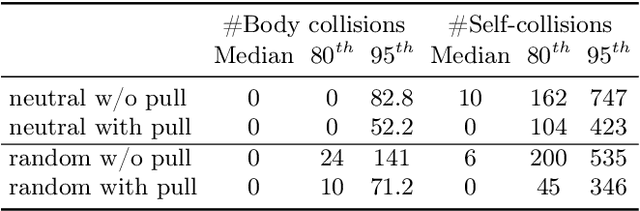

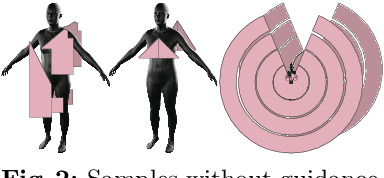

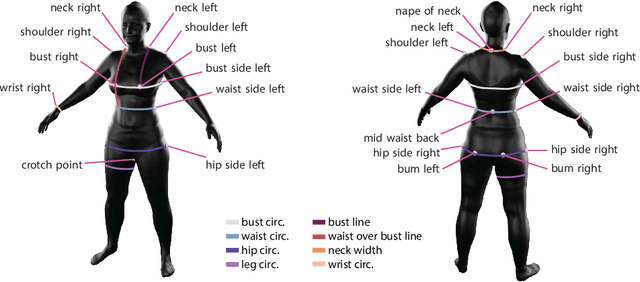

Abstract: Recent research interest in the learning-based processing of garments, from virtual fitting to generation and reconstruction, is hampered by a scarcity of high-quality public data in the domain. We contribute to resolving this need by presenting the first large-scale synthetic dataset of 3D made-to-measure garments with sewing patterns, as well as its generation pipeline. GarmentCodeData contains 115,000 data points that cover a variety of designs in many common garment categories (tops, shirts, dresses, jumpsuits, skirts, pants, etc.). The garments are fitted to a variety of body shapes sampled from a custom statistical body model based on CAESAR, as well as to a standard reference body shape, using three different textile materials. To enable the creation of datasets of such complexity, we introduce a set of algorithms for automatically taking tailor's measures on sampled body shapes and sampling strategies for sewing pattern design, and we propose an automatic, open-source 3D garment draping pipeline based on a fast XPBD simulator, contributing several solutions for collision resolution and drape correctness to enable scalability. Dataset: http://hdl.handle.net/20.500.11850/673889

Non-Negative Kernel Sparse Coding for the Classification of Motion Data

Mar 12, 2019

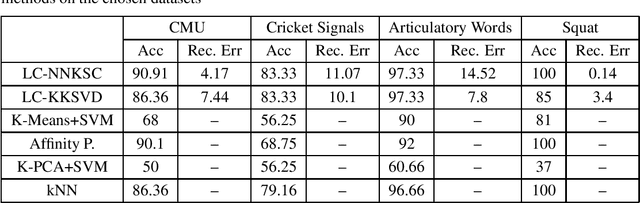

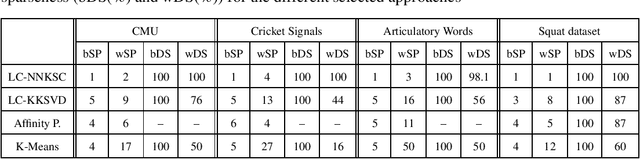

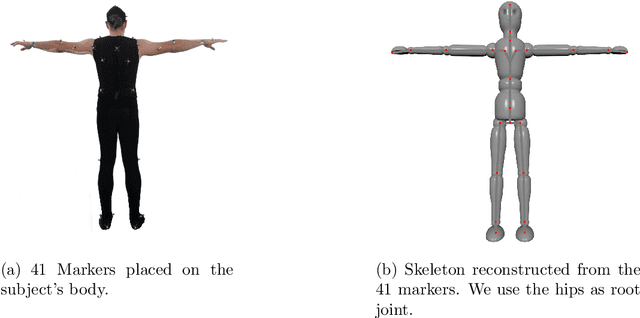

Abstract: We are interested in the decomposition of motion data into a sparse linear combination of base functions, which enables efficient data processing. We combine two prominent frameworks: dynamic time warping (DTW), which offers particularly successful pairwise comparison of motion data, and sparse coding (SC), which enables an automatic decomposition of vectorial data into a sparse linear combination of base vectors. We enhance SC in two ways: an efficient kernelization extends its application domain to general similarity data such as that offered by DTW, and a restriction to non-negative linear representations of signals and base vectors guarantees a meaningful dictionary. Empirical evaluations on motion capture benchmarks show the effectiveness of our framework with regard to interpretability and discrimination.
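To make the idea of non-negative sparse coding on similarity data more concrete, here is a minimal sketch in Python. It is not the method from the paper: it assumes a fixed dictionary, a kernel with non-negative entries, and a standard multiplicative update for the objective k(x,x) − 2aᵀk + aᵀKa + λ·Σa with a ≥ 0. The function name `nonneg_kernel_code` and all parameters are hypothetical.

```python
def nonneg_kernel_code(K, k, lam=0.1, iters=200, eps=1e-12):
    """Sparse non-negative code of one signal over a fixed dictionary.

    K   : Gram matrix between dictionary atoms (list of lists, entries >= 0)
    k   : kernel values between the signal and each atom (entries >= 0)
    lam : weight of the l1 penalty (assumed value, controls sparsity)
    """
    n = len(k)
    a = [1.0 / n] * n  # strictly positive start, as multiplicative updates require
    for _ in range(iters):
        # (K a)_i for every atom i
        Ka = [sum(K[i][j] * a[j] for j in range(n)) for i in range(n)]
        # Multiplicative update: coefficients can shrink to zero but never turn negative
        a = [a[i] * k[i] / (Ka[i] + lam / 2.0 + eps) for i in range(n)]
    return a


if __name__ == "__main__":
    # Two orthogonal atoms; the signal only resembles the first one.
    K = [[1.0, 0.0], [0.0, 1.0]]
    k = [1.0, 0.0]
    print(nonneg_kernel_code(K, k, lam=0.5))
```

Only kernel values enter the computation, so any pairwise similarity (for instance one derived from DTW) could be plugged in, provided it satisfies the non-negativity assumption above.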

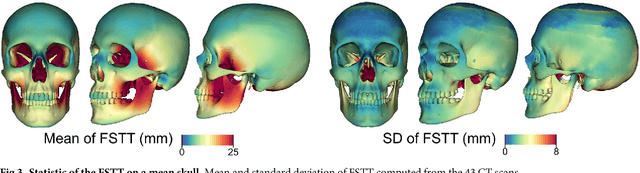

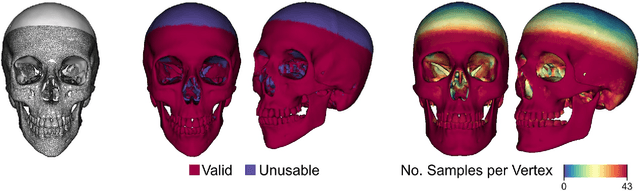

A method for automatic forensic facial reconstruction based on dense statistics of soft tissue thickness

Aug 22, 2018

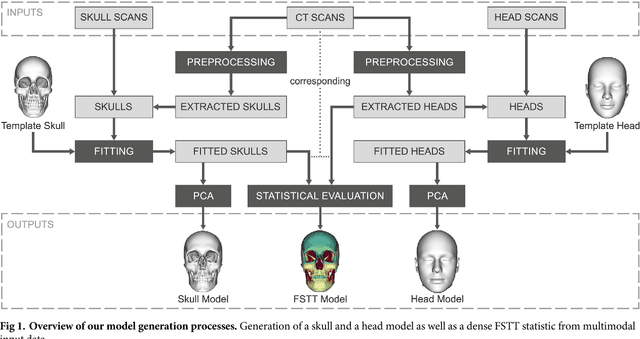

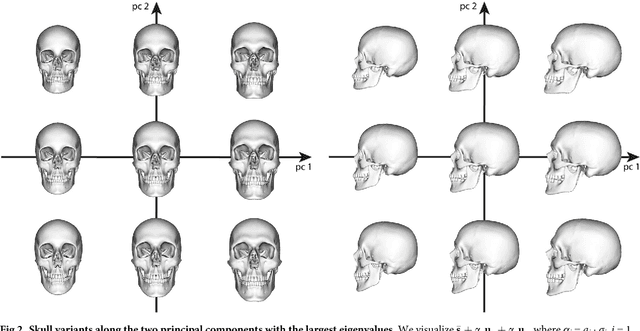

Abstract: In this paper, we present a method for the automated estimation of a human face from skull remains. The proposed method is based on three statistical models: a volumetric (tetrahedral) skull model encoding the variations of different skulls, a surface head model encoding the head variations, and a dense statistic of facial soft tissue thickness (FSTT). All data are automatically derived from computed tomography (CT) head scans and optical face scans. To obtain a proper dense FSTT statistic, we register a skull model to each skull extracted from a CT scan and determine the FSTT value for each vertex of the skull model with respect to the associated extracted skin surface. The FSTT values at predefined landmarks from our statistic agree well with data from the literature. To recover a face from skull remains, we first fit our skull model to the given skull. Next, at each vertex of the registered skull, we generate a sphere whose radius is the respective FSTT value obtained from our statistic. Finally, we fit a head model to the union of all spheres. The proposed automated method enables a probabilistic face estimation that facilitates forensic recovery even from incomplete skull remains. The FSTT statistic allows the generation of plausible head variants, which can be adjusted intuitively using principal component analysis. We validate our face recovery process using an anonymized head CT scan. The estimation generated from the given skull compares well visually with the skin surface extracted from the CT scan itself.
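The sphere step can be illustrated with a small sketch. It assumes that fitting a head model to the union of spheres needs, at minimum, a way to measure how far a candidate skin point lies from that union. The function name `distance_to_sphere_union` and the sample coordinates and radii are hypothetical, not values from the paper.

```python
import math


def distance_to_sphere_union(p, centers, radii):
    """Distance from point p to the union of spheres.

    centers : skull vertices (x, y, z)
    radii   : soft tissue thickness at each vertex, same units as the centers
    Returns a positive distance outside the union and a negative value inside it.
    """
    return min(math.dist(p, c) - r for c, r in zip(centers, radii))


if __name__ == "__main__":
    # Two made-up skull vertices with made-up thickness values
    centers = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
    radii = [2.0, 3.0]
    print(distance_to_sphere_union((5.0, 0.0, 0.0), centers, radii))
```

A fitting procedure could then move head-model vertices so that this distance approaches zero, which places them on the outer envelope of the spheres.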

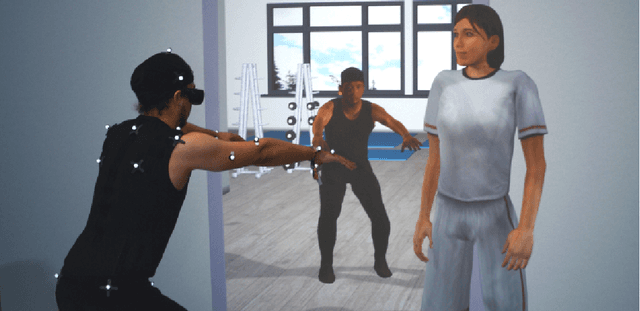

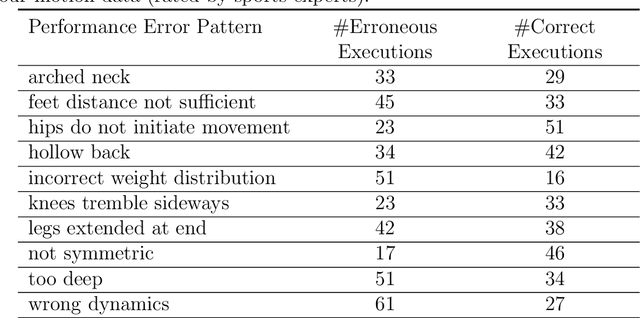

Automatic Error Analysis of Human Motor Performance for Interactive Coaching in Virtual Reality

Sep 26, 2017

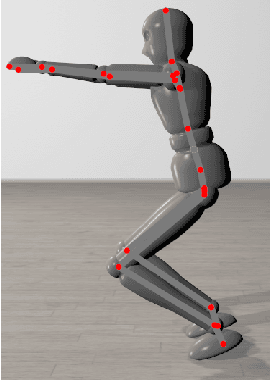

Abstract: In the context of fitness coaching or for rehabilitation purposes, the motor actions of a human participant must be observed and analyzed for errors in order to provide effective feedback. This task is normally carried out by human coaches, and it needs to be solved automatically in technical applications that are to provide automatic coaching (e.g., training environments in VR). However, most coaching systems only provide coarse information on movement quality, such as a scalar value per body part that describes the overall deviation from the correct movement. Further, they are often limited to static body postures or rather simple movements of single body parts. While there are many approaches to distinguishing between different types of movements (e.g., between walking and jumping), the detection of more subtle errors in a motor performance has been investigated less. We propose a novel approach to classify errors in sports or rehabilitation exercises such that feedback can be delivered in a rapid and detailed manner. Homogeneous sub-sequences of exercises are first temporally aligned via Dynamic Time Warping. Next, we extract a feature vector from the aligned sequences, which serves as a basis for feature selection using Random Forests. The selected features are used as input for Support Vector Machines, which finally classify the movement errors. We compare our algorithm to a well-established state-of-the-art approach in time series classification, 1-Nearest Neighbor combined with Dynamic Time Warping, and show our algorithm's superiority regarding classification quality as well as computational cost.
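For orientation, the baseline named in the abstract (1-Nearest Neighbor combined with Dynamic Time Warping) can be sketched in a few lines of Python. This is a textbook formulation on one-dimensional sequences, not the authors' implementation; the function names, the absolute-difference cost, and the labels in the example are assumptions.

```python
def dtw_distance(a, b):
    """Classic dynamic time warping distance between two 1-D sequences."""
    inf = float("inf")
    n, m = len(a), len(b)
    # D[i][j] = cost of the best alignment of a[:i] with b[:j]
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]


def classify_1nn(query, train):
    """Return the label of the training sequence closest to query under DTW.

    train is a list of (sequence, label) pairs.
    """
    return min(train, key=lambda item: dtw_distance(query, item[0]))[1]


if __name__ == "__main__":
    # Hypothetical joint-angle traces with made-up error labels
    train = [([0, 1, 2, 1, 0], "correct"), ([0, 0, 0, 0, 0], "too_shallow")]
    print(classify_1nn([0, 1, 1, 2, 1, 0], train))
```

Each query requires one DTW computation per training sequence, and each computation is quadratic in the sequence length, which illustrates why the computational cost of this baseline is a point of comparison.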
