Maria Trocan

CIC: Circular Image Compression

Jul 18, 2024

Abstract: Learned image compression (LIC) is currently the cutting-edge method. However, the inherent difference between the testing and training images of LIC degrades its performance to some extent. The degradation is especially severe for out-of-sample, out-of-distribution, or out-of-domain testing images. Classical LIC is a serial image compression (SIC) approach that uses an open-loop architecture with serial encoding and decoding units. According to the theory of automatic control, however, a closed-loop architecture has the potential to improve the dynamic and static performance of LIC. Therefore, a circular image compression (CIC) approach with closed-loop encoding and decoding elements is proposed to minimize the gap between testing and training images and to improve the capability of LIC. The proposed CIC establishes a nonlinear loop equation and proves, by Taylor series expansion, that the steady-state error between the reconstructed and original images is close to zero. The proposed CIC method is post-training and plug-and-play, so it can be built on any existing advanced SIC method. Experimental results on five public image compression datasets demonstrate that the proposed CIC outperforms five open-source state-of-the-art competing SIC algorithms in reconstruction capacity. Experimental results further show that the proposed method is suitable for out-of-sample testing images with dark backgrounds, sharp edges, high contrast, grid shapes, or complex patterns.
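The closed-loop idea can be illustrated with a minimal sketch. It is not the authors' implementation: `toy_codec` is a hypothetical stand-in for a full encode-then-decode pass, chosen as a smooth nonlinear map whose slope stays close to 1, and the loop simply adds the reconstruction error back to the codec input. Under that assumption the error shrinks at every pass, which mirrors the near-zero steady-state error claimed in the abstract.

```python
import numpy as np

def toy_codec(u):
    """Hypothetical stand-in for decode(encode(u)): a smooth, lossy, nonlinear map."""
    return 0.8 * u + 0.1 * np.sin(u)

def closed_loop_reconstruct(x, codec, n_iter=10):
    """Feed the reconstruction error back into the codec input (negative feedback)."""
    u = x.copy()                      # the first pass is the ordinary open-loop input
    errors = []
    for _ in range(n_iter):
        e = x - codec(u)              # error between original and reconstruction
        errors.append(float(np.max(np.abs(e))))
        u = u + e                     # compensate the input with the error
    return codec(u), errors

rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=(8, 8))          # toy "image" with values in [0, 1)
x_hat, errors = closed_loop_reconstruct(x, toy_codec)

open_loop_error = errors[0]                      # error of the serial (open-loop) pass
closed_loop_error = float(np.max(np.abs(x - x_hat)))
print(open_loop_error, closed_loop_error)
```

The loop converges here because the toy codec's slope lies between 0.7 and 0.9, so each pass multiplies the error by at most 0.3. A real learned codec offers no such guarantee in general, which is why the abstract relies on a Taylor-series argument.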

CMISR: Circular Medical Image Super-Resolution

Aug 15, 2023

Abstract: Classical methods of medical image super-resolution (MISR) use an open-loop architecture with an implicit under-resolution (UR) unit and an explicit super-resolution (SR) unit. The UR unit can always be given, assumed, or estimated, while the SR unit is elaborately designed according to various SR algorithms. The closed-loop feedback mechanism is widely employed in current MISR approaches and can efficiently improve their performance. The feedback mechanism may be divided into two categories: local and global feedback. This paper proposes a global feedback-based closed-cycle framework, circular MISR (CMISR), with unambiguous UR and SR elements. A mathematical model and a closed-loop equation of CMISR are built. A mathematical proof with Taylor-series approximation indicates that CMISR has zero recovery error in steady state. In addition, CMISR is plug-and-play and can be built on any existing MISR algorithm. Five CMISR algorithms are proposed, each based on a state-of-the-art open-loop MISR algorithm. Experimental results with three scale factors on three open medical image datasets show that CMISR is superior to MISR in reconstruction performance and is particularly suited to medical images with strong edges or intense contrast.

CSwin2SR: Circular Swin2SR for Compressed Image Super-Resolution

Jan 20, 2023

Abstract: The closed-loop negative feedback mechanism is extensively used in automatic control systems and brings about extraordinary dynamic and static performance. To further improve the reconstruction capability of current methods of compressed image super-resolution, a circular Swin2SR (CSwin2SR) approach is proposed. CSwin2SR contains a serial Swin2SR for initial super-resolution reconstruction and a circular Swin2SR for enhanced super-resolution reconstruction. Simulated experimental results show that the proposed CSwin2SR dramatically outperforms the classical Swin2SR in super-resolution recovery. On the DIV2K test and validation datasets, the average increment of PSNR is greater than 1 dB and the corresponding average increment of SSIM is greater than 0.006.
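To put the reported PSNR gain in context, the standard PSNR definition can be sketched as follows. The function name `psnr` and the 8-bit `data_range` default are assumptions made for illustration, and the toy arrays are not from the paper. Since PSNR is 10·log10(peak² / MSE), a gain of 1 dB corresponds to the mean squared error falling by a factor of 10^0.1 ≈ 1.26.

```python
import numpy as np

def psnr(reference, estimate, data_range=255.0):
    """Peak signal-to-noise ratio in dB (standard definition, 8-bit range assumed)."""
    diff = reference.astype(np.float64) - estimate.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")           # identical images
    return 10.0 * np.log10(data_range ** 2 / mse)

reference = np.full((4, 4), 100.0)
coarse = reference + 2.0              # every pixel off by 2, so MSE = 4
fine = reference + 1.0                # every pixel off by 1, so MSE = 1

gain_db = psnr(reference, fine) - psnr(reference, coarse)
mse_ratio_for_1db = 10 ** 0.1         # MSE reduction factor equivalent to +1 dB
print(gain_db, mse_ratio_for_1db)
```

Halving the per-pixel error in this toy case quarters the MSE, so the gain is 10·log10(4), roughly 6.02 dB.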

ICRICS: Iterative Compensation Recovery for Image Compressive Sensing

Jul 19, 2022

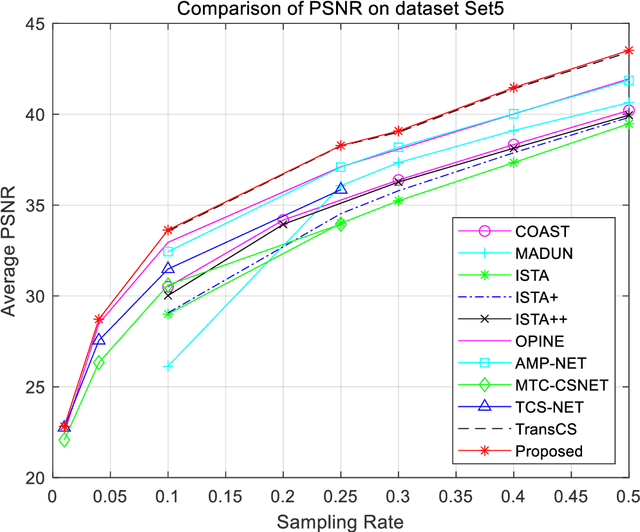

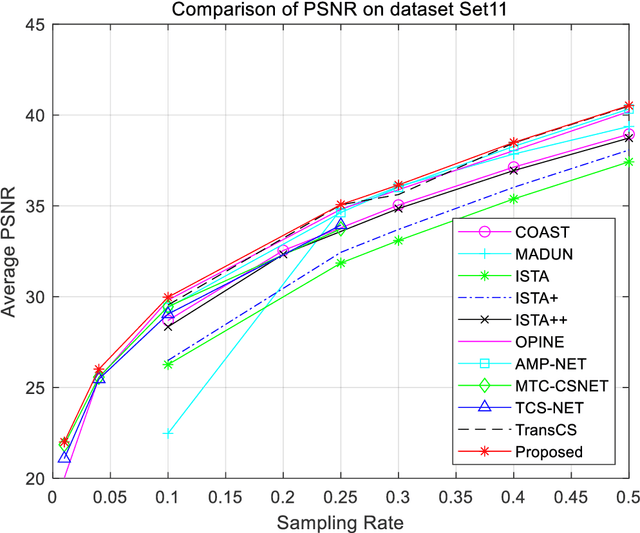

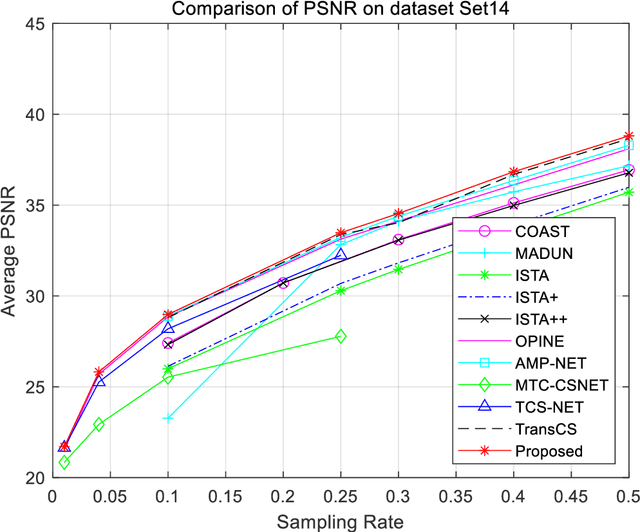

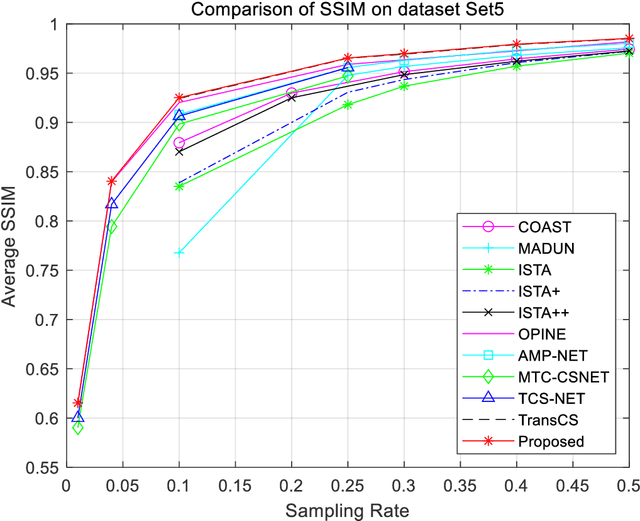

Abstract: Closed-loop architecture is widely used in automatic control systems and attains distinguished performance. However, classical compressive sensing systems employ an open-loop architecture with separate sampling and reconstruction units. Therefore, a method of iterative compensation recovery for image compressive sensing (ICRICS) is proposed by introducing a closed-loop framework into traditional compressive sensing systems. The proposed method can be built on any existing approach and upgrades its reconstruction performance by adding a negative feedback structure. A theoretical analysis of negative feedback in compressive sensing systems is performed. An approximate mathematical proof of the effectiveness of the proposed method is also provided. Simulation experiments on more than 3 image datasets show that the proposed method is superior to 10 competing approaches in reconstruction performance. The maximum increment of average peak signal-to-noise ratio is 4.36 dB and the maximum increment of average structural similarity is 0.034 on one dataset. The proposed method, based on the negative feedback mechanism, can efficiently correct the recovery error in existing image compressive sensing systems.
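A minimal sketch of negative feedback in a compressive sensing setting follows. It is an illustration under stated assumptions, not the ICRICS algorithm itself: the sampling matrix `phi` is random Gaussian, and the hypothetical `base_recover` (a scaled back-projection) stands in for whatever existing recovery method the loop wraps. The measurement residual is re-recovered and added to the estimate, which in this linear toy case is the classical Landweber iteration.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m = 64, 32                                   # signal length, number of measurements
phi = rng.standard_normal((m, n)) / np.sqrt(m)  # assumed random sampling matrix
x_true = rng.standard_normal(n)
y = phi @ x_true                                # open-loop sampling

# A step of 1 / ||phi||_2^2 keeps the feedback loop stable.
step = 1.0 / np.linalg.norm(phi, 2) ** 2

def base_recover(measurements):
    """Hypothetical stand-in for an existing recovery method: scaled back-projection."""
    return step * (phi.T @ measurements)

x_est = base_recover(y)                         # open-loop recovery
residual_open = float(np.linalg.norm(y - phi @ x_est))

for _ in range(200):
    feedback = y - phi @ x_est                  # re-sample the estimate and compare
    x_est = x_est + base_recover(feedback)      # compensate with the recovered residual

residual_closed = float(np.linalg.norm(y - phi @ x_est))
print(residual_open, residual_closed)
```

The loop drives the measurement-domain residual toward zero. Because the toy system is underdetermined and has no image prior, it does not recover `x_true` exactly; a practical base method would supply that prior.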

Patch Selection for Melanoma Classification

Jun 27, 2022

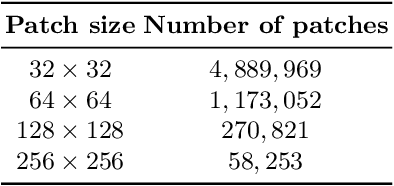

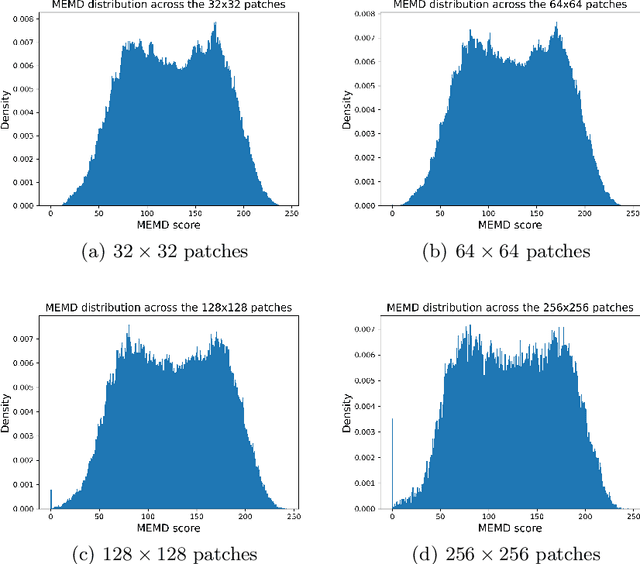

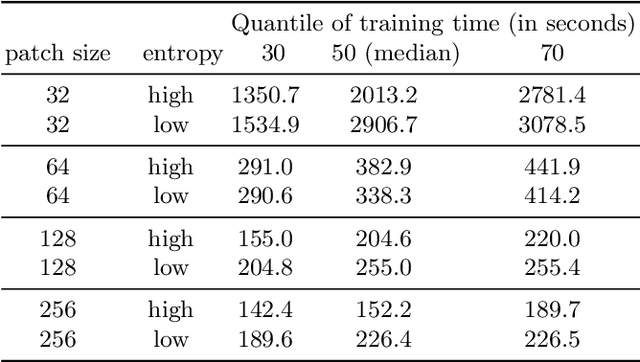

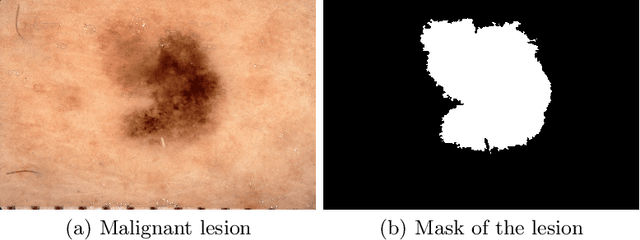

Abstract: In medical image processing, the most important information is often located in small parts of the image. Patch-based approaches aim to use only the most relevant parts of the image. Finding ways to select the patches automatically is a challenge. In this paper, we investigate two criteria for choosing patches: entropy and a spectral similarity criterion. We perform experiments with several patch sizes. We train a Convolutional Neural Network on the subsets of patches and analyze the training time. We find that, in addition to requiring less preprocessing time, classifiers trained on patches selected by entropy converge faster than those trained on patches selected by the spectral similarity criterion and, furthermore, reach higher accuracy. Moreover, patches of high entropy lead to faster convergence and better accuracy than patches of low entropy.
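Entropy-based patch selection can be sketched as below. This is a hedged illustration rather than the paper's pipeline: the function names, the non-overlapping grid, the 256-bin histogram, and the synthetic image are all assumptions. Each patch is scored by the Shannon entropy of its gray-level histogram and the highest-scoring patches are kept.

```python
import numpy as np

def patch_entropy(patch, bins=256):
    """Shannon entropy (bits) of the gray-level histogram of an 8-bit patch."""
    hist, _ = np.histogram(patch, bins=bins, range=(0, 256))
    p = hist[hist > 0] / hist.sum()
    return float(-np.sum(p * np.log2(p)))

def select_patches(image, patch_size, k):
    """Return the k (entropy, (row, col)) pairs with the highest entropy."""
    h, w = image.shape
    scored = []
    for r in range(0, h - patch_size + 1, patch_size):
        for c in range(0, w - patch_size + 1, patch_size):
            patch = image[r:r + patch_size, c:c + patch_size]
            scored.append((patch_entropy(patch), (r, c)))
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]

# Synthetic 16x16 image: flat background with one textured 8x8 corner.
rng = np.random.default_rng(0)
image = np.zeros((16, 16), dtype=np.uint8)
image[:8, :8] = rng.integers(0, 256, size=(8, 8))

top = select_patches(image, patch_size=8, k=1)
print(top)
```

The textured corner at `(0, 0)` is selected because the three flat patches have zero entropy.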

A Practical Tutorial on Explainable AI Techniques

Nov 13, 2021

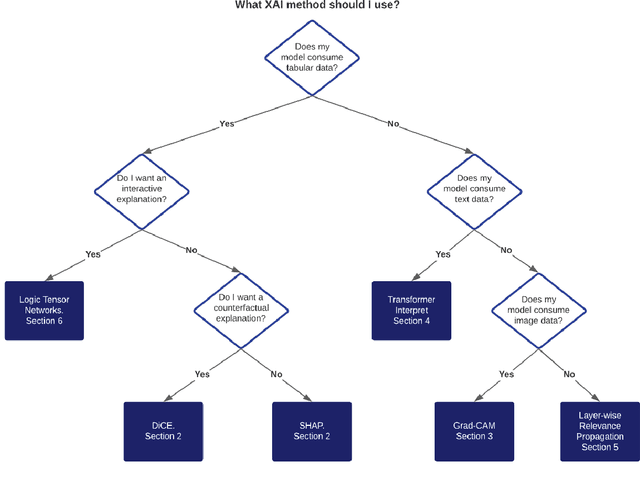

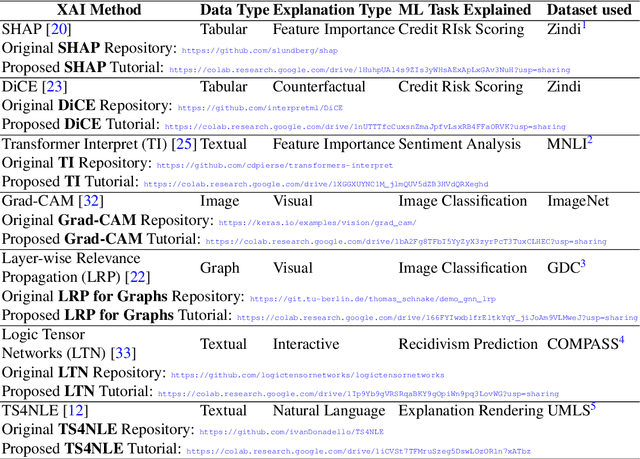

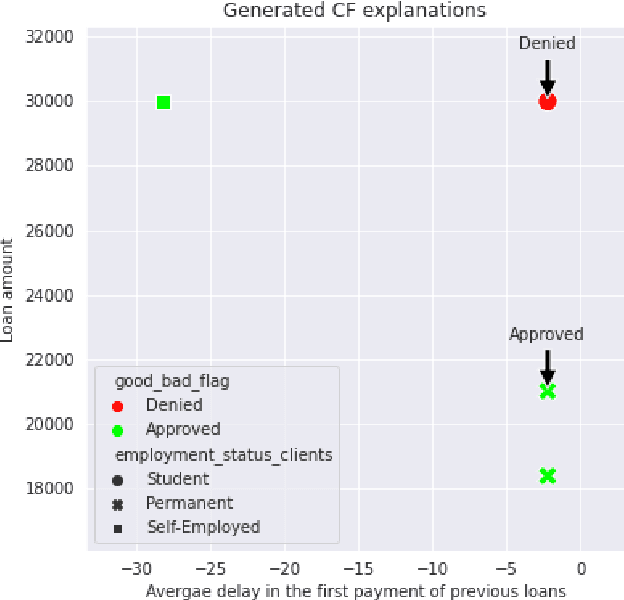

Abstract: Recent years have been characterized by an upsurge of opaque automatic decision support systems, such as Deep Neural Networks (DNNs). Although they have great generalization and prediction skills, their functioning does not allow detailed explanations of their behaviour to be obtained. As opaque machine learning models are increasingly employed to make important predictions in critical environments, the danger is to create and use decisions that are not justifiable or legitimate. Therefore, there is general agreement on the importance of endowing machine learning models with explainability. The reason is that EXplainable Artificial Intelligence (XAI) techniques can serve to verify and certify model outputs and enhance them with desirable notions such as trustworthiness, accountability, transparency and fairness. This tutorial is meant to be the go-to handbook for any audience with a computer science background aiming to gain intuitive insights into machine learning models, accompanied by straightforward, fast, and intuitive explanations out of the box. We believe that these methods will help readers apply XAI techniques to their own day-to-day models, datasets and use cases. A flowchart in the tutorial acts as a map for readers and should help them find the ideal method for their type of data. For each proposed method, readers will find a description, an example of use, and a Python notebook that they can easily modify and apply to their own application.

Cascade Decoders-Based Autoencoders for Image Reconstruction

Jun 29, 2021

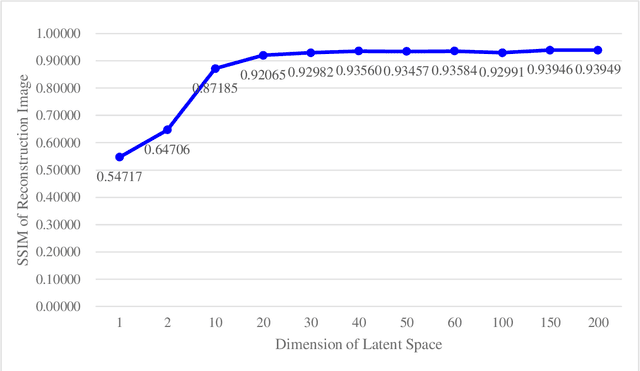

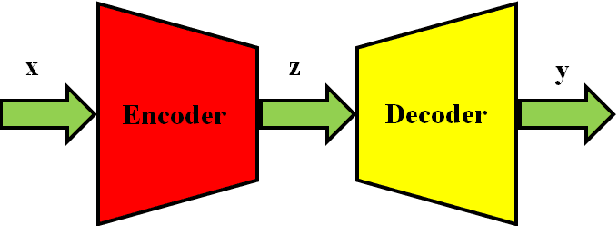

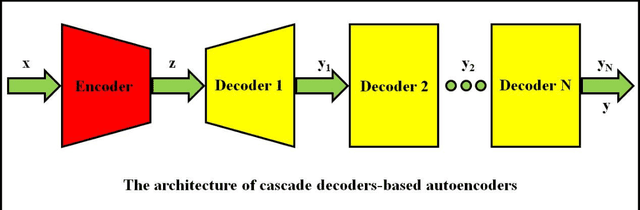

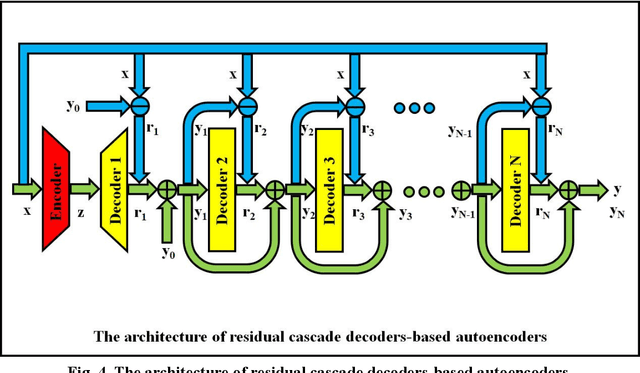

Abstract: Autoencoders are composed of coding and decoding units, hence they hold the inherent potential for high-performance data compression and signal compressed sensing. Current autoencoders have three main shortcomings: the research objective is not data reconstruction but feature representation; the performance evaluation of data recovery is neglected; and it is hard to achieve lossless data reconstruction with pure autoencoders, or even with pure deep learning. This paper targets image reconstruction with autoencoders, employs cascade decoders-based autoencoders, improves the performance of image reconstruction, gradually approaches lossless image recovery, and provides a solid theoretical and practical basis for autoencoder-based image compression and compressed sensing. The proposed serial decoders-based autoencoders include the architectures of multi-level decoders and the related optimization algorithms. The cascade decoders consist of general decoders, residual decoders, adversarial decoders and their combinations. Experimental results show that the proposed autoencoders outperform the classical autoencoders in image reconstruction.
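The residual-decoder idea can be illustrated with a deliberately small sketch. It makes strong assumptions that do not come from the paper: the data are synthetic, the hypothetical encoder keeps a single latent value, and both decoder stages are least-squares fits instead of trained networks. The point is only that a second decoder, fitted to the residual left by the first, lowers the reconstruction error.

```python
import numpy as np

rng = np.random.default_rng(0)
t = rng.uniform(-1.0, 1.0, size=(200, 1))
data = np.hstack([t, t ** 2, np.sin(3.0 * t)])   # synthetic 3-D samples on a curve
code = t                                         # hypothetical encoder output (1-D code)

def fit_decoder(features, targets):
    """Least-squares decoder weights mapping features to targets."""
    weights, *_ = np.linalg.lstsq(features, targets, rcond=None)
    return weights

# Stage 1: general decoder, affine in the code.
f1 = np.hstack([np.ones_like(code), code])
recon1 = f1 @ fit_decoder(f1, data)

# Stage 2: residual decoder with richer features, fitted to what stage 1 missed.
f2 = np.hstack([f1, code ** 2, code ** 3])
recon2 = recon1 + f2 @ fit_decoder(f2, data - recon1)

mse1 = float(np.mean((data - recon1) ** 2))
mse2 = float(np.mean((data - recon2) ** 2))
print(mse1, mse2)
```

The second stage cannot raise the training error, because predicting a zero residual is always available to it. Here it lowers the error because the quadratic coordinate becomes exactly representable.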

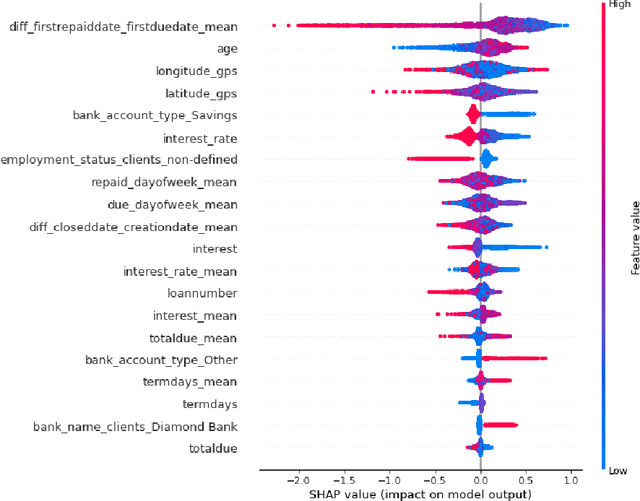

Explaining Credit Risk Scoring through Feature Contribution Alignment with Expert Risk Analysts

Mar 15, 2021

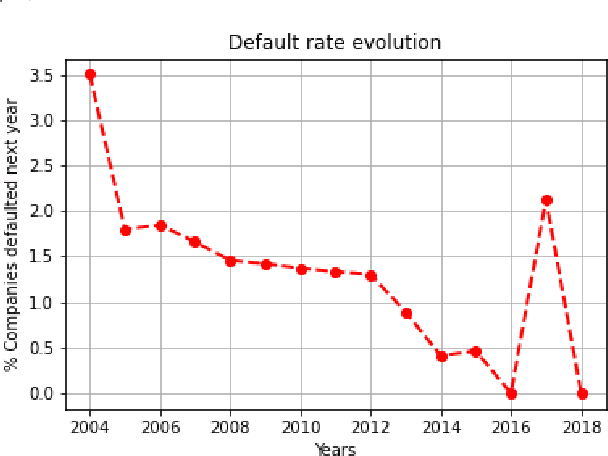

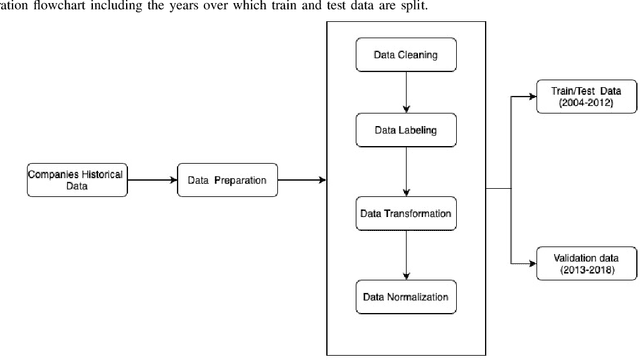

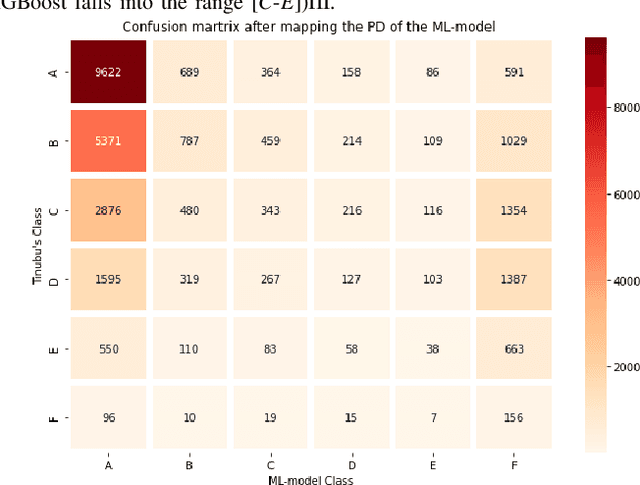

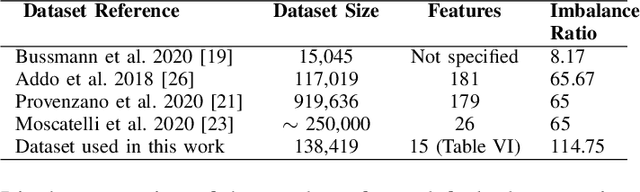

Abstract: Credit assessment activities are essential for financial institutions and allow the global economy to grow. Building robust, solid and accurate models that estimate the probability of default of a company is mandatory for credit insurance companies, especially when it comes to bridging the trade finance gap. Automating the risk assessment process will allow credit risk experts to reduce their workload and focus on the critical and complex cases, and will improve the loan approval process by reducing the time needed to process an application. Recent developments in Artificial Intelligence offer powerful new opportunities. However, most AI techniques are labelled as black-box models due to their lack of explainability. For both users and regulators, being able to understand the model logic is a must in order to deploy such technologies at scale and to ensure accurate and ethical decision making. In this study, we focus on company credit scoring and benchmark different machine learning models. The aim is to build a model that predicts whether a company will experience financial problems within a given time horizon. We address the black-box problem using eXplainable Artificial Intelligence techniques, in particular post-hoc explanations based on SHapley Additive exPlanations. We shed light on the problem by providing an expert-aligned feature relevance score that highlights the disagreement between a credit risk expert and a model feature attribution explanation, in order to better quantify the convergence towards more human-aligned decision making.
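The feature attributions that SHapley Additive exPlanations build on can be illustrated with an exact, brute-force Shapley computation. This sketch does not use the SHAP library and is not the study's model: the linear scoring function, its weights, the all-zero baseline, and the three feature values are hypothetical. For a linear model with a baseline, each Shapley value should equal the weight times the feature's deviation from the baseline, which makes the result easy to check.

```python
from itertools import combinations
from math import factorial

def shapley_values(value_fn, n_features):
    """Exact Shapley values by enumerating every coalition (exponential cost)."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi

# Hypothetical linear risk score over three features.
WEIGHTS = [2.0, -1.0, 0.5]
BASELINE = [0.0, 0.0, 0.0]     # value used for a feature that is "absent"
SAMPLE = [1.0, 3.0, 4.0]       # the company being explained

def value_fn(present):
    """Model output when only the features in `present` take their real value."""
    inputs = [SAMPLE[j] if j in present else BASELINE[j] for j in range(3)]
    return sum(w * v for w, v in zip(WEIGHTS, inputs))

contributions = shapley_values(value_fn, 3)
print(contributions)
```

The contributions sum to the difference between the model output for the sample and for the baseline, which is the additivity property that makes such scores comparable with an expert's ranking of features.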
