Natalia Diaz-Rodriguez

Greybox XAI: a Neural-Symbolic learning framework to produce interpretable predictions for image classification

Sep 26, 2022

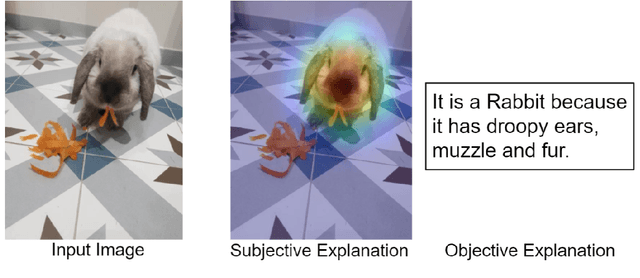

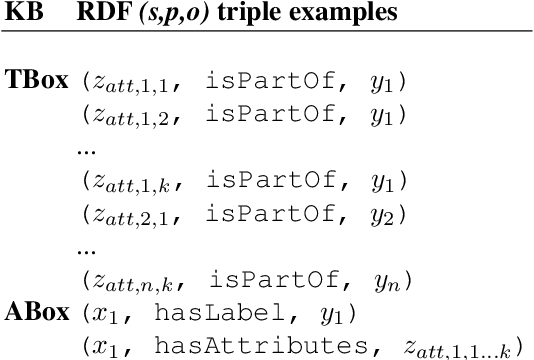

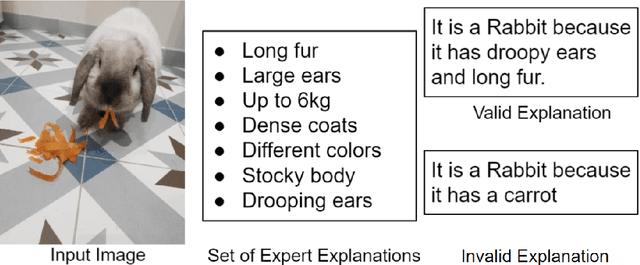

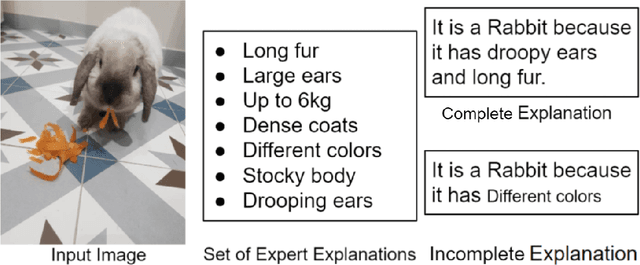

Abstract: Although Deep Neural Networks (DNNs) have great generalization and prediction capabilities, their functioning does not allow a detailed explanation of their behavior. Opaque deep learning models are increasingly used to make important predictions in critical environments, and the danger is that they make and use predictions that cannot be justified or legitimized. Several eXplainable Artificial Intelligence (XAI) methods that separate explanations from machine learning models have emerged, but they fall short in robustness and in faithfulness to the model's actual functioning. As a result, there is widespread agreement on the importance of endowing Deep Learning models with explanatory capabilities so that they can themselves provide an answer to why a particular prediction was made. First, we address the problem of the lack of universal criteria for XAI by formalizing what an explanation is. We also introduce a set of axioms and definitions to clarify XAI from a mathematical perspective. Finally, we present Greybox XAI, a framework that composes a DNN and a transparent model through the use of a symbolic Knowledge Base (KB). We extract a KB from the dataset and use it to train a transparent model (i.e., a logistic regression). An encoder-decoder architecture is trained on RGB images to produce an output similar to the KB used by the transparent model. Once the two models are trained independently, they are used compositionally to form an explainable predictive model. We show that this new architecture is accurate and explainable on several datasets.
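The composition described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the part vocabulary, class names, toy KB rows, and the `detect_parts` stand-in (which thresholds precomputed part scores instead of running a trained encoder-decoder on an RGB image) are all hypothetical. The transparent model is a small softmax logistic regression trained on the KB alone, and the explanation is read from its weights on the detected parts.

```python
import math

# Hypothetical part vocabulary and class names, chosen only for illustration.
PARTS = ["wheel", "wing", "window", "tail"]
CLASSES = ["car", "plane"]

# Toy knowledge base: (part-presence vector, class index) pairs.
KB_ROWS = [
    ([1, 0, 1, 0], 0),
    ([1, 0, 0, 0], 0),
    ([1, 1, 1, 1], 1),
    ([0, 1, 1, 1], 1),
]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [v / total for v in exps]


def class_scores(x, weights, bias):
    """Linear score per class for a part-presence vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def train_logistic_regression(rows, n_classes, n_features, lr=0.5, epochs=500):
    """Train the transparent model on the KB only, with plain SGD."""
    weights = [[0.0] * n_features for _ in range(n_classes)]
    bias = [0.0] * n_classes
    for _ in range(epochs):
        for x, y in rows:
            probs = softmax(class_scores(x, weights, bias))
            for c in range(n_classes):
                grad = probs[c] - (1.0 if c == y else 0.0)
                bias[c] -= lr * grad
                for j in range(n_features):
                    weights[c][j] -= lr * grad * x[j]
    return weights, bias


def detect_parts(part_scores, threshold=0.5):
    """Stand-in for the encoder-decoder: thresholds given part scores.

    A real system would compute these scores from an RGB image.
    """
    return [1 if part_scores.get(p, 0.0) >= threshold else 0 for p in PARTS]


def predict_with_explanation(part_scores, weights, bias):
    """Compose both models: detect parts, classify, and expose contributions."""
    x = detect_parts(part_scores)
    probs = softmax(class_scores(x, weights, bias))
    c = max(range(len(probs)), key=probs.__getitem__)
    contributions = {p: weights[c][j] for j, p in enumerate(PARTS) if x[j]}
    return CLASSES[c], contributions


WEIGHTS, BIAS = train_logistic_regression(KB_ROWS, len(CLASSES), len(PARTS))
label, why = predict_with_explanation(
    {"wing": 0.9, "window": 0.7, "tail": 0.8}, WEIGHTS, BIAS
)
```

Here `label` is the predicted class and `why` maps each detected part to its weight for that class, so the prediction can be traced back to the parts that support it. The two stages are trained independently and only composed at prediction time, mirroring the structure the abstract describes.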

Explaining Credit Risk Scoring through Feature Contribution Alignment with Expert Risk Analysts

Mar 15, 2021

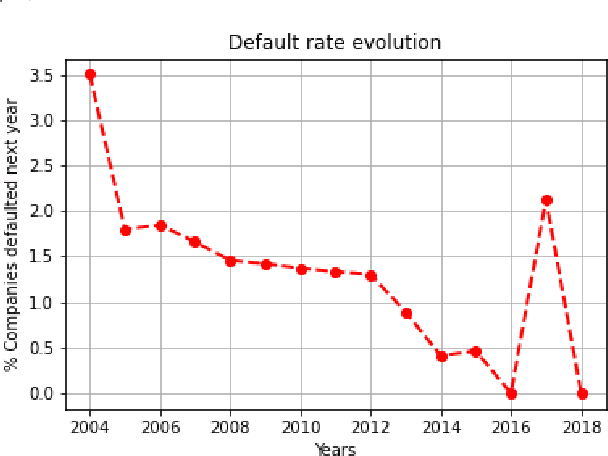

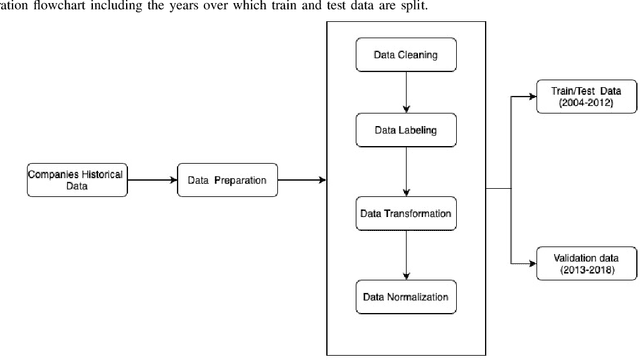

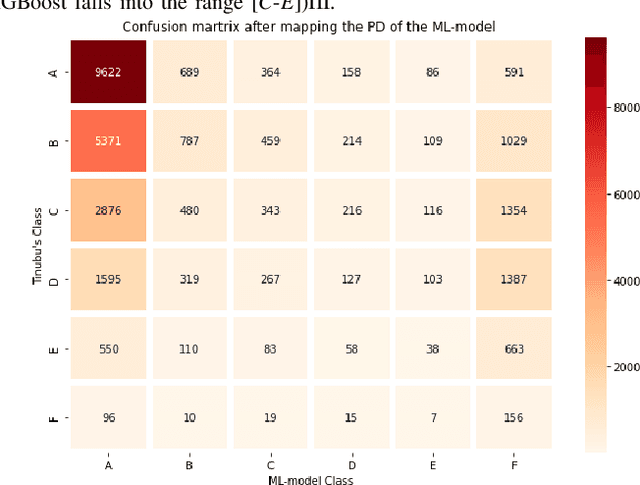

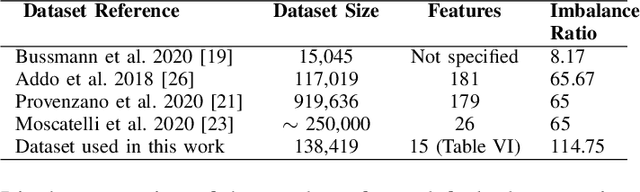

Abstract: Credit assessment activities are essential for financial institutions and allow the global economy to grow. Building robust and accurate models that estimate a company's probability of default is mandatory for credit insurance companies, especially when it comes to bridging the trade finance gap. Automating the risk assessment process will allow credit risk experts to reduce their workload and focus on the critical and complex cases, and will improve the loan approval process by reducing the time needed to process an application. Recent developments in Artificial Intelligence offer powerful new opportunities. However, most AI techniques are labelled as black-box models due to their lack of explainability. For both users and regulators, being able to understand the model logic is a must for deploying such technologies at scale and for ensuring accurate and ethical decision making. In this study, we focus on company credit scoring and benchmark different machine learning models. The aim is to build a model that predicts whether a company will experience financial problems within a given time horizon. We address the black-box problem using eXplainable Artificial Intelligence techniques, in particular post-hoc explanations based on SHapley Additive exPlanations (SHAP). We shed light on the model by providing an expert-aligned feature relevance score that highlights the disagreement between a credit risk expert and the model's feature attribution explanation, in order to better quantify the convergence towards more human-aligned decision making.
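The abstract does not define the expert-aligned score, so the following is only a hypothetical illustration of how disagreement between expert relevance and model attributions could be quantified. It assumes both are given as per-feature numbers (for instance, mean absolute SHAP values on the model side), normalizes each to sum to one, and reports one minus half the L1 distance between them. The function names, feature names, and values are invented for the example.

```python
def normalize(relevance):
    """Scale absolute relevance values so they sum to 1."""
    total = sum(abs(v) for v in relevance.values())
    if total == 0:
        raise ValueError("relevance values must not all be zero")
    return {k: abs(v) / total for k, v in relevance.items()}


def alignment(expert_weights, model_attributions):
    """Return (score, per-feature disagreement).

    score is 1.0 when both relevance profiles coincide and 0.0 when they
    put all their mass on different features. A positive disagreement
    means the model relies on the feature more than the expert does.
    """
    expert = normalize(expert_weights)
    model = normalize(model_attributions)
    features = sorted(set(expert) | set(model))
    disagreement = {
        f: model.get(f, 0.0) - expert.get(f, 0.0) for f in features
    }
    score = 1.0 - 0.5 * sum(abs(d) for d in disagreement.values())
    return score, disagreement


# Hypothetical inputs: expert importance ratings and mean |attribution| values.
score, gaps = alignment(
    {"leverage": 3.0, "revenue": 1.0},
    {"leverage": 0.1, "revenue": 0.3},
)
```

In this toy case the expert weights leverage most heavily while the model leans on revenue, so `gaps` is negative for leverage and positive for revenue, and `score` sits midway between full agreement and full disagreement.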
