Marco Chini

Adaptive federated learning for ship detection across diverse satellite imagery sources

Dec 12, 2025

Abstract: We investigate the application of Federated Learning (FL) for ship detection across diverse satellite datasets, offering a privacy-preserving solution that eliminates the need for data sharing or centralized collection. This approach is particularly advantageous for handling commercial satellite imagery or sensitive ship annotations. Four FL models (FedAvg, FedProx, FedOpt, and FedMedian) are evaluated and compared to a local training baseline, where the YOLOv8 ship detection model is independently trained on each dataset without sharing learned parameters. The results reveal that FL models substantially improve detection accuracy over training on smaller local datasets and achieve performance levels close to global training that uses all datasets. Furthermore, the study underscores the importance of selecting appropriate FL configurations, such as the number of communication rounds and local training epochs, to optimize detection precision while maintaining computational efficiency.
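To make the difference between two of the aggregation rules named in the abstract concrete, here is a minimal sketch of the server-side step in FedAvg (a sample-size-weighted mean of client parameters) and FedMedian (a coordinate-wise median). It is not the paper's implementation: the function names `fed_avg` and `fed_median`, the flat-list representation of model parameters, and the toy numbers are all assumptions made for illustration.

```python
from statistics import median


def fed_avg(client_weights, client_sizes):
    """Weighted mean of client parameter vectors (FedAvg-style aggregation).

    client_weights: list of equal-length lists of floats, one per client.
    client_sizes: number of local training samples per client (hypothetical).
    """
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


def fed_median(client_weights):
    """Coordinate-wise median of client parameter vectors (FedMedian-style)."""
    return [median(column) for column in zip(*client_weights)]


if __name__ == "__main__":
    # Toy example: three clients, two parameters each; the third client is an outlier.
    weights = [[1.0, 10.0], [2.0, 20.0], [100.0, 30.0]]
    sizes = [1, 1, 2]
    print(fed_avg(weights, sizes))
    print(fed_median(weights))
```

In this toy setup the median is less affected by the outlying third client than the weighted mean, which is one reason a robust rule might be considered when client datasets differ strongly.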

Urban Flood Mapping Using Satellite Synthetic Aperture Radar Data: A Review of Characteristics, Approaches and Datasets

Nov 06, 2024

Abstract: Understanding the extent of urban flooding is crucial for assessing building damage, casualties and economic losses. Synthetic Aperture Radar (SAR) technology offers significant advantages for mapping flooded urban areas due to its ability to collect data regardless of weather and solar illumination conditions. However, the wide range of existing methods makes it difficult to choose the best approach for a specific situation and to identify future research directions. Therefore, this study provides a comprehensive review of current research on urban flood mapping using SAR data, summarizing key characteristics of floodwater in SAR images and outlining various approaches from scientific articles. Additionally, we provide a brief overview of the advantages and disadvantages of each method category, along with guidance on selecting the most suitable approach for different scenarios. This study focuses on the challenges and advancements in SAR-based urban flood mapping. It specifically addresses the limitations of spatial and temporal resolution in SAR data and discusses the essential pre-processing steps. Moreover, the article explores the potential benefits of Polarimetric SAR (PolSAR) techniques and uncertainty analysis for future research. Furthermore, it highlights a lack of open-access SAR datasets for urban flood mapping, which hinders the development of advanced deep learning-based methods. We also evaluate the Technology Readiness Levels (TRLs) of urban flood mapping techniques to identify challenges and future research areas. Finally, the study explores the practical applications of SAR-based urban flood mapping in both the private and public sectors and provides a comprehensive overview of the benefits and potential impact of these methods.

Optical Image-to-Image Translation Using Denoising Diffusion Models: Heterogeneous Change Detection as a Use Case

Apr 17, 2024

Abstract: We introduce an innovative deep learning-based method that uses a denoising diffusion-based model to translate low-resolution images to high-resolution ones from different optical sensors while preserving the contents and avoiding undesired artifacts. The proposed method is trained and tested on a large and diverse data set of paired Sentinel-II and Planet Dove images. We show that it can solve serious image generation issues observed when the popular classifier-free guided Denoising Diffusion Implicit Model (DDIM) framework is used in the task of Image-to-Image Translation of multi-sensor optical remote sensing images and that it can generate large images with highly consistent patches, both in colors and in features. Moreover, we demonstrate how our method improves heterogeneous change detection results in two urban areas: Beirut, Lebanon, and Austin, USA. Our contributions are: i) a new training and testing algorithm based on denoising diffusion models for optical image translation; ii) a comprehensive image quality evaluation and ablation study; iii) a comparison with the classifier-free guided DDIM framework; and iv) change detection experiments on heterogeneous data.

Early Flood Warning Using Satellite-Derived Convective System and Precipitation Data -- A Retrospective Case Study of Central Vietnam

Mar 21, 2024

Abstract: This paper addresses the challenges of early warning for floods caused by complex convective systems (CSs), using Low-Earth Orbit and Geostationary satellite data. We focus on a sequence of extreme events that took place in central Vietnam during October 2020, with a specific emphasis on the events leading up to the floods, i.e., those occurring before October 10th, 2020. In this critical phase, several hydrometeorological indicators could be identified thanks to an increasingly advanced and dense observation network composed of Earth Observation satellites, in particular those enabling the characterization and monitoring of a CS in terms of low-temperature clouds and heavy rainfall. Himawari-8 images, both individually and in time series, allow convective clouds to be identified and tracked. This is complemented by the observation of heavy/violent rainfall through GPM IMERG data, as well as the detection of strong winds using radiometers and scatterometers. Collectively, these datasets, along with the estimated intensity and duration of the event from each source, form a comprehensive dataset detailing the intricate behaviors of CSs. All of these factors are significant contributors to the magnitude of flooding and the short-term dynamics anticipated in the studied region.

Insight Into the Collocation of Multi-Source Satellite Imagery for Multi-Scale Vessel Detection

Mar 20, 2024

Abstract: Ship detection from satellite imagery using Deep Learning (DL) is an indispensable solution for maritime surveillance. However, applying DL models trained on one dataset to others with different spatial resolution and radiometric features requires many adjustments. To overcome this issue, this paper focused on DL models trained on datasets that consist of different optical images and a combination of radar and optical data. When dealing with a limited number of training images, the performance of DL models via this approach was satisfactory, improving average precision by 5-20%, depending on the optical images tested. Likewise, DL models trained on the combined optical and radar dataset could be applied to both optical and radar images. Our experiments showed that the models trained on an optical dataset could be used for radar images, while those trained on a radar dataset offered very poor scores when applied to optical images.

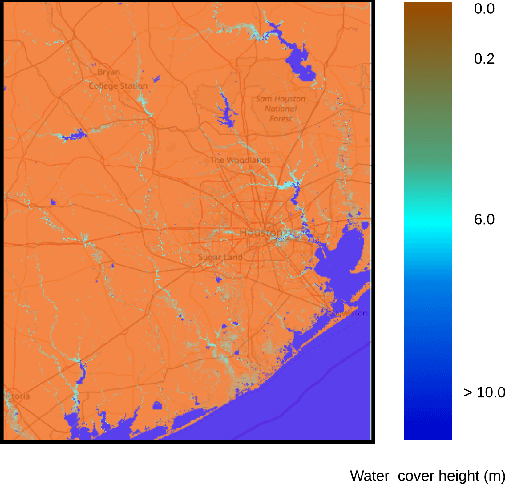

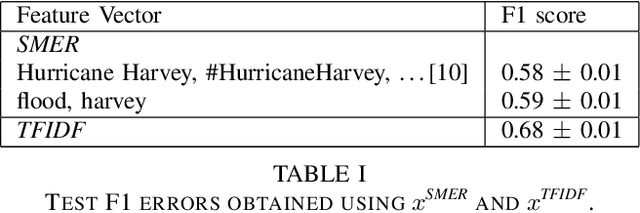

Computing flood probabilities using Twitter: application to the Houston urban area during Harvey

Dec 07, 2020

Abstract: In this paper, we investigate the conversion of a Twitter corpus into geo-referenced raster cells holding the probability of the associated geographical areas of being flooded. We describe a baseline approach that combines a density ratio function, aggregation using a spatio-temporal Gaussian kernel function, and TF-IDF textual features. The features are transformed to probabilities using a logistic regression model. The described method is evaluated on a corpus collected after the floods that followed Hurricane Harvey in the Houston urban area in August-September 2017. The baseline reaches an F1 score of 68%. We highlight research directions likely to improve these initial results.
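As a rough illustration of two ingredients mentioned in the abstract, the sketch below aggregates geo-located tweets around a raster cell with a spatio-temporal Gaussian kernel and maps the resulting score to a probability with a logistic function. It is a simplified assumption-laden sketch, not the paper's pipeline: the density ratio and TF-IDF features are omitted, and the names `st_kernel`, `cell_score`, and `flood_probability`, the bandwidths `sigma_s` and `sigma_t`, and the `weight` and `bias` coefficients are hypothetical placeholders rather than fitted values.

```python
import math


def st_kernel(dx, dy, dt, sigma_s, sigma_t):
    """Spatio-temporal Gaussian kernel weight for an offset (dx, dy) in space and dt in time."""
    spatial = (dx * dx + dy * dy) / (2.0 * sigma_s ** 2)
    temporal = (dt * dt) / (2.0 * sigma_t ** 2)
    return math.exp(-spatial - temporal)


def cell_score(cell, tweets, sigma_s, sigma_t):
    """Sum of kernel weights of all tweets relative to one raster cell.

    cell and each tweet are (x, y, t) tuples in consistent, assumed units.
    """
    cx, cy, ct = cell
    return sum(
        st_kernel(x - cx, y - cy, t - ct, sigma_s, sigma_t) for x, y, t in tweets
    )


def flood_probability(score, weight, bias):
    """Logistic transform of a single aggregated feature into a probability."""
    return 1.0 / (1.0 + math.exp(-(weight * score + bias)))


if __name__ == "__main__":
    # Hypothetical tweets: two near the cell, one far away in space and time.
    tweets = [(0.1, 0.0, 0.0), (0.0, 0.2, 1.0), (5.0, 5.0, 10.0)]
    score = cell_score((0.0, 0.0, 0.0), tweets, sigma_s=1.0, sigma_t=2.0)
    print(flood_probability(score, weight=1.5, bias=-1.0))
```

In a fuller version, the logistic coefficients would be learned from labeled flooded and non-flooded cells, and the kernel score would be one feature among several.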
