Marcelo Prates

Neural-Symbolic Relational Reasoning on Graph Models: Effective Link Inference and Computation from Knowledge Bases

May 05, 2020

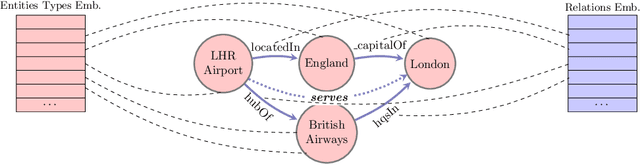

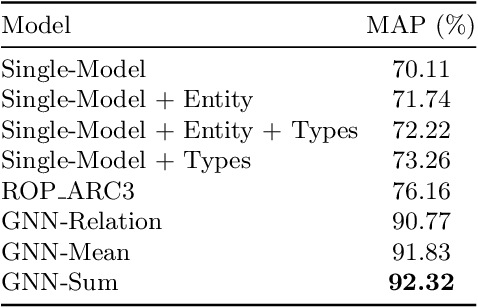

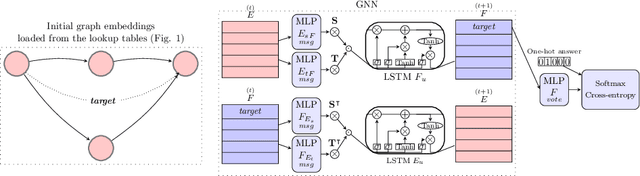

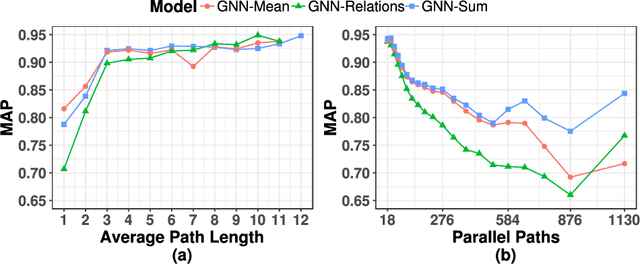

Abstract: The recent developments and growing interest in neural-symbolic models have shown that hybrid approaches can offer richer models for Artificial Intelligence. The integration of effective relational learning and reasoning methods is one of the key challenges in this direction, as neural learning and symbolic reasoning offer complementary characteristics that can benefit the development of AI systems. Relational labelling or link prediction on knowledge graphs has become one of the main problems in deep learning-based natural language processing research. Moreover, other fields which make use of neural-symbolic techniques may also benefit from such research endeavours. There have been several efforts towards the identification of missing facts from existing ones in knowledge graphs. Two lines of research try to predict knowledge relations between two entities by considering either all known facts connecting them or several paths of facts connecting them. We propose a neural-symbolic graph neural network which applies learning over all the paths by feeding the model with the embedding of the minimal subset of the knowledge graph containing such paths. By learning to produce representations for entities and facts corresponding to word embeddings, we show how the model can be trained end-to-end to decode these representations and infer relations between entities in a multitask approach. Our contribution is two-fold: a neural-symbolic methodology that advances the resolution of relational inference in large graphs, and a demonstration that such a neural-symbolic model is more effective than path-based approaches.

Graph Neural Networks Meet Neural-Symbolic Computing: A Survey and Perspective

Mar 11, 2020

Abstract: Neural-symbolic computing has now become the subject of interest of both academic and industry research laboratories. Graph Neural Networks (GNN) have been widely used in relational and symbolic domains, with widespread application of GNNs in combinatorial optimization, constraint satisfaction, relational reasoning and other scientific domains. The need for improved explainability, interpretability and trust of AI systems in general demands principled methodologies, as suggested by neural-symbolic computing. In this paper, we review the state of the art on the use of GNNs as a model of neural-symbolic computing. This includes the application of GNNs in several domains as well as their relationship to current developments in neural-symbolic computing.

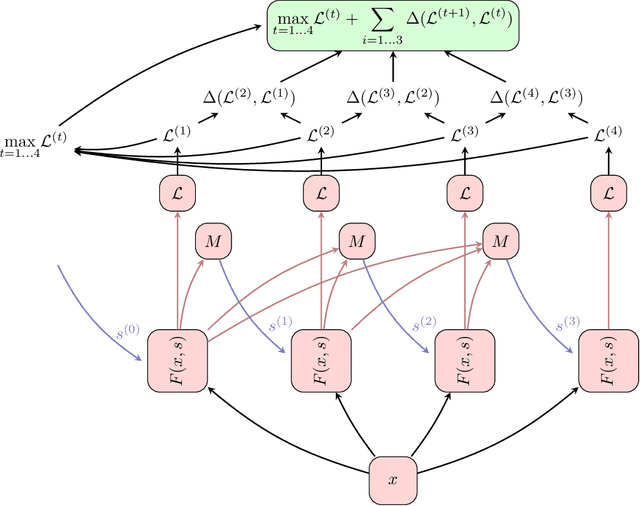

Think Again Networks and the Delta Loss

Apr 30, 2019

Abstract: This short paper introduces an abstraction called Think Again Networks (ThinkNet) which can be applied to any state-dependent function (such as a recurrent neural network).

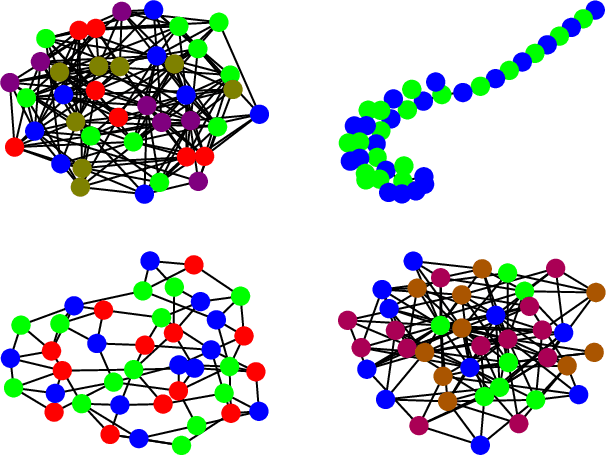

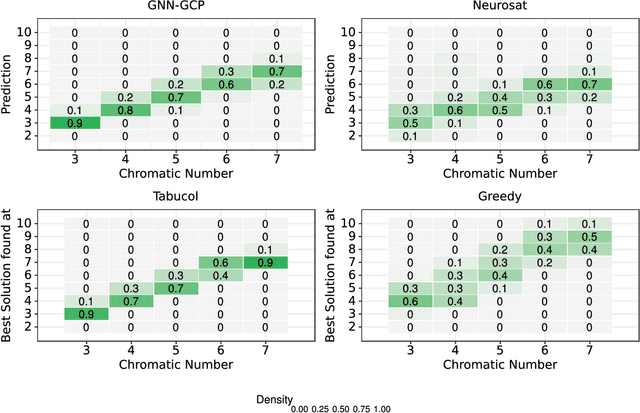

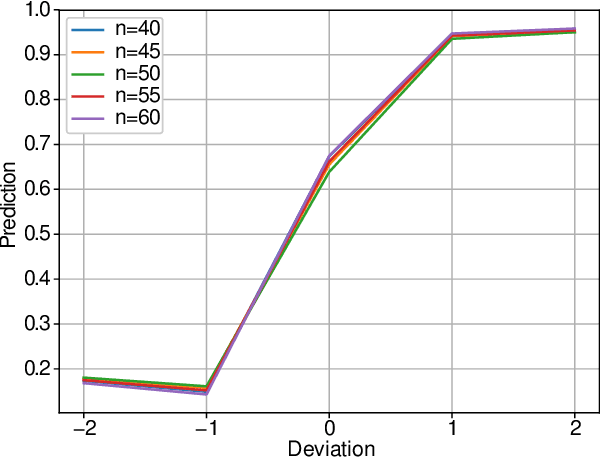

Graph Colouring Meets Deep Learning: Effective Graph Neural Network Models for Combinatorial Problems

Mar 11, 2019

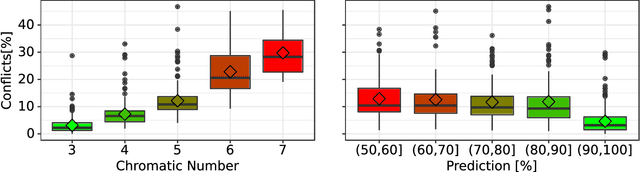

Abstract: Deep learning has consistently defied state-of-the-art techniques in many fields over the last decade. However, we are just beginning to understand the capabilities of neural learning in symbolic domains. Deep learning architectures that employ parameter sharing over graphs can produce models which can be trained on complex properties of relational data. These include highly relevant NP-Complete problems, such as SAT and TSP. In this work, we showcase how Graph Neural Networks (GNN) can be engineered -- with a very simple architecture -- to solve the fundamental combinatorial problem of graph colouring. Our results show that the model, which achieves high accuracy upon training on random instances, is able to generalise to graph distributions different from those seen at training time. Further, it performs better than the Tabucol and greedy baselines for some distributions. In addition, we show how vertex embeddings can be clustered in multidimensional spaces to yield constructive solutions even though our model is only trained as a binary classifier. In summary, our results help to narrow the gap in our understanding of the algorithms learned by GNNs, and add empirical evidence for their capability on hard combinatorial problems. Our results thus contribute to the standing challenge of integrating robust learning and symbolic reasoning in Deep Learning systems.
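
For readers unfamiliar with the greedy baseline named in the abstract above, the following is a minimal sketch of a generic greedy colouring heuristic. It is an illustration only, not the paper's implementation: the function names `greedy_colouring` and `is_proper`, the adjacency-list input format, and the highest-degree-first vertex ordering are all assumptions made for this example.

```python
def greedy_colouring(adjacency):
    """Colour vertices one by one, giving each the smallest colour
    not already used by one of its coloured neighbours.

    `adjacency` maps each vertex to a list of its neighbours
    (hypothetical input format chosen for this sketch).
    """
    colours = {}
    # Assumed ordering: highest degree first (ties keep insertion order).
    order = sorted(adjacency, key=lambda v: len(adjacency[v]), reverse=True)
    for vertex in order:
        used = {colours[n] for n in adjacency[vertex] if n in colours}
        colour = 0
        while colour in used:
            colour += 1
        colours[vertex] = colour
    return colours


def is_proper(adjacency, colours):
    """Check that no edge joins two vertices of the same colour."""
    return all(colours[u] != colours[v]
               for u in adjacency for v in adjacency[u])


# A cycle on five vertices: an odd cycle needs three colours.
cycle5 = {i: [(i - 1) % 5, (i + 1) % 5] for i in range(5)}
colouring = greedy_colouring(cycle5)
num_colours = max(colouring.values()) + 1
```

The heuristic always returns a proper colouring but gives no guarantee of using the minimum number of colours, which is why it serves as a baseline rather than an exact solver.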

Neural Networks Models for Analyzing Magic: the Gathering Cards

Oct 08, 2018

Abstract: Historically, games of all kinds have often been the subject of study in scientific works of Computer Science, including the field of machine learning. By using machine learning techniques and applying them to a game with defined rules or a structured dataset, it's possible to learn and improve on the already existing techniques and methods to tackle new challenges and solve problems that are out of the ordinary. The already existing work on card games tends to focus on gameplay and card mechanics. This work aims to apply neural network models, including Convolutional Neural Networks and Recurrent Neural Networks, in order to analyze Magic: the Gathering cards, both in terms of card text and illustrations; the card images and texts are used to train the networks to classify the cards into multiple categories. The ultimate goal was to develop a methodology that could generate card text matching an input image, which was attained by relating the prediction values of the images and generated text across the different categories.