Neural-Symbolic Relational Reasoning on Graph Models: Effective Link Inference and Computation from Knowledge Bases

Paper and Code

May 05, 2020

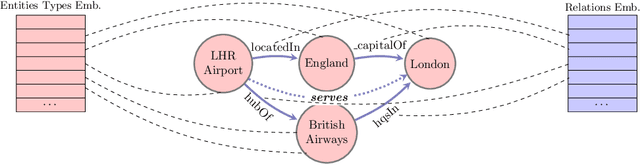

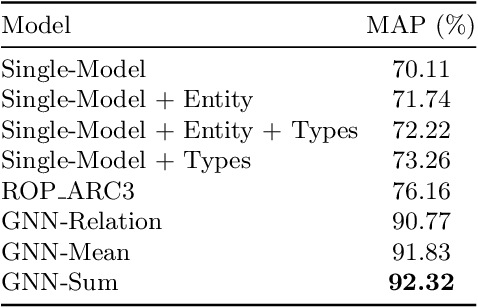

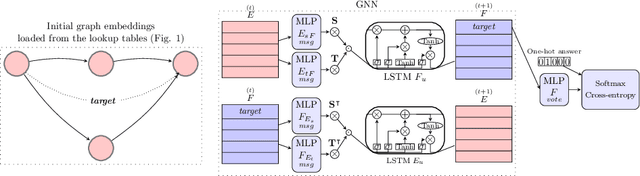

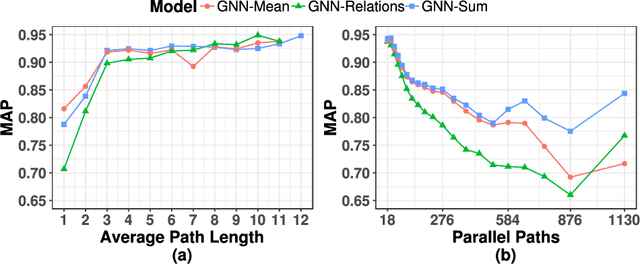

The recent developments and growing interest in neural-symbolic models have shown that hybrid approaches can offer richer models for Artificial Intelligence. The integration of effective relational learning and reasoning methods is one of the key challenges in this direction, as neural learning and symbolic reasoning offer complementary characteristics that can benefit the development of AI systems. Relational labelling, or link prediction, on knowledge graphs has become one of the main problems in deep learning-based natural language processing research. Moreover, other fields which make use of neural-symbolic techniques may also benefit from such research endeavours. There have been several efforts towards the identification of missing facts from existing ones in knowledge graphs. Two lines of research try to predict the relation between two entities by considering either all known facts connecting them or several paths of facts connecting them. We propose a neural-symbolic graph neural network which applies learning over all the paths by feeding the model with the embedding of the minimal subset of the knowledge graph containing such paths. By learning to produce representations for entities and facts corresponding to word embeddings, we show how the model can be trained end-to-end to decode these representations and infer relations between entities in a multitask approach. Our contribution is two-fold: a neural-symbolic methodology that leverages the resolution of relational inference in large graphs, and a demonstration that such a neural-symbolic model is more effective than path-based approaches.
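The abstract does not say how the "minimal subset of the knowledge graph containing such paths" is built, so the following is only a rough sketch of one plausible reading, not the authors' procedure. It assumes facts are `(head, relation, tail)` triples, treats edges as undirected for connectivity, and bounds path length with a hypothetical `max_len` parameter. It keeps a fact when it lies on some walk of at most `max_len` edges between the two query entities, which is a superset of the facts on simple paths of that length. All function and variable names are illustrative.

```python
from collections import defaultdict, deque


def bfs_distances(adjacency, start):
    """Return the hop distance from `start` to every reachable node."""
    distances = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in adjacency[node]:
            if neighbour not in distances:
                distances[neighbour] = distances[node] + 1
                queue.append(neighbour)
    return distances


def path_subgraph(triples, source, target, max_len):
    """Keep the triples lying on some source-target walk of <= max_len edges.

    Assumption: edges are treated as undirected when measuring distance.
    """
    adjacency = defaultdict(set)
    for head, _, tail in triples:
        adjacency[head].add(tail)
        adjacency[tail].add(head)

    from_source = bfs_distances(adjacency, source)
    from_target = bfs_distances(adjacency, target)
    inf = float("inf")

    kept = []
    for head, relation, tail in triples:
        # Shortest walk that crosses this edge in either direction.
        forward = from_source.get(head, inf) + 1 + from_target.get(tail, inf)
        backward = from_source.get(tail, inf) + 1 + from_target.get(head, inf)
        if min(forward, backward) <= max_len:
            kept.append((head, relation, tail))
    return kept


# Toy knowledge graph (made-up facts, for illustration only).
triples = [
    ("alice", "born_in", "paris"),
    ("paris", "capital_of", "france"),
    ("alice", "works_at", "acme"),
    ("acme", "based_in", "berlin"),
    ("berlin", "capital_of", "germany"),
]
subgraph = path_subgraph(triples, "alice", "france", max_len=2)
```

On this toy graph, `subgraph` contains only the `born_in` and `capital_of` facts linking `alice` to `france` through `paris`; the facts about `acme`, `berlin` and `germany` are dropped because no walk of length 2 between the query entities uses them. In a link-prediction setting, a subgraph like this would then be embedded and passed to the graph neural network.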
