Mao-Chuang Yeh

Improving Style Transfer with Calibrated Metrics

Oct 21, 2019

Abstract: Style transfer methods produce a transferred image which is a rendering of a content image in the manner of a style image. We seek to understand how to improve style transfer. Doing so requires quantitative evaluation procedures, but current evaluation is qualitative, mostly involving user studies. We describe a novel quantitative evaluation procedure. Our procedure relies on two statistics: the Effectiveness (E) statistic measures the extent to which a given style has been transferred to the target, and the Coherence (C) statistic measures the extent to which the original image's content is preserved. Our statistics are calibrated to human preference: targets with larger values of E (resp. C) will reliably be preferred by human subjects in comparisons of style (resp. content). We use these statistics to investigate the relative performance of a number of Neural Style Transfer (NST) methods, revealing several intriguing properties. Admissible methods lie on a Pareto frontier (i.e. improving E reduces C or vice versa). Three methods are admissible: Universal style transfer produces very good C but weak E; modifying the optimization used for Gatys' loss produces a method with strong E and strong C; and a modified cross-layer method has slightly better E at strong cost in C. While the histogram loss improves the E statistics of Gatys' method, it does not make the method admissible. Surprisingly, style weights have relatively little effect in improving EC scores, and most variability in the transfer is explained by the style itself (meaning experimenters can be misguided by selecting styles).
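The admissibility criterion in this abstract can be illustrated with a minimal sketch. It assumes each method is summarized by a single (E, C) pair and that larger is better on both axes; the function name `admissible`, the method names, and the scores are hypothetical placeholders, not values from the paper.

```python
def admissible(scores):
    """Return the names of methods that no other method dominates.

    scores maps a method name to an (E, C) pair, larger being better.
    A method is dominated if another method is at least as good on both
    statistics and differs on at least one of them.
    """
    def dominates(p, q):
        return p[0] >= q[0] and p[1] >= q[1] and p != q

    return sorted(
        name
        for name, s in scores.items()
        if not any(dominates(t, s) for t in scores.values())
    )


# Illustrative scores only (made up for this sketch).
example_scores = {
    "method_a": (0.2, 0.9),  # weak E, very good C
    "method_b": (0.7, 0.7),  # strong E and strong C
    "method_c": (0.8, 0.3),  # slightly better E, much worse C
    "method_d": (0.5, 0.5),  # beaten by method_b on both axes
}
frontier = admissible(example_scores)
```

With these placeholder numbers, `method_d` is excluded because `method_b` is better on both statistics, while the remaining three methods trade E against C and so all lie on the frontier.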

Quantitative Evaluation of Style Transfer

Mar 31, 2018

Abstract: Style transfer methods produce a transferred image which is a rendering of a content image in the manner of a style image. There is a rich literature of variant methods. However, evaluation procedures are qualitative, mostly involving user studies. We describe a novel quantitative evaluation procedure. One plots effectiveness (a measure of the extent to which the style was transferred) against coherence (a measure of the extent to which the transferred image decomposes into objects in the same way that the content image does) to obtain an EC plot. We construct EC plots comparing a number of recent style transfer methods. Most methods control within-layer gram matrices, but we also investigate a method that controls cross-layer gram matrices. These EC plots reveal a number of intriguing properties of recent style transfer methods. The style used has a strong effect on the outcome, for all methods. Using large style weights does not necessarily improve effectiveness, and can produce worse results. Cross-layer gram matrices easily beat all other methods, but some styles remain difficult for all methods. Ensemble methods show real promise. It is likely that, for current methods, each style requires a different choice of weights to obtain the best results, so that automated weight setting methods are desirable. Finally, we show evidence comparing our EC evaluations to human evaluations.

Improved Style Transfer by Respecting Inter-layer Correlations

Jan 05, 2018

Abstract: A popular series of style transfer methods apply a style to a content image by controlling mean and covariance of values in early layers of a feature stack. This is insufficient for transferring styles that have strong structure across spatial scales, e.g., textures where dots lie on long curves. This paper demonstrates that controlling inter-layer correlations yields visible improvements in style transfer methods. We achieve this control by computing cross-layer, rather than within-layer, gram matrices. We find that (a) cross-layer gram matrices are sufficient to control within-layer statistics, and inter-layer correlations improve style transfer and texture synthesis, as the paper shows with numerous examples on "hard" real style transfer problems (e.g. long scale and hierarchical patterns); (b) a fast approximate style transfer method can control cross-layer gram matrices; (c) multiplicative, rather than additive, style and content loss results in very good style transfer. Multiplicative loss produces a visible emphasis on boundaries, and means that one hyper-parameter can be eliminated.
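The difference between within-layer and cross-layer gram matrices can be sketched as follows. This is one plausible reading, not the paper's implementation: it assumes feature maps are arrays of shape (channels, height, width), that the coarser layer is brought to the finer layer's resolution by nearest-neighbour upsampling, and that entries are averaged over spatial positions. The function names are hypothetical.

```python
import numpy as np


def within_layer_gram(feat):
    """Gram matrix of one layer: (C, H, W) -> (C, C)."""
    c, h, w = feat.shape
    flat = feat.reshape(c, h * w)
    return flat @ flat.T / (h * w)


def cross_layer_gram(feat_fine, feat_coarse):
    """Gram matrix between two layers: (Ca, H, W), (Cb, Hb, Wb) -> (Ca, Cb).

    Assumes H and W are integer multiples of Hb and Wb, and upsamples the
    coarse map by nearest-neighbour repetition (an assumption of this sketch).
    """
    ca, h, w = feat_fine.shape
    cb, hb, wb = feat_coarse.shape
    if h % hb or w % wb:
        raise ValueError("fine size must be an integer multiple of coarse size")
    up = feat_coarse.repeat(h // hb, axis=1).repeat(w // wb, axis=2)
    return feat_fine.reshape(ca, h * w) @ up.reshape(cb, h * w).T / (h * w)


rng = np.random.default_rng(0)
fine = rng.standard_normal((3, 4, 4))    # stand-in for an early layer
coarse = rng.standard_normal((5, 2, 2))  # stand-in for a deeper layer
g_cross = cross_layer_gram(fine, coarse)
```

When both arguments are the same feature map, `cross_layer_gram` reduces to `within_layer_gram`, so in this sketch the within-layer statistic is the special case of pairing a layer with itself.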

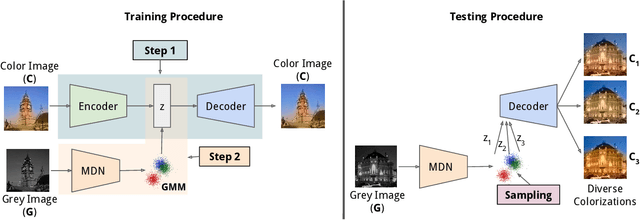

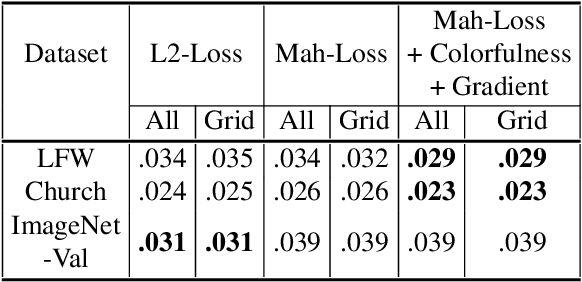

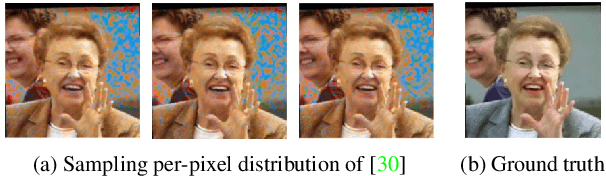

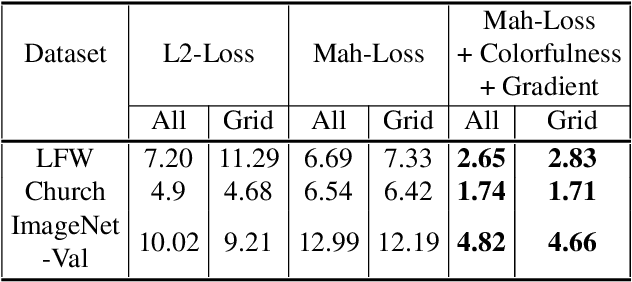

Learning Diverse Image Colorization

Apr 27, 2017

Abstract: Colorization is an ambiguous problem, with multiple viable colorizations for a single grey-level image. However, previous methods only produce the single most probable colorization. Our goal is to model the diversity intrinsic to the problem of colorization and produce multiple colorizations that display long-scale spatial co-ordination. We learn a low dimensional embedding of color fields using a variational autoencoder (VAE). We construct loss terms for the VAE decoder that avoid blurry outputs and take into account the uneven distribution of pixel colors. Finally, we build a conditional model for the multi-modal distribution between grey-level image and the color field embeddings. Samples from this conditional model result in diverse colorization. We demonstrate that our method obtains better diverse colorizations than a standard conditional variational autoencoder (CVAE) model, as well as a recently proposed conditional generative adversarial network (cGAN).
