Maciej Wielgosz

Compression and Inference of Spiking Neural Networks on Resource-Constrained Hardware

Nov 15, 2025

Abstract:Spiking neural networks (SNNs) communicate via discrete spikes in time rather than continuous activations. Their event-driven nature offers advantages for temporal processing and energy efficiency on resource-constrained hardware, but training and deployment remain challenging. We present a lightweight C-based runtime for SNN inference on edge devices and optimizations that reduce latency and memory without sacrificing accuracy. Trained models exported from SNNTorch are translated to a compact C representation; static, cache-friendly data layouts and preallocation avoid interpreter and allocation overheads. We further exploit sparse spiking activity to prune inactive neurons and synapses, shrinking computation in upstream convolutional layers. Experiments on N-MNIST and ST-MNIST show functional parity with the Python baseline while achieving ~10× speedups on desktop CPU and additional gains with pruning, together with large memory reductions that enable microcontroller deployment (Arduino Portenta H7). Results indicate that SNNs can be executed efficiently on conventional embedded platforms when paired with an optimized runtime and spike-driven model compression. Code: https://github.com/karol-jurzec/snn-generator/
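The activity-based pruning idea mentioned in the abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the function name `prune_silent_neurons`, the `min_spikes` threshold, and the list-of-lists weight layout are all assumptions made for illustration. The sketch drops hidden neurons that stayed silent on a calibration set, together with their incoming and outgoing synapses.

```python
def prune_silent_neurons(spike_counts, w_in, w_out, min_spikes=1):
    """Drop hidden neurons whose calibration spike count is below min_spikes.

    Hypothetical helper (not from the paper's code base).
    spike_counts: spikes recorded per hidden neuron over a calibration set.
    w_in:  one row of incoming weights per hidden neuron.
    w_out: one row per output neuron, one column per hidden neuron.
    """
    # Indices of neurons that fired often enough to keep.
    keep = [i for i, count in enumerate(spike_counts) if count >= min_spikes]
    # Remove the incoming synapses (rows) of pruned neurons.
    pruned_in = [w_in[i] for i in keep]
    # Remove the outgoing synapses (columns) of pruned neurons.
    pruned_out = [[row[i] for i in keep] for row in w_out]
    return keep, pruned_in, pruned_out


keep, w_in_small, w_out_small = prune_silent_neurons(
    spike_counts=[5, 0, 3],
    w_in=[[1, 2], [3, 4], [5, 6]],
    w_out=[[0.1, 0.2, 0.3]],
)
```

A neuron that never spikes contributes nothing to downstream layers, so removing it leaves the outputs unchanged on the calibration inputs; behavior on unseen inputs is not guaranteed by this sketch.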

DVS-PedX: Synthetic-and-Real Event-Based Pedestrian Dataset

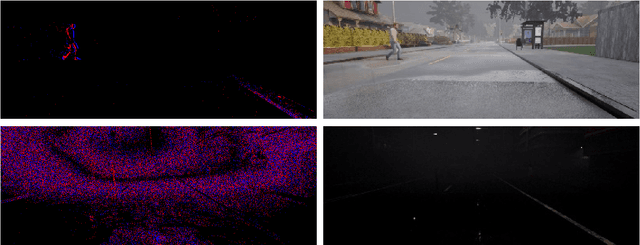

Sep 04, 2025

Abstract:Event cameras like Dynamic Vision Sensors (DVS) report micro-timed brightness changes instead of full frames, offering low latency, high dynamic range, and motion robustness. DVS-PedX (Dynamic Vision Sensor Pedestrian eXploration) is a neuromorphic dataset designed for pedestrian detection and crossing-intention analysis in normal and adverse weather conditions across two complementary sources: (1) synthetic event streams generated in the CARLA simulator for controlled "approach-cross" scenes under varied weather and lighting; and (2) real-world JAAD dash-cam videos converted to event streams using the v2e tool, preserving natural behaviors and backgrounds. Each sequence includes paired RGB frames, per-frame DVS "event frames" (33 ms accumulations), and frame-level labels (crossing vs. not crossing). We also provide raw AEDAT 2.0/AEDAT 4.0 event files and AVI DVS video files and metadata for flexible re-processing. Baseline spiking neural networks (SNNs) using SpikingJelly illustrate dataset usability and reveal a sim-to-real gap, motivating domain adaptation and multimodal fusion. DVS-PedX aims to accelerate research in event-based pedestrian safety, intention prediction, and neuromorphic perception.
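As a rough illustration of what a 33 ms "event frame" accumulation can look like, the sketch below bins a list of events into fixed-duration frames. The event tuple layout `(t_us, x, y, polarity)`, the signed-count encoding, and the name `accumulate_event_frames` are assumptions for illustration and may differ from how the dataset's frames were actually produced.

```python
from collections import defaultdict


def accumulate_event_frames(events, width, height, window_us=33_000):
    """Bin (t_us, x, y, polarity) events into fixed-duration frames.

    Hypothetical helper: each frame is a height x width grid of signed
    counts (+1 per ON event, -1 per OFF event) within one time window.
    """
    if not events:
        return []
    # Missing windows are created on demand as all-zero frames.
    frames = defaultdict(lambda: [[0] * width for _ in range(height)])
    t0 = min(e[0] for e in events)  # start of the first window
    for t_us, x, y, polarity in events:
        idx = (t_us - t0) // window_us  # index of the window this event falls in
        frames[idx][y][x] += 1 if polarity else -1
    last = max(frames)
    return [frames[i] for i in range(last + 1)]


events = [(0, 0, 0, 1), (10, 1, 0, 0), (33_000, 1, 1, 1), (70_000, 0, 1, 1)]
frames = accumulate_event_frames(events, width=2, height=2)
```

With timestamps in microseconds, the default window of 33,000 µs corresponds to the 33 ms accumulation mentioned above, roughly one frame of 30 fps video.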

Spatiotemporal Analysis of Forest Machine Operations Using 3D Video Classification

May 30, 2025

Abstract:This paper presents a deep learning-based framework for classifying forestry operations from dashcam video footage. Focusing on four key work elements - crane-out, cutting-and-to-processing, driving, and processing - the approach employs a 3D ResNet-50 architecture implemented with PyTorchVideo. Trained on a manually annotated dataset of field recordings, the model achieves strong performance, with a validation F1 score of 0.88 and precision of 0.90. These results underscore the effectiveness of spatiotemporal convolutional networks for capturing both motion patterns and appearance in real-world forestry environments. The system integrates standard preprocessing and augmentation techniques to improve generalization, but overfitting is evident, highlighting the need for more training data and better class balance. Despite these challenges, the method demonstrates clear potential for reducing the manual workload associated with traditional time studies, offering a scalable solution for operational monitoring and efficiency analysis in forestry. This work contributes to the growing application of AI in natural resource management and sets the foundation for future systems capable of real-time activity recognition in forest machinery. Planned improvements include dataset expansion, enhanced regularization, and deployment trials on embedded systems for in-field use.

Three-Factor Learning in Spiking Neural Networks: An Overview of Methods and Trends from a Machine Learning Perspective

Apr 06, 2025

Abstract:Three-factor learning rules in Spiking Neural Networks (SNNs) have emerged as a crucial extension to traditional Hebbian learning and Spike-Timing-Dependent Plasticity (STDP), incorporating neuromodulatory signals to improve adaptation and learning efficiency. These mechanisms enhance biological plausibility and facilitate improved credit assignment in artificial neural systems. This paper approaches the topic from a machine learning perspective, providing an overview of recent advances in three-factor learning and discussing theoretical foundations, algorithmic implementations, and their relevance to reinforcement learning and neuromorphic computing. In addition, we explore interdisciplinary approaches, scalability challenges, and potential applications in robotics, cognitive modeling, and AI systems. Finally, we highlight key research gaps and propose future directions for bridging the gap between neuroscience and artificial intelligence.

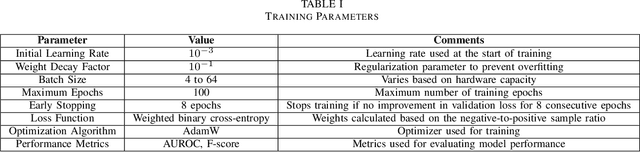

Pedestrian intention prediction in Adverse Weather Conditions with Spiking Neural Networks and Dynamic Vision Sensors

Jun 01, 2024

Abstract:This study examines the effectiveness of Spiking Neural Networks (SNNs) paired with Dynamic Vision Sensors (DVS) to improve pedestrian detection in adverse weather, a significant challenge for autonomous vehicles. Utilizing the high temporal resolution and low latency of DVS, which excels in dynamic, low-light, and high-contrast environments, we assess the efficiency of SNNs compared to traditional Convolutional Neural Networks (CNNs). Our experiments involved testing across diverse weather scenarios using a custom dataset from the CARLA simulator, mirroring real-world variability. SNN models, enhanced with Temporally Effective Batch Normalization, were trained and benchmarked against state-of-the-art CNNs to demonstrate superior accuracy and computational efficiency in complex conditions such as rain and fog. The results indicate that SNNs, integrated with DVS, significantly reduce computational overhead and improve detection accuracy in challenging conditions compared to CNNs. This highlights the potential of DVS combined with bio-inspired SNN processing to enhance autonomous vehicle perception and decision-making systems, advancing intelligent transportation systems' safety features in varying operational environments. Additionally, our research indicates that SNNs perform more efficiently in handling long perception windows and prediction tasks, rather than simple pedestrian detection.

SegmentAnyTree: A sensor and platform agnostic deep learning model for tree segmentation using laser scanning data

Jan 28, 2024

Abstract:This research advances individual tree crown (ITC) segmentation in lidar data, using a deep learning model applicable to various laser scanning types: airborne (ULS), terrestrial (TLS), and mobile (MLS). It addresses the challenge of transferability across different data characteristics in 3D forest scene analysis. The study evaluates the model's performance based on platform (ULS, MLS) and data density, testing five scenarios with varying input data, including sparse versions, to gauge adaptability and canopy layer efficacy. The model, based on PointGroup architecture, is a 3D CNN with separate heads for semantic and instance segmentation, validated on diverse point cloud datasets. Results show point cloud sparsification enhances performance, aiding sparse data handling and improving detection in dense forests. The model performs well with >50 points per sq. m densities but less so at 10 points per sq. m due to higher omission rates. It outperforms existing methods (e.g., Point2Tree, TLS2trees) in detection, omission, commission rates, and F1 score, setting new benchmarks on LAUTx, Wytham Woods, and TreeLearn datasets. In conclusion, this study shows the feasibility of a sensor-agnostic model for diverse lidar data, surpassing sensor-specific approaches and setting new standards in tree segmentation, particularly in complex forests. This contributes to future ecological modeling and forest management advancements.

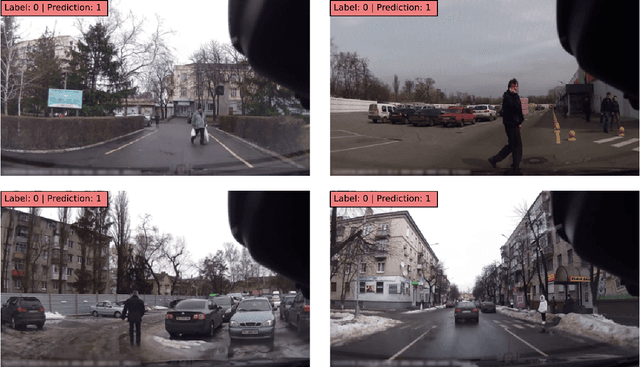

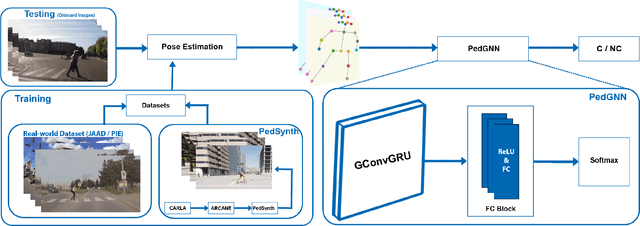

Synthetic Data Generation Framework, Dataset, and Efficient Deep Model for Pedestrian Intention Prediction

Jan 12, 2024

Abstract:Pedestrian intention prediction is crucial for autonomous driving. In particular, knowing if pedestrians are going to cross in front of the ego-vehicle is core to performing safe and comfortable maneuvers. Creating accurate and fast models that predict such intentions from sequential images is challenging. A factor contributing to this is the lack of datasets with diverse crossing and non-crossing (C/NC) scenarios. We address this scarcity by introducing a framework, named ARCANE, which allows programmatically generating synthetic datasets consisting of C/NC video clip samples. As an example, we use ARCANE to generate a large and diverse dataset named PedSynth. We show how PedSynth complements widely used real-world datasets such as JAAD and PIE, thereby enabling more accurate models for C/NC prediction. Considering the onboard deployment of C/NC prediction models, we also propose a deep model named PedGNN, which is fast and has a very low memory footprint. PedGNN is based on a GNN-GRU architecture that takes a sequence of pedestrian skeletons as input to predict crossing intentions.

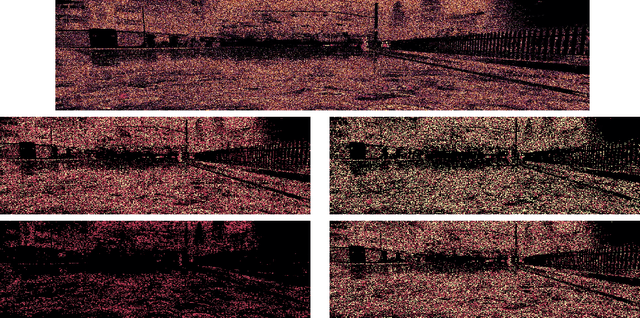

Automated forest inventory: analysis of high-density airborne LiDAR point clouds with 3D deep learning

Dec 22, 2023

Abstract:Detailed forest inventories are critical for sustainable and flexible management of forest resources, to conserve various ecosystem services. Modern airborne laser scanners deliver high-density point clouds with great potential for fine-scale forest inventory and analysis, but automatically partitioning those point clouds into meaningful entities like individual trees or tree components remains a challenge. The present study aims to fill this gap and introduces a deep learning framework that is able to perform such a segmentation across diverse forest types and geographic regions. From the segmented data, we then derive relevant biophysical parameters of individual trees as well as stands. The system has been tested on FOR-Instance, a dataset of point clouds that have been acquired in five different countries using surveying drones. The segmentation back-end achieves over 85% F-score for individual trees and over 73% mean IoU across five semantic categories: ground, low vegetation, stems, live branches and dead branches. Building on the segmentation results, our pipeline then densely calculates biophysical features of each individual tree (height, crown diameter, crown volume, DBH, and location) and properties per stand (digital terrain model and stand density). Crown-related features in particular are in most cases retrieved with high accuracy, whereas the estimates for DBH and location are less reliable, due to the airborne scanning setup.
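For readers unfamiliar with the mean IoU metric quoted above, the following is a minimal sketch of how it is commonly computed from per-point labels. The function name `mean_iou` and the choice to skip classes absent from both prediction and ground truth are assumptions; the paper's exact evaluation protocol may differ.

```python
def mean_iou(y_true, y_pred, num_classes):
    """Mean intersection-over-union across semantic classes.

    Hypothetical helper: classes that appear in neither y_true nor y_pred
    are skipped rather than counted as 0 or 1.
    """
    ious = []
    for c in range(num_classes):
        # Points labeled c in both ground truth and prediction.
        inter = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        # Points labeled c in either ground truth or prediction.
        union = sum(1 for t, p in zip(y_true, y_pred) if t == c or p == c)
        if union:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


score = mean_iou(y_true=[0, 0, 1, 1], y_pred=[0, 1, 1, 1], num_classes=2)
```

In this toy case class 0 has IoU 1/2 and class 1 has IoU 2/3, so the mean is their average.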

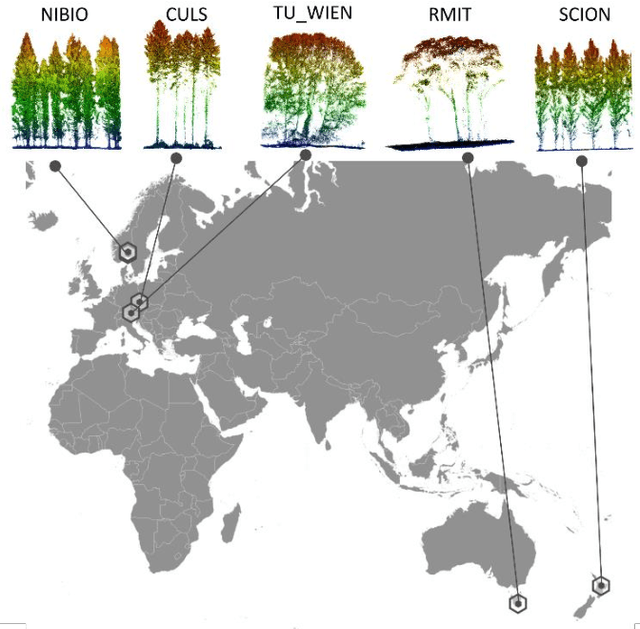

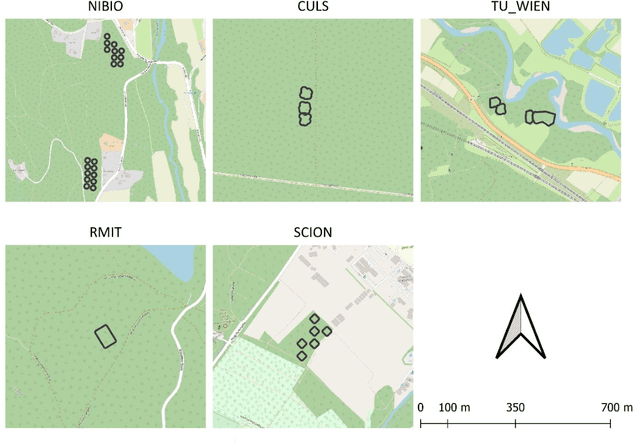

FOR-instance: a UAV laser scanning benchmark dataset for semantic and instance segmentation of individual trees

Sep 03, 2023

Abstract:The FOR-instance dataset (available at https://doi.org/10.5281/zenodo.8287792) addresses the challenge of accurate individual tree segmentation from laser scanning data, crucial for understanding forest ecosystems and sustainable management. Despite the growing need for detailed tree data, automating segmentation and tracking scientific progress remains difficult. Existing methodologies often overfit small datasets and lack comparability, limiting their applicability. Amid the progress triggered by the emergence of deep learning methodologies, standardized benchmarking assumes paramount importance in these research domains. This data paper introduces a benchmarking dataset for dense airborne laser scanning data, aimed at advancing instance and semantic segmentation techniques and promoting progress in 3D forest scene segmentation. The FOR-instance dataset comprises five curated and ML-ready UAV-based laser scanning data collections from diverse global locations, representing various forest types. The laser scanning data were manually annotated into individual trees (instances) and different semantic classes (e.g. stem, woody branches, live branches, terrain, low vegetation). The dataset is divided into development and test subsets, enabling method advancement and evaluation, with specific guidelines for utilization. It supports instance and semantic segmentation, offering adaptability to deep learning frameworks and diverse segmentation strategies, while the inclusion of diameter at breast height data expands its utility to the measurement of a classic tree variable. In conclusion, the FOR-instance dataset contributes to filling a gap in the 3D forest research, enhancing the development and benchmarking of segmentation algorithms for dense airborne laser scanning data.

Computer-Aided Cytology Diagnosis in Animals: CNN-Based Image Quality Assessment for Accurate Disease Classification

Aug 11, 2023

Abstract:This paper presents a computer-aided cytology diagnosis system designed for animals, focusing on image quality assessment (IQA) using Convolutional Neural Networks (CNNs). The system's building blocks are tailored to seamlessly integrate IQA, ensuring reliable performance in disease classification. We extensively investigate the CNN's ability to handle various image variations and scenarios, analyzing the impact on detecting low-quality input data. Additionally, the network's capacity to differentiate valid cellular samples from those with artifacts is evaluated. Our study employs a ResNet18 network architecture and explores the effects of input sizes and cropping strategies on model performance. The research sheds light on the significance of CNN-based IQA in computer-aided cytology diagnosis for animals, enhancing the accuracy of disease classification.
