M. Tuluhan Akbulut

Reward Conditioned Neural Movement Primitives for Population Based Variational Policy Optimization

Nov 09, 2020

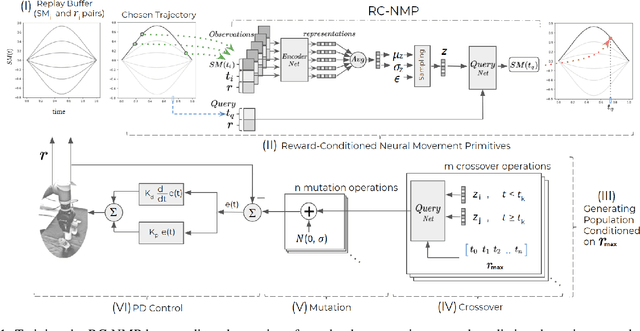

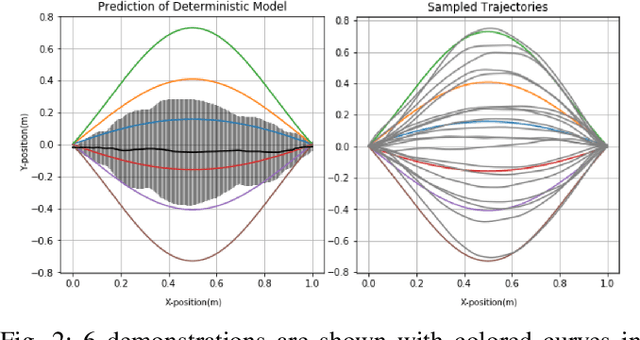

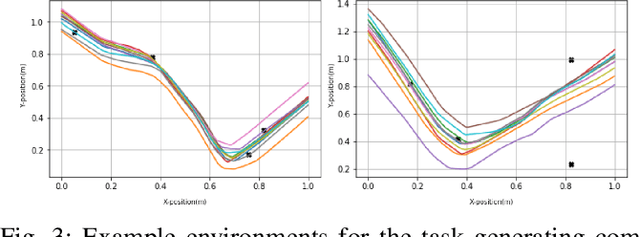

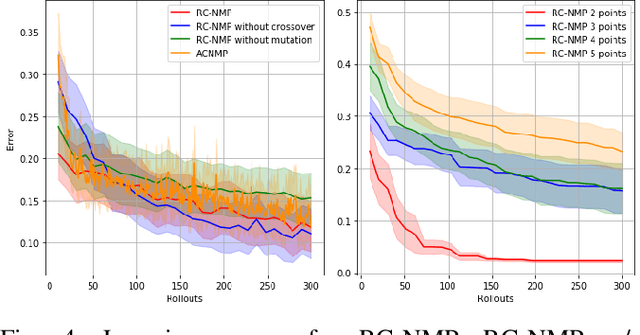

Abstract: The aim of this paper is to study the reward-based policy exploration problem in a supervised learning approach and enable robots to form complex movement trajectories in challenging reward settings and search spaces. For this, the experience of the robot, which can be bootstrapped from demonstrated trajectories, is used to train a novel Neural Processes-based deep network that samples from its latent space and generates the required trajectories given desired rewards. Our framework can generate progressively improved trajectories by sampling them from high-reward landscapes, increasing the reward gradually. Variational inference is used to create a stochastic latent space from which varying trajectories are sampled, generating a population of trajectories given target rewards. We benefit from Evolutionary Strategies and propose a novel crossover operation, which is applied in the self-organized latent space of the individual policies, allowing blending of individuals that might address different factors in the reward function. Using a number of tasks that require sequential reaching to multiple points or passing through gaps between objects, we show that our method provides stable learning progress and significant sample efficiency compared to a number of state-of-the-art robotic reinforcement learning methods. Finally, we show the real-world suitability of our method through real robot execution involving obstacle avoidance.

Adaptive Conditional Neural Movement Primitives via Representation Sharing Between Supervised and Reinforcement Learning

Mar 25, 2020

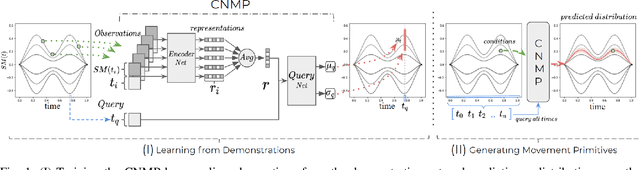

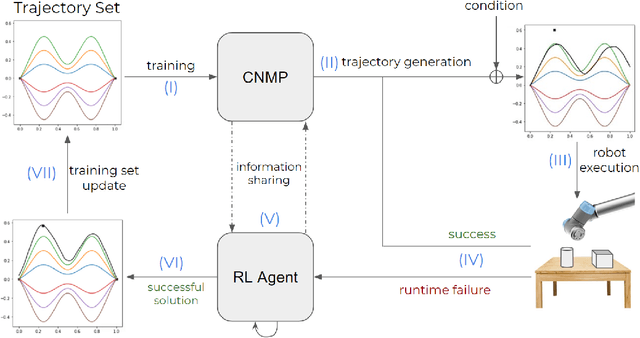

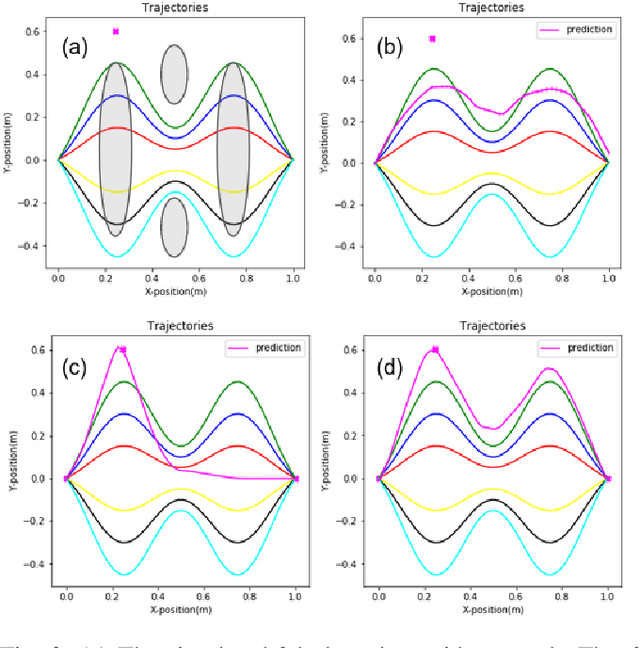

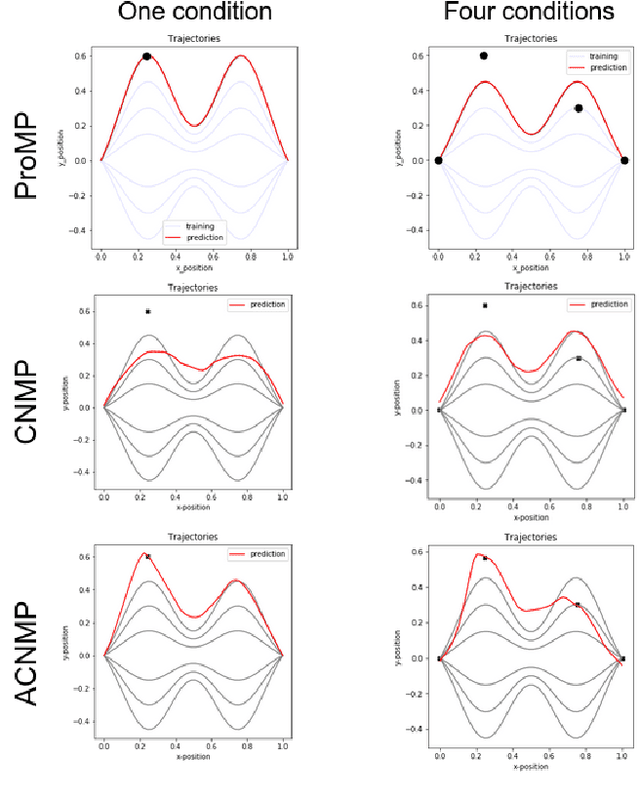

Abstract: Learning by Demonstration provides a sample-efficient way to equip robots with complex sensorimotor skills in a supervised manner. Several movement primitive representations can be used for flexible motor representation and learning. A recent state-of-the-art approach is Conditional Neural Movement Primitives (CNMP), which can learn non-linear relations between environment parameters and complex multi-modal trajectories from a few expert demonstrations by forming powerful latent space representations. In this study, to improve the applicability of CNMP to changing tasks and/or environments, we couple it with a reinforcement learning agent that exploits the representations formed by the original CNMP network and learns to generate synthetic demonstrations for further learning. This enables the CNMP network to generalize to new environments by adapting its internal representations. In the current implementation, the reinforcement learning agent is triggered when a failure in task execution is detected, and the CNMP is trained with the newly discovered demonstration (trajectory), which shares essential characteristics with the original demonstrations due to the representation sharing. As a result, the overall system increases its capacity and handles situations in which the initial CNMP network cannot produce a useful trajectory. To show the validity of our proposed model, we compare our approach with the original CNMP work and other movement primitive approaches. Furthermore, we present experimental results from the implementation of the proposed model on real robotic setups, which indicate the applicability of our approach as an effective adaptive learning by demonstration system.
